Labbook IMAV

Started Labbook 2023.

November 21, 2022

September 15, 2022

- Would be interesting to look into comparison of Visual SLAM algorithms, not only for flying drones but also for the Nanosaur, Duckiebot or RAE.

September 14, 2022

- Installed the first of the Optitrack cameras in the new Robolab. The camera can see the whole penalty area and from the goal to the middle circle:

- When the sun is shining, the Optitrack system has a lot of problems. Curtains will be essential:

August 18, 2022

- This issue seems to have the same problem.

- According to this tutorial, YOLOv4 has to be first converted from keras to TensorFlow 2.

- So, the step that I missed was python keras-YOLOv3-model-set/tools/model_converter/convert.py /yolov4-tiny.cfg /yolov4-tiny.weights .

- In my case (from ~/git/depthai/public/yolo-v4-tiny-tf) the command is python3.9 keras-YOLOv3-model-set/tools/model_converter/convert.py keras-YOLOv3-model-set/cfg/yolov4-tiny.cfg yolov4-tiny.weights keras-YOLOv4-tiny.h5.

- Yet, this creates an h5 file, while the next step expects an output directory.

- Instead, cloned the keras-YOLOv3-model-set from david8862 and ran python3.9 tools/model_converter/convert.py cfg/yolov4-tiny.cfg weights/yolov4-tiny.weights output/. Note that the yolov4-tiny.weights are a copy from depthai/public. This command creates output/saved_model.pb.

- The result is:

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: ~/git/keras-YOLOv3-model-set/models/IRs/yolov4-tiny.xml

[ SUCCESS ] BIN file: ~/git/keras-YOLOv3-model-set/models/IRs/yolov4-tiny.bin

[ SUCCESS ] Total execution time: 64.31 seconds.

[ SUCCESS ] Memory consumed: 731 MB.

- I continued with the next step and installed blobconverter with python3.9 -m pip install -U blobconverter. Running python3.9 -m blobconverter --openvino-xml ~/git/keras-YOLOv3-model-set/models/IRs/yolov4-tiny.xml --openvino-bin ~/git/keras-YOLOv3-model-set/models/IRs/yolov4-tiny.bin resulted in:

Downloading /home/arnoud/.cache/blobconverter/yolov4-tiny_openvino_2021.4_4shave.blob...

[==================================================]

Done

- Moved that blob from the cache to the public/yolo-v4-tiny-tf directory (instead of the suggested resources/nn).

- The resources/nn directory is needed because its contents are the options in the AI drop-down menu of the depthai-demo. Created a yolov4-tiny directory with the json file and the weights. The json file is not working, because I get the following error:

stopping demo...

=== TOTAL FPS ===

[color]: 30.1

[depthRaw]: 30.1

[depth]: 30.1

[left]: 30.1

[rectifiedLeft]: 29.9

[right]: 30.1

[rectifiedRight]: 30.1

[nn]: 30.1

[nnInput]: 30.1

Setting up demo...

Available devices:

[0] 14442C10710891D000 [X_LINK_UNBOOTED]

USB Connection speed: UsbSpeed.SUPER

[14442C10710891D000] [3.4.4] [4.577] [SpatialDetectionNetwork(14)] [warning] Network compiled for 4 shaves, maximum available 12, compiling for 6 shaves likely will yield in better performance

[14442C10710891D000] [3.4.4] [4.580] [StereoDepth(9)] [error] RGB camera calibration missing, aligning to RGB won't work

[14442C10710891D000] [3.4.4] [5.022] [SpatialDetectionNetwork(14)] [warning] Input image (416x416) does not match NN (608x608)

- Changed in the json file the input size from 416x416 to 608x608.

- The input seems OK, now it goes wrong with the output:

[14442C10710891D000] [3.4.4] [4.233] [SpatialDetectionNetwork(14)] [error] Mask is not defined for output layer with width '19'. Define at pipeline build time using: 'setAnchorMasks' for 'side19'.

[14442C10710891D000] [3.4.4] [4.233] [SpatialDetectionNetwork(14)] [error] Mask is not defined for output layer with width '38'. Define at pipeline build time using: 'setAnchorMasks' for 'side38'.

- Followed the suggestions in this post. The xml already had the correct input_shape, although I had to change the values of side26 and side13.

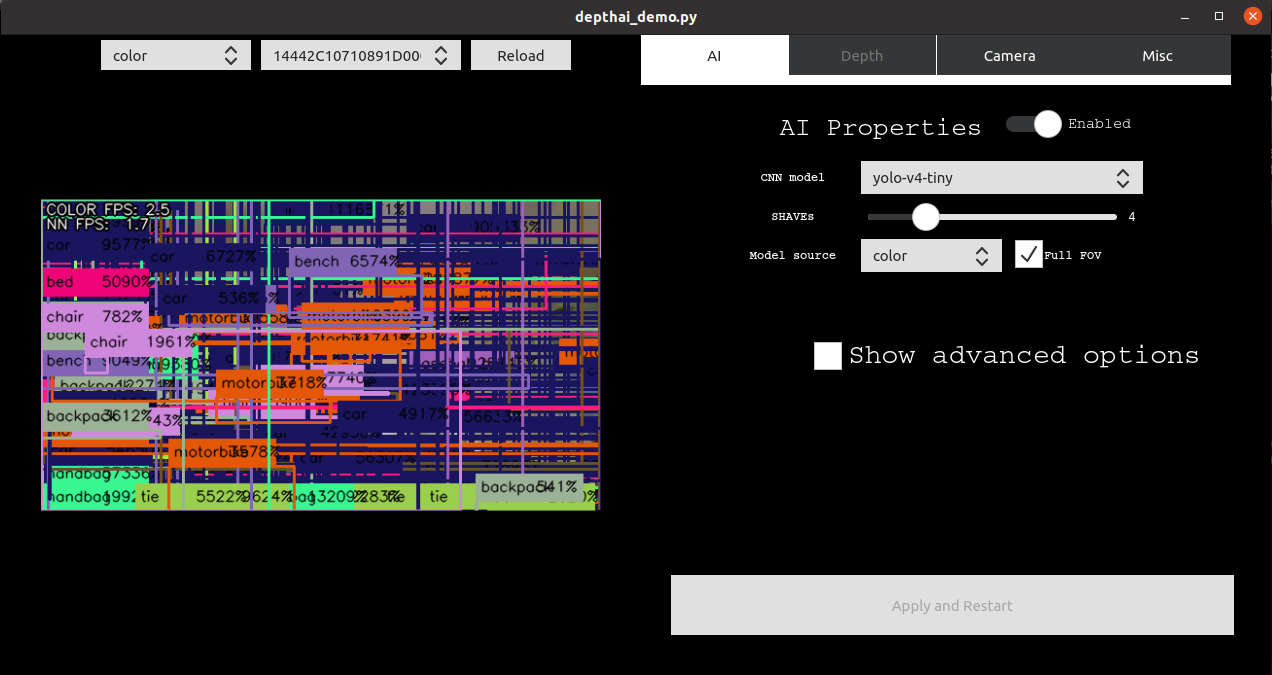

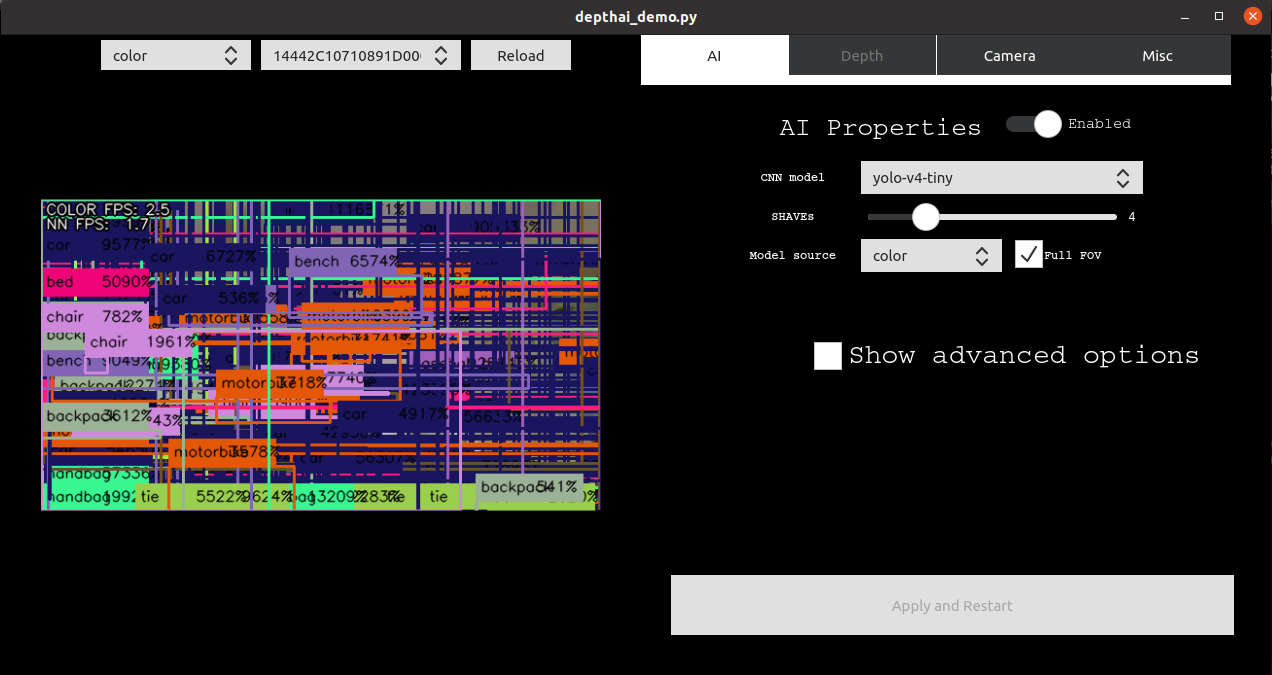
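The two mask errors above follow from the grid arithmetic of tiny YOLO: each output layer has a width equal to the input size divided by its stride. Below is a minimal sketch, assuming the two strides 16 and 32 that reproduce the widths in the logs. The function names are illustrative, and the mask values are an assumption (the ones commonly listed for tiny YOLO models, not verified against this blob):

```python
def yolo_side_names(input_size, strides=(16, 32)):
    """Return the 'sideN' layer names expected for a square input."""
    names = []
    for stride in strides:
        if input_size % stride != 0:
            raise ValueError(f"{input_size} is not a multiple of stride {stride}")
        names.append(f"side{input_size // stride}")
    return names


def anchor_masks_for(input_size, masks=((1, 2, 3), (3, 4, 5))):
    """Map each output layer name to its anchor mask (mask values are assumed)."""
    return {name: list(mask) for name, mask in zip(yolo_side_names(input_size), masks)}


print(yolo_side_names(608))  # ['side38', 'side19']
print(yolo_side_names(416))  # ['side26', 'side13']
```

So a json file with side26 and side13 only fits a network with a 416x416 input; a blob compiled for 608x608 asks for side38 and side19 instead.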

- The detector now sort of works, although it shows far too many labels and complains about:

Label of ouf bounds (label index: 66, available labels: 2.

- This is not that strange, because Yolo knows 80 classes, not only faces.

- Copied the labels from yolo-v3-tiny-tf. Now there is only a single warning:

[14442C10710891D000] [3.4.4] [3.781] [DetectionNetwork(9)] [warning] Network compiled for 4 shaves, maximum available 13, compiling for 6 shaves likely will yield in better performance


- I removed the depth-calibration warning by enabling Depth-properties on top of the Depth-tab.

- Still, I receive many labels with confidence above 100%:

- Looked into the yolov4-tiny.cfg I used for the conversion. That one still has height/width of 416x416.

- The number of classes is as expected 80, although anchors = 10,14, 23,27, 37,58, 81,82, 135,169, 344,319 could be checked.

- The filter is set to 128, instead of 255, as suggested in this post. The yolov4-tiny.cfg in public (together with the weights) is precisely the same.
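The expected value of 255 follows from the usual YOLO layout: the convolution directly before a yolo layer needs (classes + 5) filters per anchor, while other convolution layers in the cfg can have different values. Below is a small sketch of that arithmetic, with the anchors string copied from the cfg above. The function names are illustrative and three anchors per output layer is an assumption:

```python
def parse_anchors(text):
    """Turn the cfg 'anchors' string into a list of (width, height) pairs."""
    values = [int(v) for v in text.replace(" ", "").split(",") if v]
    if len(values) % 2 != 0:
        raise ValueError("anchors must come in width,height pairs")
    return list(zip(values[0::2], values[1::2]))


def expected_yolo_filters(classes, anchors_per_layer=3):
    """Filters feeding a yolo layer: (x, y, w, h, objectness + classes) per anchor."""
    return (classes + 5) * anchors_per_layer


anchors = parse_anchors("10,14, 23,27, 37,58, 81,82, 135,169, 344,319")
print(len(anchors))               # 6 anchor boxes in total
print(expected_yolo_filters(80))  # 255
```

A filters value of 128 therefore only signals a problem if it belongs to the layer immediately before a yolo section.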

- Yet, the xml specifies an input-size of 608x608.

- As suggested in this post, I could add -r 416x416 while converting.

- Looked in the convert.py script, -r reorders the output layers, but there is no option to change the input or output dimensions.

- It seems to go wrong in the next step, where 608 is used as input_shape based on the suggestion in the OpenVINO tutorial. So, now I run python3.9 -m mo --saved_model_dir output --output_dir models416/IRs --input_shape [1,416,416,3] --model_name yolo-v4-tiny, which creates an xml with input 416x416.

- Used that one to create a new blob.

- When I switch to yolo-v3-tiny-tf, the depthai_demo first downloads the blob (Downloading ~/.cache/blobconverter/yolo-v3-tiny-tf_openvino_2021.4_4shave.blob...).
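The three conversion steps of this entry can be collected in one place. The sketch below only builds the command lines (it does not run them), using the flags and relative paths quoted above. The helper name and the default arguments are illustrative assumptions:

```python
import shlex


def conversion_commands(size=416, saved_model_dir="output", ir_dir="models416/IRs",
                        model_name="yolo-v4-tiny", python="python3.9"):
    """Build the Darknet -> SavedModel -> OpenVINO IR -> blob command lines."""
    xml = f"{ir_dir}/{model_name}.xml"
    bin_ = f"{ir_dir}/{model_name}.bin"
    return [
        # 1. Darknet cfg/weights to a TensorFlow SavedModel directory
        [python, "tools/model_converter/convert.py",
         "cfg/yolov4-tiny.cfg", "weights/yolov4-tiny.weights", f"{saved_model_dir}/"],
        # 2. SavedModel to OpenVINO IR with an explicit input shape
        [python, "-m", "mo", "--saved_model_dir", saved_model_dir,
         "--output_dir", ir_dir, "--input_shape", f"[1,{size},{size},3]",
         "--model_name", model_name],
        # 3. IR to a blob for the OAK-D
        [python, "-m", "blobconverter", "--openvino-xml", xml, "--openvino-bin", bin_],
    ]


for cmd in conversion_commands():
    print(shlex.join(cmd))
```

Keeping the input size as a single parameter makes it harder to end up with a 416x416 cfg and a 608x608 IR again.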

August 16, 2022

- Downloaded yolo-v4-tiny-tf with the command omz_downloader --name yolo-v4-tiny-tf. The model is downloaded in public/yolo-v4-tiny-tf. This contains the yolov4-tiny.weights and a directory keras-YOLOv3-model-set/, which contains cfg, common, tools and yolo4. In the latter yolo4/models/layers.py can be found.

- Had some trouble with different versions of numpy. Removed numpy from /usr/lib/python3/dist-packages and installed the latest for python3.9 with python3.9 -m pip install numpy. That worked, although there were still some conflicts:

Collecting numpy

Downloading numpy-1.23.2-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (17.1 MB)

|████████████████████████████████| 17.1 MB 11.3 MB/s

ERROR: scikit-image 0.19.3 has requirement scipy>=1.4.1, but you'll have scipy 1.3.3 which is incompatible.

ERROR: openvino 2021.4.2 has requirement numpy<1.20,>=1.16.6, but you'll have numpy 1.23.2 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement defusedxml>=0.7.1, but you'll have defusedxml 0.6.0 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement nltk>=3.5, but you'll have nltk 3.4.5 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement numpy<1.20,>=1.16.6, but you'll have numpy 1.23.2 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement pillow>=8.1.2, but you'll have pillow 7.0.0 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement PyYAML>=5.4.1, but you'll have pyyaml 5.3.1 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement requests>=2.25.1, but you'll have requests 2.22.0 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement scipy~=1.5.4, but you'll have scipy 1.3.3 which is incompatible.

ERROR: openvino-dev 2021.4.2 has requirement texttable~=1.6.3, but you'll have texttable 1.6.2 which is incompatible.

ERROR: imageio 2.21.1 has requirement pillow>=8.3.2, but you'll have pillow 7.0.0 which is incompatible.

Installing collected packages: numpy

Successfully installed numpy-1.23.2

- At least python3.9 -m mo --help now works.

- Next, I looked at Compression to FP16, and did python3.9 -m mo --input_model yolov4-tiny.weights --framework tf --data_type FP16. That fails on missing module tensorflow.

- Did python3.9 -m pip install tensorflow. That helped, although the script now fails on numpy: installed: 1.23.2, required: < 1.20. Ran python3.9 -m pip install numpy==1.19.5, but that gives the warning tensorflow 2.9.1 has requirement numpy>=1.20. That in turn gives an error in bfloat16_type(): Unable to convert function return value to a Python type!.

- Tried python3.9 -m pip install tensorflow==2.8.2, which automatically installed numpy-1.23.2 again (which again conflicts with openvino 2021.4.2).

- Tried again with python3.9 -m pip install tensorflow==2.7.3 numpy==1.19.5
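The back-and-forth above comes down to version ranges that do not overlap. Below is a minimal sketch that checks a numpy version against the requirements quoted in the pip messages. The parsing is deliberately simplified (plain numeric versions, only the operators >=, == and <) and the helper names are illustrative:

```python
def parse_version(text):
    """'1.19.5' -> (1, 19, 5); short versions are padded with zeros."""
    parts = [int(part) for part in text.split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def satisfies(version, spec):
    """Check a version against a comma-separated spec such as '<1.20,>=1.16.6'."""
    v = parse_version(version)
    for clause in spec.split(","):
        clause = clause.strip()
        for op in (">=", "==", "<"):
            if clause.startswith(op):
                bound = parse_version(clause[len(op):])
                ok = {">=": v >= bound, "==": v == bound, "<": v < bound}[op]
                if not ok:
                    return False
                break
        else:
            raise ValueError(f"unsupported clause: {clause!r}")
    return True


requirements = {
    "openvino 2021.4.2": "<1.20,>=1.16.6",
    "tensorflow 2.9.1": ">=1.20",
}
for candidate in ("1.19.5", "1.23.2"):
    print(candidate, {name: satisfies(candidate, spec) for name, spec in requirements.items()})
```

No numpy version can satisfy both ranges at once, which is why an older tensorflow had to be pinned together with numpy 1.19.5.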

- This more or less works. I received the following output:

python3.9 -m mo --input_model yolov4-tiny.weights --framework tf --data_type FP16

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: ~/git/depthai/public/yolo-v4-tiny-tf/yolov4-tiny.weights

- Path for generated IR: ~/depthai/public/yolo-v4-tiny-tf/.

- IR output name: yolov4-tiny

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: None

- Use the config file: None

- It could be that I also have to provide the layers and cfg from keras-YOLOv3-model-set. I tried python3.9 -m mo --input_model yolov4-tiny.weights --framework tf --tensorflow_object_detection_api_pipeline_config keras-YOLOv3-model-set/cfg/yolov4-tiny.cfg, but still Use the config file: None

- Note that in my environment PYTHONPATH=/opt/ros/foxy/lib/python3.8/site-packages is still active, although I commented out all references to foxy. Still, ros2 can be found! Removing the sourcing of ~/git/RoboCup2022RVRL_Demo/install/setup.bash solved that.

- Running sudo apt install python3.9-dev solved the inference warning, and now I get these warnings:
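A leftover PYTHONPATH entry like this can be spotted from Python itself. Below is a small sketch that lists the entries pointing at a different Python minor version than the running interpreter; the function name is illustrative and the check is only a string match on pythonX.Y in the path:

```python
import os
import re
import sys


def foreign_site_packages(pythonpath, running=None):
    """Return PYTHONPATH entries that belong to another Python minor version."""
    running = running or f"python{sys.version_info.major}.{sys.version_info.minor}"
    foreign = []
    for entry in pythonpath.split(os.pathsep):
        match = re.search(r"python\d+\.\d+", entry)
        if match and match.group(0) != running:
            foreign.append(entry)
    return foreign


print(foreign_site_packages(os.environ.get("PYTHONPATH", "")))
```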

- Inference Engine found in: /home/arnoud/.local/lib/python3.9/site-packages/openvino

Inference Engine version: 2021.4.2-3976-0943ed67223-refs/pull/539/head

Model Optimizer version: 2021.4.2-3976-0943ed67223-refs/pull/539/head

/home/arnoud/.local/lib/python3.9/site-packages/keras_preprocessing/image/utils.py:23: DeprecationWarning: NEAREST is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.NEAREST or Dither.NONE instead.

'nearest': pil_image.NEAREST,

/home/arnoud/.local/lib/python3.9/site-packages/keras_preprocessing/image/utils.py:24: DeprecationWarning: BILINEAR is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.BILINEAR instead.

'bilinear': pil_image.BILINEAR,

/home/arnoud/.local/lib/python3.9/site-packages/keras_preprocessing/image/utils.py:25: DeprecationWarning: BICUBIC is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.BICUBIC instead.

'bicubic': pil_image.BICUBIC,

/home/arnoud/.local/lib/python3.9/site-packages/keras_preprocessing/image/utils.py:28: DeprecationWarning: HAMMING is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.HAMMING instead.

if hasattr(pil_image, 'HAMMING'):

/home/arnoud/.local/lib/python3.9/site-packages/keras_preprocessing/image/utils.py:30: DeprecationWarning: BOX is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.BOX instead.

if hasattr(pil_image, 'BOX'):

/home/arnoud/.local/lib/python3.9/site-packages/keras_preprocessing/image/utils.py:33: DeprecationWarning: LANCZOS is deprecated and will be removed in Pillow 10 (2023-07-01). Use Resampling.LANCZOS instead.

if hasattr(pil_image, 'LANCZOS'):

/home/arnoud/.local/lib/python3.9/site-packages/flatbuffers/compat.py:19: DeprecationWarning: the imp module is deprecated in favour of importlib; see the module's documentation for alternative uses

import imp

/home/arnoud/.local/lib/python3.9/site-packages/mo/mo/front/tf/loader.py:113: RuntimeWarning: Unexpected end-group tag: Not all data was converted

graph_def.ParseFromString(f.read())

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (): Graph contains 0 node after executing protobuf2nx. It may happen due to problems with loaded model. It considered as error because resulting IR will be empty which is not usual
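The empty graph is most likely a consequence of the input file: yolov4-tiny.weights holds Darknet weights, while the loader tries to parse it as a TensorFlow protobuf (graph_def.ParseFromString in the warning above). Below is a hedged sketch of a pre-check that picks the Model Optimizer input flag from the path alone; the function name is illustrative, and only the two flags that appear in this labbook are used:

```python
from pathlib import Path


def mo_input_arguments(model_path):
    """Pick the Model Optimizer input flag for a TensorFlow model path."""
    path = Path(model_path)
    if path.suffix == ".weights":
        raise ValueError(
            "Darknet .weights is not a TensorFlow graph; convert it first "
            "(e.g. with keras-YOLOv3-model-set/tools/model_converter/convert.py)"
        )
    if path.name == "saved_model.pb":
        # A SavedModel is loaded through its directory, not through the file
        return ["--saved_model_dir", str(path.parent)]
    if path.suffix == ".pb":
        # A single frozen graph file
        return ["--input_model", str(path)]
    # Otherwise assume a SavedModel directory
    return ["--saved_model_dir", str(path)]


print(mo_input_arguments("output/saved_model.pb"))
```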

August 15, 2022

- Following the instructions of Connecting Your POWERUP 4.0 For The First Time.

- The PowerUp 4.0 Android app was not installed yet, so installed it (v1.36.9).

- While charging, I watched the three pre-flight videos. Two essential hints are to remove the cover of your phone and to keep the antenna wire of the plane vertical.

- The bluetooth connection works. Updating the firmware.

- The first flight experiments without power were not that successful. It dived down; looks like the trim flaps are cut too short. Will extend them.

- Made them as large as in this tutorial.

- When starting the engines at 55%, the plane stayed reasonably level. Yet, it is difficult to keep it straight, so the field next to my house is not wide enough (had to get it out of the trees once).


- After installing the requirements, I could run the demo of the OAK-D, as described in the tutorial.

- The demo has both the yolo-v3-tf model and the yolo-v3-tiny-tf model.

- The next step would be using custom models.

- The OpenVINO download tools are part of openvino-dev, which didn't install under python3.8.

- Installed python3.9. Just python3.9 -m pip install openvino-dev failed because version 2022.1.0 wasn't available yet. Installing with python3.9 -m pip install openvino-dev==2021.4.2 worked, although I also had to update numpy with python3.9 -m pip install numpy>=1.19.3.

July 4, 2022

- It would be interesting to see the simulation of the Agilicious library.

- The ROS dependency seems to be mainly the VRPN library, which is the interface to the Optitrack. This library is available for ROS1 melodic and noetic; no wiki is available for ROS2, although a ROS2-foxy github branch exists (five years old).

- It seems to work fine with Optitrack and a ros2_bridge, although an attempt has also been made to port these drivers to ROS2.

May 24, 2022

- With skip-gates Qualcomm was able to improve the speed of convolution by a factor 300, see CVPR 2021 paper. They demonstrated this on skeleton detection with EfficientNet.

May 10, 2022

April 25, 2022

- This paper describes that with beam search you can compress a network by 75% with the same performance (for ResNet56, whereas it is now known that ResNet18 also works fine for the same dataset).

April 14, 2022

- This 2019 paper uses Gazebo as test environment for their obstacle avoidance.

April 5, 2022

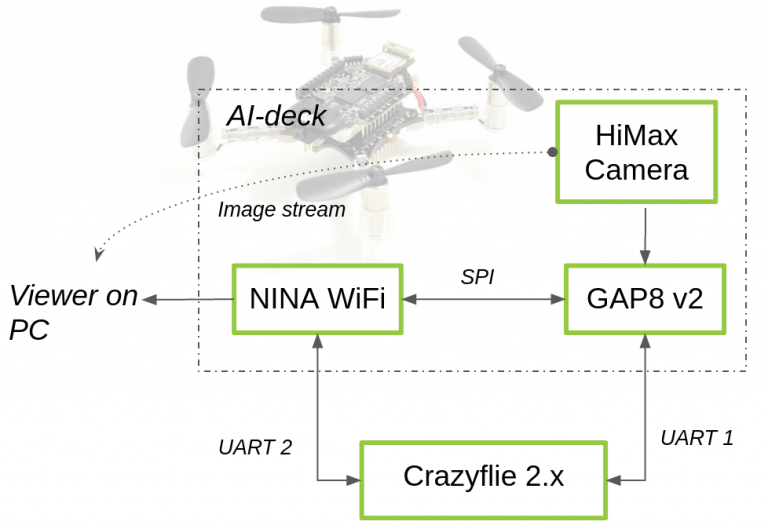

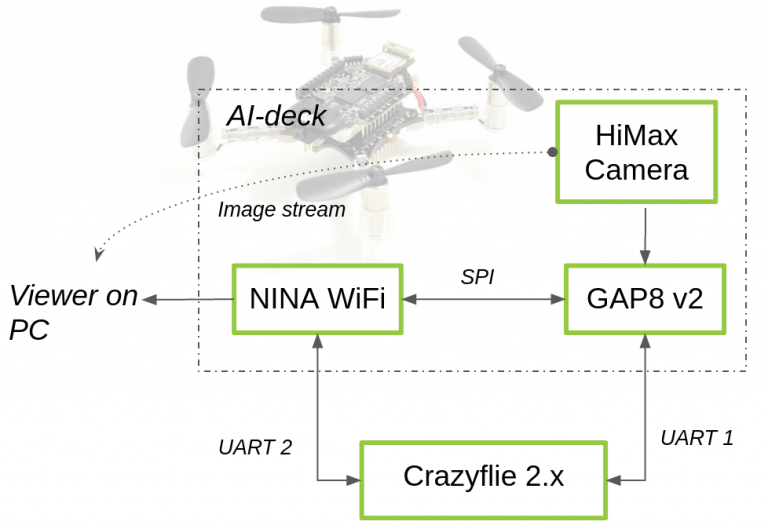

- There is one Crazyflie with AI deck at the Cyberzoo from TU Delft.

- Until the AI decks are available, the teams should start with a simulation environment.

- The organization will provide details, but there are several options, including two Gazebo versions (ROS Melodic and Kinetic).

- One of the new interns started with BAM workshops to get acquainted with the Crazyflie platform.

- The differences between the Crazyflie 2.0 and 2.1 are minor: mainly better radio performance and a more reliable IMU.

- In the meantime Bitcraze is still working on the AI deck, including a new communication protocol (CPX).

- BitCraze is also working on an AI-deck tutorial:


- On October 14, 2013 I experimented with the Crazyradio.

- Watched the presentation of the developers of the AI deck. In addition, they also used the Flow deck. Note that the micro-controller has 8 cores, but no floating point support.

- The scientific part of Daniele Palossi's presentation is based on Learning to Fly by Driving.

- They reduced an already shallow ResNet from float32 to int16, and reduced the max-pooling from 3x3 to 2x2. With an additional training set, they could reduce the NN without major changes in the precision and accuracy. They also optimized the memory usage and the relative frequencies between the fabric and the cores.
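To make the float32-to-int16 step concrete, here is a generic illustration of symmetric linear quantization with one shared scale. This is not the scheme from the presentation, whose details are not in these notes; the function names and the example weights are made up:

```python
def quantize_int16(values):
    """Symmetric linear quantization of floats to int16 with one shared scale."""
    peak = max(abs(v) for v in values)
    scale = peak / 32767 if peak > 0 else 1.0
    quantized = [max(-32768, min(32767, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.25, 0.003, 0.9]  # made-up example weights
q, scale = quantize_int16(weights)
restored = dequantize(q, scale)
print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

The rounding error per weight stays within half a quantization step, which gives some intuition for why the accuracy can survive such a reduction on a processor without floating point support.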

- In their most recent work they perform background augmentation to improve the robustness of the algorithm for new environments.

- Also watched the BAM 2021 presentation from Guido de Croon.

- Because of the memory limitation, the Crazyflies use simple finite state-transitions between behaviors, for instance using wall following to implement the bug algorithm. They also demonstrate exploration by giving each drone a preferred direction, including homing based on a radio signal.

- For the gas search Bart used a Crazyflie with laser rangers and again the optical-flow deck. There are actually two laser ranger decks, one measuring the height to the ground and another measuring obstacles around the drone.

- The laser ranger can only measure in four directions, so the drone can better zig-zag instead of following a direction that is in between two of the laser measurements (see end of the presentation).

- Here is the list of all expansion decks for the Crazyflie 2.X

- The dungeon generation of AutoGDM could be also interesting for the RoboCup Rescue competition.

- The MAV lab has a number of other interesting repositories, such as Cyberzoo models for Gazebo.

- Other repositories are the Crazyflie firmware and Crazyflie suite.

- Bart also pointed to swarmulator, which is a lightweight C++ simulator.

April 4, 2022

- Two under-graduates want to work on the challenge

- v1.0 of the rules has been published.

- It seems that I bought the Happy hacker bundle, because I saw the Crazyradio USB dongle on one of the two decks.

- Have to figure out how to distinguish my two Crazyflie 1.0s from my Crazyflie 2.1.

- Note that we have a Crazyflie 2.0, and not a 2.1.

March 8, 2022

Previous Labbook

Related Labbooks