December 31, 2023

The news is that I will give the course in Academic year 2024-2025, so I started the preparations again.

Started Labbook 2024.

The Master version of this course will be Vision for Autonomous Robots, so the rest of the RAE development can be followed on this labbook.

October 12, 2023

September 25, 2023

- Nvidia shows three configurations of Yolo5 on the Jetson Orin. With an FP16 output tensor it reaches 250 FPS, with INT8 double the FPS (410). Fast enough to still work with a reasonable FPS on a Jetson Nano.

September 18, 2023

- Read section 6.2. Very nice introduction of the measurement, which updates the estimate (and reduces the uncertainty). How much depends on the trust balance between model and observation (without mentioning the Kalman gain yet).

- Read the story of the steamship Tararua, which I found a not-so-clear example of a data-association error (although a sad story, probably well known in New Zealand).

- In section 6.6 Peter Corke mentions that the Monte Carlo method was part of the Manhattan project in WWII, and developed by John von Neumann and Stanislaw Ulam. Read The beginning of the Monte Carlo method. Stan realised that statistical sampling techniques were impractical for manual calculations, but that the ENIAC was the tool that could resuscitate the technique.

- Stan was interested in 2D games which developed in cellular automata. They coined the name Monte Carlo on a proposal related with neutron diffusion. The random numbers were generated by von Neumann's "middle-square digits" algorithm.

- Section 6.8.1 describes lidar-based odometry.

- For further reading the book of Barfoot (2017) is mentioned, next to the usual suspects. A free copy of the book is also unofficially compiled by the author himself, including errata. Book is quite mathematical, focussing on probability density functions, but includes Exercises.

- Peter Corke also recommends Paul Newman's free ebook C4B: Mobile Robotics (2003). The book contains as appendix Matlab code for e.g. a Particle Filter.

- For Visual SLAM Peter recommends PRO-SLAM as designed for teaching (latest supported OS is Ubuntu 18.04, combined with OpenCV3.2). The pipeline should look like:

- The algorithm is described in ICRA 2018 paper
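Coming back to the measurement update of section 6.2: a minimal sketch for a scalar state, assuming Gaussian uncertainty on both the prediction and the observation. The function name and the numbers are my own illustration, not code from the book. The ratio called gain here is the trust balance mentioned above.

```python
def kalman_update(mean, var, z, meas_var):
    """Fuse a predicted estimate (mean, var) with a measurement z of variance meas_var."""
    gain = var / (var + meas_var)        # 0 = trust the model, 1 = trust the observation
    new_mean = mean + gain * (z - mean)  # shift the estimate towards the measurement
    new_var = (1.0 - gain) * var         # the uncertainty never grows in this step
    return new_mean, new_var, gain


# Equal trust in model and observation: the estimate lands halfway.
mean, var, gain = kalman_update(mean=10.0, var=4.0, z=12.0, meas_var=4.0)
print(mean, var, gain)  # 11.0 2.0 0.5
```

With a much smaller meas_var the gain approaches 1 and the estimate jumps almost completely to the measurement.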

September 7, 2023

- Start reading Chapter 6, on Localization and Mapping. Visual Odometry is covered much later, in section 14.8.3 (using Multiple Images). Finished up to section 6.1, next will be 6.2 (Localization with a Landmark Map).

September 4, 2023

- Continued with Biomimicry workshop.

- Checked for available versions with pip3 install numpy==, but packages can be found in the indexes https://pypi.org/simple and https://www.piwheels.org.

- That is strange, because piwheels.org is especially made for the Raspberry Pi. The example with scipy in the virtualenv at least works.

- Read the rest of chapter 3, which included (section 3.3) smoothing dynamic movements and sensor fusion of IMU measurements.

-

- Chapter 4 starts with a statement that the wheel was invented 1000 years before the car, which points to pottery wheels (see wikipedia).

- Finished chapter 4. For more details it pointed to Siegwart's book.

-

- Said that I read chapter 5 already on June 8, 2023. Think that I meant the reactive part, so until section 5.2 (Map-Based Navigation). Don't recall that I read section 5.4 (Planning with an Occupancy-Grid Map).

- For instance, section 5.6 points back to Chapter 4 to plan paths that are driveable for non-omnidirectional configurations, such as vehicles that can only move forwards (ships, planes): the Dubins path-planner. Also the lattice planner and curved polynomials are taken into account. The chapter ends with the RRT planner.

- Added the 2-page publication from Dijkstra to the display of the hall of LAB42.
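To connect Dijkstra's publication with the occupancy-grid planning of section 5.4: a small sketch of Dijkstra's algorithm on a grid, assuming 4-connected moves with unit cost and cells marked 1 as occupied. The grid and the function name are made up for illustration; this is not the planner of the toolbox.

```python
import heapq


def dijkstra_grid(grid, start, goal):
    """Length of the shortest 4-connected path on an occupancy grid (1 = occupied)."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = [(0, start)]
    while queue:
        d, (r, c) = heapq.heappop(queue)
        if (r, c) == goal:
            return d
        if d > dist[(r, c)]:
            continue  # stale queue entry, a shorter route was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + 1
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    heapq.heappush(queue, (nd, (nr, nc)))
    return None  # goal not reachable


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(dijkstra_grid(grid, (0, 0), (2, 0)))  # 8: around the wall via the right column
```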

August 30, 2023

- Read chapter 3 of Robotics, Vision and Control up to section 3.2.2. There, translation force (3-tuple) and rotation torque (or moment, also 3-tuple) are combined into a wrench (6-tuple).

- Next is section 3.3, where dynamics (time-varying poses) are introduced.
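A tiny sketch of such a wrench, assuming the ordering (force first, then moment) and a moment that comes from a force acting at a lever arm from the reference point. The helper names and numbers are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3-tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def wrench(force, lever_arm):
    """6-tuple (fx, fy, fz, mx, my, mz) of a force applied at lever_arm."""
    moment = cross(lever_arm, force)  # m = r x f
    return tuple(force) + moment


# 10 N pushing down, half a metre along the x-axis from the reference point.
w = wrench(force=(0.0, 0.0, -10.0), lever_arm=(0.5, 0.0, 0.0))
print(w)
```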

August 24, 2023

- Looking into tf2 examples. Cloned the foxy-branch.

- Probably sudo apt install ros-foxy-geometry would also work.

- Made a symbolic link to that package in ~/ros2_ws/src and did a colcon build --symlink-install --cmake-args=-DCMAKE_BUILD_TYPE=Release.

- Another package (vision_opencv) gave an error, so moved it to ~/ros2_ws/tmp. Same for image_pipeline.

- Seems to work, although I get two non-related warnings after source install/setup.bash:

not found: "/home/arnoud/ros2_ws/install/vdb_mapping/share/vdb_mapping/local_setup.bash"

not found: "/home/arnoud/ros2_ws/install/zed_rgb_convert/share/zed_rgb_convert/local_setup.bash"

- Maybe because I did the colcon build in ~/ros2_ws/src instead of ~/ros2_ws

- Get package 'examples_tf2_py' not found. Tried again with a build in the ros2_ws directory. Had to move ~/ros2_ws/tmp to /tmp/ros2_ws because it was also checked for packages. Now the command ros2 launch examples_tf2_py broadcasters.launch.xml from Python examples for tf2 works (although the local_setup errors were still there).

- Added COLCON_IGNORE to the /tmp/ros2_ws directory and moved it back

- The receiving example displays: [INFO] [1692878684.413618766] [example_async_waits_for_transform]: Waiting for transform from sonar_2 to starboard_wheel, but nothing seems to change. The broadcasters.launch.xml warned that there was already a dynamic_broadcaster running. With the static_broadcaster the async_waits_for_transform shows the tf-messages.

-

- Before I start with ROS2 navigation TF2 example, I first look at the installation of the workshop.

- Falling back to the entry Simple Publisher-Describe, which indicates that the package build should be done in our ros2_ws/src.

- Did ros2 pkg create --build-type ament_python ros2_workshop.

- Running rosdep install --from-paths src --ignore-src -r --rosdistro foxy -y fails on the missing image_publisher/package.xml (see above).

- Yet, the colcon build works. ros2 run ros2_workshop talker fails, but that is because no talker code was provided.

- Continue with TurtleSim example.

- Installed sudo apt install ros-foxy-turtlesim, followed by ros2 run turtlesim turtlesim_node. That works. Also ros2 run turtlesim turtle_teleop_key works.
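To make clear for myself what the tf2 lookup from sonar_2 to starboard_wheel earlier in this entry has to compute, a plain-Python sketch of a frame tree in 2D. This is not the tf2 API: the parent frame base_link, the offsets and all function names are assumptions for illustration only. A lookup between frames that do not share a root raises an error, which is roughly the situation in which the example keeps waiting for a transform.

```python
import math

# child frame -> (parent frame, (x, y, yaw) of the child in the parent); made-up values
TREE = {
    "sonar_2": ("base_link", (0.30, 0.10, 0.0)),
    "starboard_wheel": ("base_link", (0.0, -0.20, 0.0)),
}


def compose(a, b):
    """Chain two 2D poses: first a, then b expressed in the frame of a."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            at + bt)


def inverse(a):
    """Inverse of a 2D pose."""
    ax, ay, at = a
    return (-math.cos(at) * ax - math.sin(at) * ay,
            math.sin(at) * ax - math.cos(at) * ay,
            -at)


def to_root(frame):
    """Walk up the tree; return the root name and the pose of frame in that root."""
    pose = (0.0, 0.0, 0.0)
    while frame in TREE:
        parent, offset = TREE[frame]
        pose = compose(offset, pose)
        frame = parent
    return frame, pose


def lookup_transform(target, source):
    """Pose of the source frame expressed in the target frame."""
    target_root, target_pose = to_root(target)
    source_root, source_pose = to_root(source)
    if target_root != source_root:
        raise LookupError(f"{target} and {source} are not connected")
    return compose(inverse(target_pose), source_pose)


print(lookup_transform("starboard_wheel", "sonar_2"))
```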

August 22, 2023

- The concise ROS2 book has tf2 detector code. Checking which chapter of slides this is covered.

- Chapter 4 covers the tf2 system. Yet, this example is detecting an obstacle in front of the Tiago robot with a laser scan.

- Better start with this ROS2 tutorial, which uses a virtual camera above a TurtleBot3.

- Real robotic applications only appear in Chapter 4. Until Chapter 6 mobile robots are covered, after that the book already zooms in on robot arms. Chapter 11 is an introduction to Image Processing, which could be interesting for the minor.

- Peter Corke has a page with teaching material, including slides (a page which no longer works).

- There is a page on Chapter 11 for the 1st edition.

- Sent a request via the 2nd edition teaching book.

August 21, 2023

- Received the STAR version of Peter Corke's Robotics, Vision and Control (3rd edition - Python version).

- Last update on June 26 indicated that I should continue at section 2.3.1.4 (Two-Vector representation).

- Good to remember the note on page 61: the spatialmath.base of the book considers a quaternion ordering of (s,v) while ROS uses the convention (v,s) - with s the scalar part and v the vector part (itself a tuple of 3 scalars).

- Section 2.3 describes the rigid-body transformation ξ in different parameterisations (SO(2), SE(2), 2D twist, SO(3), unit quaternion, SE(3), 3D twist, vector quaternion, dual quaternion).

- I would like to use the application example of the motion-model of Probabilistic Robotics, with a rotation, movement, rotation.

- I found the introduction of the Msc-thesis of Nienke Reints on rigid-body transformations more intuitive. Table 2.1 of the book lists the efficiency of storage, also a topic of Nienke's thesis.

- It would be nice to try the chapter 2 exercises with the tf2-library of ROS2. Exercise 20 is simple and applied: "A camera has its z-axis parallel to the vector [0, 1, 0] in the world frame, and its y-axis parallel to the vector [0, 0, 1]. What is the attitude of the camera with respect to the world frame expressed as a rotation matrix and as a unit quaternion?"

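A first attempt at Exercise 20 in plain Python, before trying it with tf2. The sketch assumes that the columns of the rotation matrix are the camera axes expressed in the world frame, that the given axes are orthonormal, and that the frame is right-handed (x = y × z). The function names are my own; the last helper only reorders the result from the (s,v) ordering of the book to the (v,s) ordering of ROS.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotation_from_y_z(y_axis, z_axis):
    """Rotation matrix whose columns are the x, y and z axes in the world frame."""
    x_axis = cross(y_axis, z_axis)  # right-handed frame
    return [[x_axis[i], y_axis[i], z_axis[i]] for i in range(3)]


def quaternion_sv(R):
    """Unit quaternion (s, x, y, z); branches on the largest diagonal term for stability."""
    trace = R[0][0] + R[1][1] + R[2][2]
    if trace > 0:
        S = 2.0 * math.sqrt(trace + 1.0)
        return (S / 4, (R[2][1] - R[1][2]) / S, (R[0][2] - R[2][0]) / S, (R[1][0] - R[0][1]) / S)
    if R[0][0] > R[1][1] and R[0][0] > R[2][2]:
        S = 2.0 * math.sqrt(1.0 + R[0][0] - R[1][1] - R[2][2])
        return ((R[2][1] - R[1][2]) / S, S / 4, (R[0][1] + R[1][0]) / S, (R[0][2] + R[2][0]) / S)
    if R[1][1] > R[2][2]:
        S = 2.0 * math.sqrt(1.0 + R[1][1] - R[0][0] - R[2][2])
        return ((R[0][2] - R[2][0]) / S, (R[0][1] + R[1][0]) / S, S / 4, (R[1][2] + R[2][1]) / S)
    S = 2.0 * math.sqrt(1.0 + R[2][2] - R[0][0] - R[1][1])
    return ((R[1][0] - R[0][1]) / S, (R[0][2] + R[2][0]) / S, (R[1][2] + R[2][1]) / S, S / 4)


def to_ros_order(q):
    """(s, x, y, z) -> (x, y, z, s)."""
    s, x, y, z = q
    return (x, y, z, s)


R = rotation_from_y_z(y_axis=(0, 0, 1), z_axis=(0, 1, 0))
q = quaternion_sv(R)
print(R)                # [[-1, 0, 0], [0, 0, 1], [0, 1, 0]]
print(q)                # scalar part 0, vector part about (0, 0.707, 0.707)
print(to_ros_order(q))
```

The scalar part is zero, so this is a rotation of 180 degrees about the axis (0, 1, 1)/√2; the sign of the quaternion is ambiguous in that case.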
August 14, 2023

June 20, 2023

June 19, 2023

- Made some (wired-connection) progress in ZSB.

- The code of the Concise Introduction is based on the Tiago robot; only the second part of Chapter 6 introduces Nav2 with the Turtlebot.

- I switched the ROS_DOMAIN_ID to 105 for Turtle5, instead of the default #30 (step 3.2.7). This example can be found in br2_navigation. In the later slides the Tiago is used again.

- Next step in the setup (Foxy tab), is the LDS-02 Configuration.

- Note that in this step both the packages cartographer and navigation2 are removed; exactly the package which is used in Chapter 6.

- Building the packages ld08_driver and turtlebot3_node takes so much time that the kernel is complaining on hanging tasks.

- At the end the compilation failed on not enough memory. Run the compilation again with option --parallel-workers 1, which finishes in a few seconds.

- The tiago_navigation_launch seems only to depend on tiago in tiago_nav_params.

- The TB3 is one of the examples in the tutorials of nav2. The Getting started example uses the TurtleBot3 Waffle.

- It should be possible, although some random jumps were reported. The problem here was unhandled odometry calculation (value 0xFDFDFDFD), as explained in this issue.

- To fix this issue, you have to update the firmware of the Dynamixel motors (v0.2.1), which is at the moment still the latest version as mentioned in opencr-setup.

- Followed the instructions of the OpenCR setup, but at the end the OpenCR test fails. A red led is on, but I see no response on the SW 1 or SW 2 button.

- Time for a reboot without USB-C power. That helps, both SW buttons work as expected. Only remaining HW issue is the non-working wifi.

- Changed the 50-cloud-init.yaml to use true instead of yes for dhcp4.

- Run the command sudo cloud-init init, which indicates that wlan0 is not up. It also warns that a deprecated cloud-config is provided (chpasswd.list with username:password pairs).

- Changed the REGDOMAIN to NL, as suggested in this post. Now the wlan0 has both an inet and inet6 connection.

- Could still ping when no longer connected with wired ethernet.

July 11, 2023

- Start experimenting with the ros2 concise introduction code on nb-dual. Started with git clone --branch foxy-devel https://github.com/fmrico/book_ros2.git.

- Continued with vcs import . < book_ros2/third_parties.repos, which for instance added /ThirdParty/Groot.

- Next step is sudo rosdep init (default sources list file already exists), followed by rosdep update.

- Next is rosdep install --from-paths src --ignore-src -r -y, which installed libqt5svg5-dev and libdw-dev.

- The last step is colcon build --symlink-install. Got warnings that some selected packages are already built in one or more underlay workspaces: controller_manager, ros2_control_test_assets, controller_interface and hardware_interface (all in /opt/ros/foxy). In total 57 packages will be built.

- The build fails on missing control_toolbox packages. Solved that with sudo apt install ros-foxy-control-toolbox. The whole book_ros2 workspace is built.

-

- The BR2_Chapter2 starts with ros2 pkg list, followed by ros2 pkg executables demo_nodes_cpp. The latter command fails, because there is no such package. That can be repaired with sudo apt install ros-foxy-demo-nodes-cpp (should have installed the whole ros-foxy-desktop). Now ros2 run demo_nodes_cpp talker works.

- Did ros2 interface list and saw that I have test_msgs/action/Fibonacci instead of action_tutorials_interfaces/action/Fibonacci

- After sudo apt install ros-foxy-rqt-graph, the command ros2 run rqt_graph rqt_graph fails on from python_qt_binding import QT_BINDING

- Next step would be to create my_package.

-

- Turtle5 seems to boot without problems.

- Already used the Turtle in Future of Rescue workshop (2016 - Ubuntu 14.04, ROS Indigo).

- Also mentioned the line-following in ZSB 2020.

- In June 2022, the students made use of the TurtleBot. I concentrated on the Mover5 and Franka robot.

- I created the Blockly code for the TurtleBot3 in September 2022.

July 10, 2023

- The example code of the book A Concise Introduction to Robot Programming with ROS2 uses behavior trees. Could also be useful for Behavior-Based Robotics.

- Checking the slides. The 2nd Chapter nicely introduces talker-listener, followed by creating your first node. Note that it starts with example code in C++. At the end the Tiago robot is used (in simulation) as example.

- Chapter 3 introduces Finite State Machines with Bump&Go in C++ and Python. It ends with two proposed exercises:

- Check the diagonal; Instead of always turning to the same side, it turns to the side with no obstacle.

- Try to turn a non-obstructed angle in two ways: open-loop (calculate the angle beforehand) and closed loop (turn until the clear space is detected).

- Chapter 4 has 3 proposed exercises: one of them is to point an arrow to the nearest obstacle and change the color based on the observed distance.

- Chapter 5 introduces Reactive Behaviors and Tracking Objects with the camera. Note that we are back to C++ in this chapter. This chapter has again three exercises.

- Chapter 6 finally introduces Behavior Trees. After an introduction Bump&Go is implemented with Behavior Trees (still in C++). The next example directly combines this with Mapping and Nav2. It ends with an exercise to make a 3D-box around the obstacles, as done in Perceptor3D from Gentlebots @Home team.

-

- It would be nice to reproduce these exercises with the TurtleBot 3 (and later the RAE or Pepper).
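As preparation, a sketch of the Bump&Go state machine of Chapter 3 including the first proposed exercise (turn to the side with no obstacle). This is not the code of the book: it is plain Python without ROS, it counts control cycles instead of measuring time, and the threshold, the step counts and the three range readings (left, front, right) are hypothetical.

```python
from enum import Enum


class State(Enum):
    FORWARD = 1
    BACK = 2
    TURN_LEFT = 3
    TURN_RIGHT = 4


OBSTACLE_DISTANCE = 0.5  # metres, hypothetical threshold
BACK_STEPS = 2           # control cycles spent backing up
TURN_STEPS = 3           # control cycles spent turning


class BumpGo:
    def __init__(self):
        self.state = State.FORWARD
        self.steps_in_state = 0

    def _go(self, state):
        self.state = state
        self.steps_in_state = 0

    def step(self, left, front, right):
        """Advance one control cycle given three range readings in metres."""
        self.steps_in_state += 1
        if self.state is State.FORWARD:
            if front < OBSTACLE_DISTANCE:
                self._go(State.BACK)
        elif self.state is State.BACK:
            if self.steps_in_state >= BACK_STEPS:
                # the exercise: turn towards the side with the most free space
                self._go(State.TURN_LEFT if left >= right else State.TURN_RIGHT)
        elif self.steps_in_state >= TURN_STEPS:
            self._go(State.FORWARD)
        return self.state


robot = BumpGo()
readings = [(2.0, 2.0, 2.0), (0.3, 0.4, 2.0), (0.3, 0.4, 2.0), (0.3, 0.6, 2.0)]
for left, front, right in readings:
    print(robot.step(left, front, right).name)
```

The closed-loop variant of the second exercise would replace the TURN_STEPS counter with a check on the front reading.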

June 30, 2023

- The example code of ORB-SLAM3 is for the D435i, not the D435, which I got working yesterday. I should have both in the lab.

- I received the D435i on December 18, 2018.

- Looking around where it is now. Did I lend it to somebody?

- Never received a RealSense LiDAR 515, preordered December 19, 2019.

- I used the D435i on November 19, 2021.

- On April 15, 2019 I was looking at ros-packages for depth cameras, including realsense2_camera.

-

- Found several pages which implement ORB-SLAM for ROS:

- The official ROS ORB SLAM2 package, for ROS Melodic.

- For ROS2 there are not many SLAM entries.

- The Tutorial on SLAM with TurtleBot3, based on Webots simulation and the Cartographer package. This SLAM algorithm is described in this paper, which is essentially advanced scan matching with regular pose optimization. The Cartographer algorithm is compared with Graph Mapping and Graph FLIRT. For Graph Mapping the original algorithm was augmented with the constraints from Edwin Olson's thesis.

- The ROS wrapper for ORB-SLAM3, which used ORB-SLAM3 as standalone library. This is Ubuntu 20.04 based. The ROS DISTRO is not indicated, but is still catkin based.

- The same author also made an integrated package, orb_slam3_ros, including a Docker image for ROS Noetic including the RealSense SDK.

- This video shows how to use ORB-SLAM3 in a simulated environment. No code provided

- This tutorial shows how to build, integrate and calibrate the orb_slam2_ros package for Ubuntu 18.04 and ROS Melodic.

- The last tutorial is ROS Kinetic and ORB SLAM2 based.

-

- Because I have ORB-SLAM3 working, the wrapper seems to be the best first option.

-

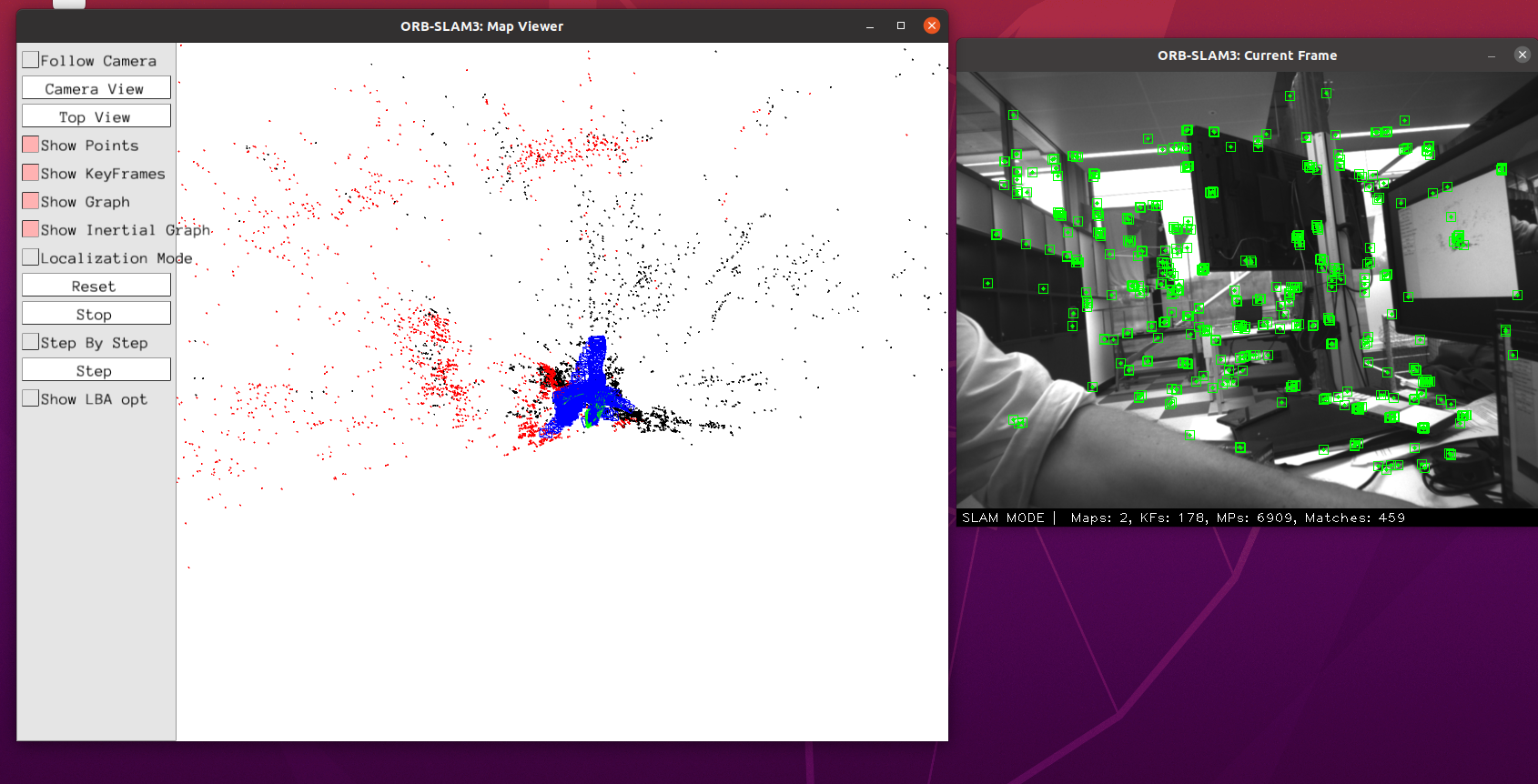

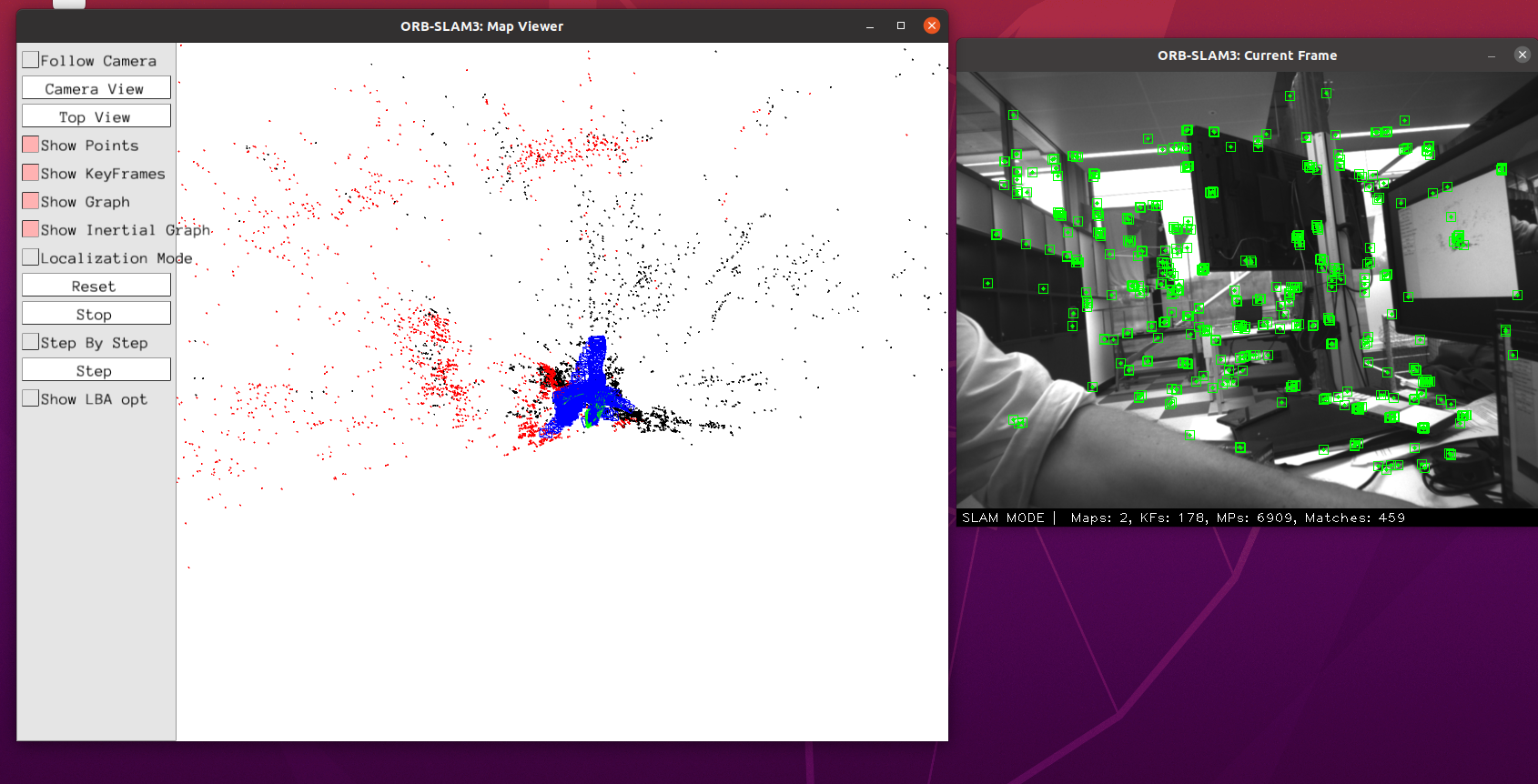

- When quitting, I got the following response:

Shutdown

Saving trajectory to CameraTrajectory.txt ...

There are 2 maps in the atlas

Map 0 has 198 KFs

Map 1 has 250 KFs

June 29, 2023

- ORB-SLAM3 is based on ORB-SLAM2.

- The software is tested on Ubuntu 18.04, but should easily compile on other platforms.

- The version of OpenCV should be at least v3.0. Tested on OpenCV 3.2.0 and 4.4.0.

- ROS support is optional. It has been tested on ROS Melodic.

- Cloning ORB_SLAM3 in ~/git on nb-dual.

- The build finds as C++ compiler GNU 9.4.0, for Eigen v3.3.7

- The build has also found OpenMP v4.5

- Building the modified g2o library gives a few deprecation warnings, yet Thirdparty/g2o/lib/libg2o.so is built.

- Yet, at the end ORB-SLAM fails on missing OpenCV (version 3.4.4 available, requires version 4.4). Removed the version from the CMakefile, next package missing is Pangolin. Pangolin installs libc++-10-dev, while ORB-SLAM3 requires libc++-11-dev

- Building Pangolin fails on the pango_python component. It fails on PY_VERSION_HEX, which comes from Python.h. A locate Python.h indicates that there are more than 20 versions of Python.h

- Looked into the CMakeCache.txt, which indicates PYBIND11_PYTHON_EXECUTABLE_LAST:INTERNAL=/usr/bin/python2.7.

- The python component is not required. Did cmake --build build --target help | head -40 to find that I could do cmake --build build --target pango_display.

- Moved the component/pango_python and examples/HelloPangolin to ~/git/Pangolin/tmp. That works.

- Did sudo cmake --build build --target install/local

- Back to ORB-SLAM3. The target ~/git/ORB_SLAM3/Thirdparty/g2o/lib/libg2o.so is built.

- Yet, something goes wrong with Pangolin. The error is /usr/local/include/sigslot/signal.hpp:1180:65: error: ‘slots_reference’ was not declared in this scope, which seems to be related with libc++11-dev.

- Did a sudo apt upgrade. That didn't help. Found this trick on this post. Seems to help, compilation continues.

- Next error is in one of the RGB-D examples. According to this post monotonic_clock is updated to steady_clock for newer compilers. The code was protected, but updated it to #ifdef COMPILEDWITHC11 || COMPILEDWITHC14. That gave some warnings leading to errors, so replaced it with #if defined(COMPILEDWITHC11) || defined(COMPILEDWITHC14). The same trick in the Monocular and Stereo examples. There is also an Examples_old directory. Build succeeded.

- Tried to run ./Examples/Stereo-Inertial/stereo_inertial_realsense_D435i Vocabulary/ORBvoc.txt ./Examples/Stereo-Inertial/RealSense_D435i.yaml (without calibrating first). Receive No device connected, please connect a RealSense device.

- Looked into the connection with dmesg | tail. That gave [10363.598472] usb 2-3.4: Manufacturer: Intel(R) RealSense(TM) Depth Camera 435, but also:

[10363.620975] usb 2-3.4: Failed to set U1 timeout to 0xff,error code -32

[10409.057590] usb 2-3.4: USB disconnect, device number 19

- Connecting the D435 directly (not via the Dell adapter). That helps (partly).

- Now I receive the error:

terminate called after throwing an instance of 'rs2::invalid_value_error'

what(): object doesn't support option #85

- One of the options RS2_OPTION_AUTO_EXPOSURE_LIMIT or RS2_OPTION_EMITTER_ENABLED fails for the D435. It is RS2_OPTION_AUTO_EXPOSURE_LIMIT. Protected this set_option with an if (sensor.supports(RS2_OPTION_AUTO_EXPOSURE_LIMIT))

- The output is now as follows:

0 : Stereo Module

1 : RGB Camera

Initiating the pipeline

Tlr =

1, 0, 0, 0.0498765

0, 1, 0, 0

0, 0, 1, 0

Left camera:

fx = 390.802

fy = 390.802

cx = 320.736

cy = 236.904

height = 480

width = 640

Coeff = 0, 0, 0, 0, 0,

Model = Brown Conrady

Right camera:

fx = 390.802

fy = 390.802

cx = 320.736

cy = 236.904

height = 480

width = 640

Coeff = 0, 0, 0, 0, 0,

Model = Brown Conrady

Input sensor was set to: Stereo

Loading settings from ./Examples/Stereo-Inertial/RealSense_D435i.yaml

-Loaded camera 1

-Loaded camera 2

Camera.newHeight optional parameter does not exist...

Camera.newWidth optional parameter does not exist...

-Loaded image info

-Loaded ORB settings

Viewer.imageViewScale optional parameter does not exist...

-Loaded viewer settings

System.LoadAtlasFromFile optional parameter does not exist...

System.SaveAtlasToFile optional parameter does not exist...

-Loaded Atlas settings

System.thFarPoints optional parameter does not exist...

-Loaded misc parameters

----------------------------------

SLAM settings:

-Camera 1 parameters (Pinhole): [ 382.613 382.613 320.183 236.455 ]

Segmentation fault (core dumped)

- The error is inside the call ORB_SLAM3::System SLAM().

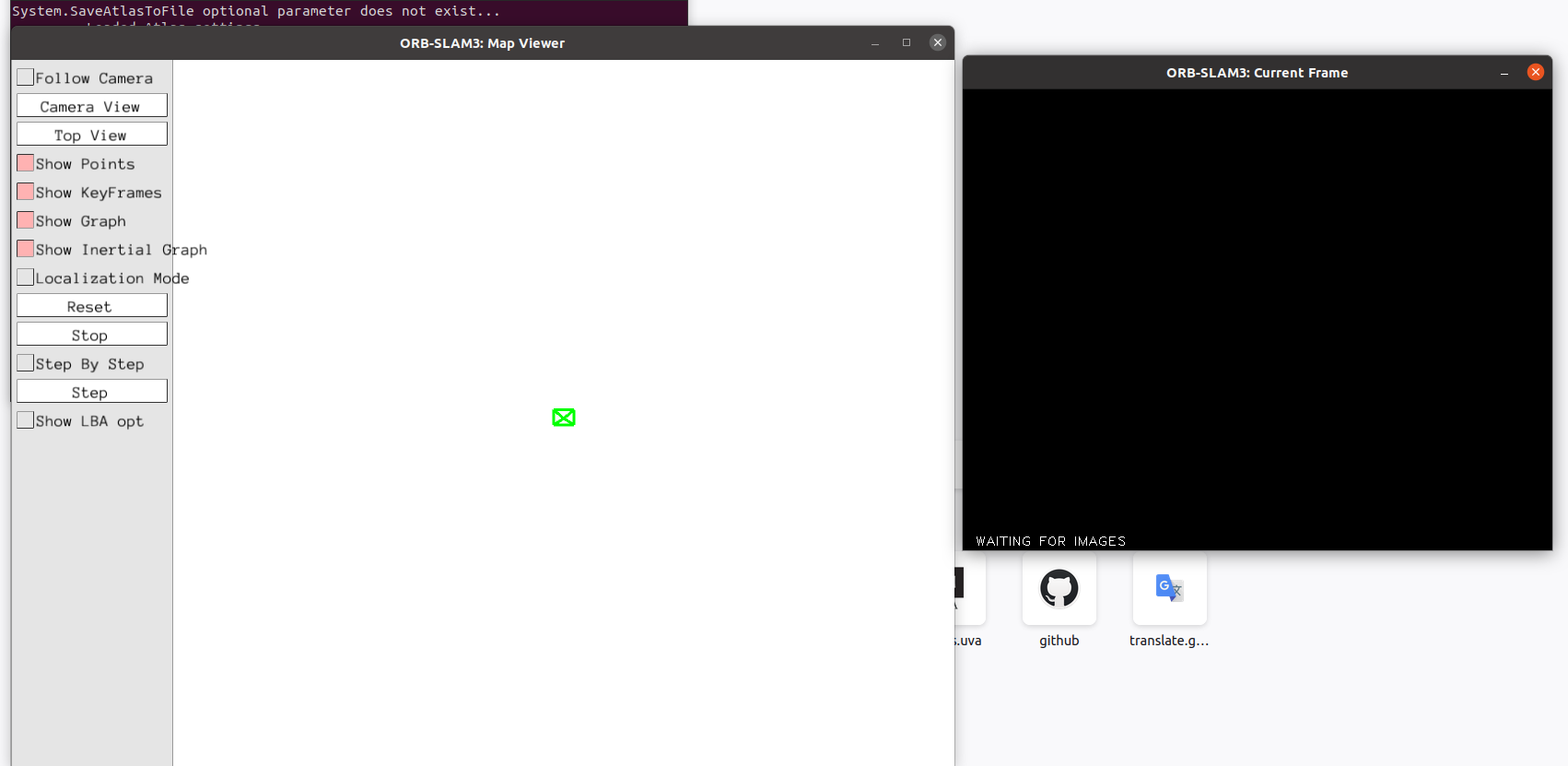

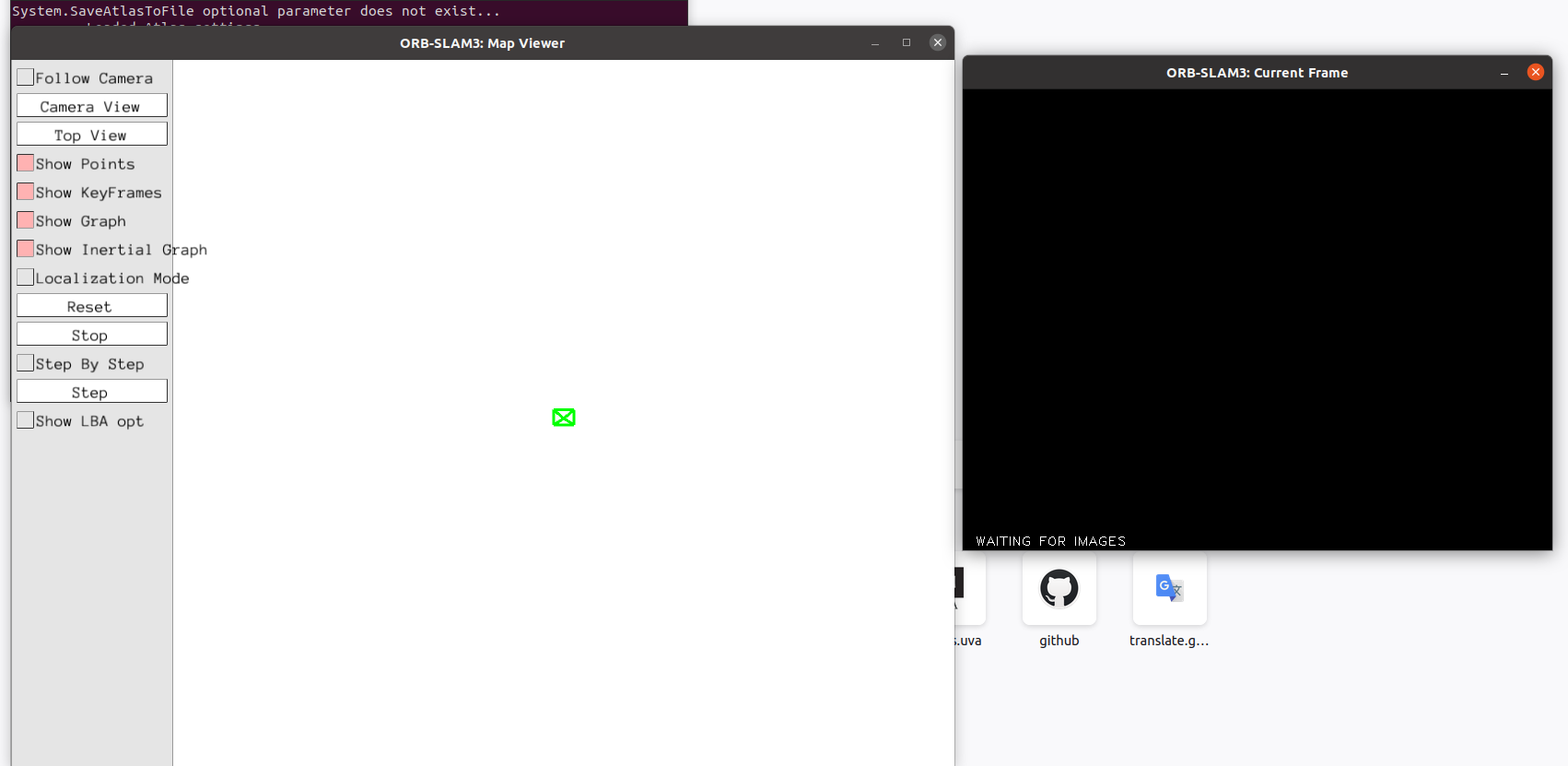

- The error is dumping cout << (*settings_) << endl;, while some optional parameters don't exist. Without this print the Viewer starts up, although it is waiting for images:

Loading ORB Vocabulary. This could take a while...

Vocabulary loaded!

Initialization of Atlas from scratch

Creation of new map with id: 0

Creation of new map with last KF id: 0

Seq. Name:

There are 1 cameras in the atlas

Camera 0 is pinhole

Initializing Loop Closing thread

Starting Loop Closing thread

Started Loop Closing thread

Before GetImageScale

281 dropped frs

Starting the Viewer

1 dropped frs

- After a while (minutes or key-stroke), the tracking started with the statement First KF:0; Map init KF:0. Still frames are dropped, but also receive *Loop detected, Local Mapping STOP, Local Mapping RELEASE, TRACK_REF_KF: Less than 15 matches!!, Fail to track local map!, Relocalized, Finishing session:
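Looking back at the calibration printed earlier in this entry: a small sketch of what those numbers mean for stereo depth. It assumes the standard pinhole relation Z = fx · b / d, that the translation 0.0498765 in Tlr is the stereo baseline in metres, and that fx = 390.802 is the focal length in pixels. The function names are hypothetical and not part of ORB-SLAM3 or librealsense.

```python
FX = 390.802          # focal length in pixels, from the printed left/right camera
BASELINE = 0.0498765  # translation in Tlr, assumed to be the baseline in metres


def depth_from_disparity(disparity_px, fx=FX, baseline=BASELINE):
    """Depth in metres of a point seen with the given disparity in pixels."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return fx * baseline / disparity_px


def disparity_from_depth(depth_m, fx=FX, baseline=BASELINE):
    """Disparity in pixels expected for a point at the given depth in metres."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return fx * baseline / depth_m


for depth in (0.5, 1.0, 5.0):
    print(depth, round(disparity_from_depth(depth), 2))
```

At 1 m the disparity is about 19.5 pixels, at 5 m less than 4 pixels, which gives a feeling for how quickly the depth resolution of such a short baseline degrades.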

June 26, 2023

- Read the rest of section 2.2 (Working in two dimensions). It would be interesting to port this chapter to Geometric Algebra.

- Section 2.3 (Working in three dimensions) not only introduces Euler angles, but also Cardan angles (yaw-pitch-roll).

- Continue tomorrow with section 2.3.1.4 (Two-Vector representation).

- For a 2nd chapter, this material is quite abstract. Should see what sort of exercises comes with it.
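As a possible exercise for the yaw-pitch-roll angles of section 2.3: a sketch that builds the rotation matrix from the three angles, assuming the ZYX order R = Rz(yaw) · Ry(pitch) · Rx(roll). It is written in plain Python instead of the toolbox, so the function names are my own.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rot_x(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]


def rot_y(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]


def rot_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix for roll about x, pitch about y and yaw about z (ZYX order)."""
    return matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))


# A yaw of 90 degrees turns the x-axis of the body onto the y-axis of the world.
R = rpy_to_matrix(0.0, 0.0, math.pi / 2)
print([round(R[i][0], 6) for i in range(3)])
```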

June 17, 2023

- Read the 2nd Chapter of Robotics, Vision and Control. The Pose Graphs described in section 2.1.3 can be accompanied by an exercise in ROS.

- Appendix B contains a full refresher on linear algebra.

June 16, 2023

- On nb-dual, on my Ubuntu 20.04 partition, python3 -m pip install rvc3python worked fine. Packages are installed in the site-package of python 3.8.10.

June 15, 2023

- Read the preface of Robotics, Vision and Control. This book is unlike other text books, and deliberately so. The book is inspired by Numerical Recipes in C. The book states: "Conceptually, the mathematics that underpins robotics is inescapable, but the theoretical complexity has been minimized and the book assumes no more than an undergraduate-engineering level of mathematical knowledge."

- Read the first chapter (Introduction), which indicated "The book is intended primarily for third or fourth year engineering undergraduate students, Masters students and first year Ph.D. students"

- Installation of the toolbox can be done with a simple pip install rvc3python.

- Tried it on my WSL partition at my home computer, which is running Ubuntu 20.04. Gave a timeout.

June 9, 2023

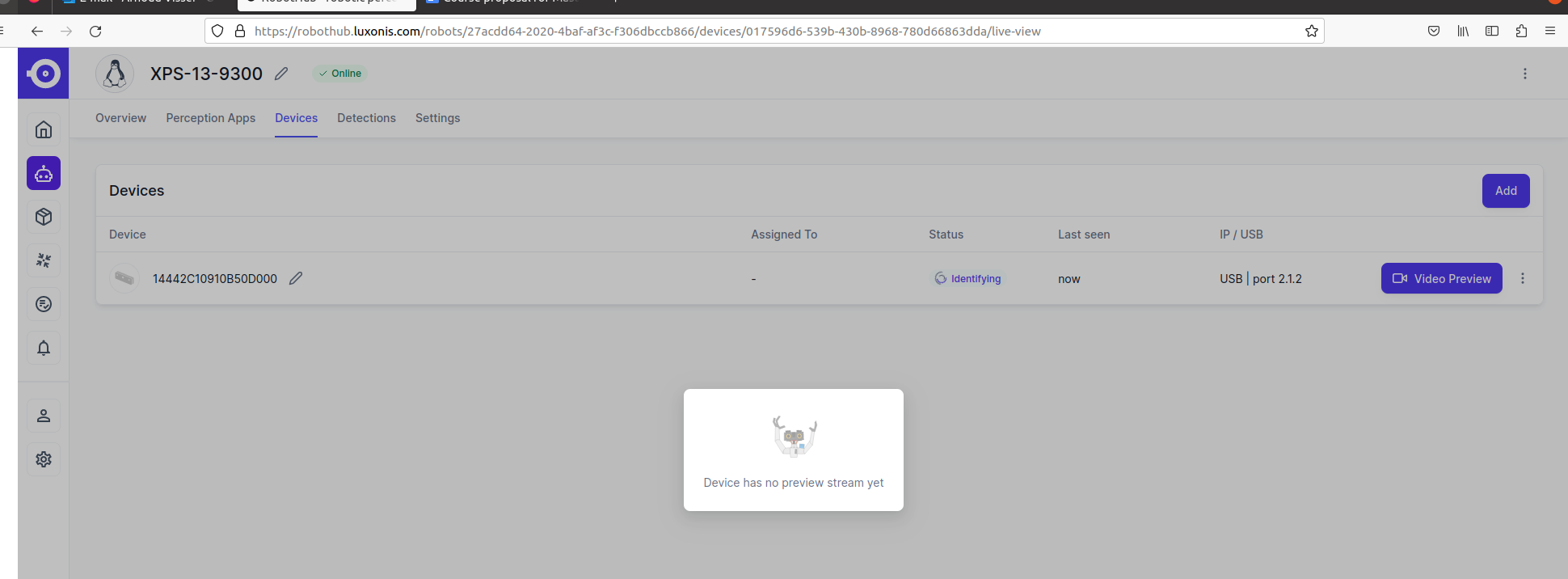

- Starting to read the RobotHub documentation a bit more thoroughly. The idea is that code can be created in the cloud, and executed in a Podman container (currently only as Python code).

- A podman container should be secure and compatible with docker.

- Installed podman. podman ps shows no running containers.

- Connected the OAK-D via the grey USB-C Mobile Adapter, which was visible in the RobotHub Overview (device Ready). Yet, still no stream available.

- Looked at Creating an App. In the documentation they mention the button 'Create App', which for me is 'Install App'. Yet, I cannot give it a name, I only get a dropdown menu, but no Apps are in the store.

- As first try, I would have liked to use this code from robothub-oak:

    import robothub
    from robothub_oak.manager import DEVICE_MANAGER

    class Application(robothub.RobotHubApplication):
        def on_start(self):
            devices = DEVICE_MANAGER.get_all_devices()
            for device in devices:
                color = device.get_camera('color', resolution='1080p', fps=30)
                color.stream_to_hub(name=f'Color stream {device.id}')
            DEVICE_MANAGER.start()

        def on_stop(self):
            DEVICE_MANAGER.stop()

- Running this code fails on missing robothub package. The package robothub_oak is installed (v1.3.1)

- Another interesting example is Object Detection, which also requires robothub.

- Installed python3 -m pip install robothub-sdk, which should allow me to communicate with RobotHub platform

- Finally I am able to see the running podman container with robothub-ctl podman container ps:

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8c99fefd5ace ghcr.io/luxonis/robothub-app-v2:2023.108.0914-minimal 28 hours ago Up 4 hours objective_swanson

- The input device of this container is not set to tty, so robothub-ctl podman run -it objective_swanson didn't work.

- Tried robothub-ctl podman attach --latest which gave an empty error. Same if you use objective_swanson as name.

- Yet, the following command works: robothub-ctl podman exec --latest -w /app more app.py. The result is showing both the color and depth stream:

    import robothub
    from robothub_oak.manager import DEVICE_MANAGER

    class BuiltinApplication(robothub.RobotHubApplication):
        def on_start(self):
            devices = DEVICE_MANAGER.get_all_devices()
            for device in devices:
                color = device.get_camera('color', resolution='1080p', fps=30)
                color.stream_to_hub(name=f'Color stream')
                stereo = device.get_stereo_camera('800p', fps=30)
                stereo.stream_to_hub(name=f'Depth stream')

        def start_execution(self):
            DEVICE_MANAGER.start()

        def on_stop(self):
            DEVICE_MANAGER.stop()

- The command robothub-ctl podman exec --latest -w /usr/local/lib/python3.10/site-packages ls showed that e.g. depthai_sdk-1.9.4.1 and robothub_oak-1.0.5 are installed, but robothub itself not.

- Running robothub-ctl podman exec --latest -w /app python3 app.py also fails on import robothub.

- Also robothub-ctl podman exec --latest pip show robothub gives no further information. Also env didn't give much new information (except some ROBOTHUB_ settings).

- Also robothub-ctl podman exec --latest python3 -m pip install robothub didn't work.

- Finally found /lib/robothub

- Included this directory with sys.path.append(), but this still fails on an unknown sessionId in communicator.py line 25. Maybe I should install python3.10.

- That helps. The import still fails, but on the existence of '/fifo/app2agent' on the local machine. Created an empty file, as in the container.

- Next error is missing the Environment variable ROBOTHUB_TEAM_ID. Add this variable, together with ROBOTHUB_APP_VERSION, ROBOTHUB_ROBOT_APP_ID, ROBOTHUB_ROBOT_ID.

- Next error is missing '/config/config.json'.

- Now I have to install robothub-oak again, together with depthai and depthai_sdk.

- First had to install the latest version of pip for python3.10, because otherwise html5lib gives an error. Trick was curl -sS https://bootstrap.pypa.io/get-pip.py | python3.10

- The app.py now runs, although I get RequestsDependencyWarning: urllib3 (2.0.3) or chardet (3.0.4) doesn't match a supported version!. Originally urllib3 was version 1.26.16, which also didn't work.

- The actual check is urllib3 >= 1.21.1, <= 1.25 and chardet >= 3.0.2, < 3.1.0. This can be solved easily with python3.10 -m pip install requests==2.31.0.
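To see why both urllib3 versions triggered the warning, a sketch of the bounds quoted above. It is my own reconstruction of the check, not the code of requests, and it assumes purely numeric version strings and that the upper bound of urllib3 is applied on major.minor only.

```python
def parse(version):
    """'1.26.16' -> (1, 26, 16); missing parts count as 0."""
    parts = [int(p) for p in version.split(".")[:3]]
    return tuple(parts + [0] * (3 - len(parts)))


def urllib3_ok(version):
    """urllib3 >= 1.21.1, <= 1.25 (upper bound on major.minor)."""
    v = parse(version)
    return (1, 21, 1) <= v and v[:2] <= (1, 25)


def chardet_ok(version):
    """chardet >= 3.0.2, < 3.1.0."""
    return (3, 0, 2) <= parse(version) < (3, 1, 0)


for candidate in ("2.0.3", "1.26.16", "1.25.11"):
    print("urllib3", candidate, urllib3_ok(candidate))
print("chardet 3.0.4", chardet_ok("3.0.4"))
```

So chardet 3.0.4 was fine all along; both urllib3 2.0.3 and 1.26.16 fall outside the accepted range of the older requests version.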

June 8, 2023

- Read Chapter 5 (Navigation) of Peter Corke's book.

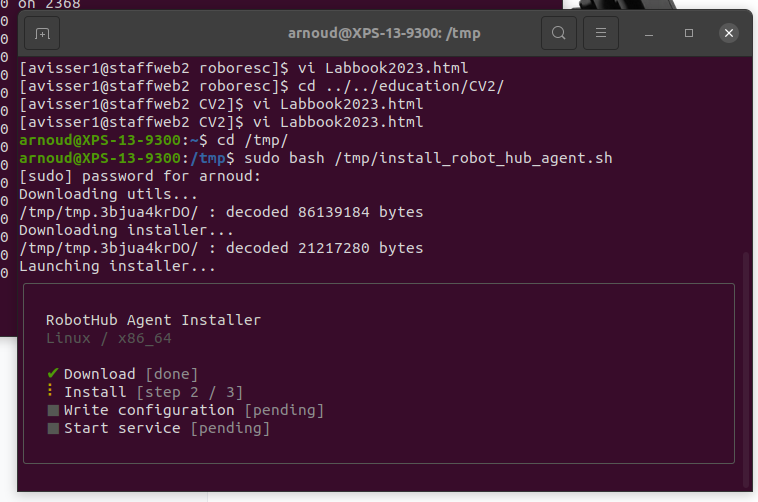

- Created an account at RobotHub.

- My laptop fulfills the requirements: Operating System: Ubuntu 20.04 / 22.04 (64-bit), Raspberry Pi OS (64-bit)

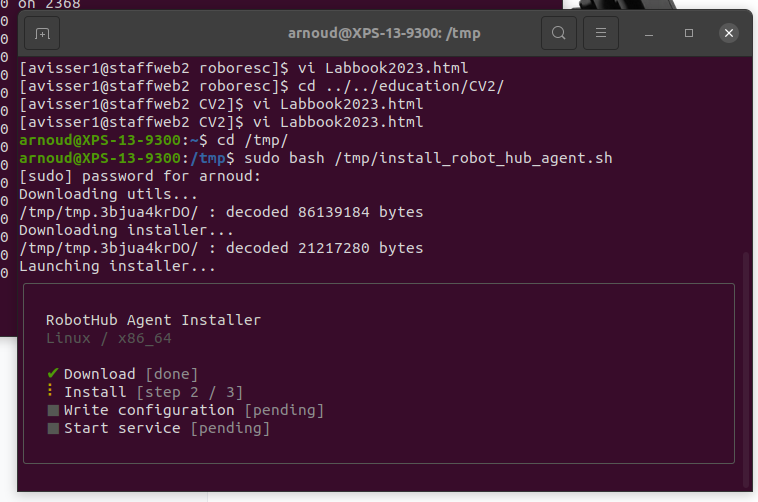

- Run the install script, and added nb-dual as (virtual) robot.

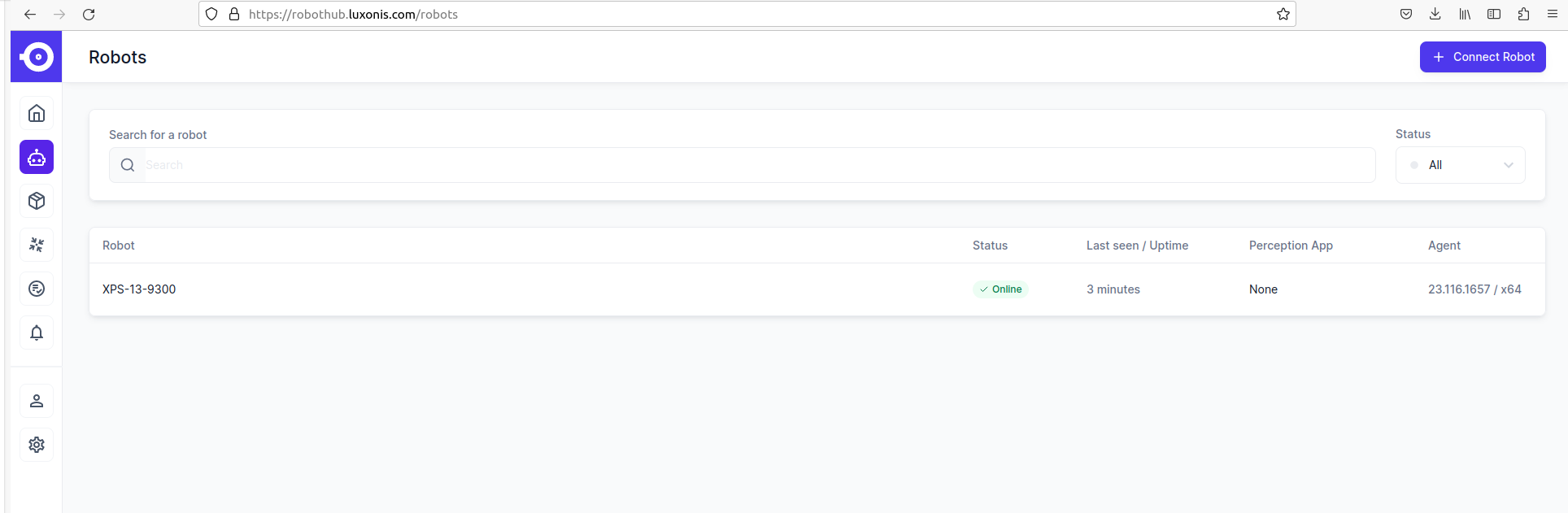

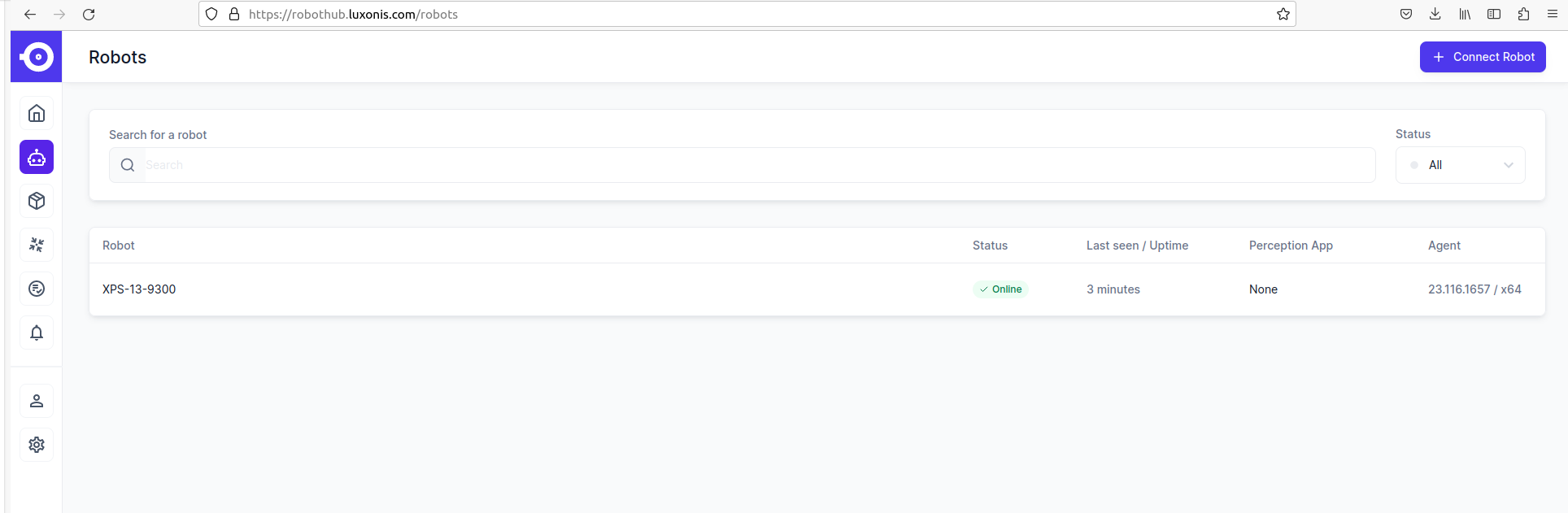

- In the RobotHub cloud my laptop is now visible as (virtual) robot, which I could connect to (actually, connect robot gives the option of the install script again):

- Running robothub-ctl help gave Missing permissions! User probably not in group `robothub`., which can be solved with sudo usermod -a -G robothub $USER.

- After reboot, the command robothub-ctl --version works (v1.0.0). The command robothub-ctl --help gives:

Commands:

podman Proxy for podman inside agent

version Prints installed agent version.

apps Prints installed apps.

devices Prints discovered devices.

status Status of the agent.

start Start agent.

stop Stop agent.

restart Restart agent.

startup Enable or disable automatic launch of agent on startup.

logs [options] Logs from the agent process.

upload-support Uploads data to help debug issues. This may include some

confidential information. Access to it is granted only to

select Luxonis employees

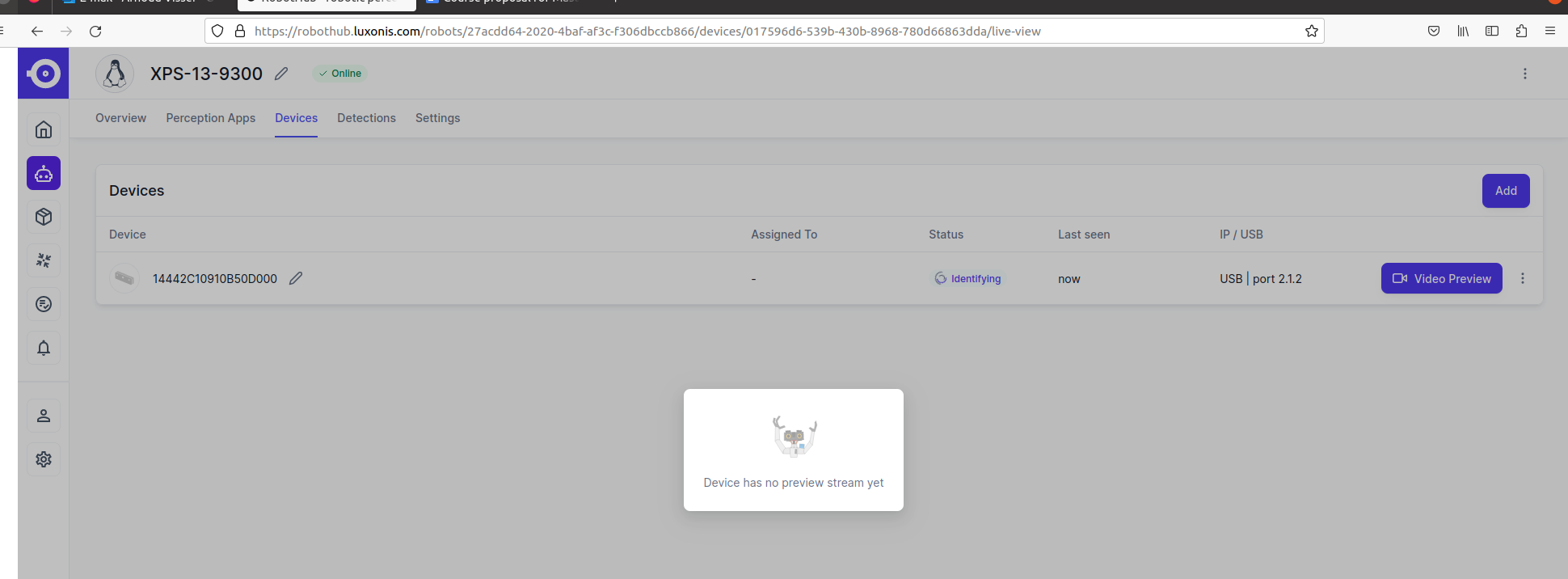

- Without any Luxonis products robothub-ctl devices returns nothing. With an OAK camera (mono, not the OAK-D), the command gives:

────────────────────────────────────────────┬────────┬──────┬──────────────────┐

──── ──── ──── ── ── ────

serial name product │ locatio│ statu│ connectionSt app │

tus

────────────────────────────────────────────┼────────┼──────┼──────────────────┤

──── ──── ──── ── ── ────

14442C10910B50 14442C10910B50 unknown │ USB │ │ identifying none│

000 000 (unknown) 2.1.2 success

────────────────────────────────────────────┴────────┴──────┴──────────────────┘

- The command lsusb gives here:

Bus 002 Device 006: ID 03e7:f63b Intel Myriad VPU [Movidius Neural Compute Stick]

- Note that dmesg reports two new usb-devices:

[ 7394.066989] usb 3-8.2: New USB device strings: Mfr=1, Product=2, SerialNumber=3

[ 7394.066992] usb 3-8.2: Product: Movidius MyriadX

[ 7394.066995] usb 3-8.2: Manufacturer: Movidius Ltd.

[ 7394.066997] usb 3-8.2: SerialNumber: 03e72485

[ 7401.491081] usb 2-1.2: New USB device strings: Mfr=1, Product=2, SerialNumber=3

[ 7401.491084] usb 2-1.2: Product: Luxonis Device

[ 7401.491086] usb 2-1.2: Manufacturer: Intel Corporation

[ 7401.491088] usb 2-1.2: SerialNumber: 14442C10910B50D000

- Tried to get a Video Preview from the device, but the status of the device stays on 'Identifying'. Changed from Adapter to a USB-B / C connector, which didn't help. Trying an OAK-D with a Thunderbolt cable after lunch.

- Strange, because it seems that I am doing what is needed to get started.

-

- Connected the OAK-D with the Thunderbolt cable at the left USB-C port. That shows up as a second device:

────────────────────────────────────────────┼───────┼──────┼──────────────────┤

──── ──── ──── ── ── ────

14442C10710891 unknown │ USB 3.│ │ identifying none│

000 (unknown) success

────────────────────────────────────────────┴───────┴──────┴──────────────────┘

- Yet, strangely enough the RobotHub indicates an outage, and doesn't recover, even after sudo robothub-ctl start.

- Checked robothub-ctl status, which gave:

╔═══════════════════════════════════════════════════════════╗

║ ║

║ RobotHub Agent (23.116.1657 | linux/x64 | DepthAI 2.19.1) ║

║ ║

╚═══════════════════════════════════════════════════════════╝

Web UI https://localhost:9010

DepthAI 2.19.1

Connected to cloud? yes (https://robothub.luxonis.com)

Pending detections 0

- Checked https://localhost:9010, which showed that the OAK-D was recognized (the OAK not, because of USB-B (USB 2.0)?)

- The preview asks which stream to use (color or depth), but no image shows up.

- Tried to connect the OAK camera via the USB Thunderbolt, but that device stays in 'Identifying' stage.

- A firmware update seems to be automatic when updating depthai-python with python3 -m pip install depthai

- Yet, I get Requirement already satisfied: depthai in /home/arnoud/.local/lib/python3.8/site-packages (2.17.3.0), while robothub works with v 2.19.1.

- Doing python3.9 -m pip install depthai gives v2.16.0.

- Doing python3.9 -m pip install depthai== shows that v2.19.1, v2.20.1, v2.20.2 and v2.21.2 are also available.

- So, I did python3 -m pip install depthai==2.19.1.0 which installed depthai-2.19.1.0-cp38. No firmware update as far as I can see.

- Instead, downloaded wget -qO- https://docs.luxonis.com/install_dependencies.sh. That works, but git pull fails, because I need to choose a branch.

- I could do a pull in ~/git/depthai-python. Doing python3 install_requirements.py in examples installed depthai-2.21.2, but gives the following error for tensorflow and numpy:

Successfully uninstalled opencv-python-4.5.1.48

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

matplotlib 3.4.2 requires kiwisolver>=1.0.1, which is not installed.

openvino 2022.1.0 requires numpy<1.20,>=1.16.6, but you have numpy 1.24.3 which is incompatible.

openvino-dev 2022.1.0 requires numpy<1.20,>=1.16.6, but you have numpy 1.24.3 which is incompatible.

openvino-dev 2022.1.0 requires numpy<=1.21,>=1.16.6; python_version > "3.6", but you have numpy 1.24.3 which is incompatible.

openvino-dev 2022.1.0 requires opencv-python==4.5.*, but you have opencv-python 4.7.0.72 which is incompatible.

tensorflow 2.6.0 requires numpy~=1.19.2, but you have numpy 1.24.3 which is incompatible.

tensorflow 2.6.0 requires typing-extensions~=3.7.4, but you have typing-extensions 4.2.0 which is incompatible.
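- The conflicts reported above are plain version-range checks. A minimal sketch, assuming purely numeric dotted versions (no pre-release tags); parse and satisfies are hypothetical helpers for illustration, not pip's resolver. Note that pip's ~=1.19.2 is equivalent to >=1.19.2,<1.20:

```python
def parse(version):
    """Turn '1.24.3' into (1, 24, 3); assumes numeric components only."""
    return tuple(int(part) for part in version.split("."))


def satisfies(version, lower, upper_exclusive):
    """True if lower <= version < upper_exclusive (tuple comparison)."""
    return parse(lower) <= parse(version) < parse(upper_exclusive)


# openvino 2022.1.0 requires numpy<1.20,>=1.16.6
print(satisfies("1.24.3", "1.16.6", "1.20"))  # False: 1.24.3 is too new
# tensorflow 2.6.0 requires numpy~=1.19.2, i.e. >=1.19.2,<1.20
print(satisfies("1.19.5", "1.19.2", "1.20"))  # True
```

A numpy in the 1.19.x range would satisfy both numpy requirements listed above; whether the depthai examples still run with such a version is not something this sketch checks.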

- At least python3 ColorCamera/rgb_preview.py works (although RobotHub is still identifying):

Connected cameras: [{socket: RGB/CENTER/CAM_A, sensorName: IMX378, width: 4056, height: 3040, orientation: AUTO, supportedTypes: [COLOR], hasAutofocus: 1, name: }]

Usb speed: SUPER

Device name:

- Went to the bootloader directory in examples. This bootloader seems to be intended for the PoE version of the OAK. At least python3 bootloader_version.py gave:

Found device with name: 3.8

Version: 0.0.24

USB Bootloader - supports only Flash memory

Memory 'FLASH' not available...

- The same happens with the OAK-D. Trying python3 cam_test.py, which fails on qt.qpa.plugin: Could not load the Qt platform plugin "xcb" in "/home/arnoud/.local/lib/python3.8/site-packages/cv2/qt/plugins" even though it was found.

- The same command python3.9 cam_test.py fails on cannot import name 'QtCore' from 'PyQt5' (/usr/lib/python3/dist-packages/PyQt5/__init__.py)

- Installing the latest version with python3.9 -m pip install pyqt5==5.15.9.

- According to the device documentation, a firmware update is uploaded together with the pipeline.

- Yet, running this script gives:

MxId: 14442C10710891D000

USB speed: UsbSpeed.SUPER

Connected cameras: [, , ]

Traceback (most recent call last):

File "device_pipeline.py", line 17, in <module>

input_q = device.getInputQueue("input_name", maxSize=4, blocking=False)

RuntimeError: Queue for stream name 'input_name' doesn't exist
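- The error suggests that the pipeline has no host-to-device stream called 'input_name'; the name in the documentation snippet looks like a placeholder. A minimal sketch, assuming the depthai 2.x Python API, where an input queue only exists for a stream name that was set on an XLinkIn node. Linking that node to the colour camera's control input and adding an 'rgb' preview output are my choices for illustration, not part of the documentation snippet:

```python
STREAM_NAME = "input_name"  # must match the name passed to getInputQueue


def build_pipeline():
    """Build a pipeline whose XLinkIn node defines the input stream."""
    import depthai as dai  # imported lazily, so this file loads without depthai

    pipeline = dai.Pipeline()
    cam = pipeline.create(dai.node.ColorCamera)

    # Host -> device stream; this is what makes getInputQueue(STREAM_NAME) valid.
    xin = pipeline.create(dai.node.XLinkIn)
    xin.setStreamName(STREAM_NAME)
    xin.out.link(cam.inputControl)

    # Device -> host preview stream (assumed name "rgb").
    xout = pipeline.create(dai.node.XLinkOut)
    xout.setStreamName("rgb")
    cam.preview.link(xout.input)
    return pipeline


def open_input_queue():
    """Upload the pipeline and request the input queue (needs a connected OAK)."""
    import depthai as dai

    with dai.Device(build_pipeline()) as device:
        device.getInputQueue(STREAM_NAME, maxSize=4, blocking=False)
        print("input queue created for stream", STREAM_NAME)


if __name__ == "__main__":
    open_input_queue()
```

I have not run this against the device here, so treat it as a starting point rather than a verified fix.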

- Went to ~/git/depthai-python/examples/IMU and ran python3 imu_firmware_update.py. That gave:

IMU type: BNO086, firmware version: 3.9.7, embedded firmware version: 3.9.9

Warning! Flashing IMU firmware can potentially soft brick your device and should be done with caution.

- Performed the update for OAK-D. For the OAK-1 I get:

IMU type: NONE, firmware version: 0.0.0, embedded firmware version: 0.0.0

- So, I didn't update the OAK-1.
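- The decision above (update the OAK-D, skip the OAK-1) can be read off the reported version line. A minimal sketch that parses such a line; parse_imu_line and update_makes_sense are hypothetical helpers, and the rule (an IMU is present and the embedded firmware differs from the running one) is my assumption about when flashing is worthwhile:

```python
import re

PATTERN = re.compile(
    r"IMU type: (?P<type>\w+), firmware version: (?P<current>[\d.]+), "
    r"embedded firmware version: (?P<embedded>[\d.]+)"
)


def parse_imu_line(line):
    """Extract (imu_type, current_version, embedded_version) from the line."""
    match = PATTERN.search(line)
    if match is None:
        raise ValueError(f"unexpected IMU line: {line!r}")
    return match.group("type"), match.group("current"), match.group("embedded")


def update_makes_sense(line):
    """Assumed rule: an IMU is present and the two versions differ."""
    imu_type, current, embedded = parse_imu_line(line)
    return imu_type != "NONE" and current != embedded
```

With the two lines logged above, this returns True for the OAK-D (3.9.7 versus 3.9.9) and False for the OAK-1 (type NONE).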

- Strangely enough, the previews in RobotHub and on localhost are gone, because no stream exists. Install v2.19.1 again?

- At least python3 ColorCamera/rgb_preview.py still works for the OAK-D. Same for python3 StereoDepth/depth_preview.py. Also python3 IMU/imu_version.py works (v 3.9.9).

- Went back to ~/git/depthai. Did git checkout main, which showed that I was 950 commits behind. Did git pull.

- Did python3 install_requirements.py, which upgraded again to 2.21.2.0. Yet, python3 depthai_demo.py failed with [14442C10710891D000] [3.8] [1.687] [StereoDepth(7)] [error] RGB camera calibration missing, aligning to RGB won't work

Stopping demo..

- Maybe I should look at the calibration documentation.

- Another option is to run some tests with robothub-oak.

- More details on a firmware update for the OAK Series 3 can be found at Google docs, yet this seems not to apply to my device.

- Checked python3 depthai_sdk/examples/CameraComponent/camera_control.py, which worked, as did camera_preview.sh (which also showed the depth).

- RobotHub has a Troubleshoot section. One hint is to look for the .robothub-error-log.

- In the robothub-monitor.log reference is made to podman, so maybe I should do robothub-ctl podman info. There I see for the plugins - authorization: null

June 1, 2023

- Looked at the 1st year courses of the MSc Robotics at TU Delft. Julian Kooij gives Robot Software Practicals, which is based on A Gentle Introduction to ROS (2013).

- Read Chapter 1.

- At the end of Chapter 2 there is a tool I didn't know: roswtf.

- Did a test on my catkin_ws on nb-dual. After sourcing both the noetic/setup.bash and devel/setup.bash, I did roscd zed_capture, followed by roswtf launch/zedm_capture.launch. No errors or warnings.

- The book has examples, but no exercises.

- Although it is a practical, the assignments count for only 25% of the grade.


- The course Machine Perception is not based on a book.

- The course Machine Learning for Robotics is based on Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow.


- Also looked into the 2nd edition of Introduction to AI Robotics. SLAM is covered in two pages.

- The book Cognitive Robotics is a collection of contributions of different authors.


- Shaodi is really in favor of using Peter Corke's book. This only covers 2D Mapping, so I should read Chapter 6.

- The book is using Python, so we should use the ROS-Python interface. Should check how far this goes.

- On beginner level, the following tutorials are Python based:

- On intermediate level, the following tutorials are Python based:

- On advanced level, the following tutorial is Python based:

- We could use the TurtleBot, RAE or JetRacer. Shaodi expects that ORB-SLAM runs on those platforms: I would like to check.

- There is a ROS ORB-SLAM package, but it is based on ROS1 Melodic, although the GitHub repository has a ROS2 branch (2 years old). This package supports the Intel RealSense r200 and D435.

February 24, 2023

- Cool teaser for a robotics degree at Technische Hochschule Würzburg-Schweinfurt.

January 12, 2023

- A new Grassroot will start on February 8. Deadline March 24.

Previous Labbooks