Started Labbook 2023.

December 23, 2022

- Unpacked one of last year's student solutions.

- Activated the environment with conda activate opencv4.3.

- Modified the IP address in modules/globals.py and started the day1_code with python config.py.

- Robot says 'Hello World' and starts walking (but fails on grass without standing up).

- Got one warning to be checked about qi application:

Setting dependencies for module 'main'...

Setting dependencies for module 'globals'...

Starting system...

[W] 1671799827.994559 20328 qi.path.sdklayout: No Application was created, trying to deduce paths

[I] 1671799828.025765 20328 qimessaging.session: Session listener created on tcp://0.0.0.0:0

- The Quest1 should also be updated with new dates and new versions.

- Also with rubber tiles the robot Moos falls. Should check fall detection / other settings.

- Started with day2_code. Here the walking works fine, although I get a warning on the Fall-detection:

Could not switch off FallManager.

Subscribing to video service-----------------------------------

- A number of images are saved. The only messages are that no blobs are detected (which was true in this case):

1.18999993801

1.19999992847

1.27899996042

5.11999999285

5.12789999604

1.66799996972

Saving image image1.png

Saving image hsv_image1.png

Saving image foto.png

Saving image foto2.png

Saving image foto3.png

- The robot walks now perfectly forwards, only the turning fails.

- Repeated experiment with a sign in front of the robot. The code now fails on missing 'vierkant':

\vision_v2.py", line 248, in findContour

crop_img = cv2.drawContours(img2, [vierkant], -1, (0, 255, 0), 1)

UnboundLocalError: local variable 'vierkant' referenced before assignment
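An UnboundLocalError like this usually means that vierkant is only assigned inside a branch that was skipped, presumably because no suitable contour was found in this frame. A minimal sketch of a guard, assuming a hypothetical list of (contour, area) candidates rather than the actual findContour code of the students:

```python
def pick_square(candidates, min_area=100.0):
    """Return the largest candidate contour, or None when nothing qualifies.

    `candidates` is a hypothetical list of (contour, area) pairs.
    """
    vierkant = None          # always bound, even if the loop never assigns
    best_area = min_area
    for contour, area in candidates:
        if area >= best_area:
            vierkant, best_area = contour, area
    return vierkant


square = pick_square([])
if square is None:
    print("No square found, skipping drawContours")
```

The caller can then skip the cv2.drawContours call when None is returned, instead of crashing.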

- Note that this was with vision_v2, while vision_v3 is available.

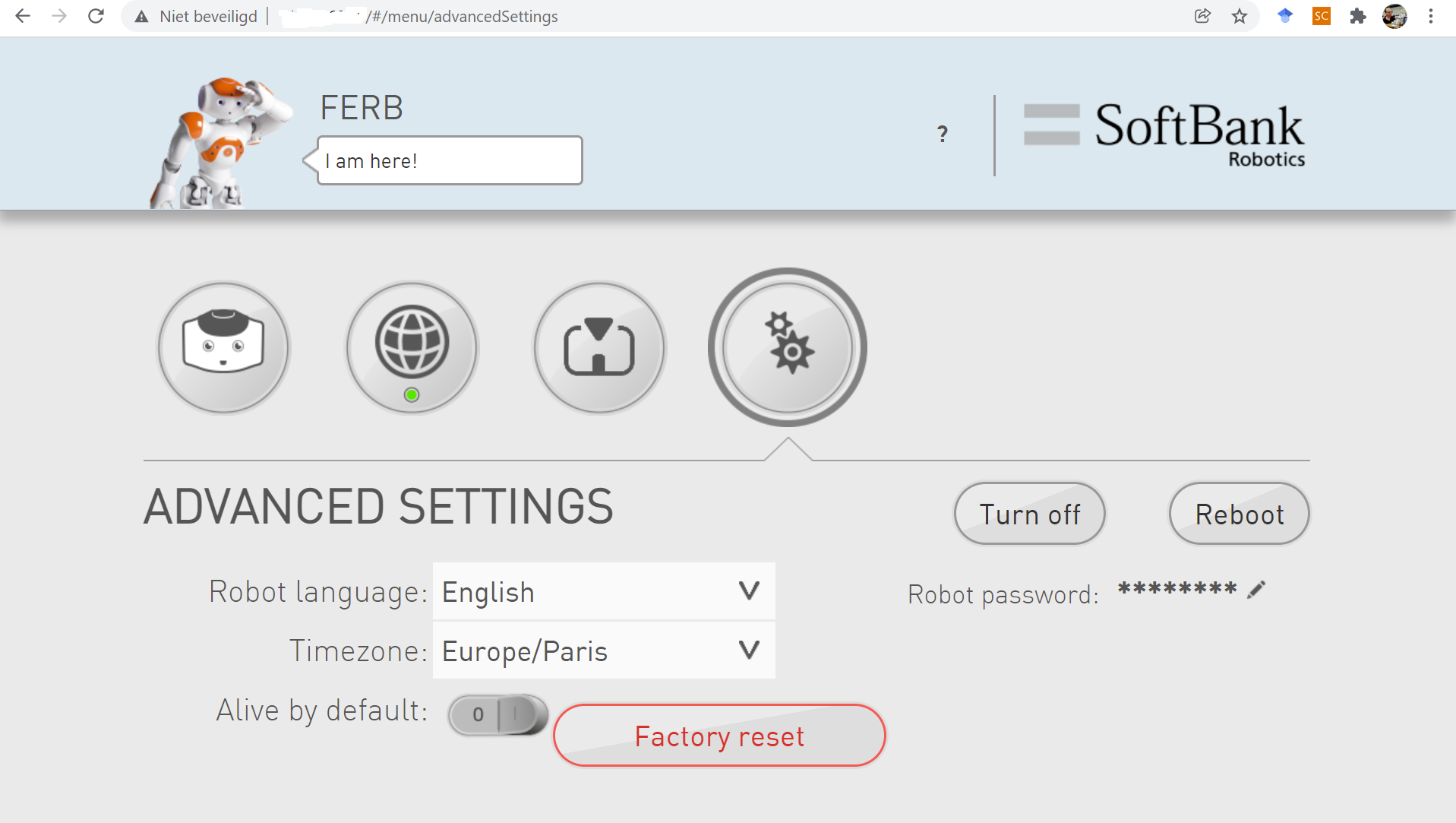

- Repeated the experiment with vision_v3 and Fall detection permitted (set in http://moos.local/advanced/#/settings)

- The vision_v3 should not only be updated in config.py, but also in main.py. Note that in the latest code main_v6.py is used, which points to motion_v6.py

- The readme says:

v6.0 Arnoud Visser - modified the stiffness at the end of setHead and changeHead, so that the Nao keeps its head up

v5.0 Arnoud Visser - modified the speed, stiffness and sleep of the setHead and changeHead

v4.0 Arnoud Visser - added interface to control ALAutonomousLife

v3.0 Arnoud Visser - use more NaoQi v2.8.5 commands

v2.0 Kyriacos Shiarlis - 25 November 2015

- The loading of vision_v3 failed because it still used vision_v2 as class-name.

- That helps, now the robot finishes successfully:

Unsubscribing from video service-----------------------------------

Ready, waiting 5s before rest

After sleep, sit and rest

System finished...

Framework terminiated succesfully

- Tested the code again with 'go right' sign, but still the robot claims to be ready.

- The saved hsv-image seems also not the right color-space, although the blurred image was OK:

- The students based their thresholding of the HSV image on this stackoverflow post.

- Stupid, the main_v3 contained the students' solution, so it isn't strange that nothing happens with the provided main_v6.

- Still, the code fails on a missing function takePicture (line 41 of main_v3).

- Same problem: vision_v2 contains the solutions of the students.

- This works better, although when switching off the fall-detection I now receive 'Could not modify background movement.'

- The robot recognizes the 'Going right' sign, but doesn't respond to the 'Turn back' sign (also no 'vierkant' crash).

- In the day1 code the motion module is not loaded (I think it should be). In motion_v6 our own setBackGroundMovement function is called, which calls setAutonomousAbilityEnabled, as explained in the autonomous abilities management documentation.

- Made quite some modifications to quest1 (both description and code). Yet, starting the main fails for the moment:

Module 'main ' not loaded.

Module 'motion_v6' loaded as 'motion'.

Module 'globals' loaded as 'globals '.

Setting dependencies for module 'motion'...

Error: (getModule) Module 'globals' cannot be used, it is not loaded.

- After removing the space after main and globals, all modules are loaded. Also added a speechProxy. Now the code works when the three modules are registered:

Module 'globals' loaded as 'globals'.

Module 'motion_v6' loaded as 'motion'.

Main-Module loaded: 'main' as 'main'.

Setting dependencies for module 'motion'...

Setting dependencies for module 'main'...

Setting dependencies for module 'globals'...

Starting system...

Could not switch off FallManager.

After sleep, sit and rest

System finished...

Framework terminiated succesfully
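The loading failure above came down to a trailing space in a module name ('main ' and 'globals '). A small sketch of how such names could be normalised before registration; the dictionary layout is a hypothetical stand-in, not the actual structure used in config.py:

```python
def clean_module_table(modules):
    """Strip stray whitespace from module file names and aliases."""
    return dict((name.strip(), alias.strip()) for name, alias in modules.items())


# Hypothetical table containing the trailing spaces that broke the loading.
modules = {"main ": "main", "motion_v6": "motion", "globals": "globals "}
print(sorted(clean_module_table(modules).items()))
```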

- Only the FallManager is strange, because that worked before. Yet, now the background movement works. I couldn't find any troubleshooting information on the error message, because that was one of my own.

December 20, 2022

- The code of module 12 also fails, because the module dnn is not available in opencv 3.1.0

- Did pip install opencv-contrib-python in the Anaconda2 shell, which installed v4.2.0.32 of the contribution.

- Now the face recognition works.

- The object tracking still fails on the openh264 library.

- Also, I had to update from tracker = cv2.Tracker_create("KCF") to tracker = cv2.TrackerKCF_create().

- Checked opencv-version with cv2.__version__, which gives 4.2.0 (with python2?!)

- Checked possible versions with pip install opencv-contrib-python==, which gave a range between 3.2.0.7 and 4.2.0.32. Also checked opencv versions with >pip install opencv-python==, which gave a range between 3.1.0.0 and 4.3.0.38.

- According to this post, python2 support was dropped in v4.3.0.36, which makes 4.2.0.32 the latest safe option.

- Yet, doing a pip install instead of a conda install is a bit of a conflict.

- The downside seems to be:

- Once pip has been used, conda will be unaware of the changes.

- To install additional conda packages, it is best to recreate the environment.

- Did a >conda search opencv*, to see if I could have installed v4.2.0.32 via conda. Not in the default channel (only python3 versions).

- Did conda search -c menpo opencv*. For py27 the latest option is 3.1.0.

- Also added channel conda-forge with conda config --add channels conda-forge. (Doing it twice brings a channel on top). Now I see a slightly newer opencv version: v3.2.0. Yet, the format is np111py27_20*. No idea what the last number is for.
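As far as I understand the usual conda convention, a build string such as np111py27_20 encodes the numpy version (1.11), the python version (2.7) and a build number (20). The sketch below parses that pattern; the regular expression is my own assumption and only covers this simple form:

```python
import re


def parse_build_string(build):
    """Split a conda build string such as 'np111py27_20' into its parts."""
    match = re.match(r"^(?:np(\d)(\d+))?(?:py(\d)(\d+))?_(\d+)$", build)
    if match is None:
        return None
    np_major, np_minor, py_major, py_minor, number = match.groups()
    return {
        "numpy": "%s.%s" % (np_major, np_minor) if np_major else None,
        "python": "%s.%s" % (py_major, py_minor) if py_major else None,
        "build_number": int(number),
    }


print(parse_build_string("np111py27_20"))
```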

- According to this post, conda environments are entirely compatible with pip packages.

- So, it is a possibility to do in the conda environment >pip install opencv-python==4.2.0.32 and >pip install opencv-contrib-python==4.2.0.32, which will make it much easier to find documentation and examples.

- The remaining question is: will the BBR-code work for v4.2.0.32?

- One way to find out: created an environment with conda create --name opencv4.3 python=2.7 (note that opencv4.2 would have been a better name), which comes with python-2.7.15. Installed opencv-python and opencv-contrib-python with pip. Added pynaoqi-2.8.7.4 to PYTHONPATH (and restarted the Anaconda2 prompt). import os; user_paths = os.environ['PYTHONPATH'].split(os.pathsep) shows the current settings.
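The one-liner for inspecting PYTHONPATH raises a KeyError when the variable is not set at all. A slightly more forgiving sketch, where the helper names are my own and the search for "pynaoqi" is just an example:

```python
import os


def python_path_entries(environ=None):
    """Return the PYTHONPATH entries as a list (empty when the variable is unset)."""
    environ = os.environ if environ is None else environ
    raw = environ.get("PYTHONPATH", "")
    return [entry for entry in raw.split(os.pathsep) if entry]


def has_entry_containing(entries, fragment):
    """Case-insensitive check whether any entry mentions the given fragment."""
    return any(fragment.lower() in entry.lower() for entry in entries)


entries = python_path_entries()
print(entries)
print(has_entry_containing(entries, "pynaoqi"))
```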

December 19, 2022

- Next module 12 is youtube - Face detection.

- It uses a caffe-model which can be downloaded by a script which can be found at OpenCV github. The download_models.py script uses the information defined in the models.yml file, which also has references to, for instance, yolo3.

- Module 13 is on Object detection.

- Module 14 is on Human pose detection.

- The last half hour is an interview on how to get a job in CV.

- Is actually more interesting than I expected, because Satya Mallick tries to define what AI, ML and CV actually is.

- Next video is the 4h course on everything you need to know about Python for CV, which is not the official OpenCV course, but one presented at freeCodeCamp (including accompanying github code). The course starts directly with reading in images.

- Looked at the tracker code. The documentation of the trackers v3.1 is C++ based. According to this post the code should work.

- According to this post, v3.2 is needed.

- Found example code for v4.* in here. Switched to v3.1.0, which gave The 'opencv/opencv' repository doesn't contain the 'samples/python/tracker.py' path in '3.1.0'.. Even for v3.4 it doesn't exist.

- So, the best choice for samples seems to be the v3.4 python samples, although v3.1.0 python samples also exist.

- Yet, the tracking was at that time part of the opencv_contrib repository, which had its own samples for each module.

- Looking at this code, the following python code should work for v3.1 (which is strange, because GOTURN was introduced much later):

if tracker_type == 'BOOSTING':

    tracker = cv2.Tracker_create("BOOSTING")

elif tracker_type == 'MIL':

    tracker = cv2.Tracker_create("MIL")

elif tracker_type == 'KCF':

    tracker = cv2.Tracker_create("KCF")

elif tracker_type == 'CSRT':

    tracker = cv2.Tracker_create("CSRT")

elif tracker_type == 'TLD':

    tracker = cv2.Tracker_create("TLD")

elif tracker_type == 'MEDIANFLOW':

    tracker = cv2.Tracker_create("MEDIANFLOW")

elif tracker_type == 'GOTURN':

    tracker = cv2.Tracker_create("GOTURN")

elif tracker_type == 'MOSSE':

    tracker = cv2.Tracker_create("MOSSE")

else:

    tracker = cv2.Tracker_create("MIL")
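Because the tracker factory changed between OpenCV releases (cv2.Tracker_create("KCF") in the 3.1 era versus cv2.TrackerKCF_create() later, and, as far as I know, a cv2.legacy namespace in recent 4.x contrib builds), a small helper can hide the difference. This is a sketch under those assumptions; the cv2 module is passed in explicitly so the function can be tried without OpenCV installed:

```python
def create_tracker(cv2_module, name="KCF"):
    """Create a tracker by name across the different OpenCV API generations."""
    # Old API: one generic factory taking the tracker name.
    if hasattr(cv2_module, "Tracker_create"):
        return cv2_module.Tracker_create(name)
    # Newer API: one factory per tracker, possibly in the legacy namespace.
    factory_name = "Tracker%s_create" % name
    legacy = getattr(cv2_module, "legacy", None)
    for namespace in (cv2_module, legacy):
        factory = getattr(namespace, factory_name, None)
        if factory is not None:
            return factory()
    raise ValueError("Tracker %r is not available in this OpenCV build" % name)


# Usage (requires opencv-contrib-python):
#   import cv2
#   tracker = create_tracker(cv2, "KCF")
```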

- Restarted the kernel, but code still works. Yet, no new mp4 is created, only the one provided with the notebook.

- Problem seems to be in reading in the original video:

Failed to load OpenH264 library: openh264-1.4.0-win64msvc.dll

Please check environment and/or download library from here: https://github.com/cisco/openh264/releases

[libopenh264 @ 000000000ce32cc0] Incorrect library version loaded

Could not open codec 'libopenh264': Unspecified error

December 16, 2022

- Continue with module 09 - youtube - Panorama stitching.

- Unfortunately stitching seems to be only available in OpenCV 4.*

- Continue with module 10 - youtube - HDR.

- Next time module 11 - youtube - Object tracking

December 15, 2022

- Finished the Video-writing module 06.

- More relevant for BBR is module 07 - Filters. Lecture is available on youtube. No jupyter notebook, only a python script because it is camera based. The filters are activated by 'c', 'b' or 'f'.

- Next is module 08 - Image Alignment - youtube, which could be also handy for the white mask around the circles. The code was quite a step more complex than the code demonstrated in the modules before. Quite impressive.

- The code of module 08 fails in step 3, because the DescriptorMatcher uses an attribute which is not defined in this version of OpenCV. Changed the call to a string with cv2.DescriptorMatcher_create('BruteForce-Hamming'), instead of cv2.DescriptorMatcher_create(cv2.DESCRIPTOR_MATCHER_BRUTEFORCE_HAMMING) which seems to work.
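The string form of the matcher type works in this OpenCV version; to keep the notebook running on builds that do define the constant, the lookup can fall back to the string. A minimal sketch, with the cv2 module passed in as an argument so the fallback can be checked without OpenCV:

```python
def hamming_matcher(cv2_module):
    """Create a brute-force Hamming matcher, with or without the named constant."""
    matcher_type = getattr(
        cv2_module, "DESCRIPTOR_MATCHER_BRUTEFORCE_HAMMING", "BruteForce-Hamming"
    )
    return cv2_module.DescriptorMatcher_create(matcher_type)


# Usage:
#   import cv2
#   matcher = hamming_matcher(cv2)
```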

- Tomorrow module 09 - youtube.

December 14, 2022

- Interesting would be to look into Robot Behavior book (March 2023).

- Started with the free OpenCV Crash course of 3h.

- First step was to install Anaconda, so good moment to test the BBR installation instructions.

- Anaconda2-2019.10-Windows-x86_64.exe still seems the latest Anaconda2 distribution.

- Note that in the OpenCV installation instructions they recommend to include anaconda to the path (not recommended option). They install opencv with pip install opencv-contrib-python.

- The command conda create --name BBR python=2.7 works, python2.7.18 is installed.

- Do not see numpy, etc. Did conda activate BBR. The right python is called, but no module numpy yet.

- Opened an Anaconda2 prompt, and continued with conda install -c menpo opencv3.

- Note that the Anaconda2 prompt uses a different version of python (2.7.16). In Anaconda numpy is already installed!

- Also the command jupyter notebook already works. Started with module 1 of the Crash Course.

- The module 01 notebook loads all 4 libraries. The command print(cv2.__version__) gives version 3.1.0.

- The 3h lecture is available on youTube, with sections for each module.

- The SDKs on the Aldebaran support page are quite screwed up. For Windows they point to the Linux version of 2.8.7. For the other OSes only the 2.8.6 and 2.8.5 versions are linked.

- Luckily I have already copied all three versions to the 'Too new version' directory on Canvas.

- In the 02 Module for the first time something goes wrong. In the 'Resize while maintaining aspect ratio'. Solved this with a late conversion to ints (otherwise the aspect_ratio is rounded to zero):

desired_width = 100.0

cropped_region_shape = cropped_region.shape[1]

aspect_ratio = desired_width / cropped_region_shape

desired_height = cropped_region.shape[0] * aspect_ratio

dim = (int(desired_width), int(desired_height))

# Resize image

resized_cropped_region = cv2.resize(cropped_region, dsize=dim, interpolation=cv2.INTER_AREA)

plt.imshow(resized_cropped_region)

- In Module 04 the Read Background image cell fails again, which I repaired with aspect_ratio = float(logo_w) / img_background_rgb.shape[1].
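Both fixes above work around the integer division of Python 2, where 100 / 400 gives 0. A small sketch that makes the conversion explicit in one place (the function name is my own); putting from __future__ import division at the top of the script would be an alternative:

```python
def resize_dims(width, height, desired_width):
    """Return integer (width, height) that keeps the aspect ratio of the input."""
    # float() forces a true division, also on Python 2.
    aspect_ratio = float(desired_width) / float(width)
    return int(desired_width), int(round(height * aspect_ratio))


print(resize_dims(400, 300, 100))  # (100, 75)
```

The resulting tuple can be passed directly as dsize to cv2.resize.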

- So, this course has quite some overlap with the first part of the OpenCV for Beginners course.

- Module 4 combines thresholding, bitwise operations and masking in one module.

- Module 5 is again not a jupyter notebook, but the code seems to work OK in the Anaconda2 prompt. Now on 1/3 of the course.

December 7, 2022

December 2, 2022

- Watched this video to see how additional python packages can be loaded locally on the Nao robot: mount -o rw,remount /.

- Tried pip install matplotlib==2.2.5. Still not much progress. With pip 8.1.1 the command python2 -m pip install --upgrade pip==. Looked on nb-dual which versions of pip exist. Installed a newer version of pip on the Nao moos with pip install --upgrade pip==9.0.3. That gives Successfully installed pip-9.0.3.

- Yet, still no progress with pip install matplotlib==2.2.5. Upgraded pip to 10.0.1. Still no progress.

- Tried to install matplotlib==2.2.4 and pillow=3.1.2 (v3.1.1 was already installed according to pip list). Still no progress.

- Upgraded pip to v18.1. Still no progress.

- Upgraded pip to v19.3.1. Started getting the DEPRECATION warning that Python 2.7 has reached the end of its life.

- The command pip --dry-run doesn't work (yet) for pip v19.3.1. Tried pip install --python-version 2.7.11 --no-deps --target /usr/lib/python2.7/site-packages/ matplotlib==2.2.5, still no progress.

- Switched back to the nao user, and tried python -m pip install --user matplotlib==2.2.5. Still no progress

- Did python -m pip install --user --upgrade pip==20.0.2. Now I have /home/nao/.local/bin/pip. Still no progress.

- Did a full python -m pip install --user --upgrade pip which installed pip-20.3.4. Receive the warning that pip 21.0 will no longer support python2.7

- Did the same command again with the -v option. Now I see that pkg-config is not installed.

December 1, 2022

- Wrote a simple program called record_image.py to grab a single image.

- Received a shot which is None, which could be solved with a nao restart
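On the robot the camera is owned by NAOqi, so a single image is normally grabbed through ALVideoDevice. The sketch below is not the actual record_image.py; it assumes a proxy or service object for ALVideoDevice and uses the constants 2 (kVGA) and 13 (kBGRColorSpace). It returns None instead of crashing when no shot comes back, and always releases the subscription, since leftover subscriptions may be one reason for None results (an assumption on my side):

```python
def grab_frame(video_proxy, camera_index=0, name="record_image"):
    """Grab one frame via ALVideoDevice; return (width, height, data) or None."""
    resolution_vga = 2      # kVGA, 640x480
    colorspace_bgr = 13     # kBGRColorSpace, matches the channel order of OpenCV
    fps = 5
    handle = video_proxy.subscribeCamera(
        name, camera_index, resolution_vga, colorspace_bgr, fps
    )
    try:
        shot = video_proxy.getImageRemote(handle)
    finally:
        video_proxy.unsubscribe(handle)   # always release the subscription
    if shot is None:
        return None
    # Index 0 is the width, 1 the height and 6 the raw pixel buffer.
    return shot[0], shot[1], shot[6]
```

Camera index 0 is the top camera and 1 the bottom camera.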

- After restarting I have stiffness again. Without stiffness the head falls back. When sitting, the head has to look upwards. A solution could be to use the top camera instead of the bottom camera.

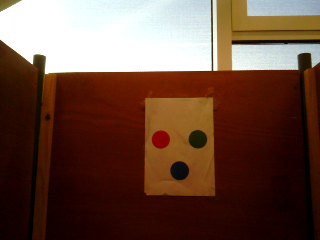

- This is the result:

- Note that there is a lot of sunlight coming over the edge, so my contour_turn_around script no longer works.

- Moved the robot closer and used the top camera. This is the result:

- Moved the robot to a standing position (knees straight). This is the result:

- The bottom camera gives a much better view. Moved the robot a little back, yet it moved its head:

- Changed the Head angles to [0.0,-0.6] as described on January 27, 2022:

- Maybe I should also turn off Alive (did it via Choregraphe). Now the robot is standing still and not constantly correcting its view.

- Still the image is too bright, although it is now nearly sunset. Moved to [0.0,-0.45] which gave a good view:

- Now at least the orange blob is recognized.

- Changed the green H-range from [45,55] to [50,75]. Changed the blue range from [102, 112] to [105, 116]. All three blobs recognized, although green can be better (and blue slightly)

- Looked only at the green histogram and changed the S-range from [100,255] to [0,255] and the V-value from [100,200] to [100,150]

- Looked only at the blue histogram and changed also here the S-range from [100,255] to [0,255]. Now the recognition looks OK:
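The tuned ranges can be collected in one table. The sketch below tests a single HSV pixel against them with the same inclusive bounds that cv2.inRange uses. The green values and the H ranges come from the notes above; the S/V bounds for orange and the V bounds for blue are not recorded here and are assumptions:

```python
# (lower, upper) bounds per blob as (H, S, V), OpenCV convention (H in 0..179).
RANGES = {
    "orange": ((0, 100, 100), (20, 255, 255)),   # S and V bounds are assumptions
    "green": ((50, 0, 100), (75, 255, 150)),
    "blue": ((105, 0, 100), (116, 255, 200)),    # V bounds are an assumption
}


def in_range(pixel, lower, upper):
    """Inclusive per-channel test, like cv2.inRange applied to one pixel."""
    return all(lo <= value <= hi for value, lo, hi in zip(pixel, lower, upper))


def classify(pixel):
    """Return the names of all blobs whose range contains the HSV pixel."""
    return [
        name
        for name, (lower, upper) in sorted(RANGES.items())
        if in_range(pixel, lower, upper)
    ]


print(classify((60, 30, 120)))  # ['green']
```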

November 30, 2022

- Tried to record an image as specified in module 4-1.

- Yet, on moos I get the error that the device is already busy:

libv4l2: error setting pixformat: Device or resource busy

VIDEOIO ERROR: libv4l unable to ioctl S_FMT

libv4l1: error setting pixformat: Device or resource busy

VIDEOIO ERROR: libv4l unable to ioctl VIDIOCSPICT

- Under WSL Ubuntu 18.0 opencv is not installed yet for python2.7, with WSL Ubuntu 20.04 I get failed to capture from webcam.

- Under CMD I get another error (although the webcam-light is now on):

[ WARN:0] global D:\a\opencv-python\opencv-python\opencv\modules\videoio\src\cap_msmf.cpp (376) `anonymous-namespace'::SourceReaderCB::OnReadSample videoio(MSMF): OnReadSample() is called with error status: -1072875772

[ WARN:0] global D:\a\opencv-python\opencv-python\opencv\modules\videoio\src\cap_msmf.cpp (388) `anonymous-namespace'::SourceReaderCB::OnReadSample videoio(MSMF): async ReadSample() call is failed with error status: -1072875772

[ WARN:1] global D:\a\opencv-python\opencv-python\opencv\modules\videoio\src\cap_msmf.cpp (1022) CvCapture_MSMF::grabFrame videoio(MSMF): can't grab frame. Error: -1072875772

failed to capture from webcam

[ WARN:1] global D:\a\opencv-python\opencv-python\opencv\modules\videoio\src\cap_msmf.cpp (438) `anonymous-namespace'::SourceReaderCB::~SourceReaderCB terminating async callback

November 29, 2022

- Once we have the contours, it would be good to perform sorted() with as key the contourArea, as indicated in Intruder detection application video

- Unfortunately, no access anymore to the OpenCV course.

- The erosion of the foreground mask is also explained in Motion detection video.

- Added the following snippet into the code:

# three return values (image, contours, hierarchy) for OpenCV 3.x, two return values (contours, hierarchy) for OpenCV 2.x and 4.x

im2, contours, hierarchy = cv2.findContours(opening, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

print("Number of contours found = {}".format(len(contours)))

contours_sorted = sorted(contours, key=cv2.contourArea, reverse=True)

for i, single_contour in enumerate(contours_sorted):

    print("Area {} of contour = {}".format(i,cv2.contourArea(single_contour)))

- Which gives:

Number of contours found = 3

Area 0 of contour = 751.5

Area 1 of contour = 636.5

Area 2 of contour = 634.0

November 28, 2022

- Added a page on the OpenCV course to Canvas.

- Adjusted the module-3 exercise to work with the TurnAroundSign:

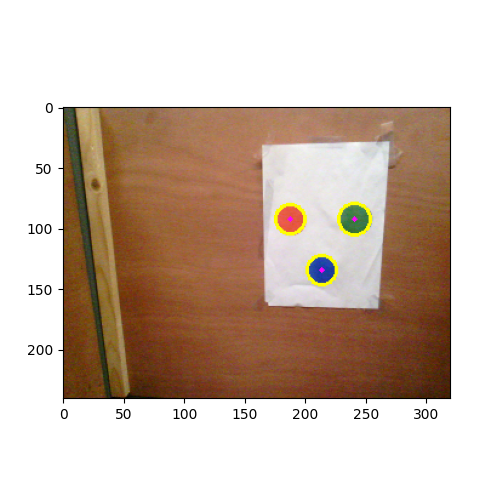

- With Hue in the range 0-20 the Orange blob is well recognized.

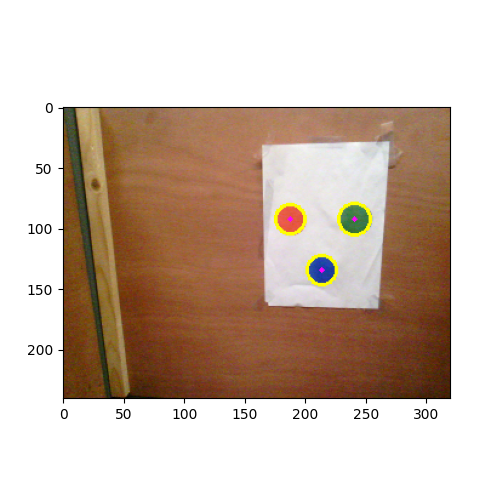

- With Hue the Green blob is in the range 45-55 and the Blue blob between 102-112.

- Did some Opening and Closing to get rid of the white lines on the ground.

- Still 10 contours are visible, but with External only the circular blobs are found.

- Draw the center and fitted circles around the blobs (in purple and yellow):

- Tried the code also on moos (running NaoQi 2.8.7.4). import cv2 works, but import matplotlib.pyplot fails.

- Tried python -m pip install matplotlib and python -m pip install --user matplotlib==2.2.5.

- Response is Collecting matplotlib==2.2.5

Using cached https://files.pythonhosted.org/packages/10/5f/10c310c943f29e67976dcc26dccf9305a5a9bc7483e631ee74a0f95aa5b2/matplotlib-2.2.5.tar.gz, but nothing happens after that. Tried also to update pip, but that is readonly (also as root).

- At least numpy works, so I have natively opencv 3.1.0 and numpy 1.10.4.

- Made a version without the draws. findContours works, but the return value seems to have changed (no hierarchy, other functions complain about not-a-numpy-array).

- Looked at 3.1.0 contours tutorial. Three things are returned instead of two! That seems to work!
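Since cv2.findContours returns three values in OpenCV 3.x and two in other versions, taking the last two elements of the result works for both. A minimal sketch of that idiom (the helper name is my own):

```python
def unpack_contours(result):
    """Return (contours, hierarchy) from either a 2-tuple or a 3-tuple result."""
    contours, hierarchy = result[-2:]
    return contours, hierarchy


# Usage:
#   contours, hierarchy = unpack_contours(
#       cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE))
```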

November 23, 2022

- Continued with OpenCV for beginners

- Did the exercise of Module 1-3 without problems.

- Module 1-4 is a good one to include in BBR. Also did exercise without problems.

- Did Module 2-1, which is less relevant for BBR. The plt.show() between both subplots is not handy.

- Module 2-2 covers thresholding (without masks), so relevant for BBR. Exercise went fine, although I have to remember to run the latest file. In addition, I had to specify plt.rcParams['image.cmap'] = 'gray' and make the plotted image a bit smaller than suggested plt.figure(figsize = [9,5]).

- Module 2-3 covers thresholding with masks, so those two should be given together.

November 23, 2022

- OpenCV is offering this week free access to OpenCV for beginners

- Checking out if this is something for the Behavior-Based Robotics students & checking how much python knowledge is expected.

- The About page indicates that you should have a working knowledge of the Python programming language. If not, suggestions will be made. Have not seen those (yet).

- For students without python knowledge The Python for Beginners would be more appropriate, but that seems to be on invitation only.

- To run the code locally, they suggest to run the code in a virtual environment (see BBR Mac instructions) or via PyCharm (recommended as IDE). The BBR Windows instructions are PyCharm based. The BBR Linux instruction is a Conda virtual environment.

- Let's focus on a Windows installation for the moment. OpenCV offers two options, with or without Anaconda. Because BBR uses Anaconda, I will go for that option (for the moment).

- The suggested Individual Product is no longer available on Anaconda. Instead selected Anaconda distribution. That points to Anaconda version 2022.10 which is python3.9 based. OpenCV suggested python3.8, although their notebooks are designed for python3.9.

- When asked, I unselected the option to register Anaconda3 as my default python3.9 provider. I also didn't select the addition of Anaconda to the PATH.

- Without the 2nd option, conda as command is not found in the command prompt.

- Added C:\Packages\Anaconda3\condabin to my environment path. Now conda can be found.

- The installation procedure so far seems not recommended for Psychobiology students.

- Tried conda create --name opencv-env-python39. Fails with CondaSSLError: OpenSSL appears to be unavailable on this machine.

- Looked at this page and tried conda init --dry-run. This indicates the following changes:

modified C:\Packages\Anaconda3\Scripts\activate

modified C:\Packages\Anaconda3\Scripts\deactivate

modified C:\Packages\Anaconda3\etc\profile.d\conda.sh

modified C:\Packages\Anaconda3\etc\fish\conf.d\conda.fish

modified C:\Packages\Anaconda3\shell\condabin\conda-hook.ps1

modified C:\Packages\Anaconda3\etc\profile.d\conda.csh

modified C:\Users\avisser1\Documents\WindowsPowerShell\profile.ps1

modified HKEY_CURRENT_USER\Software\Microsoft\Command Processor\AutoRun

- That didn't help. Adding C:\Packages\Anaconda3\Library\bin to my environment path was the solution. Now conda create --name opencv-env-python39 works.
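Both problems in this installation (conda not found, and the CondaSSLError) were solved by adding a directory to the PATH. A quick check of which required directories are still missing could look like the sketch below; the comparison ignores case and trailing slashes, and the helper name is my own:

```python
import os


def missing_from_path(required_dirs, path_value, sep=os.pathsep):
    """Return the required directories that do not occur in the PATH string."""
    present = set(
        entry.strip().rstrip("\\/").lower()
        for entry in path_value.split(sep)
        if entry.strip()
    )
    return [d for d in required_dirs if d.rstrip("\\/").lower() not in present]


required = [
    r"C:\Packages\Anaconda3\condabin",
    r"C:\Packages\Anaconda3\Library\bin",
]
print(missing_from_path(required, os.environ.get("PATH", "")))
```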

- Continued with pip install --user opencv-contrib-python streamlit moviepy jupyter matplotlib ipykernel in this activated environment. The --user option is needed because I use the system version of python3.9.

- Yet, the command import cv2 gave again the circular import error (encountered last week with the Face Recognition software in @Home on Nov 9).

- Did python -m pip install --user opencv-python==4.5.4.60. Now import cv2 works.

- Next, jupyter notebook fails. My hypothesis is that this is because C:\Users\avisser1\AppData\Roaming\Python\Python39\Scripts is not in the path. jupyter.exe is there. Still, the environment cannot find it.

- Moving to this directory and starting .\jupyter.exe notebook works. cv2.__version__ indicates 4.5.4.

- Trying to install the latest PyCharm, but the installer detects an older 2020.3 version. Will try to work with this version first. Selected C:\Python39\python.exe as Previously configured interpreter. That works to print(cv2.__version__).

- PyCharm doesn't see the OneDrive, so copied the extracted directory on C:\onderwijs\BehaviorBasedRobotics.

- OneDrive is visible, as C:\Users\*\OneDrive.

- Finished the first exercise (read/write), by copying code-snippets from the examples above. 5min work.

- Looked ahead, but the Object Detection is already based on MobileNet. So, Module5 (Contour Analysis) seems a good point to break.

- Module 5: Object Detection lecture is available on YouTube (hidden).

- Module 4-1: Streaming videos is also on YouTube

- Module 4-3: Motion detection is here.

- Module 4 can be skipped.

- Module 3 looks very interesting.

- Module 3-1 lecture can be found here. Not sure if this is needed. Maybe the 2nd part, colour histograms. Nice to use this to help the students pick the thresholds of the three markers. Also, equalizing the colours would make the detection of the markers less lighting dependent.

- Module 3-2 lecture can be found here. This one is essential for the BBR-students.

- The code of this course can be downloaded from dropbox.

- The course has in total 21 modules (the last one with the code in the cloud (Google, Amazon, Microsoft)).

- The Module 1-1 lecture can be found here. I/O operations

- The Module 1-2 lecture can be found here. That explains the basics of color images, including the alpha-channel and the BGR order. It also shows the HSV channels.

- The Module 1-3 lecture can be found here. It is on basic manipulations like crops and flips.

- The Module 1-4 lecture can be found here. Annotating methods.

- Did the exercise of Module 1-2; the image is saved, but not displayed. Adding plt.show() solved this issue.

June 27, 2022

- Read the Science article about the expanded interneuron-to-interneuron connectivity in humans compared to mice.

June 23, 2022

June 21, 2022

June 20, 2022

- The Max Planck Institute has made BirdBot, whose walking style is inspired by an ostrich.

June 14, 2022

June 13, 2022

- Minoru Asada published the book Cognitive Robotics, including a part on behavior-based robotics.

March 8, 2022

January 31, 2022

- Testing motion_v6.py. Seems that a stiffness of 0.9 before the change and 0.6 after the change works: the Nao keeps its head up.

- Also trying self.globals.navProxy.navigateTo(0.5, 0), which returns followPath not supported. Part of the navigation is not supported on the Nao (only Pepper). Checking documentation. Indeed, in Naoqi v2.8 navigateTo is not supported for the Nao.

January 27, 2022

- In the code provided I refer to globals_v5, but only provide globals_v5.pyc. I believe that v5 is equal to globals_v4, but should check. The provided main_v3 also has most modules commented out.

- In naoqi v2.8.5 moveTo can be replaced by navigateTo (which should stop when encountering an obstacle).

- With Head-stiffness zero, the head moves after setting the angle.

January 27, 2022

- At the bottom of this page there is an example how to start an application with arguments.

- This works when the robot could be reached, but doesn't give a warning when the ip is not reachable. Solved that with a try around app.start() in globals_v5.py.
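The try around app.start() could look roughly like the sketch below. It is not the actual globals_v5.py code, and it assumes that an unreachable robot surfaces as a RuntimeError, as in the qi examples I have seen:

```python
def start_or_report(app, address):
    """Start a qi-style application; return True on success, False otherwise."""
    try:
        app.start()
    except RuntimeError as error:
        print("Could not reach the robot at %s: %s" % (address, error))
        return False
    return True
```

The caller can then stop early with a clear message instead of continuing without a session.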

- In one of the Windows machines import cv2 doesn't work, while conda list indicates that opencv3 is installed. Tried to install it from channel conda-forge.

- Problem was that the Anaconda2 directory was moved. Edited the Anaconda prompt script (in C:\Programs\Anaconda3). Now at least the Anaconda prompt can load cv2.

- The function setHead only moves the pitch. Tried this piece of code, which turns the head to the left:

self.globals.motProxy.setStiffnesses("Head",1.0)

self.globals.motProxy.changeAngles("HeadYaw",0.5,0.05)

time.sleep(2.0)

self.globals.motProxy.setStiffnesses("Head", 0.0)

- The same code with absolute angles self.globals.motProxy.setAngles(["HeadYaw"],[0.5],0.05) also works.

- Also self.globals.motProxy.setAngles(["HeadYaw","HeadPitch"],[-0.5,0.3],0.1) works.

- So, the main problem previously encountered was setting the stiffness and giving the command some time to be executed. Reduced the speed and published the code as motion_v5.py
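The lesson (stiffness on, command the angles, wait, then reduce the stiffness) can be summarised in one helper. This is a sketch and not the published motion_v5.py; the stiffness values 0.9 and 0.6 are the ones noted on January 31, and the sleep function is a parameter only to make the helper easy to try without waiting:

```python
import time


def set_head(mot_proxy, yaw, pitch, speed=0.1, settle=2.0,
             hold_stiffness=0.6, sleep=time.sleep):
    """Move the head to absolute angles and keep enough stiffness to hold it."""
    mot_proxy.setStiffnesses("Head", 0.9)            # stiff enough to move
    mot_proxy.setAngles(["HeadYaw", "HeadPitch"], [yaw, pitch], speed)
    sleep(settle)                                    # give the motion time to finish
    mot_proxy.setStiffnesses("Head", hold_stiffness) # keep the head up afterwards
```

With self.globals.motProxy as mot_proxy, set_head(mot_proxy, 0.0, -0.45) would correspond to the head angles used on December 1.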

- Read the introduction of chapter 38 of Information Theory, Inference, and Learning Algorithms, which compares ANN with biological memory and association.

January 25, 2022

- Trying to integrate the code of yesterday in motion.py.

- Downloaded the latest assignment code.

- Had to install naoqi and other packages.

- Used get-pip.py to install python2-pip on Ubuntu20.04.

- Continued with python2 -m pip install numpy

- Yet, for opencv3 I have to make it from source or use conda (as in the installation).

- Created a version my_config.py, which has no dependency on opencv.

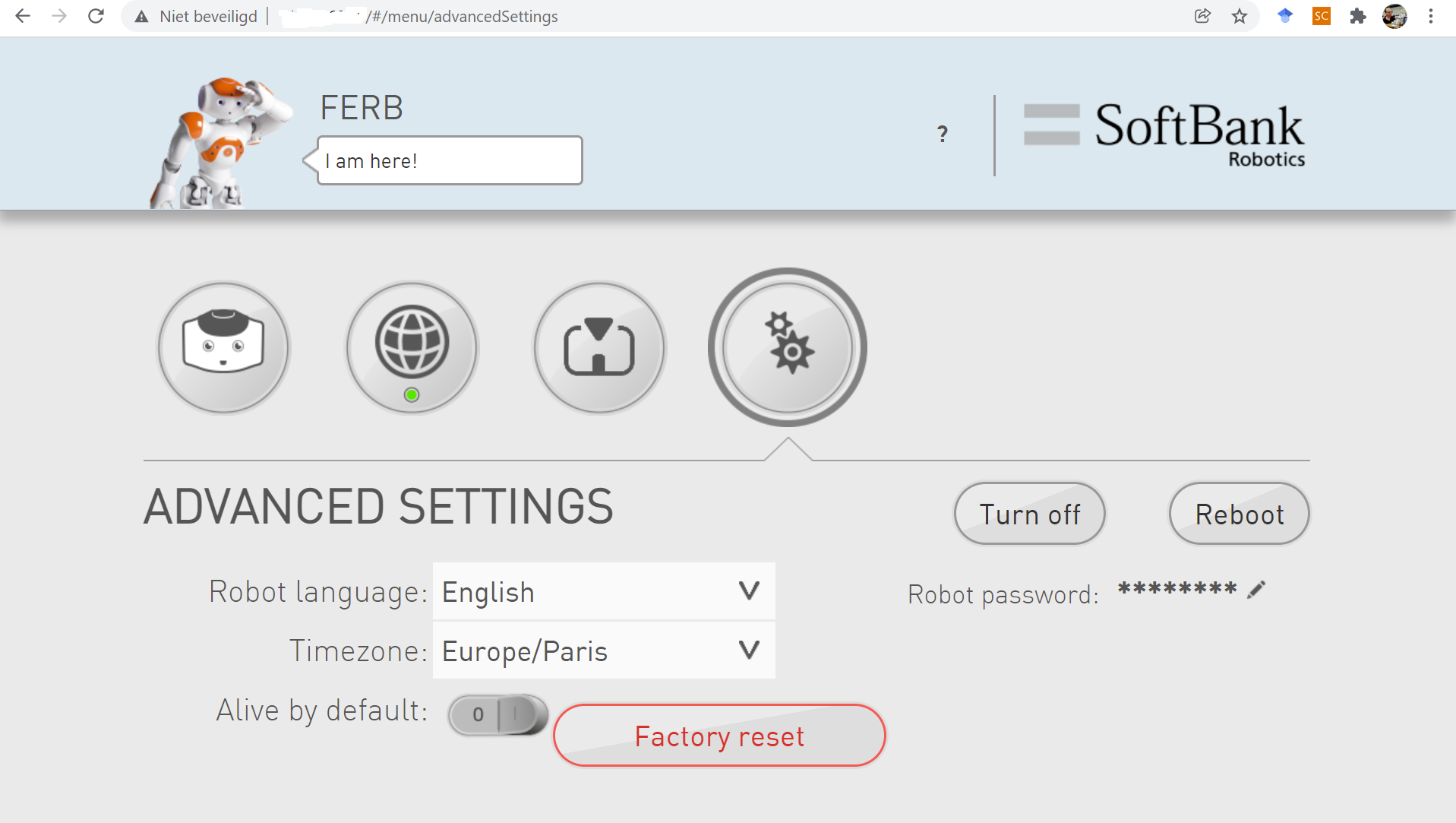

- The module loads, but no 'behavior' is loaded (nothing happens, which was due to the wrong network). Once connected the robot Ferb sits down. The system complained that the FallManager couldn't be switched off, which could be corrected by going to advanced/#settings.

- Still, I receive a warning No Application was created, trying to deduce paths.

January 24, 2022

- Could switch off Nao Life from Choregraphe, but it is automatically switched on again after reboot.

- Stuck in the wizard for both the web interface and Robot Settings.

- Looking into the Autonomous Life API, to see if we can easily switch it off through its programming interface.

- Made a very simple python script on ferb in ~/naoqi/src/autonomous_life to connect to the AutonomousLife service:

from naoqi import qi

import sys

session = qi.Session()

session.connect("tcp://127.0.0.1:9559")

life = session.service("ALAutonomousLife")

state = life.getState()

print(state)

session.close()

- Yet, the result is No Application was created, trying to deduce paths

disabled.

- Moved on the robot to /opt/aldebaran/www/apps/life/etc, which indicates that the settings could be copied to ~/config/naoqi/autonomy.cfg. Switched the NatureOfNoActivity from dynamic to solitary and rebooted. Still alive.

- Found calls to ALAutonomousLife in /opt/aldebaran/www/apps/boot-config/services/albootconfig.py

- Life is not called directly, but queried via get_preference(self.DISABLELIFEANDDIALOG).

- Note that preference documentation indicates that the preferences are stored in SQLite database /home/nao/.local/share/PreferenceManager/prefs.db

- Seems that this command does the trick: self.alpreferencemanager.setValue("com.aldebaran.debug", "DisableLifeAndDialog", "1"). Rebooting

- Made a very simple script to switch AutonomousLife off.

import qi

application = qi.Application("")

application.start()

preferences = application.session.service("ALPreferenceManager")

preferences.setValue("com.aldebaran.debug", "DisableLifeAndDialog", "1")

application.session.close()

- Also made remote version of get and set.

- Yet, after hitting the chest-button twice, autonomous life is back.

- Looked up what the wizardstate is. That is None (not in a software update). Which wizard page has to be displayed is set by CurrentPage. On Ferb I got the answer wizard.root.network.list, while on Jerry (Nao5 with NaoQi 2.1.4) I get menu.root.myrobot. Set that as CurrentPage, and I have the web interface working!

- Also made a script to set the current life state, which switched it to disabled (which also turns the stiffness off). The chest-button activates the breathing and looking for an interaction again.

- Checking what happens if I switch the BasicAwareness off. That is still not enough, so at the end switch all autonomous activity off, except the Background movement.
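Switching off every autonomous ability except the background movement can be done with setAutonomousAbilityEnabled on the ALAutonomousLife service. The sketch below assumes the five ability names that I know from the NAOqi 2.8 documentation; it is not the code published as v4:

```python
ABILITIES = (
    "AutonomousBlinking",
    "BackgroundMovement",
    "BasicAwareness",
    "ListeningMovement",
    "SpeakingMovement",
)


def quiet_life(life_service, keep=("BackgroundMovement",)):
    """Disable all autonomous abilities except the ones listed in `keep`."""
    for ability in ABILITIES:
        life_service.setAutonomousAbilityEnabled(ability, ability in keep)


# Usage on the robot:
#   life = session.service("ALAutonomousLife")
#   quiet_life(life)
```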

- In globals.py, I should start application = qi.Application(sys.argv), which would give application.session, to remove the No Application warning. Yet, I have to specify the url, otherwise it starts it locally.
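The url can be passed to qi.Application through a --qi-url argument in its argument list. A sketch of building that list; the application name "bbr" and the helper name are placeholders of my own:

```python
def qi_application_args(ip, port=9559, name="bbr"):
    """Build the argument list for qi.Application pointing at a remote robot."""
    return [name, "--qi-url=tcp://%s:%d" % (ip, port)]


print(qi_application_args("moos.local"))

# Usage in globals.py (requires the qi module from pynaoqi):
#   application = qi.Application(qi_application_args(ip_address))
#   application.start()
#   session = application.session
```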

- Published this as v4 of the code.


- Two weeks ago Aldebaran published a new version of its 3D simulator based on Bullet. It should work for both Python 2.7 and Python 3. There is a ROS wrapper (not clear for which version).

- Should check the Virtual Robot tutorial. Seems that most moveTo python calls are there. Camera is also there, saw no sonar (yet).

January 17, 2022

- Used this high-resolution opencv logo to test tutorial code.

- Had to scale up the drawing with a factor 10, and modified the call to

circles = cv.HoughCircles(img,cv.HOUGH_GRADIENT,1,262,

param1=50,param2=30,minRadius=262,maxRadius=1312)

- This is the result (compared with the original result):

- Note that the top circle is not recognized due to border issues.

- The protection if circles is None or len(circles[0]) == 0: seems to work
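The protection can be wrapped in a small helper, sketched here. The name circles_to_list is hypothetical; it assumes the cv.HoughCircles output convention of either None or an array of shape (1, N, 3) holding (x, y, radius), and it also accepts plain nested lists.

```python
def circles_to_list(circles):
    """Turn the HoughCircles result into a list of integer (x, y, r) tuples.

    Returns an empty list when nothing was detected, so the caller can
    loop over the result without a separate check.
    """
    if circles is None or len(circles[0]) == 0:
        return []
    return [
        (int(round(float(x))), int(round(float(y))), int(round(float(r))))
        for x, y, r in circles[0]
    ]


# Usage after the cv.HoughCircles call above:
#   for x, y, r in circles_to_list(circles):
#       cv.circle(cimg, (x, y), r, (0, 255, 0), 2)
```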

- Found the GenR workshop proceedings.

- Two papers seem to be good examples of 4-page essays:

January 12, 2022

- Updated the install instructions, but the available versions (Windows, Mac, Linux) are v2.8.7, while the robots are running v2.8.5.11.

January 11, 2022

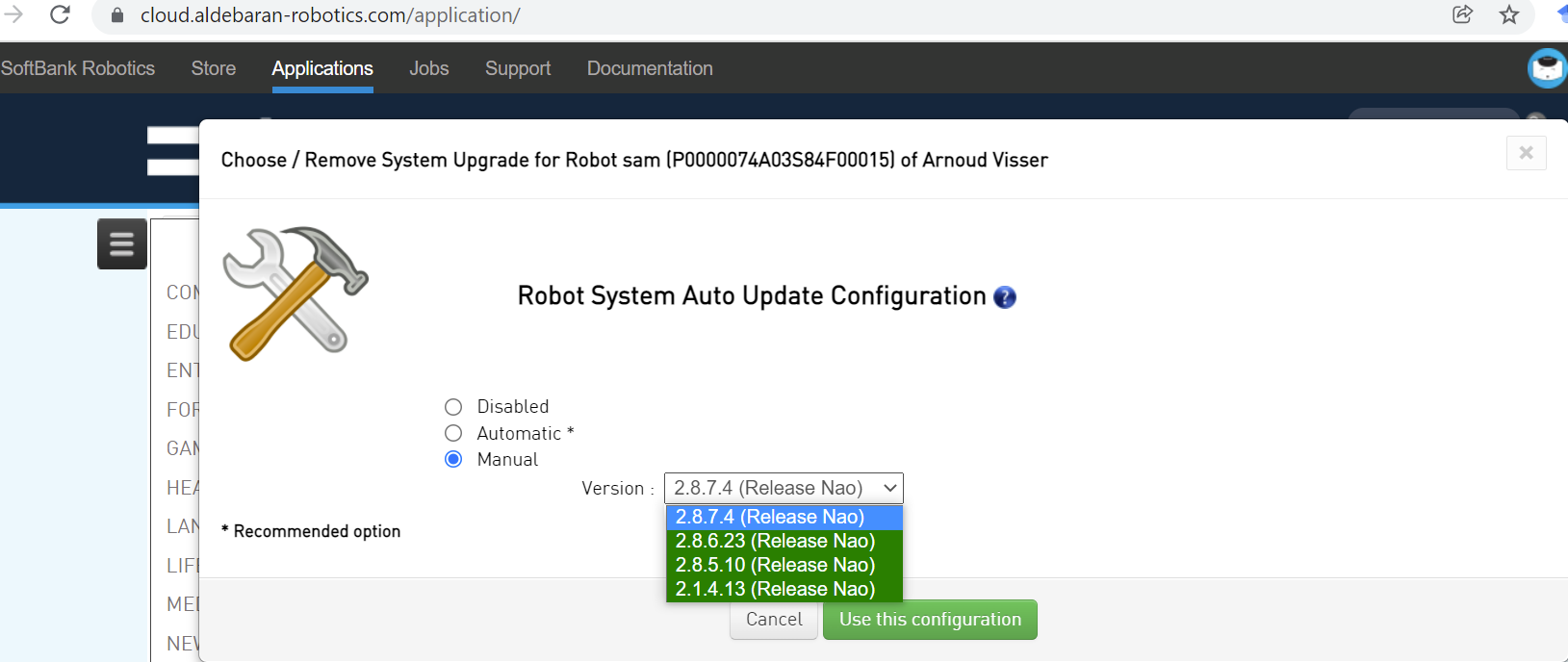

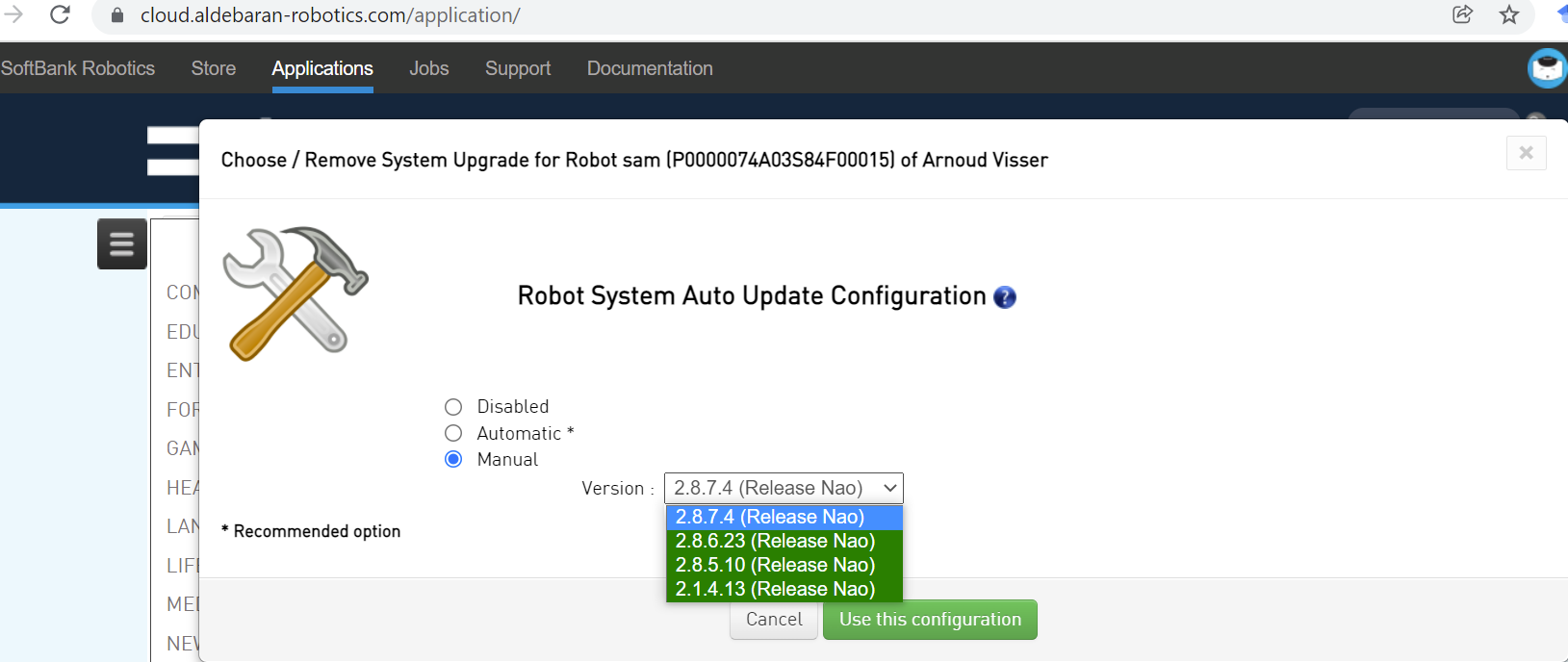

Found a way to update the robot. The store doesn't exist anymore, but a system update can be scheduled from cloud.aldebaran-robotics.com/application/.

Currently, v2.8.7.4, v2.8.6.23, v2.8.5.10, v2.1.4.13 are available:

January 10, 2022

- No light when I connected the internet cable to Phileas, so tried the Digitus ethernet adapter (together with a USB-B <-> USB-C adapter). Now I could connect to phileas.local, and ran mv robocup.conf robocup.conf.dnt.

- Phileas could not connect to the robolab server, so I used the web interface. After accepting the terms, the wifi-connect wizard was hanging in Chrome. Fortunately, it worked fine with Edge.

- Tried to do the same with Appa, but that fails in both browsers on the #/wizardnext/connectade page.

- Appa is alive, so the black cable is not stable enough. Switched to the yellow ethernet cable.

- Checked nao.local/advanced/#/. The NaoQi version is 2.8.5.11.

- Tried to connect via nao.local/#/wizard/network/list. robolab is visible, but gives an authentication problem. After a 'forget' the same authentication failure is there, but when connecting a second time the failure is gone.

- Still, the connectade page is hanging under Edge. When disconnecting the ethernet cable the wireless connection is gone again, but it comes back when I inspect the network/list. Nao life can be switched off by hitting the chest button twice.

Yet, this not only switches off life, but also stiffness (and life is back when turned on).

- In NaoQi 2.8, there is a new Windows application robot-settings. Yet, the resolution of this application is quite bad, and it requires that you set a password (and doesn't accept the default one).

- At least Appa is running python 2.7.11 and kernel 4.4.86.

- Note that Jan de Wit has built a python2.7 <-> python3 bridge, for Windows and Mac (so no Linux version).

- Checked Moos with advanced

January 3, 2022

- Read this interesting blog, which discusses how recent developments in self-supervised and unsupervised learning are heading towards more biologically plausible models.

- One of his observations (thanks to Geirhos et al. (2021), NeurIPS) was that the latest models are not only more robust to distortions (thanks to orders of magnitude more data), but are also less sensitive to texture and more sensitive to shape.

- The other observation was that with instance-contrastive self-supervision, biological effects like retinal distortions, saccades, efference copy and hippocampus-based buffering could be observed.

- The author himself published Bakhtiari et al. (2021), NeurIPS, which describes a network that self-organized into a dorsal and a ventral stream.

- Also love his subunit which is sensitive to spiral motion:


- Also read this blog.

- The blog stresses that a common understanding is needed, so

Neuro-AI researchers have been exploring the effects of adding elements of biological realism to DNNs to see how they affect representational correspondence. Storrs and Kriegeskorte (2020) hypothesize that, as the field of deep learning continues to progress, neural network models will only become more relevant and useful for cognitive neuroscience.

Previous Labbooks
