Started Labbook 2024.

December 21, 2023

- Got this state-of-the-art article on bioinspired soft robotics recommended.

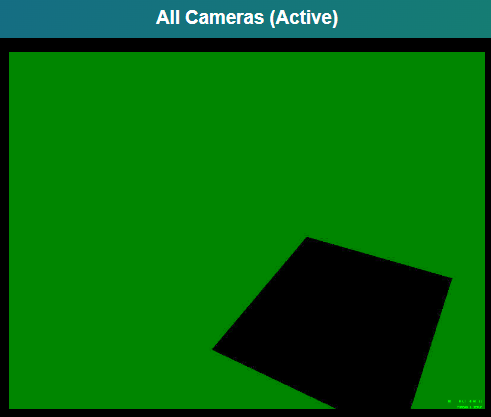

- Received Nao27: Ferdinand. Started Choregraphe 2.8.6.23 and connected to the Nao via an Ethernet cable. Could connect to nao.local.

- Checked the MAC address with ifconfig -a and added it to iotroam. Because it is a borrowed robot, I set the German Open as the end date.

- Successfully performed a Tai Chi and a sit-down. Didn't switch off the fall prevention yet.

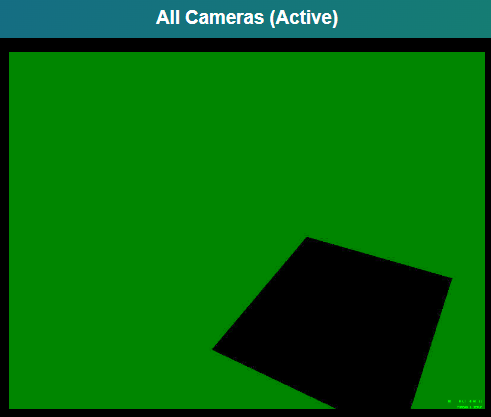

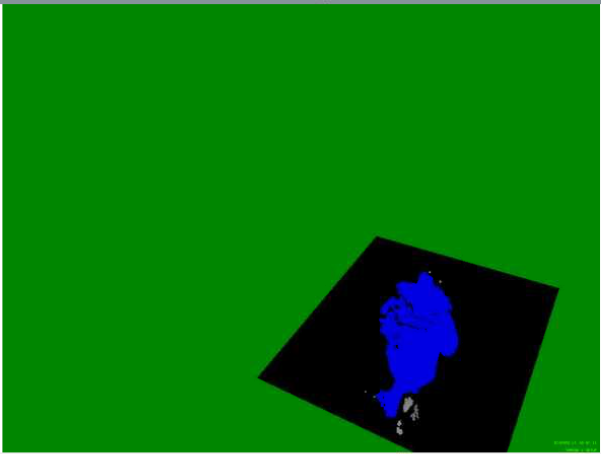

- Also tested Moos. No problems, although I heard some gears complaining in the upper body. The camera was not working, but a Nao restart solved that.

-

- Looked into whether I could use generative AI (text-to-image) for inspiration.

- Both DALL-E and Midjourney are now paid services.

- Trying Adobe Firefly.

- Prompt: 'Behavior-Based robotics - identifying ecological niches where robots can successfully compete and survive, making them sufficiently adaptable to changes in the world they inhabit':

- Prompt: "Increasing accessibility: bringing robotics to the masses through suitable interface, specification, and programming systems":

- Prompt: "Representing and controlling sensing by viewing it as a form of dynamic agent-environment communication", modified to "Behavior-Based: Representing and controlling sensing by viewing it as a form of dynamic agent-environment communication":

- Prompt: "Improving perception: new sensors, selective attention mechanisms, gaze control and stabilization, improved eye-hand coordination, foveal vision, and specialized hardware, among others":

- Prompt: "Further exploitation of expectations, attention, and intention in extracting information about the world", augmented with "Behavior-Based" and "Behavior-Based robotics":

- Prompt: "Understanding more deeply the relationship between deliberation and reaction, leading to more effective and adaptive interfaces for hybrid architectures", augmented with "Behavior-Based" and "Behavior-Based robotics":

- Prompt: "Evaluating, benchmarking, and developing metrics: In order to be more accurately characterized as a science, robotics needs more effective means for evaluating its experiments.":

- Prompt: "Satisfying the need for far more advanced learning and adaptation capabilities than are currently available":

- Prompt: "Creating large societies of multi agent robots capable of conducting complex tasks in dynamic environments", where I removed "large" because all generated robots were huge. I also removed "robots" to focus on agents:

- Prompt: "Using robots as instruments to advance the understanding of animal and human intelligence by embedding biological models of ever-increasing complexity in actual robotic hardware":

- Accidentally switched to the conclusions:

- Prompt: 'The issue of whether or not robots are capable of intelligent thought or consciousness is quite controversial, with a broad spectrum of opinion ranging from "absolutely not" to "most assuredly so."':

- Prompt: 'Robotic emotions may play a useful role in the control of behavior-based systems, although their role is just beginning to be explored.':

- Prompt: 'Homeostatic control, concerned with managing a robot's internal environment, can also be useful for modulating ongoing behavior to assist in survival.':

- Modified the prompt: 'Nanorobots and microrobots can revolutionize the way in which we think about robotic applications' to 'Applications of Nanorobots and microrobots in the human body can revolutionize the way in which we think':

- Prompt: 'One school of thought in robotics asserts that these machines are mankind's natural successors.':

- Prompt: 'As in any important endeavor, there are a wide range of questions waiting to be answered as well as opportunities to be explored.' modified to 'As in any important endeavor, there are a wide range of questions waiting to be answered as well as opportunities to be explored for behavior-based robotics.':


- As an alternative, I also tried Bing Image Creator, which allows 15 images with a boost. Bing loves a blue background:

- Bing can make other images when you request another style (such as realistic). Still, Firefly wins in cuteness for the prompt "Love & Robots":

- My wife found those two images the most inspirational:

December 7, 2023

- Again had problems activating Perusall, but found in another course (August 15) that I had to enable Perusall in the Navigation tab of Canvas.

- Found another chapter on Behavior-Based Robotics, Chapter 24 from Embedded Robotics (2008).

- The chapter starts well, with a clearly written introduction to behaviors. After that, however, it focuses on one example (EyeBot), including code snippets in C. It shows adaptive behavior with genetic algorithms and neural networks, but only as an example (not very generic).

- Arkin's book can no longer be found at CogNet.

December 6, 2023

- As a first step, I updated the course manual. Left the final grade calculation open (for the moment).

- In the learning outcomes, only a reference to computational psychobiology is made.

- The Winter 2023-2024 information also seems up to date.

- Next is the LeerWijzer.

- After that, Perusall should be configured.

- While waiting for edX, I checked the 2022 and 2023 labbooks.

- The current version of qibullet is 1.4.6.

- The main changes from 1.4.0 to 1.4.6 seem to be in the odometry update.

- On January 17, 2023 I looked at the programming assignments of the other Computational Psychobiology courses.

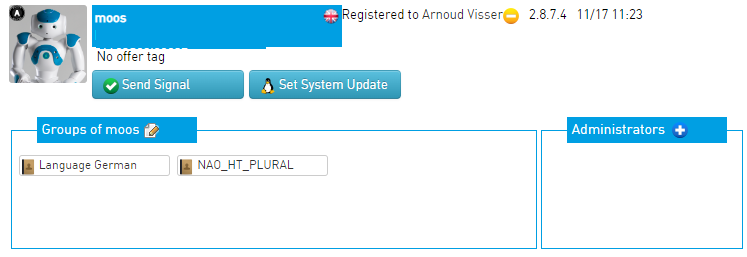

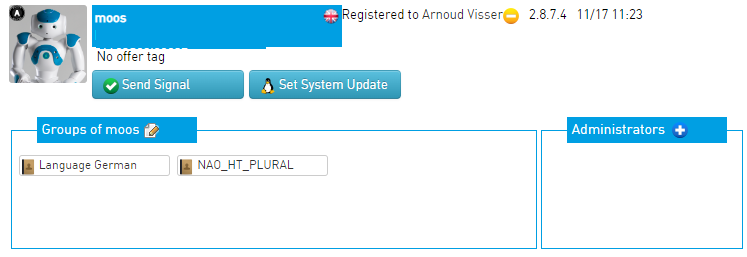

- The cloud management from Aldebaran still works, and Moos still reports NaoQi v2.8.7.4. Four Nao robots and one Pepper are registered in the cloud.

- For manual system updates, I get the choice between 2.8.7.4, 2.8.6.23, 2.8.5.10 and 2.1.4.13.

- Actually, I logged in to edX via Google. The course 'Using Python for Research' still exists (although finished in 2018).

- The only open homework is Case Study 6 (Homophily). I can see my answers (only Case Study 3 is missing, not #6; strange).

- In the Windows instructions I use pynaoqi-2.8.7.4.

- Checked software downloads. The latest Python SDK is still 2.8.7.4 for Windows, 2.8.6.23 for Mac and Linux. On Canvas I have v2.8.7.4 for all three OSs.

- According to developer-center, this is still the latest version.

- United Robotics points to the same download page, but to the English version.

- Note that NI made a LabVIEW plugin (for Windows), published in February 2016.

November 13, 2023

- To be free on Thursday 11 January, I should take group C. Pearl doesn't have an active account yet, so I couldn't assign group A to her (yet).

November 9, 2023

June 1, 2023

May 25, 2023

- Watched the video on the MicroMouse competition.

May 11, 2023

March 15, 2023

- The book Embedded Robotics not only has hierarchical maze navigation algorithms (Chapter 15), but also a section on Behavior-Based Robotics (Chapter 18.2) in the AI part.

- As a behavior-based application, they suggest hide-and-seek, which could be a nice assignment for BBR.

February 2, 2023

February 1, 2023

- Made a function self.tools.switchCamera(), which toggles between the TOP and BOTTOM camera. Tested on Moos.
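A minimal sketch of such a toggle, assuming the NaoQi 2.8 ALVideoDevice calls subscribeCamera(name, cameraIndex, resolution, colorSpace, fps) and unsubscribe(handle), with camera index 0 for TOP and 1 for BOTTOM. The class name CameraToggle and the defaults (resolution 1 = 320x240, color space 11 = RGB, 5 fps) are hypothetical choices, not the tools_v6 code:

```python
# Hypothetical sketch, not the actual tools_v6 implementation.
TOP_CAMERA = 0     # ALVideoDevice camera index of the top camera
BOTTOM_CAMERA = 1  # ALVideoDevice camera index of the bottom camera


class CameraToggle(object):
    """Track the active camera and re-subscribe when switching."""

    def __init__(self, video_proxy, client_name, resolution=1, color_space=11, fps=5):
        self.video_proxy = video_proxy
        self.client_name = client_name
        self.resolution = resolution    # 1 = 320x240 (kQVGA)
        self.color_space = color_space  # 11 = RGB (kRGBColorSpace)
        self.fps = fps
        self.camera = TOP_CAMERA
        self.handle = self._subscribe()

    def _subscribe(self):
        return self.video_proxy.subscribeCamera(
            self.client_name, self.camera, self.resolution, self.color_space, self.fps)

    def switch_camera(self):
        """Unsubscribe, flip TOP <-> BOTTOM, subscribe again; return the new index."""
        self.video_proxy.unsubscribe(self.handle)
        self.camera = BOTTOM_CAMERA if self.camera == TOP_CAMERA else TOP_CAMERA
        self.handle = self._subscribe()
        return self.camera
```

Because the proxy is passed in, the toggle logic can be checked with a fake proxy before trying it on a robot.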

- Looked into 'Reacting to events' to handle the error messages of the arm.

- Another interesting page is signal handling.

- Finally, you could disable the effect with setDiagnosisEffectEnabled.

- Also added some positive messages when connecting to the video in tools_v6.

- The (or False) has no effect on warning 711.

- The command can be seen in /var/log/lola/head-lola.log.

- Some interesting messages in this log:

[E] 1675265331.637561 3460 LoLA.behaviors.DiagnosisLegacy: Error on board LeftArmBoard, 248-->ERROR_MOTOR2_NOT_ASSERV_BECAUSE_MRE-->MRE_JOINT_OTHER_ISSUE

[I] 1675265331.649585 3460 LoLA.behaviors.DiagnosisLegacy: End of error on board LeftArmBoard, 232

[W] 1675265364.374795 3460 ALMotion.ALModelConfigManager: The Fall Manager has been disactivated.

[W] 1675265364.397754 3460 ALMotion.LibALMotion: setDiagnosisEffectEnabled(true)

[W] 1675265364.423590 3460 ALMotion.ALMotionSupervisor: Chain(s): , "LArm" are going to rest due to detection of SERIOUS error(s). New error(s):

[I] 1675265963.230781 3460 ALBattery: cleared system notification 805

- The MRE joint is mentioned in the NaoQi 1.14 documentation.

- Looked via the advanced web interface. The LeftArmBoard has values, but static ones like its serial number. Searching for MRE also didn't help. Yet, searching for Diagnosis showed that ALDiagnosis/RobotIsReady = True, ActiveDiagnosisErrorChanged = [1,["LElbowRoll","LElbowYaw"]] and PassiveDiagnosisErrorChanged = [].

- Used getDiagnosiStatus, but that is a NaoQi 2.5 function, which is no longer available in NaoQi 2.8.

- The new call is self.globals.diagnosisProxy.getActiveDiagnosis(), which nicely displays [1L, ['LElbowRoll', 'LElbowYaw']].

- Should add my own callback for ActiveDiagnosisErrorChanged, as described here for a memory event.

- That seems to work, but the event is not called (while the robot is still complaining).
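A sketch of such a callback, assuming a qi.Session and the NaoQi 2.x pattern ALMemory.subscriber(key).signal.connect(callback). The event key "ActiveDiagnosisErrorChanged" is copied from the web interface and may need a prefix, reading element 0 of the value as a status code is an assumption, and the helper names are hypothetical. One possible reason for a silent callback is that the subscriber object gets garbage-collected, so the sketch returns it for the caller to keep:

```python
def describe_diagnosis(value):
    """Format a value such as [1, ['LElbowRoll', 'LElbowYaw']].

    Assumption: element 0 is a status code, element 1 the affected joints.
    """
    if not value or len(value) < 2 or not value[1]:
        return "No active diagnosis errors."
    code, joints = value[0], value[1]
    return "Diagnosis code %d on joints: %s" % (code, ", ".join(joints))


def on_active_diagnosis(value):
    """Hypothetical callback: just report what the robot complains about."""
    print(describe_diagnosis(value))


def connect_diagnosis_callback(session, callback, key="ActiveDiagnosisErrorChanged"):
    """Connect `callback` to an ALMemory event through a qi.Session (NaoQi 2.x).

    The key is an assumption taken from the web interface. The returned
    subscriber must be kept alive by the caller; if it is garbage-collected,
    the callback stops firing.
    """
    memory = session.service("ALMemory")
    subscriber = memory.subscriber(key)
    link_id = subscriber.signal.connect(callback)
    return subscriber, link_id
```

On the robot, the returned subscriber would be stored on self (for instance in self.globals) so it lives as long as the behavior.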

January 31, 2023

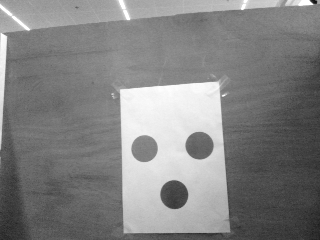

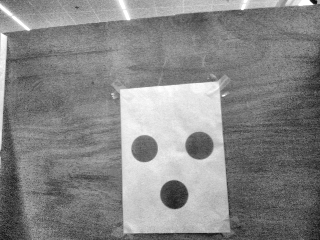

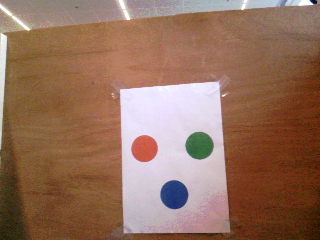

- Continued with the circle detection, which is CNN-based.

- A fresh run finished in a minute. Unfortunately, the last command (saving a prediction after training) fails with the warning:

libpng warning: Invalid image width in IHDR

libpng warning: Image width exceeds user limit in IHDR

- Tried to reproduce the code at nb-dual.

- The import works without problems with python 3.8.10.

- The model is also nicely displayed, although it takes a while and the following warning is displayed:

2023-01-31 10:36:43.355699: E tensorflow/stream_executor/cuda/cuda_driver.cc:271] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected

- The model fit starts at 10:41. One minute later the training is already at Epoch 3/100.

- Should try to save the model after training, to see how fast the inference is.

- Saving works, but after loading the model the loss_function is unknown (while defined in both training and inference code):

model = keras.models.load_model("artificial_circles.hdf")

File "~/anaconda3/envs/tf1.14/lib/python3.7/site-packages/tensorflow/python/keras/engine/training.py", line 300, in compile

self.loss, self.output_names)

File "~/anaconda3/envs/tf1.14/lib/python3.7/site-packages/tensorflow/python/keras/engine/training_utils.py", line 1104, in prepare_loss_functions

loss_functions = [get_loss_function(loss) for _ in output_names]

File "/home/avisser/anaconda3/envs/tf1.14/lib/python3.7/site-packages/tensorflow/python/keras/engine/training_utils.py", line 893, in get_loss_function

loss_fn = losses.get(loss)

ValueError: Unknown loss function:loss_function

- This seems to be a known issue of the older h5 format. It can be solved with add_loss() or by saving the model with something like save_format='SavedModel'. Yet, this does not seem to be recognized by TensorFlow 1.14, so I followed this post and used save_format='tf'. This option has an effect:

'Saving the model as SavedModel is not supported in TensorFlow 1.X'

Please enable eager execution or use the "h5" save format.

- Looked at the Keras documentation and added a call to keras.losses.MeanSquaredError(). That works, although the loss seems to have a constant value for all epochs.

- Loading this model still fails, with another error:

Unknown entries in loss dictionary: ['class_name', 'config']. Only expected following keys: ['conv2d_2']

- At 12:04 nb-dual finished training. Checked its TensorFlow version (v2.6.0). So, here the default save format is tf, which includes the loss.

- Ran show_version.py outside the Anaconda environment. It fails on a missing symbol in libcudart.so.11.0. That library is there; the problem seems to be in /lib/x86_64-linux-gnu/libnccl.so.2, which calls it.

- Looked into the autonomous driving labbook (April 20, 2022), where I use Singularity to build images.

- Used singularity cache list -v to see that there are actually still 3 images on ws10. In /storage/./singularity-containers/ I see that I also have a tensorflow2.1.0 container.

- Started this container with singularity shell --nv --bind /storage:/storage /storage/./singularity-containers/tensorflow2.1.0/. The show_version.py gave:

TensorFlow version 2.1.0

Keras version 2.2.4-tf

- During training, nothing seems to happen after:

Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 22582 MB memory) -> physical GPU (device: 0, name: NVIDIA GeForce RTX 3090, pci bus id: 0000:01:00.0, compute capability: 8.6)

- After a few minutes the model is displayed, followed by this warning:

2023-01-31 12:48:00.106116: W tensorflow/stream_executor/gpu/redzone_allocator.cc:312] Internal: ptxas exited with non-zero error code 65280, output: ptxas fatal : Value 'sm_86' is not defined for option 'gpu-name'

Relying on driver to perform ptx compilation. This message will be only logged once.

- After this the training is finished in a minute (with the constant keras.loss).

- After training another warning is given:

2023-01-31 12:49:28.432546: W tensorflow/python/util/util.cc:319] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.

WARNING:tensorflow:From /usr/local/lib/python3.7/dist-packages/tensorflow_core/python/ops/resource_variable_ops.py:1786: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

0.0

- Loaded the trained model on nb-dual, but get the error:

ValueError: Unknown loss function: loss_function. Please ensure this object is passed to the `custom_objects` argument. See https://www.tensorflow.org/guide/keras/save_and_serialize#registering_the_custom_object for details.

- Loading the model with the extra argument custom_objects={'loss_function': loss_function} worked on nb-dual. It takes a little more than a minute to perform the inference (without GPU), although most of the time seems to be spent on loading all drivers. One additional warning is given:

2023-01-31 13:48:31.400191: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)

1.6022929

- Ran the inference twice. Got losses of 1.6184229 and 1.6022929, respectively.

- On ws10 this same code also works (for conda environment tf1.14). Yet, inference needs 5 minutes and gives a loss of 773.4055.

- Should try this inference model on nao-example. The images used for training are 128x128, while the color_signs are 320x240. Could adjust the inference or the training.

- When training with 320x240 I got the warning:

2023-01-31 14:31:51.387199: W tensorflow/core/framework/cpu_allocator_impl.cc:80] Allocation of 983040000 exceeds 10% of free system memory

- Checking with free -m showed that 14 GB of the 16 GB is used (including the 2 GB swap file). After 6 epochs the training process is killed.

- Strangely enough, cv2.imwrite also fails (while it worked yesterday).

- Tried again with 160x120 images. Now the memory usage stays around 7 GB.
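A back-of-the-envelope check of how the input size scales, assuming the whole dataset is held as one float32 array (4 bytes per value). It ignores layer activations and TensorFlow's own buffers, so it is only a lower bound; the function names are hypothetical:

```python
def dataset_bytes(n_images, height, width, channels=3, bytes_per_value=4):
    """Bytes needed to hold n images as one float32 (4-byte) array."""
    return n_images * height * width * channels * bytes_per_value


def to_gib(n_bytes):
    """Convert bytes to GiB (2**30 bytes)."""
    return n_bytes / float(1024 ** 3)


# The same dataset at the three resolutions tried in the notes.
for w, h in [(320, 240), (160, 120), (128, 128)]:
    print("%dx%d: %d bytes per image" % (w, h, dataset_bytes(1, h, w)))
```

Per image this gives 921600 bytes at 320x240 against 230400 bytes at 160x120, a factor of 4, and the 983040000 bytes in the allocation warning are about 0.92 GiB.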

- Did a resize to (128,128), but got the error:

ValueError: Input 0 is incompatible with layer model: expected shape=(None, 128, 128, 3), found shape=(32, 128, 3)

- Actually, the code does an np.expand_dims on the image. With resized dimensions (1, 128, 128, 3) the prediction seems to work; only the imshow fails on an unsupported array type in function 'cvGetMat'.

- Tried to use scipy.misc.toimage() as suggested in a post, but that function is from an old release (while I use SciPy version 1.5.4). Just using resized[0,:,:,:] works as well.
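A minimal numpy sketch of the shape bookkeeping. Keras treats the first axis as the batch axis (and model.predict presumably slices it into chunks of 32, its default batch size, which would explain the (32, 128, 3) in the error), so an (H, W, C) image must become (1, H, W, C), and the batch axis must be dropped again before OpenCV displays it. The nearest_resize helper is a hypothetical stand-in for cv2.resize and copies its (width, height) argument order, the reverse of numpy's (height, width, channels) shape, which is an easy way to swap dimensions by accident:

```python
import numpy as np


def to_batch(image):
    """(H, W, C) -> (1, H, W, C): add the batch axis that Keras expects."""
    if image.ndim != 3:
        raise ValueError("expected an (H, W, C) image, got %s" % (image.shape,))
    return np.expand_dims(image, axis=0)


def from_batch(batch):
    """(1, H, W, C) -> (H, W, C): drop the batch axis before cv2.imshow/imwrite."""
    return batch[0]


def nearest_resize(image, width, height):
    """Nearest-neighbour resize; hypothetical stand-in for cv2.resize.

    Like cv2.resize's dsize, it takes (width, height), while the returned
    numpy array has shape (height, width, channels).
    """
    rows = np.arange(height) * image.shape[0] // height
    cols = np.arange(width) * image.shape[1] // width
    return image[rows[:, None], cols]


frame = np.zeros((240, 320, 3), dtype=np.uint8)   # a 320x240 camera image
batch = to_batch(nearest_resize(frame, 128, 128))
print(batch.shape)                                 # (1, 128, 128, 3)
print(from_batch(batch).shape)                     # (128, 128, 3)
```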

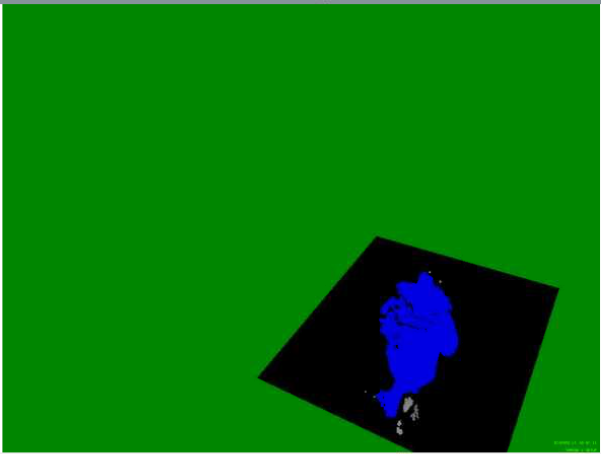

- However, the prediction is not optimal yet:

- But the training is still running in the background (and should be done with real recorded images).

- Ran the script, but had to swap the dimensions to get a result:

- Made a script to record an image on touch.

- Recorded 65 images; 24 of those were usable. Split them 50/50 into a training and a testing set.
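A reproducible 50/50 split can be sketched with the standard library only; the seed and the file names below are hypothetical:

```python
import random


def split_half(filenames, seed=0):
    """Reproducibly shuffle and split a list 50/50 into (train, test)."""
    items = sorted(filenames)           # sort first so the input order does not matter
    random.Random(seed).shuffle(items)  # private RNG: does not touch global state
    half = len(items) // 2
    return items[:half], items[half:]


images = ["touch_%02d.png" % i for i in range(24)]  # hypothetical file names
train, test = split_half(images)
print(len(train), len(test))
```

Fixing the seed means a rerun of the training script sees the same test images, so losses stay comparable between runs.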

- Now I need to find the center of the circles as labels.
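One simple way to get a center label, assuming the circle has already been segmented into a binary mask (for example by a color threshold, not shown): take the centroid of the mask pixels. This gives the same result as m10/m00 and m01/m00 from cv2.moments; the function name is hypothetical:

```python
import numpy as np


def circle_center(mask):
    """Centroid (x, y) of the non-zero pixels of a binary mask, or None if empty."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())


# Synthetic check: a filled circle of radius 15 centred at (x=50, y=70).
yy, xx = np.mgrid[0:120, 0:160]
mask = (xx - 50) ** 2 + (yy - 70) ** 2 <= 15 ** 2
print(circle_center(mask))   # (50.0, 70.0)
```

A partially occluded circle pulls the centroid toward the visible part, so for the recorded images the labels may still need a manual check.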

January 30, 2023

January 27, 2023

- It would be great to use aidoROS on the Nao. The microphones are there; I should check if the speaker can be used as a buzzer. This would also require a ROS2 interface to the Nao.

-

- Nice Hackster project to make a spider with minimal components.

-

- Looked into histogram equalization tutorial. The simple_equalization.py works fine with python2.

- Also adaptive_equalization.py works with python2. The result looks like an improvement in contrast:

- Also tried to equalize each of the channels separately:
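A minimal numpy sketch of per-channel equalization, using the standard cumulative-histogram lookup table (the same idea as applying cv2.equalizeHist to each channel). Each channel gets its own mapping, so R, G and B are stretched independently, which can shift colors; the function names are hypothetical:

```python
import numpy as np


def equalize_channel(channel):
    """Histogram-equalize one uint8 channel with a cumulative-histogram lookup table."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = np.cumsum(hist).astype(np.float64)
    cdf_min = cdf[cdf > 0][0]          # count of the darkest value present
    total = cdf[-1]
    if total == cdf_min:               # constant channel: nothing to stretch
        return channel.copy()
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]


def equalize_per_channel(image):
    """Equalize each channel of an (H, W, C) uint8 image independently."""
    return np.dstack([equalize_channel(image[:, :, c]) for c in range(image.shape[2])])
```

Equalizing only the V channel of an HSV image is a common alternative that leaves the hue untouched.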

- Inspected the values inside the circles, but there is actually more difference between the equalized images than between the original images. This is most visible in the red channel, where an original difference of 10 intensity levels blew up to a difference of 40 levels.

- Converted the image to HSV to see if that improves the histogram matching. Still the difference between python2 and python3 stays.

- Should check whether the behavior of np.unique() is the same in python2 and python3; something has changed since numpy 1.13. Should reproduce this and look at an alternative implementation with vstack.

- The behavior of np.unique for axis=0 is the same for python2 (numpy v1.16.6) and python3 (numpy 1.23.3). The same holds if I include ravel().

- It goes wrong when the quantiles are calculated: dividing two integers gives floor division in python2 but true division in python3. Adding float() to the denominator solves it. This is a working histogram_matching.py. It can be loaded with from histogram_matching import match_histograms, and called with matched = match_histograms(src, ref, multichannel=multi).
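A numpy sketch of CDF-based histogram matching that mirrors the approach of scikit-image's _match_cumulative_cdf, with the explicit float() in the quantile division so it also behaves under python2. The function names follow the ones in the notes, but this is a reconstruction, not the scikit-image code itself:

```python
import numpy as np


def match_cumulative_cdf(source, template):
    """Remap the values of `source` so that its CDF follows that of `template`."""
    src_values, src_idx, src_counts = np.unique(
        source.ravel(), return_inverse=True, return_counts=True)
    tmpl_values, tmpl_counts = np.unique(template.ravel(), return_counts=True)
    # float() keeps this true division under python2; with integer division
    # all quantiles except the last would become 0.
    src_quantiles = np.cumsum(src_counts) / float(source.size)
    tmpl_quantiles = np.cumsum(tmpl_counts) / float(template.size)
    # For each source quantile, look up the template value at the same quantile.
    interp_values = np.interp(src_quantiles, tmpl_quantiles, tmpl_values)
    return interp_values[src_idx].reshape(source.shape)


def match_histograms(image, reference, multichannel=True):
    """Match each channel separately when multichannel, otherwise the whole array."""
    if not multichannel:
        return match_cumulative_cdf(image, reference)
    channels = [match_cumulative_cdf(image[..., c], reference[..., c])
                for c in range(image.shape[-1])]
    return np.stack(channels, axis=-1)
```

The result is a float64 array. cv2.imshow multiplies floating-point images by 255, so values above 1 show up as white; converting with np.clip(matched, 0, 255).astype(np.uint8) before displaying might explain (and fix) the empty or white matched images.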

-

- Looked at this video. The model is trained on generated images, 50/50 squares and circles. It is Keras-based. One comment suggests that a U-Net could be used instead of a CNN. The code can be found here.
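A hypothetical numpy generator for such a balanced circles/squares dataset (not the code from the video): each image holds one filled shape with random size and position, and the labels alternate before shuffling, so the split is exactly 50/50 for an even number of images:

```python
import numpy as np


def make_shape(kind, size, rng):
    """Draw one filled circle or square (value 1.0) on a black size x size canvas."""
    image = np.zeros((size, size), dtype=np.float32)
    r = rng.randint(size // 8, size // 4 + 1)      # radius / half side length
    cx, cy = rng.randint(r, size - r, size=2)      # keep the whole shape inside
    if kind == "circle":
        yy, xx = np.mgrid[0:size, 0:size]
        image[(xx - cx) ** 2 + (yy - cy) ** 2 <= r ** 2] = 1.0
    else:
        image[cy - r:cy + r, cx - r:cx + r] = 1.0
    return image


def make_dataset(n, size=64, seed=0):
    """Images of shape (n, size, size, 1) and labels (1 = circle, 0 = square)."""
    rng = np.random.RandomState(seed)
    labels = np.arange(n) % 2                       # exactly 50/50 for even n
    rng.shuffle(labels)
    images = np.stack([make_shape("circle" if label else "square", size, rng)
                       for label in labels])
    return images[..., np.newaxis], labels
```

The trailing channel axis gives the (n, H, W, 1) layout a Keras Conv2D input expects.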

- It would be fun to train this model, and see if it could run on the TPU-stick.

- A U-Net application is this iris segmentation, with this GitHub page containing the download link of the pre-trained model.

- The U-Net code seems to originate from this site, which contains a Matlab interface.

January 24, 2023

- Tried to reproduce the histogram matching tutorial.

- Downloaded both images. The cloudy tower is 777x1036, the sunset version is 828x1342.

- The example fails on line 24: "'module' object has no attribute 'match_histograms'".

- The example is explicitly based on scikit-image==0.18.1. With python2.7 the maximum version of scikit-image is 0.14.5. In Anaconda, version 0.14.2 is installed.

- The documentation of this function is here. There is no mention of when this function was included, but a link to the source code of the function (and the algorithm) is given.

- In principle, the provided code seems to work, although the matched image is empty when displayed. Strange, because each of the channels is matched with the match_cumulative_cdf() function.

- Tried my WSL version of Ubuntu 18.04. Did python3 -m pip install opencv-contrib-python, which installs v4.7.0.68. This install fails on missing openjpeg. Tried again with Ubuntu 20.04, which works out of the box. Also installed scikit-image==0.18.1 without problems. Running fails on cv.gapi.wip.GStreamerPipeline = cv.gapi_wip_gst_GStreamerPipeline

AttributeError: partially initialized module 'cv2' has no attribute 'gapi_wip_gst_GStreamerPipeline'

- Ran the example code on nb-dual's native Ubuntu 20.04, which gives the expected result.

- With Moos, recorded an image with and without floodlights. This is the result: the source without floodlights, the reference without and the matched result:

- Checked the white in the middle. The source RGB is (220,216,228), the reference (216,210,219), and matched (215,210,221). So, the RGB shift is (5,6,7).

- Let's check if the GitHub code works for python3.8. Was able to make it work for python3.8. Could call the function with python2.7, but had to remove the keyword-only marker '*, ' from the signature of the match_histograms function. The result is a grey-striped image:

- Actually, on Dec 20, 2018 the histogram_matching was moved from skimage/transform to exposure. At that time, the function was not using the variable argument yet. Further, not much has changed.

- It looks like the returned image is emptied and afterwards not filled by _match_cumulative_cdf(). For both python2 and python3, the cumulative CDF is called for unsigned integers; before that, the other option was chosen. Yet, with the other option the result also stays gray.

- When I switch to not multi-channel, also the python3 matched image becomes white.

- Tried whether I could use, with scikit-image 0.14.5, the match_histograms from skimage.transform. Yet, here too: "'module' object has no attribute 'match_histograms'".

- Added the cdf_test, but that works.

- Found a difference in the match_cumulative_cdf. In the modern code source.reshape(-1) is used, while in the original code source.ravel() is used. Still, same result.

January 23, 2023

- Continue with section 7.1.5 (Modeling mirror neurons in the macaque), of From Cybernetics to Brain Theory (Arbib's memoir, 2018).

- Inspired by a cat retrieving food from a glass pipe, the Augmented Competitive Queueing model was developed. ACQ makes two evaluations for each action: "Each time an action is performed successfully, its desirability is updated while executability may be left as is or increased. However, when the action is unsuccessful, executability of the intended action is reduced while desirability of the apparent action is adjusted by TD-learning."
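A toy Python sketch of the two ACQ values as described in the quote, assuming a plain TD(0) update for desirability and priority = desirability x executability for the competitive queue. The class, parameter names and step sizes are hypothetical; this is not the published model's set of equations:

```python
class ACQAction(object):
    """One action with the two ACQ values (toy sketch, hypothetical names)."""

    def __init__(self, name, desirability=0.0, executability=1.0):
        self.name = name
        self.desirability = desirability    # how useful the action is expected to be
        self.executability = executability  # how likely it is to be executable now


def select_action(actions):
    """Competitive queueing: the action with the highest priority wins."""
    return max(actions, key=lambda a: a.desirability * a.executability)


def acq_update(intended, apparent, success, reward, next_value,
               alpha=0.1, gamma=0.9, exec_step=0.1):
    """Apply the two rules from the quote; returns the TD error used.

    success: TD-update the desirability of the performed action and raise
             its executability (the quote allows leaving it unchanged).
    failure: lower the executability of the intended action and TD-update
             the desirability of the apparent action instead.
    """
    target = intended if success else apparent
    td_error = reward + gamma * next_value - target.desirability
    target.desirability += alpha * td_error
    if success:
        intended.executability = min(1.0, intended.executability + exec_step)
    else:
        intended.executability = max(0.0, intended.executability - exec_step)
    return td_error
```

Keeping the two values separate lets a failed attempt lower "can I do this now" without destroying "is this worth doing".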

- The mirror system hypothesis is based on the observation that the mirror system for grasping and the parity property of language both reside in Broca's area (the human homologue of macaque area F5, which contains the mirror neurons for grasping). This hypothesis is supported by sign language and co-speech gestures.

-

- Could look at this tutorial to do color correction (with a color balancing card).

- Between images, it is also possible to do histogram matching or histogram equalization. The latter works on grayscale images, so it should be done for each of the channels separately.

- The histogram matching is color based.

- The color balancing card tutorial builds on the tutorial on detecting low-contrast images.

January 20, 2023

- Arbib had difficulty combining networks of finite automata and linear systems; this could be solved with functors (from category theory), an approach that was later extended to probabilistic automata.

- Rana computatrix is only described in section 4.2 "My frog period".

- The journal Biological Cybernetics still exists.

- The toad experiments nicely show the importance of subsumption. The pretectum, which detects large moving objects, not only activates Avoid but also suppresses Snap:

Fig. 2. Two schema-level models of approach and avoidance in the toad. Model B is more robust in that it can handle data on lesion of the pretectum as well as observations on normal behavior.
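A toy boolean version of the schema diagram, assuming the tectum responds to any moving object and the pretectum only to large ones. Removing the pretectum (the lesion flag) releases the inhibition, so a large object elicits snapping instead of avoidance; the names are hypothetical:

```python
def toad_schemas(moving_object, large, pretectum_lesioned=False):
    """Toy schema-level model of approach and avoidance in the toad.

    Assumptions: the tectum responds to any moving object, the pretectum
    only to large ones; the pretectum activates Avoid and suppresses Snap.
    """
    tectum = moving_object
    pretectum = moving_object and large and not pretectum_lesioned
    snap = tectum and not pretectum     # suppression from the pretectum
    avoid = pretectum                   # activation from the pretectum
    return {"snap": snap, "avoid": avoid}


for stimulus, large in [("small prey", False), ("large object", True)]:
    print(stimulus, toad_schemas(True, large), toad_schemas(True, large, True))
```

The suppression link is what turns a pure "snap at moving things" reflex into a choice between two behaviors.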

- The 1998 book Conceptual Models of Neural Organization had three pillars: the Structural Approach, the Functional Approach and the Dynamical Approach. Schema Theory is part of the functional decomposition, where a model is created which "provides the basis for modeling the overall function by neural networks which plausibly implement (usually in a distributed fashion) the schemas in the brain".

- A nice aspect of his work “Perceptual structures and distributed motor control” (1981) was the explicit activation of both the visual search and the reaching:

(Left) A coordinated control program linking perceptual and motor schemas to represent this behavior. Solid lines show transfer of data; dashed lines show transfer of control. The transition from ballistic to slow reaching provides the control signal to initiate the enclose phase of the actual grasp. (Right) Preshaping of the hand while reaching to grasp (top); the position of the thumb-tip traced from successive frames shows a fast initial movement followed by a slow completion of the grasp (bottom). (Adapted from Jeannerod & Biguer, 1982.)
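A toy sketch of the coordinated control program in the caption, with a one-dimensional distance to the target. The ballistic phase covers a large fraction of the remaining distance per step, and the switch to slow reaching is also the control signal that moves the hand from preshape to enclose. All names and numbers are hypothetical:

```python
def reach_to_grasp(distance, switch_distance=0.1, fast_fraction=0.8,
                   slow_step=0.02, max_steps=200):
    """Toy coordinated control program; returns (arm_phase, hand_state) per step."""
    phase, hand = "ballistic", "preshape"   # hand preshapes during the fast phase
    trace = []
    for _ in range(max_steps):
        if distance <= 0.0:
            break                            # target reached: grasp completed
        if phase == "ballistic":
            distance -= fast_fraction * distance      # large, fast movement
            if distance <= switch_distance:
                # The ballistic -> slow transition is the control signal
                # that starts the enclose phase of the grasp.
                phase, hand = "slow", "enclose"
        else:
            distance = max(0.0, distance - slow_step) # slow completion
        trace.append((phase, hand))
    return trace


print(reach_to_grasp(1.0))
```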

- Should continue with section 7.1.5 (Modeling mirror neurons in the macaque), because that describes an illustration that I will use in my lecture.

-

- Note that you can schedule a system update from https://cloud.aldebaran-robotics.com/manage/. Yet, according to the interface Moos has v2.8.7.4, while the robot itself reports v2.8.5:

- In the history I see that on September 26, 2022 the 2.8.7.4 image was downloaded and installed, together with package plural-na 1.4.0. The last Check Update is November 17, 2022.

- Checked the qiBullet version of Softbank. They upgraded to qiBullet v1.4.0 three weeks ago. Yet, I see no sonar sensor yet in the documentation and tests. Note that the CameraSubscription is including a flag for TOP, just as for the current NaoQi 2.8.7 version.

January 19, 2023

- Nice new video of the Atlas robot, climbing up a construction site.

- The science behind this video is explained in a longer video.

-

- The story about 'What the frog's eye tells the frog' starts at page 22 of From Cybernetics to Brain Theory (Arbib's memoir, 2018).

- Arbib also wrote the foreword of Braitenberg's book Vehicles (1984). In 1962, Braitenberg worked on the propagation of signals spanned by Purkinje cells (see (b) in the center of Fig. 1):

Fig. 1. Three levels of magnification of a fragment of cerebellar cortex. As the magnification increases, we go from a little slice through the cortex, the outer sheet of the cerebellum, to the Purkinje cell, which is the output cell of the cerebellar cortex, to a fragment of dendritic branches showing the synapses as revealed under the electron microscope

January 18, 2023

- Figure 1 from From Cybernetics to Brain Theory (Arbib's memoir, 2018) could be useful, because it shows slices of brain at several magnification levels.

- The keywords look quite promising, but with 115 pages it will not be very useful for the course.

- On page 5 I found the note: "Another important encounter was with a Lecturer (essentially an assistant professor) in Physiology at Sydney University, Bill Levick. Bill was working on neurophysiology of the cat visual cortex. Our deal was that he would let me see what he and Professor Peter Bishop were doing to the cats and I would tutor him in mathematics. He introduced me to the then just published paper “What the Frog's Eye tells the Frog’s brain” by Lettvin, Maturana, McCulloch, & Pitts (1959). The work was inspired by the 1947 Pitts-McCulloch paper. Indeed, Lettvin et al found 4 types of feature detectors in the retina of the frog and showed that they projected to four separate layers in the tectum (the visual midbrain, homologous to the mammalian superior colliculus), but there was no evidence linking those layers to group theory. "

- On page 8 I found the quote: "The conceptually most important idea for me came from the work of Bill Kilmer, another visiting scholar, in developing a computational model, RETIC, to address McCulloch’s ideas on distributed computation, which were inspired in turn by Magoun’s (1952) work on the role of the reticular formation in the neural management of wakefulness and the findings of Madge and Arnold Scheibel describing its anatomy as a stack of “poker chips” or modules arrayed along the neuraxis (Scheibel & Scheibel, 1958). RETIC had to commit to one of several modes of behavior (e.g., sleep, eat, drink, fight, flee or mate) using modules that received different samples of sensory input and thus formed different initial estimates for the desirability of the various modes. The challenge was to connect the modules in such a way that modes would compete and modules would cooperate to yield a consensus that could commit the organism to action. This model – developed in the early 1960s though not published in full till 1969 (Kilmer, McCulloch, & Blum, 1969) – seems to me to exemplify a notion crucial for understanding the brain: a general methodology for cooperative computation whereby competition and cooperation between elements of a network can yield an overall decision without the necessary involvement of a centralized controller. In the 1970s I generalized this to yield a variant of in which the interacting units could be functional (schemas that were possibly implemented across multiple brain regions) and not necessarily structural (e.g., specific neurons or neural circuits)."

- So, schema theory was inspired by Bill Kilmer's RETIC model (31 pages). Note (Fig. 7) that this model also contained a long-term and short-term memory of events.

- Still inspired by "What the Frog's Eye tells the Frog's Brain", which looked at vision in terms of action, they were searching for universals. With the work of Hubel and Wiesel (1959-1977), they looked at edge detectors, and found "that visual processing cannot be purely hierarchical. To the extent that higher levels of processing may extract key properties of an object, person or scene, our awareness is still enriched by the scene’s lower-level aspects (e.g., shape, motion, color and texture)." That is the competition Arbib was looking for, between "general purpose" pattern recognition and Action-Oriented Perception. Looking forward to the section on Rana computatrix, the frog that computes.

- As a mathematician, Arbib also loved Kalman's approach to mathematical system theory (page 9).

- On a break in 1962, he was introduced to The Thinking Machine by C. Judson Herrick (1929) which provided another example of consideration of cybernetic issues well before the computer age.

- He wrote a 'state-of-the-art' book based on his knowledge in 1962. A nice aspect is that he can now reflect on what he had seen and what he had omitted at that time. For instance on page 12: "Perhaps of most importance for the later emergence of cognitive science from cybernetic roots, all-too-briefly mentioned, was Plans and the Structure of Behavior by George Miller, Eugene Galanter and Karl Pribram (1960) which combined ideas from early AI and psychology with hierarchies of cybernetic feedback units they called Test-Operate-Test-Exit (TOTE) units."
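A TOTE unit maps directly onto a loop: test, operate while the test fails, and exit once it succeeds. A minimal sketch with Miller, Galanter and Pribram's hammering-a-nail example; the function name and the iteration guard are hypothetical:

```python
def tote(test, operate, max_iterations=100):
    """Test-Operate-Test-Exit: operate while the test signals incongruity.

    Returns the number of operate steps; the guard avoids an endless loop.
    """
    steps = 0
    while not test():                      # Test
        if steps >= max_iterations:
            raise RuntimeError("TOTE unit did not reach its exit condition")
        operate()                          # Operate, then Test again
        steps += 1
    return steps                           # Exit


# Hammering a nail: test "is the nail flush?", operate "hit it".
nail = {"height": 5}
hits = tote(lambda: nail["height"] <= 0,
            lambda: nail.update(height=nail["height"] - 2))
print(hits)   # 3
```

Nesting TOTE units (an operate step that is itself a TOTE loop) gives the hierarchy of feedback units mentioned in the quote.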

- George Miller was not only a founder of cognitive science; he also worked with “the early Chomsky” to carry out psycholinguistic studies suggesting that finite state automata were inadequate models for language (G. A. Miller & Chomsky, 1963), and he was well known for his classic paper “The magical number seven plus or minus two: some limits on our capacity for processing information” (G. A. Miller, 1956). His further importance to Arbib was that, while at MIT, Arbib applied to take part in the Proseminar on neuropsychology for new graduate students. He was refused because he was enrolled in Mathematics! But then Miller gave him permission to audit the Proseminar for Psychology graduate students at Harvard, which he did, greatly deepening his understanding of Psychology.

January 17, 2023

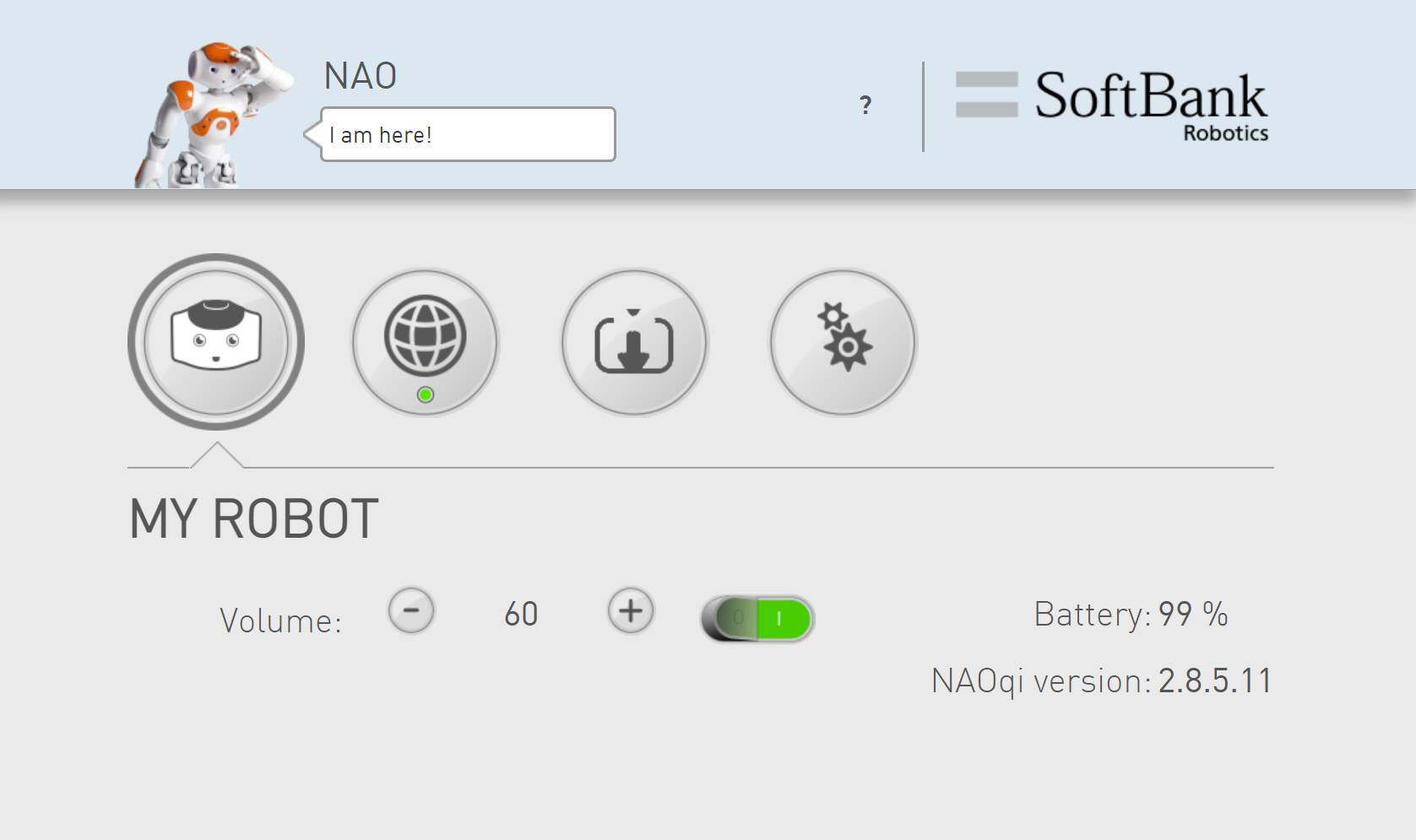

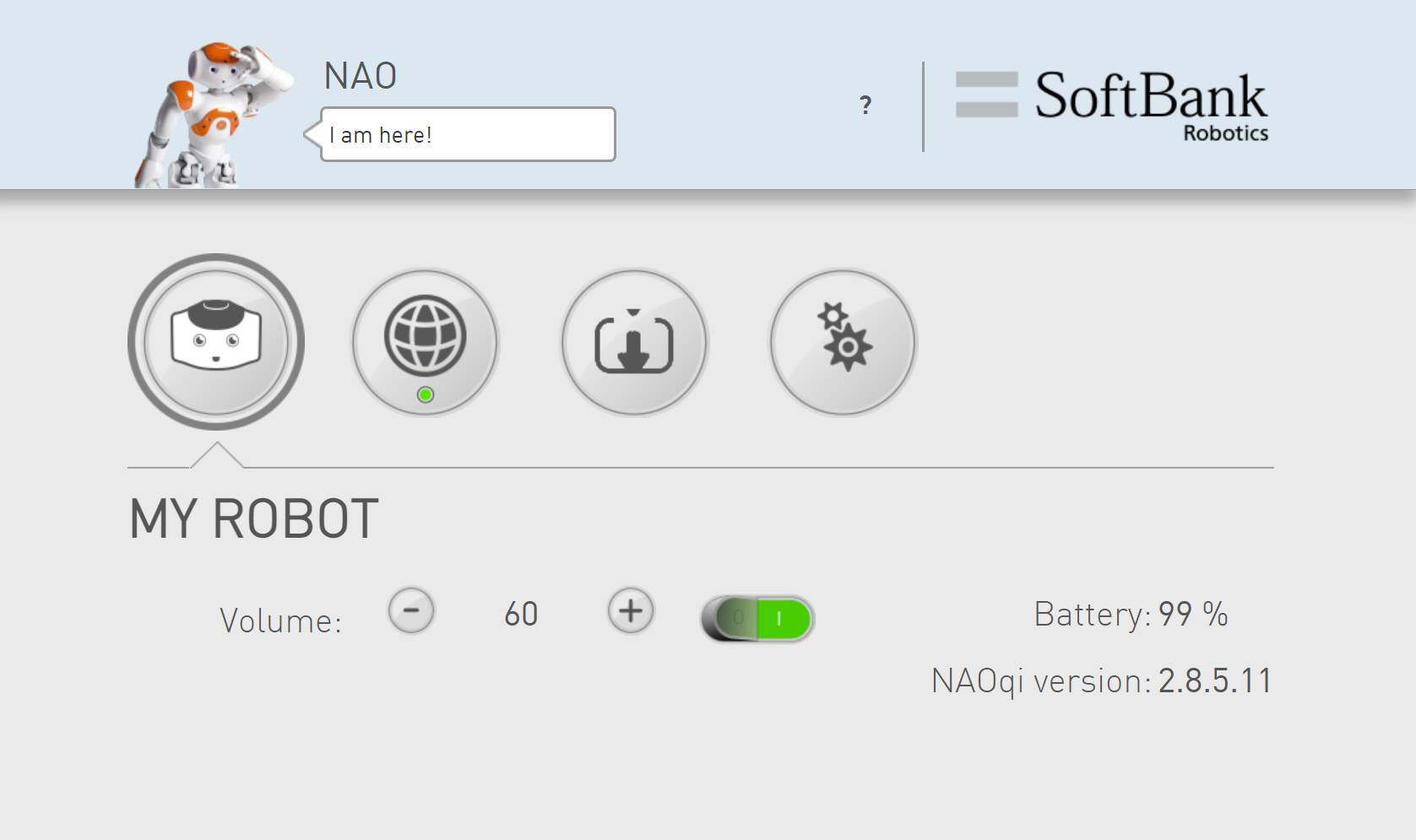

- Tested my code with self.globals.sysProxy.systemVersion() on Appa. This gives NaoQi 2.8.5.11 as answer.

- So, the difference in connecting to the VideoProxy is a difference in NaoQi version.

-

- I could check whether I can find anything useful in chapters 3 and 4 of Conscious Mind, Resonant Brain: How Each Brain Makes a Mind.

-

- Note that the three other optional Computational Psychobiology courses use Python and neural nets to recognize patterns. It would be nice to include a neural network assignment.

- In period 1 there is the course Foundations of Data Science and AI for the Neurosciences, which contains both an introduction to image processing (for spectral analysis) and an introduction to ANNs for classification in Python.

- In period 2 there is the course Building Brains with AI, where students learn to run simple NN simulations in Python.

- Also in period 2 there is the course Psychology and AI. I don't see any programming assignments, although they use the book by Melanie Mitchell, so they could use NetLogo.

January 16, 2023

- Two groups encounter an error with Phineas and Ferb when they call cSubscribe from tools_v3.py. At line 33 the top camera is chosen with setParam(18,1), which gives an error.

- Trying to reproduce that error with Appa.

- On Appa my code works fine; nine images are saved. The only warning is 'Could not switch off FallManager'.

- At the end two warnings are given:

Framework terminiated succesfully

[W] 1673860539.239084 10872 qitype.signal: disconnect: No subscription found for SignalLink 14.

[W] 1673860539.239084 8508 qitype.signal: disconnect: No subscription found for SignalLink 0

- Note that I used vision_v2 instead of vision_v3:

Module 'behaviour' not loaded.

Module 'motion_v6' loaded as 'motion'.

Module 'globals_v6' loaded as 'globals'.

Module 'sonar_v1' loaded as 'sonar'.

Main-Module loaded: 'main_v6' as 'main'.

Module 'tools_v3' loaded as 'tools'.

Module 'vision_v2' loaded as 'vision'.

- Ran the code with vision_v3 (I had to correct the class name in vision_v3), which also works.

- On Ferb this gives the error, so it seems to have to do with the flashed version.

- When I comment out the setParam, the next call (vidProxy.subscribe()) fails. So there is something wrong with the vidProxy ('Object' object).

- Did an ssh into Ferb and ran qicli info | grep Video (following this guide). That gives:

050 [ALVideoDevice]

060 [ALVideoRecorder]

- Continued and ran the command qicli info ALVideoDevice. The function setParam is no longer there (setParameter is listed instead), and subscribe is now subscribeCamera. I should check the release notes; the Changelog 2-8 mentions nothing on this topic.

- Checked the web interface, but deactivation of safety reflexes is allowed on Appa.

- Tested a new version of tools_v3. The code works if I use self.video_client = self.globals.vidProxy.subscribeCamera(client_name, 1, resolution, colorSpace, fps) instead of self.globals.vidProxy.subscribe(client_name, resolution, colorSpace, fps). This is documented in the NaoQi 2.8 documentation, but without Python code.
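Because the build on Ferb no longer offers subscribe, tools_v3 could probe the proxy for the newer method and fall back to the old one. Below is a minimal sketch of that idea, not tested on a robot. subscribe_video is a hypothetical helper name. It assumes that a method missing on the proxy simply shows up as a missing attribute (which matches the 'Object' object error above). I have not checked whether Appa's 2.8.5.11 also offers subscribeCamera, so the old call is kept as a fallback.

```python
def subscribe_video(vid_proxy, client_name, camera_index, resolution, color_space, fps):
    """Hypothetical helper: subscribe to the video device on old and new NaoQi 2.8 builds.

    Prefers subscribeCamera (the call that works on Ferb) and falls back to the
    older subscribe() when the proxy does not expose subscribeCamera.
    Assumption: a missing method is simply a missing attribute on the proxy.
    """
    subscribe_camera = getattr(vid_proxy, "subscribeCamera", None)
    if callable(subscribe_camera):
        # Same argument order as the working call in tools_v3
        return subscribe_camera(client_name, camera_index, resolution, color_space, fps)
    # Older API: no camera index argument, so the camera has to be selected
    # separately beforehand (tools_v3 did that with setParam(18, 1))
    return vid_proxy.subscribe(client_name, resolution, color_space, fps)
```

In tools_v3 the subscription would then read self.video_client = subscribe_video(self.globals.vidProxy, client_name, 1, resolution, colorSpace, fps). Probing for the method, rather than comparing version numbers, avoids having to know in which 2.8 release the API changed.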

-

- Checking what goes wrong with globals when the code is run in Spyder. globals_v6 contains an app.start().

- The problem lies more in setting the dependencies. The original error was:

Application was already initialized

Error: (setDependencies) problem while loading dependencies of module 'globals'

- Changed the calls to exit into sys.exit. The error is clearly in the function setDependencies. Included an unSetDependencies in the error handling, but this fails in the next module:

motion_v6 instance has no attribute 'unsetDependencies'

Error: (setDependencies) problem while loading dependencies of module 'motion'

An exception has occurred, use %tb to see the full traceback.

SystemExit: 4
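This second error suggests that the error handler calls a method that not every module defines. A defensive variant calls it only where it exists. This is a sketch under assumptions: the function name and the list of (name, module) pairs are hypothetical. It uses the spelling unsetDependencies from the error message, while the note above writes unSetDependencies, so it is worth checking which spelling the modules actually use.

```python
def unset_loaded_dependencies(modules):
    """Hypothetical cleanup for the error handler around setDependencies.

    modules: list of (name, module_object) pairs, in the order they were loaded.
    Calls unsetDependencies() only on modules that define it and returns the
    names of the modules that were skipped.
    """
    skipped = []
    # Tear down in reverse load order, so later modules release first
    for name, module in reversed(modules):
        unset = getattr(module, "unsetDependencies", None)
        if callable(unset):
            unset()
        else:
            skipped.append(name)
    return skipped
```

Returning the skipped names, instead of raising, keeps the original setDependencies error as the one that gets reported.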

- Now running self.globals.sysProxy.systemVersion() in main_v7.py. It prints v2.8.7.4!

- Looked in this post, and tried the following code:

sp = ALProxy("ALServiceManager", "ferb.local", 9559 )

sp.stopService("ALServiceManager")

sp.startService("ALServiceManager")

- That worked once, but after an error it gave 'NoneType' object is not callable.

- Looked into the code of pynaoqi-2.8.7.4\lib\qi\__init__.py. At line 154 is the function that raises the exception "Application was already initialized".

- There is also a _stopApplication function, which is registered with atexit (line 183). It is not directly callable.

- The init itself loads __qi.pyd, so it is not further accessible.

- As this atexit page indicates, atexit handlers are not called when a Python fatal error is detected.

- Note that during startup the following modules are reloaded: inaoqi, qi._binder, motion, naoqi, _allog, _qi, qi.path, _inaoqi, allog, qi._type, qi, qi.translator, qi.logging. Used from inspect import getmembers, isfunction, followed by print(getmembers(qi, isfunction)). For instance, the previously discovered _stopApplication function shows up, but nothing else useful.
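The list of reloaded modules looks like the output of Spyder's User Module Reloader (UMR), which re-imports modules on every run. My guess (not verified) is that the Python side of qi is reloaded while the compiled part still remembers the Application from the previous run, hence "Application was already initialized". One possible workaround is to keep the application object somewhere that survives these reloads, such as the builtins module, and reuse it. A minimal sketch; get_or_create_app and the attribute name are hypothetical:

```python
try:
    import builtins  # Python 3
except ImportError:
    import __builtin__ as builtins  # Python 2.7, as used by pynaoqi

# Hypothetical attribute name; the builtins module itself is not reloaded by UMR
_APP_ATTR = "_labbook_qi_application"


def get_or_create_app(factory):
    """Return the application cached in builtins, creating it only once.

    factory: zero-argument callable that builds the application, e.g. a lambda
    around qi.Application(...). It is only called on the first run in a given
    interpreter process; later runs get the cached object back.
    """
    app = getattr(builtins, _APP_ATTR, None)
    if app is None:
        app = factory()
        setattr(builtins, _APP_ATTR, app)
    return app
```

In globals_v6 this could wrap the existing creation, e.g. app = get_or_create_app(lambda: qi.Application(["main", "--qi-url=tcp://ferb.local:9559"])), following the argument pattern of the Aldebaran Python examples. Whether calling app.start() a second time on the reused object is harmless is untested. Alternatives are disabling UMR in Spyder's preferences or restarting the console before each run.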

January 13, 2023

- Connected Mio to LAB42 and switched the Fall manager off. Mio is running NaoQi v2.1.4.

January 11, 2023

- Successfully gave permissions to all qi libraries following the tips of this post. All three Macs can now run naoqi.

- Phineas complains that its right leg is too hot. Couldn't execute the Tai Chi.

- Jerry seems to have the same problem.

January 10, 2023

- Trying to flash Appa with the USB stick by following these instructions.

- Added Appa to the LAB42 network. The web interface still hangs on the wizard. I could circumvent that with my script (not tried yet). Allowed switching off the fall detection in the Advanced tab. On this page the version is still 2.8.5.11 (for both Appa and Moos).

- Created a small script to query the systemVersion with ALSystem.

- Checked /var/log/firmware/firmware.log on Moos. The chestboard indicated 'nothing to do', followed by Softversion 2.8.5 and Hardware version 6.0.0.

- The opn-image used for flashing contains both scripts and binary code. In the method get_image_vesion() the version of the image is checked with hexdump FACTORY_RESET_2.8.7.4_20210820_094013.opn -n8 -s192 -v. This returns 00000c0 0200 0800 0700 0400, which seems to indicate v2.8.7.4 (as expected).
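The same version check can be done in Python. Plain hexdump prints 16-bit words in the byte order of the host (little-endian on a PC), so 0200 means the bytes on disk are 00 02, i.e. the value 2 stored big-endian; the offset 192 is 0xc0, matching the 00000c0 in the output. The sketch below assumes, based only on this one hexdump and not on any specification of the .opn format, that offset 192 holds four big-endian 16-bit numbers. read_opn_version is a hypothetical name.

```python
import struct


def read_opn_version(path, offset=192):
    """Read the image version stored in an .opn file.

    Assumption (from the single hexdump above, not from a spec): at byte
    offset 192 the file holds four big-endian unsigned 16-bit numbers,
    e.g. 2, 8, 7, 4 for v2.8.7.4.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read(8)
    if len(data) != 8:
        raise ValueError("file too short to contain a version at offset %d" % offset)
    # ">4H": big-endian, four unsigned shorts
    return ".".join(str(part) for part in struct.unpack(">4H", data))
```

For the image above this should give "2.8.7.4", which makes it easy to check several sticks or downloads without reading hex by eye.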

- Could try the script from January 24, 2022 to see what the main webinterface says.

- On Sam, did a wget and executed the set wizard state script. I now have access to the main web interface (but v2.8.5.11 is still reported).

- Looked at Aldebaran software downloads, but Choregraphe is only available for v2.8.6.23!

- Let's try to do the firmware update via Choregraphe v2.8.6.23 on Momo.

- Momo already has v2.8.5.11, so I only switched off the DNT software.

- Updated the name of moos with this set_name script.

- Strangely enough, this same script does not work on appa.

- Tried a version based on a Qi-session, but session.service("ALProxy") said 'Cannot find service 'ALProxy' in index'.

- However, setRobotName is now part of the 'ALSystem' service, so v2 of set_name works.
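A sketch of the qi-session route for both ALSystem calls (the version query and setRobotName). session.service() takes a service name such as 'ALSystem'. ALProxy is presumably not found because it is the proxy class of the older naoqi Python module rather than a registered service. The helper names and the example host are hypothetical. parse_version turns the version string into a tuple of integers so that versions compare numerically; as strings, 2.8.10 would sort before 2.8.9.

```python
def parse_version(version_string):
    """'2.8.5.11' (or 'v2.8.7.4') -> (2, 8, 5, 11); assumes a purely dotted-numeric string."""
    return tuple(int(part) for part in version_string.lstrip("v").split("."))


def system_version(session):
    """Query ALSystem for the NaoQi version of the connected robot."""
    return parse_version(session.service("ALSystem").systemVersion())


def set_robot_name(session, name):
    """Rename the robot via ALSystem, where setRobotName lives in NaoQi 2.8."""
    return session.service("ALSystem").setRobotName(name)


# Usage on a real robot (needs the pynaoqi SDK; host name is an example):
#   import qi
#   session = qi.Session()
#   session.connect("tcp://moos.local:9559")
#   print(system_version(session))
#   set_robot_name(session, "moos")
```

With the tuples, the Appa/Ferb difference becomes a plain comparison: (2, 8, 5, 11) < (2, 8, 7, 4).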

- Momo boots nicely with DNT switched off, but no Wi-Fi scan is possible in the web interface (tried 2x). Going to flash Momo. Momo complains that the image was corrupted and that the system is still running v2.8.5.11, but that the factory reset succeeded.

- Yet now I could scan and deactivate the safety reflexes. Momo is also ready to go.

- Tried to update Phineas, but it is not clear where the update fails. Maybe a corrupt opn image, so the system is still in HULKS mode.

- In Choregraphe only appa, momo and moos are visible. Pinging nao.local or phineas.local also doesn't work.

- Also booted Ferb (without flashing). Ferb's ears are blue, and the chest button is white. Phineas's chest button is red.

- Tried to connect to Ferb via SPL_A and IP 10.0.24.23. That works. Yet Choregraphe could not connect, so I have to try to flash it with a stick.

- Tried the v2.1.4.13 stick that lay with WS5, but that is an image for a Nao v5.

- Did a search on my home-computer for opn in September 14, 2021, but v2.1.4.13 seems the most recent I could find.

January 9, 2023

- Checked the links in 'How to install (Windows)'. Updated the link to the Conda Cheat Sheet, which is for version 4.14.0.

- In Anaconda2 I actually use conda v4.7.12. Still, the v4.14 and v4.12 cheat sheets are much better than the v4.6 version.

- Updated the documentation on OpenCV image processing to the topics from Image Processing in OpenCV.

- These tutorials are based on a GitHub repository (only one branch). Yet, this seems mainly a copy of the official tutorials.

- Linked to the official versions (which have version specified): Image filtering and Hough circles (both for v4.2.0).

-

- One of the Festo highlights of 2018-2021 is their Education Kit, which can be programmed with Open Roberta Lab. This visual programming environment (like Blockly) also has a Nao configuration.

January 5, 2023

- Followed the instructions to make a bootable flash-stick from the Pepper-stick.

- Unpacked flasher-2.1.0.19 and selected the v2.8.7.4 opn to flash.

- A Nao v4 image was detected, but writing fails because the stick already had a file system.

- The instructions suggested DISKPART, but instead I used Overwrite format from SD Card formatter. That didn't help.

- Doing the clean via diskpart worked fine. Bootable flash-stick is made.

-

- Continued with making a mask for motion. Downloaded lastsnap.png from the RaspberryPi stick.

- Started Anaconda2 prompt and did conda activate opencv4.3.

- Calling jupyter notebook didn't work (I used Anaconda3 for the OpenCV Crash Course), and installing it fails on the package maturin, which requires the latest setuptools.

- The problem was not in maturin (selected v0.7.6 instead of v0.11.4), but in the package pywinpty (selected v0.5.7 instead of v1.1.0). With these two older package versions jupyter could be installed.

- The notebook starts. Running the first cell of Module 4 of the CrashCourse fails on missing matplotlib.

- Installed matplotlib and restarted the notebook. Running the first cell of Module 4 of the CrashCourse now fails on missing PIL. Installed Pillow. Now the first and second cell could be executed.

- Made a notebook where I first draw four lines with cv2.line, so that I could find the crossings that define the polygon (marked with cv2.drawMarker). The 5 points could then be combined to draw the ROI with cv2.fillConvexPoly, where the points have to be an np.array.

- The mask has to be saved as a BINARY 8-bit pgm file, which seems not to be the default.

- Following the suggestions of this post, I tried to save it with cv2.imwrite("mask.pgm", mask, (cv2.IMWRITE_PXM_BINARY, 0)). Quite a large file for a mask, but the image is 1600x1200. I will test it on the Raspberry Pi.

- Edited the /etc/motion.cfg. Added a line with mask_file /etc/motion/mask.pgm. Also switched setup_mode on. For debugging, also added lines smart_max_speed 1 and picture_output_motion on. No mask is visible in the debug images (no red mask, only blue motion).

- Looked into /var/log/motion/motion.log, where I see the error-line [ERR] [ALL] [Jan 05 15:23:45] motion_init: Failed to read mask image. Mask feature disabled.

- Created the pgm file again, this time with cv2.imwrite("binary_mask.pgm", mask, (cv2.IMWRITE_PXM_BINARY, 1)). Now binary_mask.pgm could be read.
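For reference, here are the two mask steps above sketched without OpenCV, so that the arithmetic and the file format are visible. line_intersection computes where two of the drawn lines cross, using the standard determinant formula for two lines each given by two points. write_binary_pgm writes the binary 'P5' variant of PGM, in which a short text header is followed by raw bytes. The ASCII variant ('P2') stores every pixel as text, which would also explain why the first file was so large. If I read the OpenCV documentation correctly, IMWRITE_PXM_BINARY defaults to 1, so passing 0 explicitly requested the ASCII format. All function names are hypothetical; in the notebook, cv2.fillConvexPoly still does the filling.

```python
def line_intersection(p1, p2, p3, p4):
    """Crossing point of the line through p1, p2 with the line through p3, p4.

    Points are (x, y) pixel coordinates; the lines are treated as infinite.
    """
    (x1, y1), (x2, y2) = p1, p2
    (x3, y3), (x4, y4) = p3, p4
    denom = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if denom == 0:
        raise ValueError("lines are parallel")
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    x = (a * (x3 - x4) - (x1 - x2) * b) / float(denom)
    y = (a * (y3 - y4) - (y1 - y2) * b) / float(denom)
    # Round to whole pixels, ready for cv2.drawMarker / cv2.fillConvexPoly
    return int(round(x)), int(round(y))


def write_binary_pgm(path, mask):
    """Write an 8-bit mask as binary PGM (P5).

    mask: list of rows of ints 0..255, or a 2-D NumPy array with dtype uint8
    (other dtypes would be copied byte-for-byte and give a wrong file).
    """
    rows = [bytearray(row) for row in mask]
    height = len(rows)
    width = len(rows[0]) if rows else 0
    if any(len(row) != width for row in rows):
        raise ValueError("all rows must have the same length")
    with open(path, "wb") as f:
        # Header: magic number, width, height, maximum grey value
        f.write("P5\n{} {}\n255\n".format(width, height).encode("ascii"))
        for row in rows:
            f.write(row)


def pgm_magic(path):
    """Return the magic number: b'P5' for binary PGM, b'P2' for ASCII PGM."""
    with open(path, "rb") as f:
        return f.read(2)
```

pgm_magic gives a quick way to check, on the Raspberry Pi, which variant a mask file actually is before pointing mask_file at it.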

Previous Labbooks
