To be done:

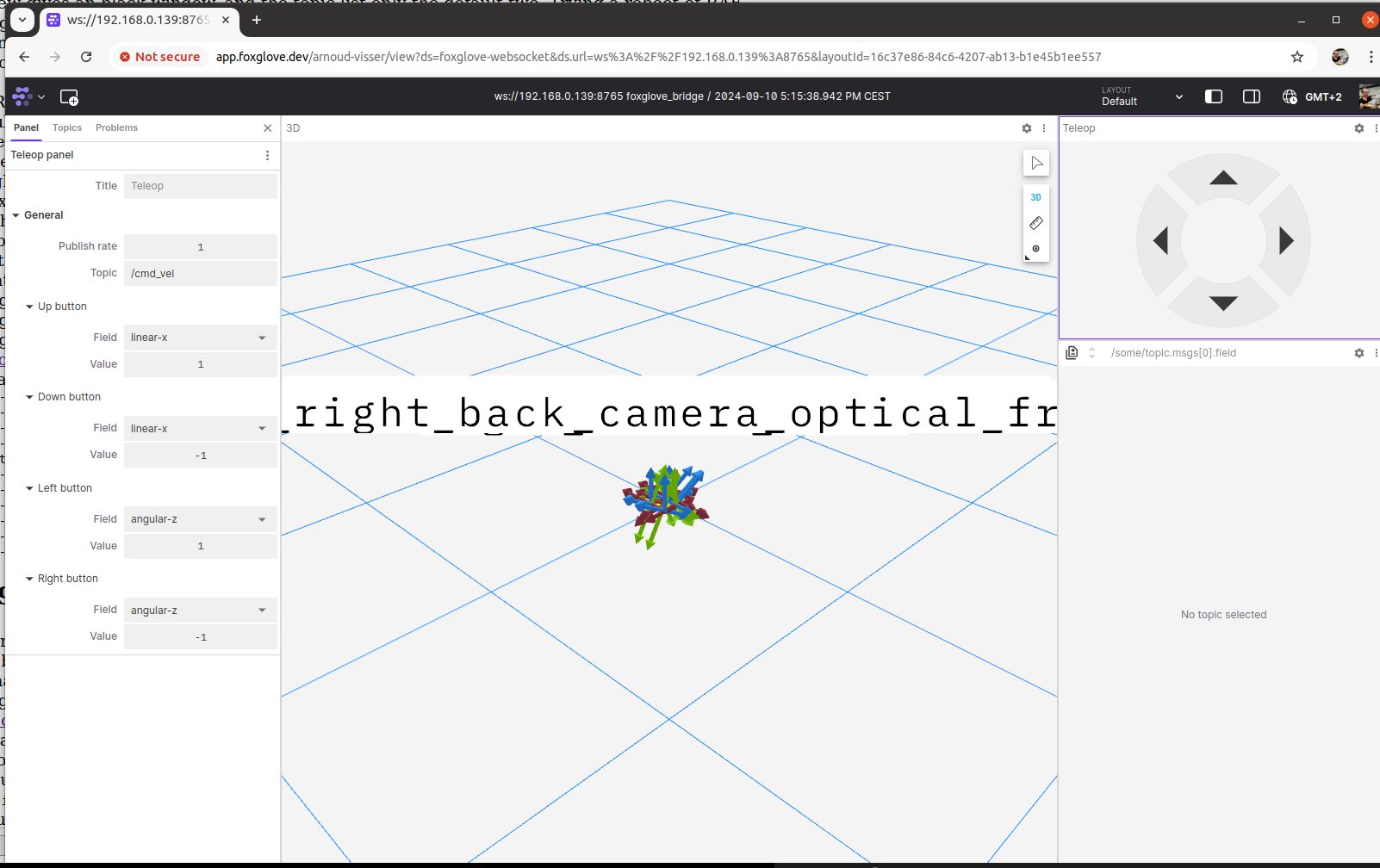

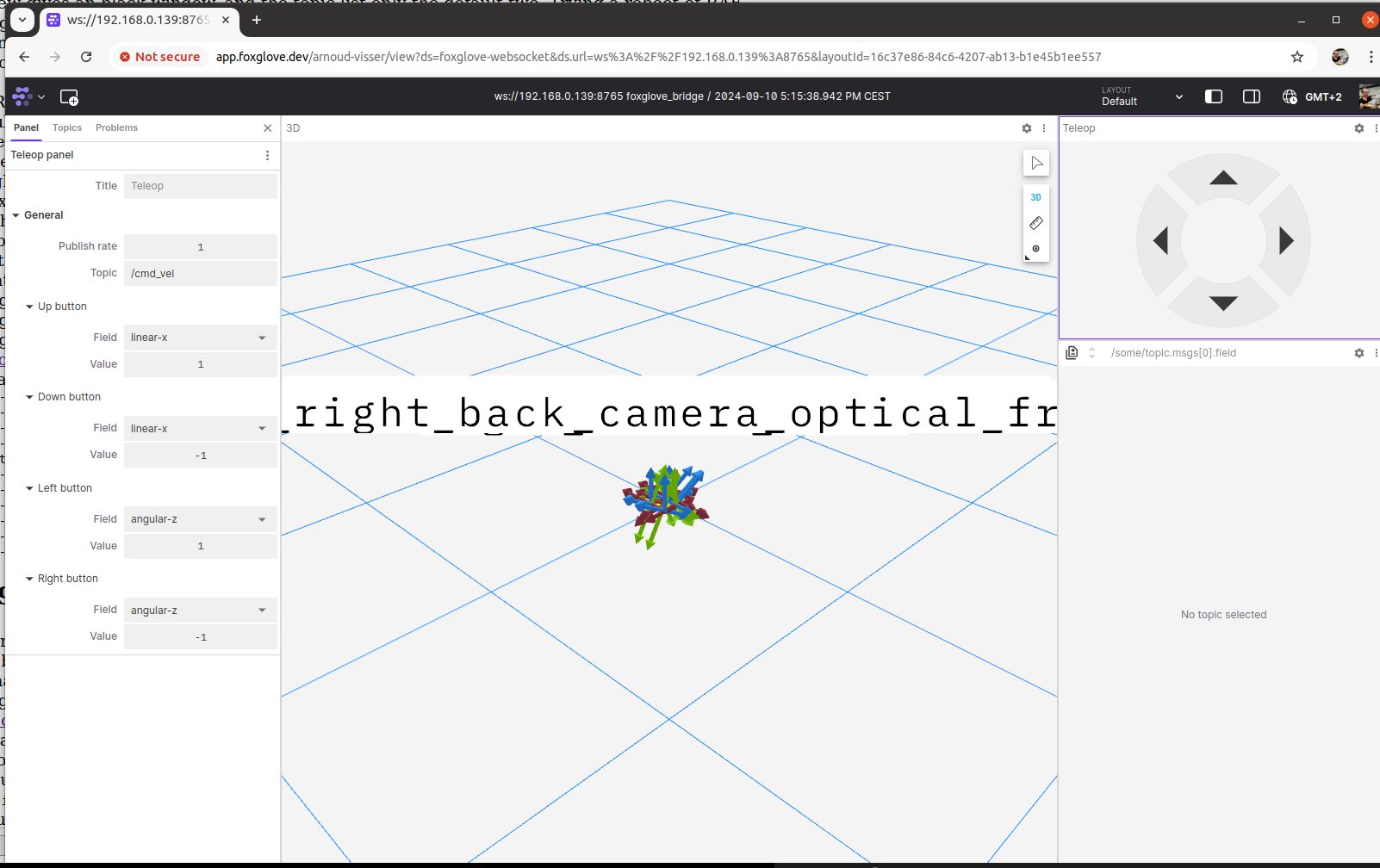

- Implement the book exercises in ROS2, which runs on RAE

Started Labbook 2025

December 23, 2024

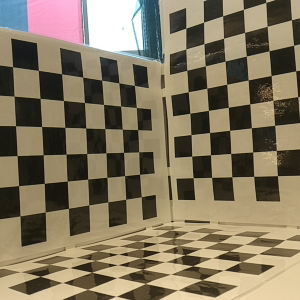

- Downloaded the small maze dataset, which contains 99 images.

- There are three steps. The first step (matching with the SiftGPU plugin) works well; the second step (sparse reconstruction) fails because libpba.so is missing.

- Added the instruction cp bin/libpba_no_gpu.so ../vsfm/bin/libpba.so to the TechReport IRL-UVA-24-02.

- Also had a minor warning, which can be ignored:

(VisualSFM:193033): GLib-GObject-WARNING **: 10:00:12.964: ../../../gobject/gsignal.c:2081: type 'GtkRecentManager' is already overridden for signal id '349'

- Found Scott M. Sawyer's MIT Lincoln Laboratory webpage again. It points to his personal website 10flow.com, which still exists.


- Should try to run VisualSfM on the bridge-1.zip dataset, which was in Peter Corke's section 14.8.3 (Visual Odometry).

December 18, 2024

- On ws9, the colcon_cd functionality I installed in October is no longer in /usr/share/colcon_cd/. Removed the lines in .bashrc.

- I am building pmvs-2, graclus-1.2 and cmvs with g++ version 12.3.0.

- Tried it without modifying bundle.cc and genOption.cc, but that fails.

- Used sudo update-alternatives --config g++ to switch to version 11.4.0. Fewer errors, but still not compiling. Same for version 9.5.0.

- Had to add deb [allow-insecure=yes] http://archive.ubuntu.com/ubuntu/ bionic main universe to /etc/apt/sources.list to be able to do sudo apt install g++-5.

- This still fails with option -std=c++0x, but without this option g++-5 can compile cmvs.

- I can start VisualSFM, although I get the warning: Failed to load module "canberra-gtk-module".

December 17, 2024

- Looked at what I did on December 2 with ~/packages/pmvs-2/program/base/numeric/clapack. I just unpacked clapack.tgz to the directory CLAPACK-3.2.1, without changing the Makefile or running make. Having just the code seems to be enough.

- The trick that I seem to have used is to make a symbolic link called clapack in ~/packages/pmvs-2/program/main/include to ~/packages/pmvs-2/program/base/numeric/clapack/CLAPACK-3.2.1/INCLUDE.

- Updated the TechReport in Overleaf; will test it tomorrow on ws9.
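The link described above could be recreated with a few lines of Python. This is a sketch, not the command that was actually used: the function name link_clapack_headers is hypothetical, and the relative paths are taken from the notes above.

```python
from pathlib import Path

def link_clapack_headers(pmvs_root):
    """Create program/main/include/clapack -> CLAPACK-3.2.1/INCLUDE under pmvs_root."""
    root = Path(pmvs_root).expanduser()
    target = root / "program/base/numeric/clapack/CLAPACK-3.2.1/INCLUDE"
    link = root / "program/main/include/clapack"
    if not target.is_dir():
        raise FileNotFoundError(f"CLAPACK headers not found at {target}")
    if link.is_symlink() or link.exists():
        return link  # leave an existing link or directory untouched
    link.parent.mkdir(parents=True, exist_ok=True)
    link.symlink_to(target, target_is_directory=True)
    return link
```

It would be called as link_clapack_headers("~/packages/pmvs-2"). The function only creates the link when the unpacked INCLUDE directory is present, so it is safe to rerun.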

December 16, 2024

- One of the teams used glomap, an improvement on colmap from the same developers, described in an ECCV 2024 paper.

- Impressive results, although OpenMVG is in most cases faster. Paper with code.

- Regard3D is a tool based on OpenMVG.

December 12, 2024

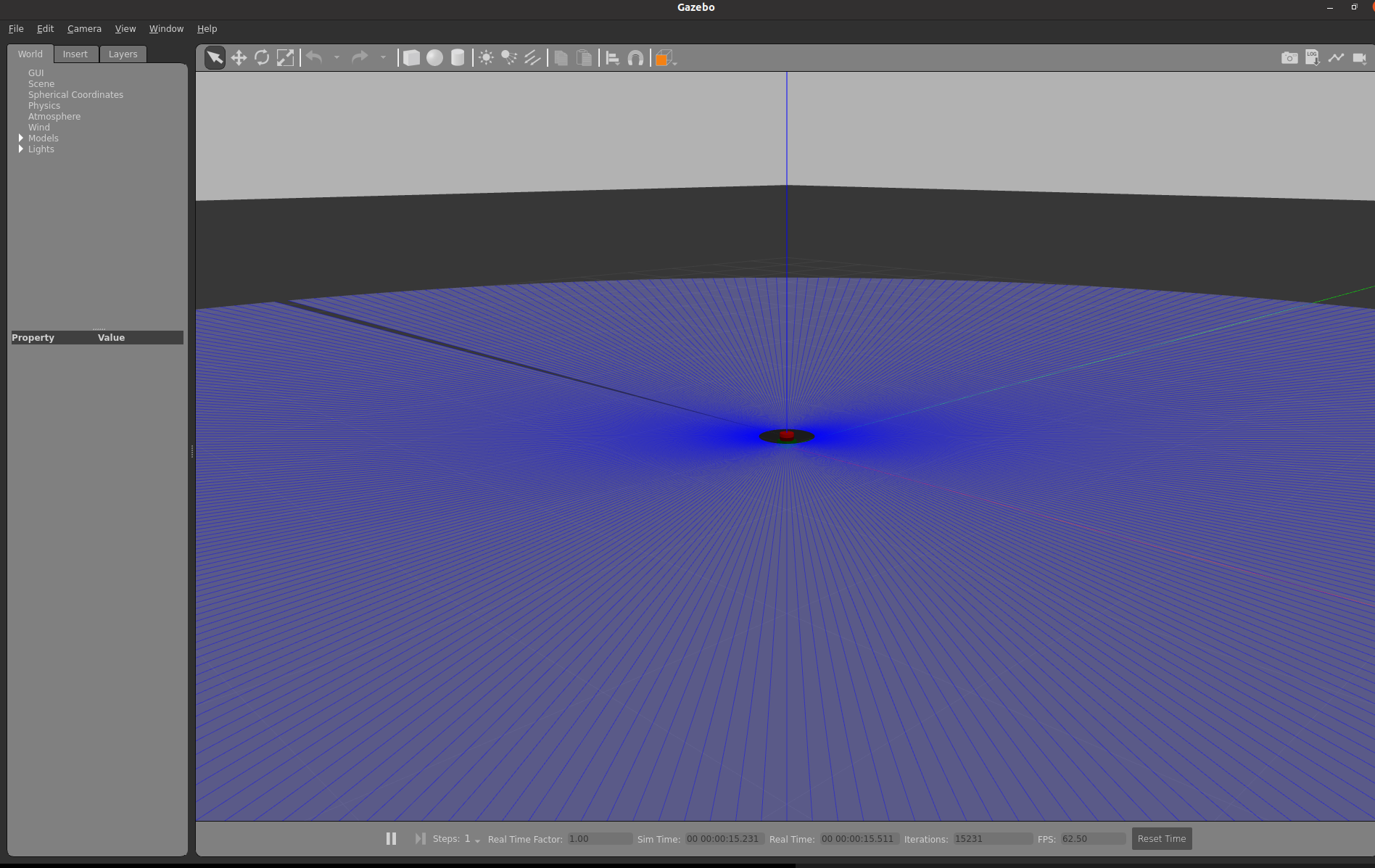

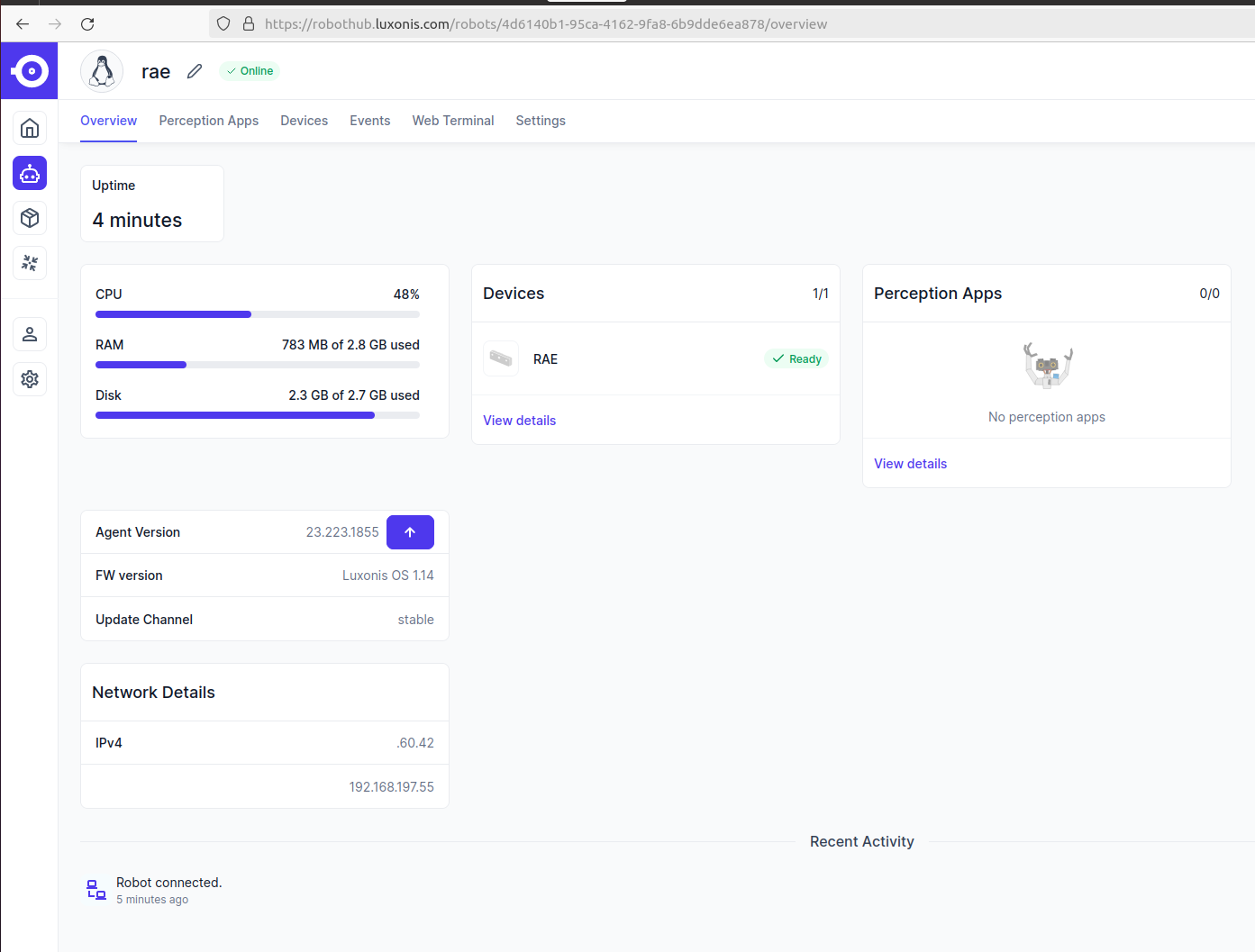

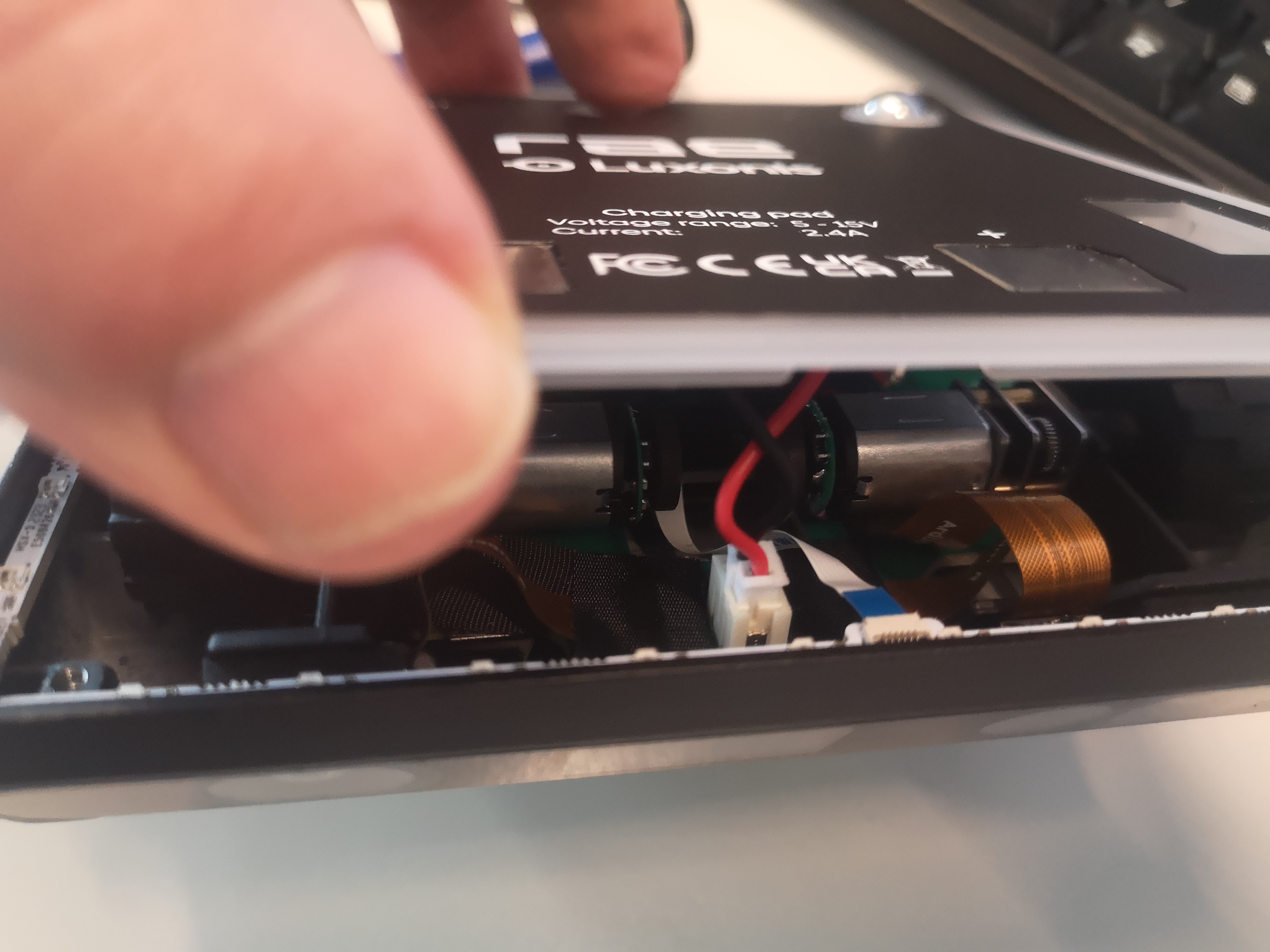

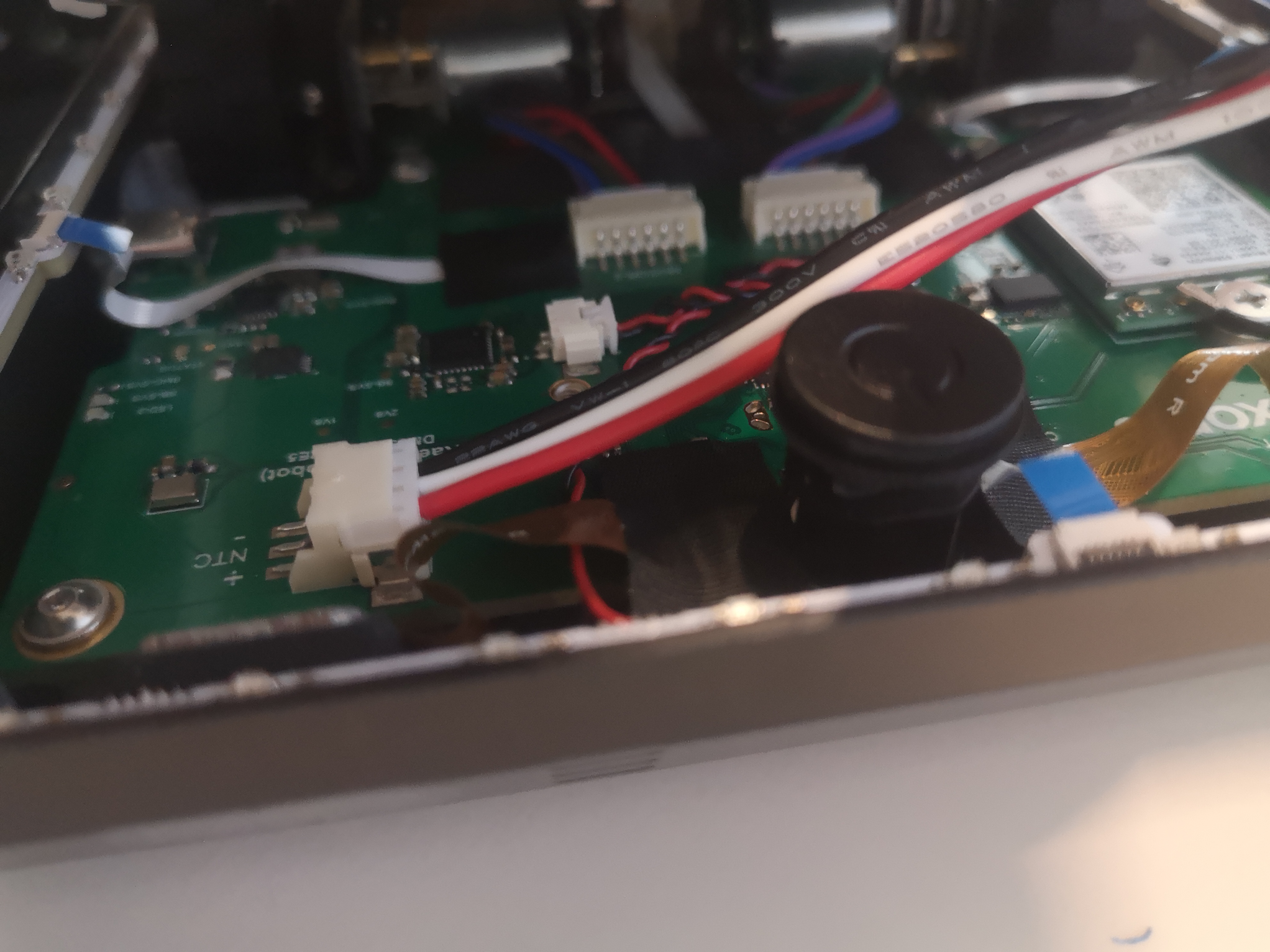

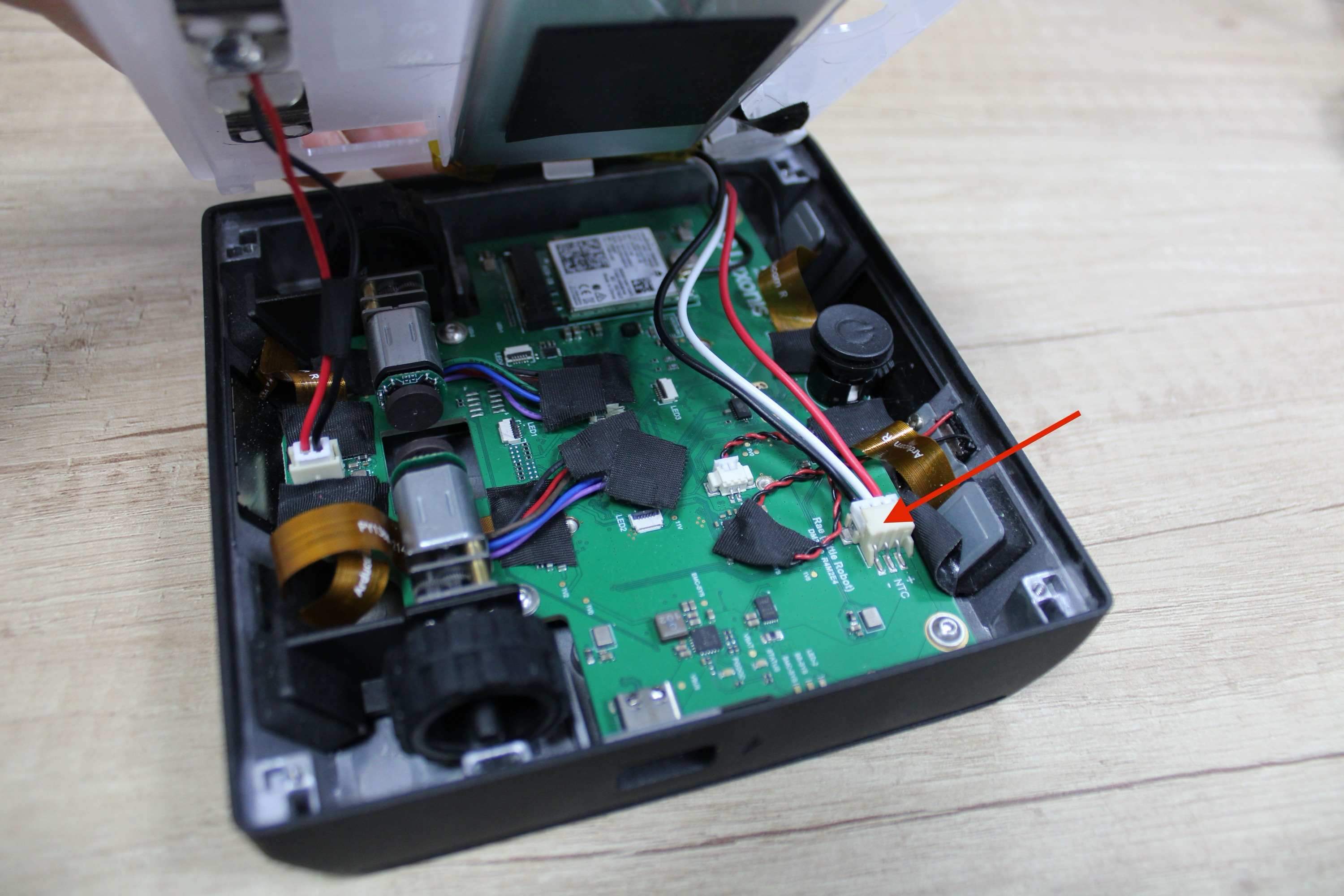

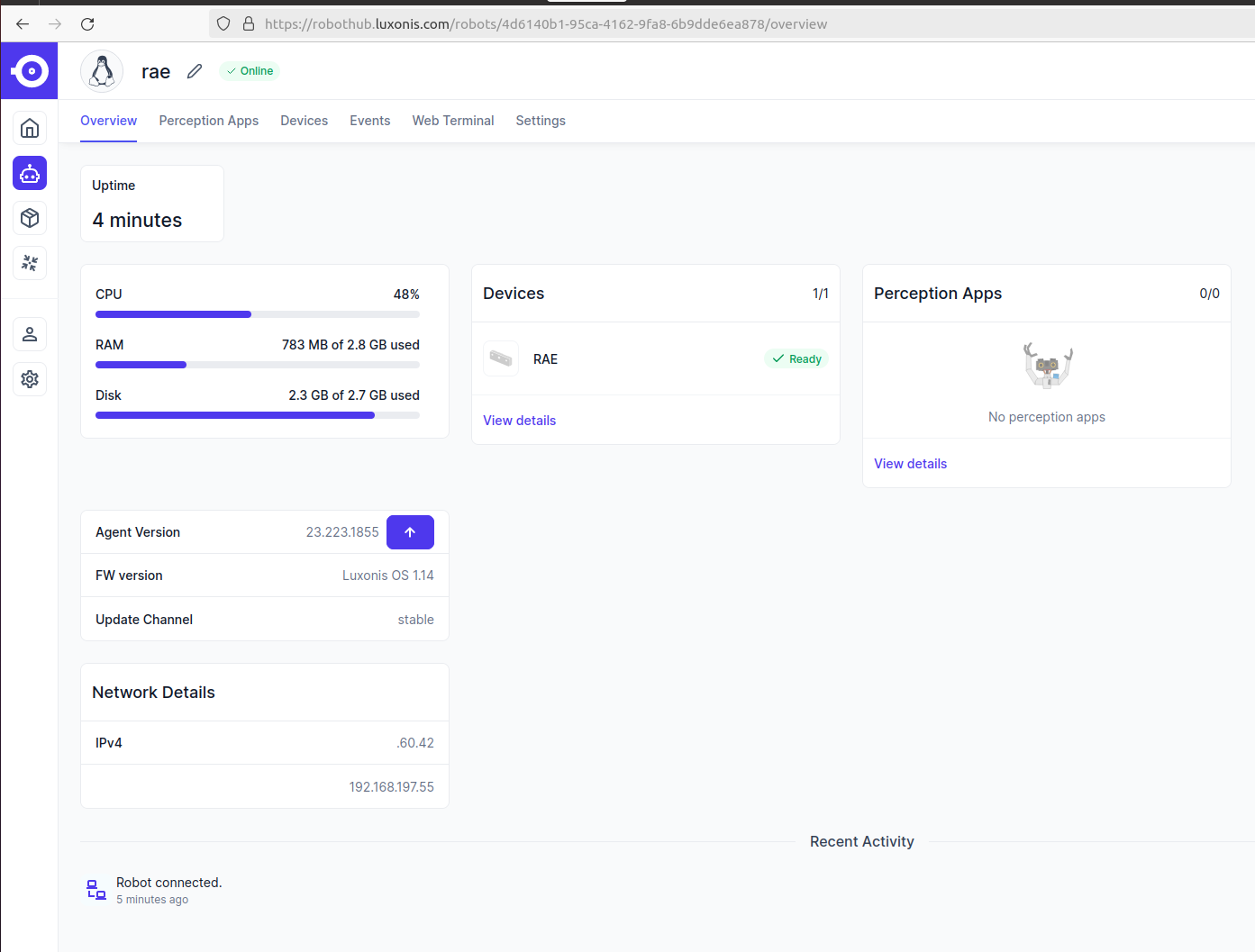

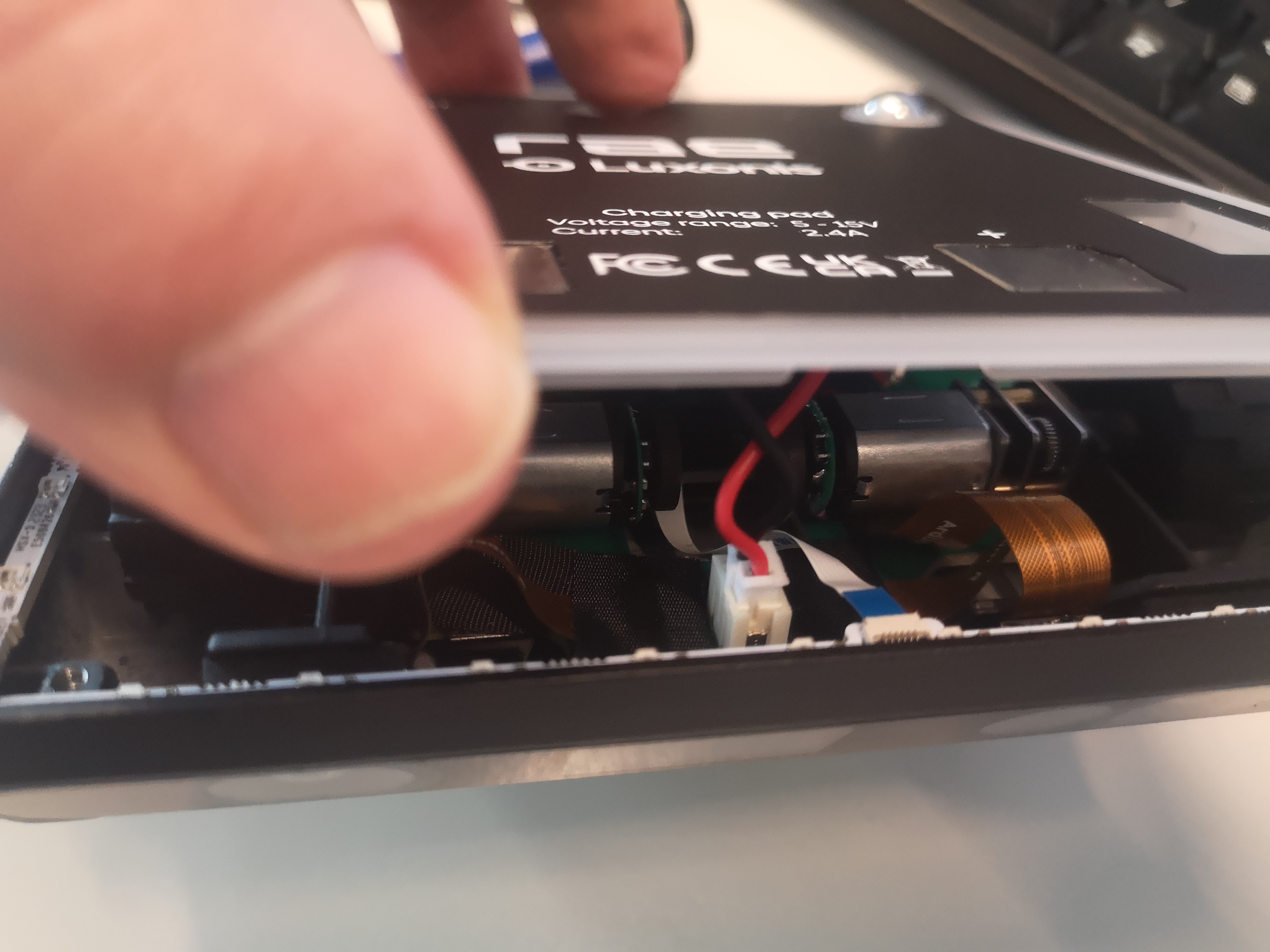

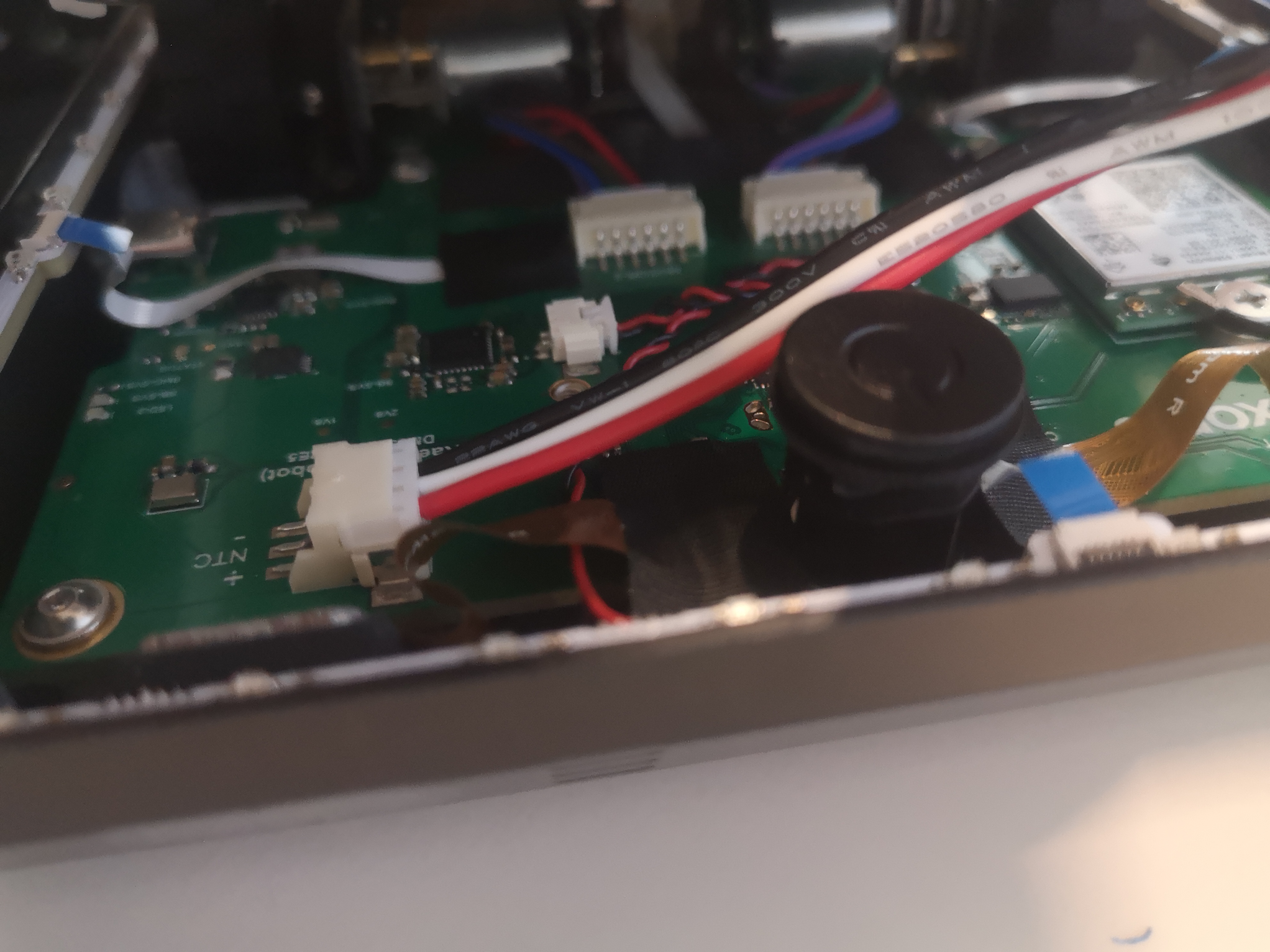

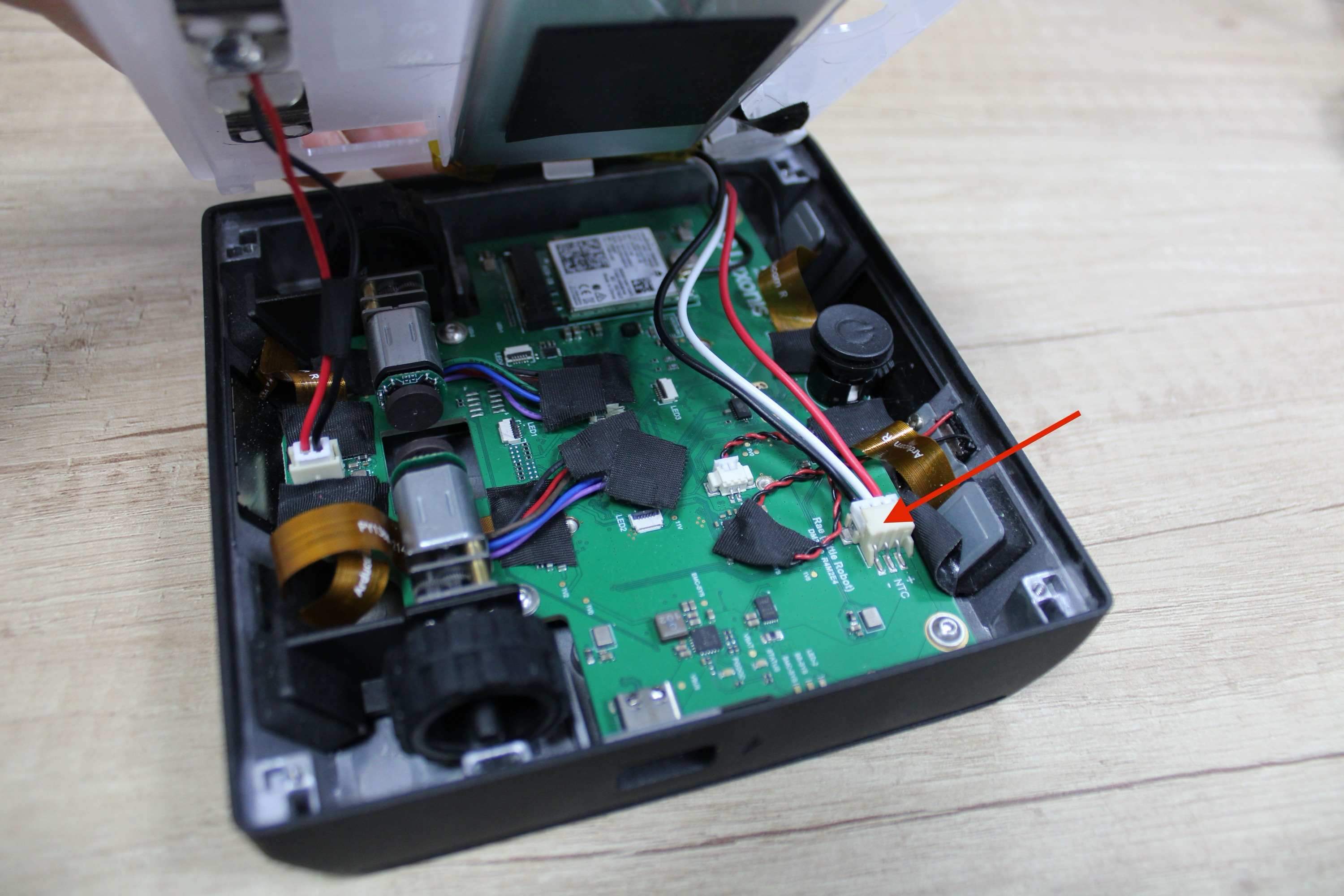

- Looking into the error in the disparity of the stereo-camera of the RAE. The stereo-camera should have a baseline of 7.5 cm (check). Yet, the maximum depth that can be measured is larger than expected.

- According to the spreadsheet, the focal length is 882 pixels, while our calibration found a value between 280 and 320 pixels.

Only group1 found a focal length of (843, 871).

- Maybe related to how the resolution is set, as configured for ROS.

- Note that for accuracy, I have to look at the Wide FOV plot. Yet, it seems to be the same as the standard 800P plot, only scaled by 20% (shorter).

- The measurement setup was with subpixel mode on, so the theoretical error is calculated for half or even a quarter of a pixel.

- The Accuracy oscillation nicely shows plateaus at heights of 6.6 m, 8.25 m and 11 m, which correspond to 5/4/3 pixels.
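The plateau values can be checked against the pinhole stereo relation Z = f·B/d. The sketch below is not part of the measurement scripts. It assumes that relation, the 7.5 cm baseline mentioned above, and that the plateaus correspond to integer disparities of 5, 4 and 3 pixels. The function names are made up for illustration.

```python
BASELINE_M = 0.075  # stereo baseline from the note above (7.5 cm)

def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole stereo model: depth Z = f * B / d."""
    return focal_px * baseline_m / disparity_px

def implied_focal_px(depth_m, disparity_px, baseline_m=BASELINE_M):
    """Invert Z = f * B / d to get the focal length (in pixels) that a plateau implies."""
    return depth_m * disparity_px / baseline_m

# Plateau depth in metres per assumed integer disparity in pixels.
plateaus = {5: 6.6, 4: 8.25, 3: 11.0}
for d, z in plateaus.items():
    print(f"disparity {d} px, depth {z} m -> f = {implied_focal_px(z, d):.0f} px")
```

Under these assumptions all three plateaus give f·B = 33 m·px, which is a focal length of about 440 pixels. That is close to half of the 882 pixels from the spreadsheet, which would fit a depth computation on a half-resolution image. This is an interpretation and has not been verified here.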


- Looked at whether I could read out the calibration data, according to this example.

- Inside the docker (in /tmp/examples), first ran wget https://bootstrap.pypa.io/get-pip.py and python3 get-pip.py, followed by python3 -m pip install depthai.

- After wget https://raw.githubusercontent.com/luxonis/depthai-python/main/examples/calibration/calibration_reader.py the command python3 calibration_reader.py failed at the line with dai.Device(): with the message No available devices.
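For reference, a minimal sketch of what the calibration read-out could look like once a device is reachable. It assumes the depthai 2.x Python API as used in calibration_reader.py (dai.Device, readCalibration, getCameraIntrinsics). It also assumes that sockets CAM_B and CAM_C are the front stereo pair, which is unverified on the RAE. The helper names and the example matrix are hypothetical.

```python
def scale_intrinsics(K, src_size, dst_size):
    """Rescale a 3x3 intrinsic matrix from src (width, height) to dst (width, height)."""
    sx = dst_size[0] / src_size[0]
    sy = dst_size[1] / src_size[1]
    return [
        [K[0][0] * sx, K[0][1] * sx, K[0][2] * sx],
        [K[1][0] * sy, K[1][1] * sy, K[1][2] * sy],
        [K[2][0], K[2][1], K[2][2]],
    ]

def read_front_intrinsics(width=1280, height=800):
    """Read the factory intrinsics of the assumed front stereo pair (needs a device)."""
    import depthai as dai  # imported lazily so the helper above works without depthai

    with dai.Device() as device:
        calib = device.readCalibration()
        return {
            "left": calib.getCameraIntrinsics(dai.CameraBoardSocket.CAM_B, width, height),
            "right": calib.getCameraIntrinsics(dai.CameraBoardSocket.CAM_C, width, height),
        }

# Illustrative matrix only: fx = fy = 882 px at 1280x800, principal point at the centre.
K_full = [[882.0, 0.0, 640.0], [0.0, 882.0, 400.0], [0.0, 0.0, 1.0]]
K_half = scale_intrinsics(K_full, (1280, 800), (640, 400))
print(K_half[0][0], K_half[1][1])
```

The scaling helper shows why the configured resolution matters: a focal length expressed in pixels halves when the image width and height are halved. read_front_intrinsics is not called here, because it would hit the same No available devices error while no device is reachable.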

- Looking into the depthai_ros_driver. In dai_nodes/stereo.cpp the class Stereo is called, with a link to pipeline, device and a left/rightSocket. This code can probably be found in dai_nodes/sensors/sensor_wrapper.

- In stereo.cpp the function sensor_helpers::getCalibInfo() is called (for the device).

- In sys_logger a lot of detail is printed, such as the sysinfo with Leon CPU Usage.

- Not clear what features are tracked by default.

- dai_nodes/sensors/mono.cpp at least does getCalibInfo from "i_calibration_file".

- The file dai_nodes/sensor/sensor_helpers.cpp contains some useful definitions, such as available resolutions.

- The file /ws/install/rae_camera/share/rae_camera/config/camera.yaml doesn't contain the i_calibration_file definition.

- More information can be obtained when I launch camera.launch.py with the environment setting DEPTHAI_DEBUG=1.

- Started ros2 launch rae_camera rae_camera.launch.py params_file:='/ws/install/rae_camera/share/rae_camera/config/camera.yaml', but the DEFAULT logging is still set to INFO.

- Tried ros2 launch depthai_ros_driver camera.launch.py. At least I now get [depthai] [info] DEPTHAI_DEBUG enabled, lowered DEPTHAI_LEVEL to 'debug'.

- Next [depthai] [debug] Resources - Archive 'depthai-bootloader-fwp-0.0.26.tar.xz' open: 13ms, archive read: 259ms, which fails (Could not create session on gate!).

- Before that the oak-D model is correctly loaded:

[component_container-1] [DEBUG] [1733923437.478357305] [kdl_parser]: Link oak had 5 children.

[component_container-1] [DEBUG] [1733923437.478533139] [kdl_parser]: Link oak_imu_frame had 0 children.

[component_container-1] [DEBUG] [1733923437.478578473] [kdl_parser]: Link oak_left_camera_frame had 1 children.

[component_container-1] [DEBUG] [1733923437.478609515] [kdl_parser]: Link oak_left_camera_optical_frame had 0 children.

[component_container-1] [DEBUG] [1733923437.478644640] [kdl_parser]: Link oak_model_origin had 0 children.

[component_container-1] [DEBUG] [1733923437.478704724] [kdl_parser]: Link oak_rgb_camera_frame had 1 children.

[component_container-1] [DEBUG] [1733923437.478735224] [kdl_parser]: Link oak_rgb_camera_optical_frame had 0 children.

[component_container-1] [DEBUG] [1733923437.478769641] [kdl_parser]: Link oak_right_camera_frame had 1 children.

[component_container-1] [DEBUG] [1733923437.478799849] [kdl_parser]: Link oak_right_camera_optical_frame had 0 children.

Yet, only one stereo camera is loaded, not front and back.

- Still: [oak]: No ip/mxid specified, connecting to the next available device..
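The launches with DEPTHAI_DEBUG=1 above can be scripted so that the environment setting is not forgotten. This is a sketch under assumptions: the helper names are hypothetical, and it only wraps the ros2 launch commands and the params_file argument already used in these notes.

```python
import os
import subprocess

def depthai_debug_env(base_env=None):
    """Return a copy of the environment with DEPTHAI_DEBUG=1 set."""
    env = dict(os.environ if base_env is None else base_env)
    env["DEPTHAI_DEBUG"] = "1"
    return env

def launch_command(package, launch_file, params_file=None):
    """Build the ros2 launch argument list; params_file is optional."""
    cmd = ["ros2", "launch", package, launch_file]
    if params_file:
        cmd.append(f"params_file:={params_file}")
    return cmd

def run_with_debug(cmd):
    """Run the launch command with depthai debug logging enabled (blocks until exit)."""
    return subprocess.run(cmd, env=depthai_debug_env(), check=False)

cmd = launch_command("depthai_ros_driver", "camera.launch.py")
print(" ".join(cmd))
```

Calling run_with_debug(cmd) inside the docker would start the driver with the debug environment. The command is passed as a list, so the params_file path needs no shell quoting.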

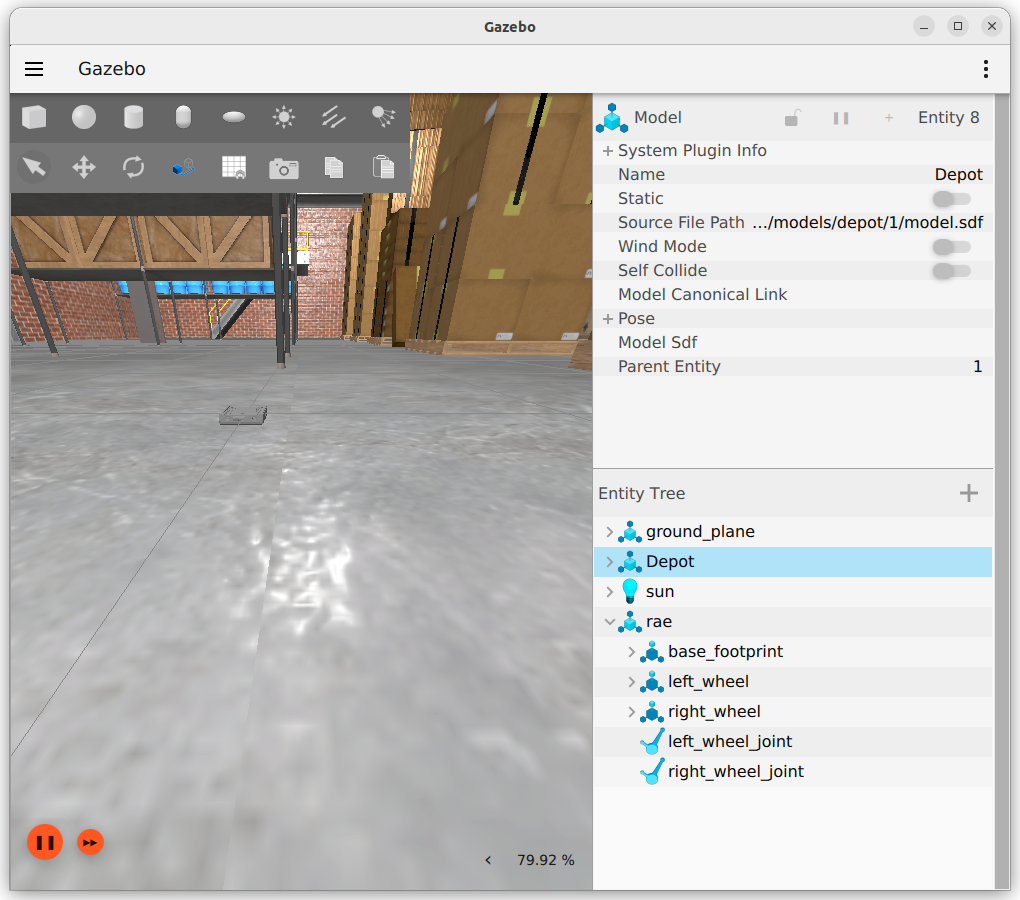

- Tried ros2 launch rae_bringup robot.launch.py.

- That has a ros2_control_node:

[ros2_control_node-5] [WARN] [1733924498.121108086] [controller_manager]: [Deprecated] Passing the robot description parameter directly to the control_manager node is deprecated. Use '~/robot_description' topic from 'robot_state_publisher' instead.

[ros2_control_node-5] [INFO] [1733924498.123028929] [resource_manager]: Loading hardware 'RAE'

- The camera is created in a rae_container:

[component_container-1] [INFO] [1733924498.906458553] [rae_container]: Found class: rclcpp_components::NodeFactoryTemplate

[component_container-1] [INFO] [1733924498.906641387] [rae_container]: Instantiate class: rclcpp_components::NodeFactoryTemplate

[component_container-1] [DEBUG] [1733924498.907210098] [rcl]: Initializing node 'camera' in namespace ''

- Rebooted. Now I get:

[component_container-1] [INFO] [1733925128.850781046] [rae]: Camera with MXID: xlinkserver and Name: 127.0.0.1 connected!

[component_container-1] [INFO] [1733925128.851008212] [rae]: PoE camera detected. Consider enabling low bandwidth for specific image topics (see readme).

[component_container-1] [INFO] [1733925128.886109313] [rae]: Device type: RAE

[component_container-1] [INFO] [1733925129.165539075] [rae]: Pipeline type: rae

[component_container-1] [INFO] [1733925130.443240332] [rae]: Finished setting up pipeline.

[component_container-1] [INFO] [1733925132.081899464] [rae]: Camera ready!

- Note that I have not seen the Pipeline type rae in the depthai_ros_driver.

- Tried again, with DEPTHAI_DEBUG=1. This gives some additional information:

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925185.896] [system] [info] Reading from Factory EEPROM contents

[component_container-1] [INFO] [1733925185.926919507] [rae]: Device type: RAE

[component_container-1] [DEBUG] [1733925186.212712788] [rae]: Socket 1 - OV9782

[component_container-1] [DEBUG] [1733925186.212909040] [rae]: Socket 0 - IMX214

[component_container-1] [DEBUG] [1733925186.213158710] [rae]: Socket 2 - OV9782

[component_container-1] [DEBUG] [1733925186.213204544] [rae]: Socket 3 - OV9782

[component_container-1] [DEBUG] [1733925186.213241253] [rae]: Socket 4 - OV9782

[component_container-1] [DEBUG] [1733925186.214585809] [rae]: Device has no IR drivers

[component_container-1] [INFO] [1733925186.214883313] [rae]: Pipeline type: rae

[component_container-1] [DEBUG] [1733925186.217009295] [pluginlib.ClassLoader]: Creating ClassLoader, base = depthai_ros_driver::pipeline_gen::BasePipeline, address = 0x7fce133eb8

[component_container-1] [DEBUG] [1733925186.221016048] [pluginlib.ClassLoader]: Entering determineAvailableClasses()...

[component_container-1] [DEBUG] [1733925186.221165633] [pluginlib.ClassLoader]: Processing xml file /underlay_ws/install/depthai_ros_driver/share/depthai_ros_driver/plugins.xml...

[component_container-1] [DEBUG] [1733925186.222069060] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::RGB, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222178353] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::RGBD, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222306105] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::RGBStereo, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222341688] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::Stereo, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222372855] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::Depth, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222402981] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::CamArray, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222435439] [pluginlib.ClassLoader]: XML file has no lookup name (i.e. magic name) for class depthai_ros_driver::pipeline_gen::Rae, assuming lookup_name == real class name.

[component_container-1] [DEBUG] [1733925186.222499732] [pluginlib.ClassLoader]: Exiting determineAvailableClasses()...

...

[component_container-1] [DEBUG] [1733925186.225114386] [pluginlib.ClassLoader]: depthai_ros_driver::pipeline_gen::Rae maps to real class type depthai_ros_driver::pipeline_gen::Rae

[component_container-1] [DEBUG] [1733925186.225374889] [pluginlib.ClassLoader]: std::unique_ptr to object of real type depthai_ros_driver::pipeline_gen::Rae created.

[component_container-1] [DEBUG] [1733925186.225485265] [rae]: Creating node stereo_front

[component_container-1] [DEBUG] [1733925186.228509466] [rae]: Setting param stereo_front.i_left_socket_id with value 1

[component_container-1] [DEBUG] [1733925186.230595031] [rae]: Setting param stereo_front.i_right_socket_id with value 2

[component_container-1] [DEBUG] [1733925186.239961387] [rae]: Creating stereo node with left sensor left and right sensor right

[component_container-1] [DEBUG] [1733925186.240310932] [rae]: Creating node left base

[component_container-1] [DEBUG] [1733925186.242118453] [rae]: Setting param left.i_max_q_size with value 30

[component_container-1] [DEBUG] [1733925186.244563813] [rae]: Setting param left.i_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.247804558] [rae]: Setting param left.i_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.251080470] [rae]: Setting param left.i_calibration_file with value

[component_container-1] [DEBUG] [1733925186.254959722] [rae]: Setting param left.i_simulate_from_topic with value 0

[component_container-1] [DEBUG] [1733925186.257842796] [rae]: Setting param left.i_simulated_topic_name with value

[component_container-1] [DEBUG] [1733925186.260680287] [rae]: Setting param left.i_disable_node with value 0

[component_container-1] [DEBUG] [1733925186.263348608] [rae]: Setting param left.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925186.265780177] [rae]: Setting param left.i_board_socket_id with value 1

[component_container-1] [DEBUG] [1733925186.268810461] [rae]: Setting param left.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925186.272372293] [rae]: Setting param left.i_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.274429775] [rae]: Setting param left.i_enable_lazy_publisher with value 1

[component_container-1] [DEBUG] [1733925186.276646883] [rae]: Setting param left.i_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.278889908] [rae]: Setting param left.i_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.281877900] [rae]: Setting param left.i_reverse_stereo_socket_order with value 0

[component_container-1] [DEBUG] [1733925186.284719599] [rae]: Node left has sensor OV9782

[component_container-1] [DEBUG] [1733925186.285207063] [rae]: Creating node left

[component_container-1] [DEBUG] [1733925186.291010170] [rae]: Setting param left.i_publish_topic with value 0

[component_container-1] [DEBUG] [1733925186.292803106] [rae]: Setting param left.i_output_isp with value 0

[component_container-1] [DEBUG] [1733925186.295615638] [rae]: Setting param left.i_enable_preview with value 0

[component_container-1] [DEBUG] [1733925186.298304877] [rae]: Setting param left.i_fps with value 30

[component_container-1] [DEBUG] [1733925186.301309869] [rae]: Setting param left.i_preview_size with value 300

[component_container-1] [DEBUG] [1733925186.304632531] [rae]: Setting param left.i_preview_width with value 300

[component_container-1] [DEBUG] [1733925186.307103809] [rae]: Setting param left.i_preview_height with value 300

[component_container-1] [DEBUG] [1733925186.310641182] [rae]: Setting param left.i_resolution with value 800P

[component_container-1] [DEBUG] [1733925186.312956917] [rae]: Setting param left.i_interleaved with value 0

[component_container-1] [DEBUG] [1733925186.315441153] [rae]: Setting param left.i_set_isp_scale with value 0

[component_container-1] [DEBUG] [1733925186.317811138] [rae]: Setting param left.i_width with value 640

[component_container-1] [DEBUG] [1733925186.320637545] [rae]: Setting param left.i_height with value 400

[component_container-1] [DEBUG] [1733925186.323036697] [rae]: Setting param left.i_keep_preview_aspect_ratio with value 1

[component_container-1] [DEBUG] [1733925186.325096637] [rae]: Setting param left.r_iso with value 800

[component_container-1] [DEBUG] [1733925186.326604529] [rae]: Setting param left.r_exposure with value 20000

[component_container-1] [DEBUG] [1733925186.328102045] [rae]: Setting param left.r_whitebalance with value 3300

[component_container-1] [DEBUG] [1733925186.330546198] [rae]: Setting param left.r_focus with value 1

[component_container-1] [DEBUG] [1733925186.332262342] [rae]: Setting param left.r_set_man_focus with value 0

[component_container-1] [DEBUG] [1733925186.333925694] [rae]: Setting param left.r_set_man_exposure with value 0

[component_container-1] [DEBUG] [1733925186.335385961] [rae]: Setting param left.r_set_man_whitebalance with value 0

[component_container-1] [DEBUG] [1733925186.337181773] [rae]: Setting param left.i_fsync_continuous with value 0

[component_container-1] [DEBUG] [1733925186.339686259] [rae]: Setting param left.i_fsync_trigger with value 0

[component_container-1] [DEBUG] [1733925186.344368979] [rae]: Setting param left.i_sensor_img_orientation with value AUTO

[component_container-1] [DEBUG] [1733925186.344885609] [rae]: Node left created

[component_container-1] [DEBUG] [1733925186.345010694] [rae]: Base node left created

[component_container-1] [DEBUG] [1733925186.345071486] [rae]: Creating node right base

[component_container-1] [DEBUG] [1733925186.347410054] [rae]: Setting param right.i_max_q_size with value 30

[component_container-1] [DEBUG] [1733925186.350337129] [rae]: Setting param right.i_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.352291984] [rae]: Setting param right.i_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.354271923] [rae]: Setting param right.i_calibration_file with value

[component_container-1] [DEBUG] [1733925186.356799243] [rae]: Setting param right.i_simulate_from_topic with value 0

[component_container-1] [DEBUG] [1733925186.360060905] [rae]: Setting param right.i_simulated_topic_name with value

[component_container-1] [DEBUG] [1733925186.362640893] [rae]: Setting param right.i_disable_node with value 0

[component_container-1] [DEBUG] [1733925186.364880793] [rae]: Setting param right.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925186.367245444] [rae]: Setting param right.i_board_socket_id with value 2

[component_container-1] [DEBUG] [1733925186.370060101] [rae]: Setting param right.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925186.373379097] [rae]: Setting param right.i_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.375290118] [rae]: Setting param right.i_enable_lazy_publisher with value 1

[component_container-1] [DEBUG] [1733925186.377231640] [rae]: Setting param right.i_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.380130506] [rae]: Setting param right.i_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.382059861] [rae]: Setting param right.i_reverse_stereo_socket_order with value 0

[component_container-1] [DEBUG] [1733925186.384240177] [rae]: Node right has sensor OV9782

[component_container-1] [DEBUG] [1733925186.384457846] [rae]: Creating node right

[component_container-1] [DEBUG] [1733925186.386263200] [rae]: Setting param right.i_publish_topic with value 1

[component_container-1] [DEBUG] [1733925186.390851835] [rae]: Setting param right.i_output_isp with value 0

[component_container-1] [DEBUG] [1733925186.394024579] [rae]: Setting param right.i_enable_preview with value 0

[component_container-1] [DEBUG] [1733925186.396414356] [rae]: Setting param right.i_fps with value 30

[component_container-1] [DEBUG] [1733925186.398879092] [rae]: Setting param right.i_preview_size with value 300

[component_container-1] [DEBUG] [1733925186.401365495] [rae]: Setting param right.i_preview_width with value 300

[component_container-1] [DEBUG] [1733925186.403833190] [rae]: Setting param right.i_preview_height with value 300

[component_container-1] [DEBUG] [1733925186.406647013] [rae]: Setting param right.i_resolution with value 800P

[component_container-1] [DEBUG] [1733925186.410007801] [rae]: Setting param right.i_interleaved with value 0

[component_container-1] [DEBUG] [1733925186.411403233] [rae]: Setting param right.i_set_isp_scale with value 0

[component_container-1] [DEBUG] [1733925186.414033179] [rae]: Setting param right.i_width with value 640

[component_container-1] [DEBUG] [1733925186.417095381] [rae]: Setting param right.i_height with value 400

[component_container-1] [DEBUG] [1733925186.419428198] [rae]: Setting param right.i_keep_preview_aspect_ratio with value 1

[component_container-1] [DEBUG] [1733925186.422541983] [rae]: Setting param right.r_iso with value 800

[component_container-1] [DEBUG] [1733925186.425453641] [rae]: Setting param right.r_exposure with value 20000

[component_container-1] [DEBUG] [1733925186.427141369] [rae]: Setting param right.r_whitebalance with value 3300

[component_container-1] [DEBUG] [1733925186.430075943] [rae]: Setting param right.r_focus with value 1

[component_container-1] [DEBUG] [1733925186.432407928] [rae]: Setting param right.r_set_man_focus with value 0

[component_container-1] [DEBUG] [1733925186.435431545] [rae]: Setting param right.r_set_man_exposure with value 0

[component_container-1] [DEBUG] [1733925186.437671820] [rae]: Setting param right.r_set_man_whitebalance with value 0

[component_container-1] [DEBUG] [1733925186.442555709] [rae]: Setting param right.i_fsync_continuous with value 0

[component_container-1] [DEBUG] [1733925186.444679191] [rae]: Setting param right.i_fsync_trigger with value 0

[component_container-1] [DEBUG] [1733925186.447875102] [rae]: Setting param right.i_sensor_img_orientation with value AUTO

[component_container-1] [DEBUG] [1733925186.448311315] [rae]: Node right created

[component_container-1] [DEBUG] [1733925186.448414566] [rae]: Base node right created

[component_container-1] [DEBUG] [1733925186.451367933] [rae]: Setting param stereo_front.i_max_q_size with value 30

[component_container-1] [DEBUG] [1733925186.453473665] [rae]: Setting param stereo_front.i_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.456195237] [rae]: Setting param stereo_front.i_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.458388845] [rae]: Setting param stereo_front.i_output_disparity with value 0

[component_container-1] [DEBUG] [1733925186.460790456] [rae]: Setting param stereo_front.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925186.462953605] [rae]: Setting param stereo_front.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925186.465021420] [rae]: Setting param stereo_front.i_publish_topic with value 1

[component_container-1] [DEBUG] [1733925186.467400072] [rae]: Setting param stereo_front.i_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.469789724] [rae]: Setting param stereo_front.i_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.472257335] [rae]: Setting param stereo_front.i_enable_lazy_publisher with value 1

[component_container-1] [DEBUG] [1733925186.474817614] [rae]: Setting param stereo_front.i_reverse_stereo_socket_order with value 0

[component_container-1] [DEBUG] [1733925186.477165515] [rae]: Setting param stereo_front.i_publish_synced_rect_pair with value 0

[component_container-1] [DEBUG] [1733925186.480659846] [rae]: Setting param stereo_front.i_publish_left_rect with value 0

[component_container-1] [DEBUG] [1733925186.483096707] [rae]: Setting param stereo_front.i_left_rect_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.485140771] [rae]: Setting param stereo_front.i_left_rect_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.487227462] [rae]: Setting param stereo_front.i_left_rect_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.489316152] [rae]: Setting param stereo_front.i_left_rect_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.493356364] [rae]: Setting param stereo_front.i_left_rect_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.497142615] [rae]: Setting param stereo_front.i_publish_right_rect with value 0

[component_container-1] [DEBUG] [1733925186.499099137] [rae]: Setting param stereo_front.i_right_rect_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.503454436] [rae]: Setting param stereo_front.i_right_rect_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.506270884] [rae]: Setting param stereo_front.i_right_rect_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.509173084] [rae]: Setting param stereo_front.i_right_rect_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.511472443] [rae]: Setting param stereo_front.i_right_rect_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.513830136] [rae]: Setting param stereo_front.i_lr_check with value 1

[component_container-1] [DEBUG] [1733925186.516774878] [rae]: Setting param stereo_front.i_board_socket_id with value 2

[component_container-1] [DEBUG] [1733925186.518723858] [rae]: Setting param stereo_front.i_align_depth with value 1

[component_container-1] [DEBUG] [1733925186.521053467] [rae]: Setting param stereo_front.i_socket_name with value right

[component_container-1] [DEBUG] [1733925186.523349785] [rae]: Setting param stereo_front.i_set_input_size with value 0

[component_container-1] [DEBUG] [1733925186.525698645] [rae]: Setting param stereo_front.i_width with value 640

[component_container-1] [DEBUG] [1733925186.528136880] [rae]: Setting param stereo_front.i_height with value 400

[component_container-1] [DEBUG] [1733925186.530562033] [rae]: Setting param stereo_front.i_depth_preset with value HIGH_ACCURACY

[component_container-1] [DEBUG] [1733925186.532571680] [rae]: Setting param stereo_front.i_enable_distortion_correction with value 0

[component_container-1] [DEBUG] [1733925186.534968791] [rae]: Setting param stereo_front.i_set_disparity_to_depth_use_spec_translation with value 0

[component_container-1] [DEBUG] [1733925186.537143607] [rae]: Setting param stereo_front.i_bilateral_sigma with value 0

[component_container-1] [DEBUG] [1733925186.539489883] [rae]: Setting param stereo_front.i_lrc_threshold with value 10

[component_container-1] [DEBUG] [1733925186.542229622] [rae]: Setting param stereo_front.i_depth_filter_size with value 5

[component_container-1] [DEBUG] [1733925186.544385105] [rae]: Setting param stereo_front.i_stereo_conf_threshold with value 240

[component_container-1] [DEBUG] [1733925186.547103719] [rae]: Setting param stereo_front.i_subpixel with value 0

[component_container-1] [DEBUG] [1733925186.549538246] [rae]: Setting param stereo_front.i_rectify_edge_fill_color with value 0

[component_container-1] [DEBUG] [1733925186.552138359] [rae]: Setting param stereo_front.i_enable_alpha_scaling with value 0

[component_container-1] [DEBUG] [1733925186.554787222] [rae]: Setting param stereo_front.i_disparity_width with value DISPARITY_96

[component_container-1] [DEBUG] [1733925186.556696244] [rae]: Setting param stereo_front.i_extended_disp with value 0

[component_container-1] [DEBUG] [1733925186.559216397] [rae]: Setting param stereo_front.i_enable_companding with value 0

[component_container-1] [DEBUG] [1733925186.562648477] [rae]: Setting param stereo_front.i_enable_temporal_filter with value 0

[component_container-1] [DEBUG] [1733925186.565441800] [rae]: Setting param stereo_front.i_enable_speckle_filter with value 0

[component_container-1] [DEBUG] [1733925186.568031829] [rae]: Setting param stereo_front.i_enable_spatial_filter with value 0

[component_container-1] [DEBUG] [1733925186.571260199] [rae]: Setting param stereo_front.i_enable_threshold_filter with value 0

[component_container-1] [DEBUG] [1733925186.573845187] [rae]: Setting param stereo_front.i_enable_decimation_filter with value 0

[component_container-1] [DEBUG] [1733925186.574387818] [rae]: Node stereo_front created

[component_container-1] [DEBUG] [1733925186.574506861] [rae]: Creating node stereo_back

[component_container-1] [DEBUG] [1733925186.576973722] [rae]: Setting param stereo_back.i_left_socket_id with value 3

[component_container-1] [DEBUG] [1733925186.579317790] [rae]: Setting param stereo_back.i_right_socket_id with value 4

[component_container-1] [DEBUG] [1733925186.582568535] [rae]: Creating stereo node with left sensor left_back and right sensor right_back

[component_container-1] [DEBUG] [1733925186.582764662] [rae]: Creating node left_back base

[component_container-1] [DEBUG] [1733925186.585004146] [rae]: Setting param left_back.i_max_q_size with value 30

[component_container-1] [DEBUG] [1733925186.587361130] [rae]: Setting param left_back.i_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.589324402] [rae]: Setting param left_back.i_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.591219590] [rae]: Setting param left_back.i_calibration_file with value

[component_container-1] [DEBUG] [1733925186.593864995] [rae]: Setting param left_back.i_simulate_from_topic with value 0

[component_container-1] [DEBUG] [1733925186.595544848] [rae]: Setting param left_back.i_simulated_topic_name with value

[component_container-1] [DEBUG] [1733925186.597205825] [rae]: Setting param left_back.i_disable_node with value 0

[component_container-1] [DEBUG] [1733925186.598774634] [rae]: Setting param left_back.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925186.601836627] [rae]: Setting param left_back.i_board_socket_id with value 3

[component_container-1] [DEBUG] [1733925186.605607877] [rae]: Setting param left_back.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925186.608352117] [rae]: Setting param left_back.i_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.610647684] [rae]: Setting param left_back.i_enable_lazy_publisher with value 1

[component_container-1] [DEBUG] [1733925186.612489580] [rae]: Setting param left_back.i_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.613775678] [rae]: Setting param left_back.i_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.615262361] [rae]: Setting param left_back.i_reverse_stereo_socket_order with value 0

[component_container-1] [DEBUG] [1733925186.618200019] [rae]: Node left_back has sensor OV9782

[component_container-1] [DEBUG] [1733925186.618403730] [rae]: Creating node left_back

[component_container-1] [DEBUG] [1733925186.620071374] [rae]: Setting param left_back.i_publish_topic with value 1

[component_container-1] [DEBUG] [1733925186.622859322] [rae]: Setting param left_back.i_output_isp with value 0

[component_container-1] [DEBUG] [1733925186.625133722] [rae]: Setting param left_back.i_enable_preview with value 0

[component_container-1] [DEBUG] [1733925186.626779199] [rae]: Setting param left_back.i_fps with value 30

[component_container-1] [DEBUG] [1733925186.627958254] [rae]: Setting param left_back.i_preview_size with value 300

[component_container-1] [DEBUG] [1733925186.629641023] [rae]: Setting param left_back.i_preview_width with value 300

[component_container-1] [DEBUG] [1733925186.631829714] [rae]: Setting param left_back.i_preview_height with value 300

[component_container-1] [DEBUG] [1733925186.634222825] [rae]: Setting param left_back.i_resolution with value 800P

[component_container-1] [DEBUG] [1733925186.638261828] [rae]: Setting param left_back.i_interleaved with value 0

[component_container-1] [DEBUG] [1733925186.642614377] [rae]: Setting param left_back.i_set_isp_scale with value 0

[component_container-1] [DEBUG] [1733925186.644460773] [rae]: Setting param left_back.i_width with value 640

[component_container-1] [DEBUG] [1733925186.648245274] [rae]: Setting param left_back.i_height with value 400

[component_container-1] [DEBUG] [1733925186.649425829] [rae]: Setting param left_back.i_keep_preview_aspect_ratio with value 1

[component_container-1] [DEBUG] [1733925186.651845356] [rae]: Setting param left_back.r_iso with value 800

[component_container-1] [DEBUG] [1733925186.654018506] [rae]: Setting param left_back.r_exposure with value 20000

[component_container-1] [DEBUG] [1733925186.657237500] [rae]: Setting param left_back.r_whitebalance with value 3300

[component_container-1] [DEBUG] [1733925186.658442305] [rae]: Setting param left_back.r_focus with value 1

[component_container-1] [DEBUG] [1733925186.660499995] [rae]: Setting param left_back.r_set_man_focus with value 0

[component_container-1] [DEBUG] [1733925186.662411975] [rae]: Setting param left_back.r_set_man_exposure with value 0

[component_container-1] [DEBUG] [1733925186.664002868] [rae]: Setting param left_back.r_set_man_whitebalance with value 0

[component_container-1] [DEBUG] [1733925186.666849108] [rae]: Setting param left_back.i_fsync_continuous with value 0

[component_container-1] [DEBUG] [1733925186.668965424] [rae]: Setting param left_back.i_fsync_trigger with value 0

[component_container-1] [DEBUG] [1733925186.671261783] [rae]: Setting param left_back.i_sensor_img_orientation with value AUTO

[component_container-1] [DEBUG] [1733925186.671989166] [rae]: Node left_back created

[component_container-1] [DEBUG] [1733925186.673269139] [rae]: Base node left_back created

[component_container-1] [DEBUG] [1733925186.673346515] [rae]: Creating node right_back base

[component_container-1] [DEBUG] [1733925186.674792073] [rae]: Setting param right_back.i_max_q_size with value 30

[component_container-1] [DEBUG] [1733925186.677337060] [rae]: Setting param right_back.i_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.682560494] [rae]: Setting param right_back.i_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.684137970] [rae]: Setting param right_back.i_calibration_file with value

[component_container-1] [DEBUG] [1733925186.687959304] [rae]: Setting param right_back.i_simulate_from_topic with value 0

[component_container-1] [DEBUG] [1733925186.691054631] [rae]: Setting param right_back.i_simulated_topic_name with value

[component_container-1] [DEBUG] [1733925186.693427116] [rae]: Setting param right_back.i_disable_node with value 0

[component_container-1] [DEBUG] [1733925186.695756767] [rae]: Setting param right_back.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925186.698382422] [rae]: Setting param right_back.i_board_socket_id with value 4

[component_container-1] [DEBUG] [1733925186.700914617] [rae]: Setting param right_back.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925186.704274071] [rae]: Setting param right_back.i_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.705804630] [rae]: Setting param right_back.i_enable_lazy_publisher with value 1

[component_container-1] [DEBUG] [1733925186.708904999] [rae]: Setting param right_back.i_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.711575362] [rae]: Setting param right_back.i_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.714122641] [rae]: Setting param right_back.i_reverse_stereo_socket_order with value 0

[component_container-1] [DEBUG] [1733925186.715981703] [rae]: Node right_back has sensor OV9782

[component_container-1] [DEBUG] [1733925186.716183164] [rae]: Creating node right_back

[component_container-1] [DEBUG] [1733925186.718648317] [rae]: Setting param right_back.i_publish_topic with value 0

[component_container-1] [DEBUG] [1733925186.720392544] [rae]: Setting param right_back.i_output_isp with value 0

[component_container-1] [DEBUG] [1733925186.723079866] [rae]: Setting param right_back.i_enable_preview with value 0

[component_container-1] [DEBUG] [1733925186.726475155] [rae]: Setting param right_back.i_fps with value 30

[component_container-1] [DEBUG] [1733925186.729496314] [rae]: Setting param right_back.i_preview_size with value 300

[component_container-1] [DEBUG] [1733925186.732528389] [rae]: Setting param right_back.i_preview_width with value 300

[component_container-1] [DEBUG] [1733925186.733698236] [rae]: Setting param right_back.i_preview_height with value 300

[component_container-1] [DEBUG] [1733925186.734811290] [rae]: Setting param right_back.i_resolution with value 800P

[component_container-1] [DEBUG] [1733925186.738376747] [rae]: Setting param right_back.i_interleaved with value 0

[component_container-1] [DEBUG] [1733925186.740656314] [rae]: Setting param right_back.i_set_isp_scale with value 0

[component_container-1] [DEBUG] [1733925186.743302469] [rae]: Setting param right_back.i_width with value 640

[component_container-1] [DEBUG] [1733925186.745625954] [rae]: Setting param right_back.i_height with value 400

[component_container-1] [DEBUG] [1733925186.748448235] [rae]: Setting param right_back.i_keep_preview_aspect_ratio with value 1

[component_container-1] [DEBUG] [1733925186.750947680] [rae]: Setting param right_back.r_iso with value 800

[component_container-1] [DEBUG] [1733925186.752193402] [rae]: Setting param right_back.r_exposure with value 20000

[component_container-1] [DEBUG] [1733925186.755554524] [rae]: Setting param right_back.r_whitebalance with value 3300

[component_container-1] [DEBUG] [1733925186.758222470] [rae]: Setting param right_back.r_focus with value 1

[component_container-1] [DEBUG] [1733925186.762187057] [rae]: Setting param right_back.r_set_man_focus with value 0

[component_container-1] [DEBUG] [1733925186.764652293] [rae]: Setting param right_back.r_set_man_exposure with value 0

[component_container-1] [DEBUG] [1733925186.767106320] [rae]: Setting param right_back.r_set_man_whitebalance with value 0

[component_container-1] [DEBUG] [1733925186.768420877] [rae]: Setting param right_back.i_fsync_continuous with value 0

[component_container-1] [DEBUG] [1733925186.770075687] [rae]: Setting param right_back.i_fsync_trigger with value 0

[component_container-1] [DEBUG] [1733925186.772524756] [rae]: Setting param right_back.i_sensor_img_orientation with value AUTO

[component_container-1] [DEBUG] [1733925186.772946886] [rae]: Node right_back created

[component_container-1] [DEBUG] [1733925186.774760865] [rae]: Base node right_back created

[component_container-1] [DEBUG] [1733925186.776525926] [rae]: Setting param stereo_back.i_max_q_size with value 30

[component_container-1] [DEBUG] [1733925186.779390334] [rae]: Setting param stereo_back.i_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.781974113] [rae]: Setting param stereo_back.i_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.783272669] [rae]: Setting param stereo_back.i_output_disparity with value 0

[component_container-1] [DEBUG] [1733925186.786861834] [rae]: Setting param stereo_back.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925186.788941816] [rae]: Setting param stereo_back.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925186.791777931] [rae]: Setting param stereo_back.i_publish_topic with value 1

[component_container-1] [DEBUG] [1733925186.794454128] [rae]: Setting param stereo_back.i_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.796777654] [rae]: Setting param stereo_back.i_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.799205473] [rae]: Setting param stereo_back.i_enable_lazy_publisher with value 1

[component_container-1] [DEBUG] [1733925186.801488166] [rae]: Setting param stereo_back.i_reverse_stereo_socket_order with value 0

[component_container-1] [DEBUG] [1733925186.804360781] [rae]: Setting param stereo_back.i_publish_synced_rect_pair with value 0

[component_container-1] [DEBUG] [1733925186.806877310] [rae]: Setting param stereo_back.i_publish_left_rect with value 0

[component_container-1] [DEBUG] [1733925186.809643924] [rae]: Setting param stereo_back.i_left_rect_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.812546457] [rae]: Setting param stereo_back.i_left_rect_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.815109194] [rae]: Setting param stereo_back.i_left_rect_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.816257832] [rae]: Setting param stereo_back.i_left_rect_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.817632722] [rae]: Setting param stereo_back.i_left_rect_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.820832800] [rae]: Setting param stereo_back.i_publish_right_rect with value 0

[component_container-1] [DEBUG] [1733925186.823547706] [rae]: Setting param stereo_back.i_right_rect_low_bandwidth with value 0

[component_container-1] [DEBUG] [1733925186.826644240] [rae]: Setting param stereo_back.i_right_rect_low_bandwidth_quality with value 50

[component_container-1] [DEBUG] [1733925186.829936236] [rae]: Setting param stereo_back.i_right_rect_enable_feature_tracker with value 0

[component_container-1] [DEBUG] [1733925186.831933758] [rae]: Setting param stereo_back.i_right_rect_add_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.835754885] [rae]: Setting param stereo_back.i_right_rect_exposure_offset with value 0

[component_container-1] [DEBUG] [1733925186.838672376] [rae]: Setting param stereo_back.i_lr_check with value 1

[component_container-1] [DEBUG] [1733925186.843448471] [rae]: Setting param stereo_back.i_board_socket_id with value 4

[component_container-1] [DEBUG] [1733925186.845280367] [rae]: Setting param stereo_back.i_align_depth with value 1

[component_container-1] [DEBUG] [1733925186.850106421] [rae]: Setting param stereo_back.i_socket_name with value right_back

[component_container-1] [DEBUG] [1733925186.851914442] [rae]: Setting param stereo_back.i_set_input_size with value 0

[component_container-1] [DEBUG] [1733925186.853837005] [rae]: Setting param stereo_back.i_width with value 640

[component_container-1] [DEBUG] [1733925186.857759341] [rae]: Setting param stereo_back.i_height with value 400

[component_container-1] [DEBUG] [1733925186.860692124] [rae]: Setting param stereo_back.i_depth_preset with value HIGH_ACCURACY

[component_container-1] [DEBUG] [1733925186.864121912] [rae]: Setting param stereo_back.i_enable_distortion_correction with value 0

[component_container-1] [DEBUG] [1733925186.867083196] [rae]: Setting param stereo_back.i_set_disparity_to_depth_use_spec_translation with value 0

[component_container-1] [DEBUG] [1733925186.870626694] [rae]: Setting param stereo_back.i_bilateral_sigma with value 0

[component_container-1] [DEBUG] [1733925186.871933834] [rae]: Setting param stereo_back.i_lrc_threshold with value 10

[component_container-1] [DEBUG] [1733925186.875182703] [rae]: Setting param stereo_back.i_depth_filter_size with value 5

[component_container-1] [DEBUG] [1733925186.877294519] [rae]: Setting param stereo_back.i_stereo_conf_threshold with value 240

[component_container-1] [DEBUG] [1733925186.880865184] [rae]: Setting param stereo_back.i_subpixel with value 0

[component_container-1] [DEBUG] [1733925186.884692811] [rae]: Setting param stereo_back.i_rectify_edge_fill_color with value 0

[component_container-1] [DEBUG] [1733925186.888626521] [rae]: Setting param stereo_back.i_enable_alpha_scaling with value 0

[component_container-1] [DEBUG] [1733925186.892786152] [rae]: Setting param stereo_back.i_disparity_width with value DISPARITY_96

[component_container-1] [DEBUG] [1733925186.896543486] [rae]: Setting param stereo_back.i_extended_disp with value 0

[component_container-1] [DEBUG] [1733925186.898040544] [rae]: Setting param stereo_back.i_enable_companding with value 0

[component_container-1] [DEBUG] [1733925186.902721847] [rae]: Setting param stereo_back.i_enable_temporal_filter with value 0

[component_container-1] [DEBUG] [1733925186.905782506] [rae]: Setting param stereo_back.i_enable_speckle_filter with value 0

[component_container-1] [DEBUG] [1733925186.908125783] [rae]: Setting param stereo_back.i_enable_spatial_filter with value 0

[component_container-1] [DEBUG] [1733925186.910632228] [rae]: Setting param stereo_back.i_enable_threshold_filter with value 0

[component_container-1] [DEBUG] [1733925186.913828888] [rae]: Setting param stereo_back.i_enable_decimation_filter with value 0

[component_container-1] [DEBUG] [1733925186.914219560] [rae]: Node stereo_back created

[component_container-1] [DEBUG] [1733925187.678849258] [rae]: Creating node imu

[component_container-1] [DEBUG] [1733925187.681989584] [rae]: Setting param imu.i_get_base_device_timestamp with value 0

[component_container-1] [DEBUG] [1733925187.683067929] [rae]: Setting param imu.i_message_type with value IMU_WITH_MAG_SPLIT

[component_container-1] [DEBUG] [1733925187.684099440] [rae]: Setting param imu.i_sync_method with value COPY

[component_container-1] [DEBUG] [1733925187.685214452] [rae]: Setting param imu.i_acc_cov with value 0

[component_container-1] [DEBUG] [1733925187.688007775] [rae]: Setting param imu.i_gyro_cov with value 0

[component_container-1] [DEBUG] [1733925187.690594261] [rae]: Setting param imu.i_rot_cov with value -1

[component_container-1] [DEBUG] [1733925187.693654545] [rae]: Setting param imu.i_mag_cov with value 0

[component_container-1] [DEBUG] [1733925187.695910570] [rae]: Setting param imu.i_update_ros_base_time_on_ros_msg with value 0

[component_container-1] [DEBUG] [1733925187.698006759] [rae]: Setting param imu.i_enable_rotation with value 1

[component_container-1] [DEBUG] [1733925187.700894041] [rae]: Setting param imu.i_rotation_vector_type with value ROTATION_VECTOR

[component_container-1] [DEBUG] [1733925187.704217994] [rae]: Setting param imu.i_rot_freq with value 100

[component_container-1] [DEBUG] [1733925187.706811356] [rae]: Setting param imu.i_mag_freq with value 100

[component_container-1] [DEBUG] [1733925187.709507135] [rae]: Setting param imu.i_acc_freq with value 100

[component_container-1] [DEBUG] [1733925187.711691159] [rae]: Setting param imu.i_gyro_freq with value 100

[component_container-1] [DEBUG] [1733925187.715055613] [rae]: Setting param imu.i_batch_report_threshold with value 5

[component_container-1] [DEBUG] [1733925187.718930614] [rae]: Setting param imu.i_max_batch_reports with value 5

[component_container-1] [DEBUG] [1733925187.719268742] [rae]: Node imu created

[component_container-1] [DEBUG] [1733925187.719434828] [rae]: Creating node sys_logger

[component_container-1] [DEBUG] [1733925187.720631674] [rae]: Node sys_logger created

[component_container-1] [INFO] [1733925187.720789384] [rae]: Finished setting up pipeline

...

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.063] [IMU(14)] [info] IMU product ID:

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.063] [IMU(14)] [info] Part 10004563 : Version 3.9.9 Build 2

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.063] [IMU(14)] [info] Part 10003606 : Version 1.8.0 Build 338

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.063] [IMU(14)] [info] Part 10004135 : Version 5.5.3 Build 162

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.063] [IMU(14)] [info] Part 10004149 : Version 5.1.12 Build 183

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.083] [system] [info] Read from EEPROM

...

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.519] [system] [info] Reading from Factory EEPROM contents

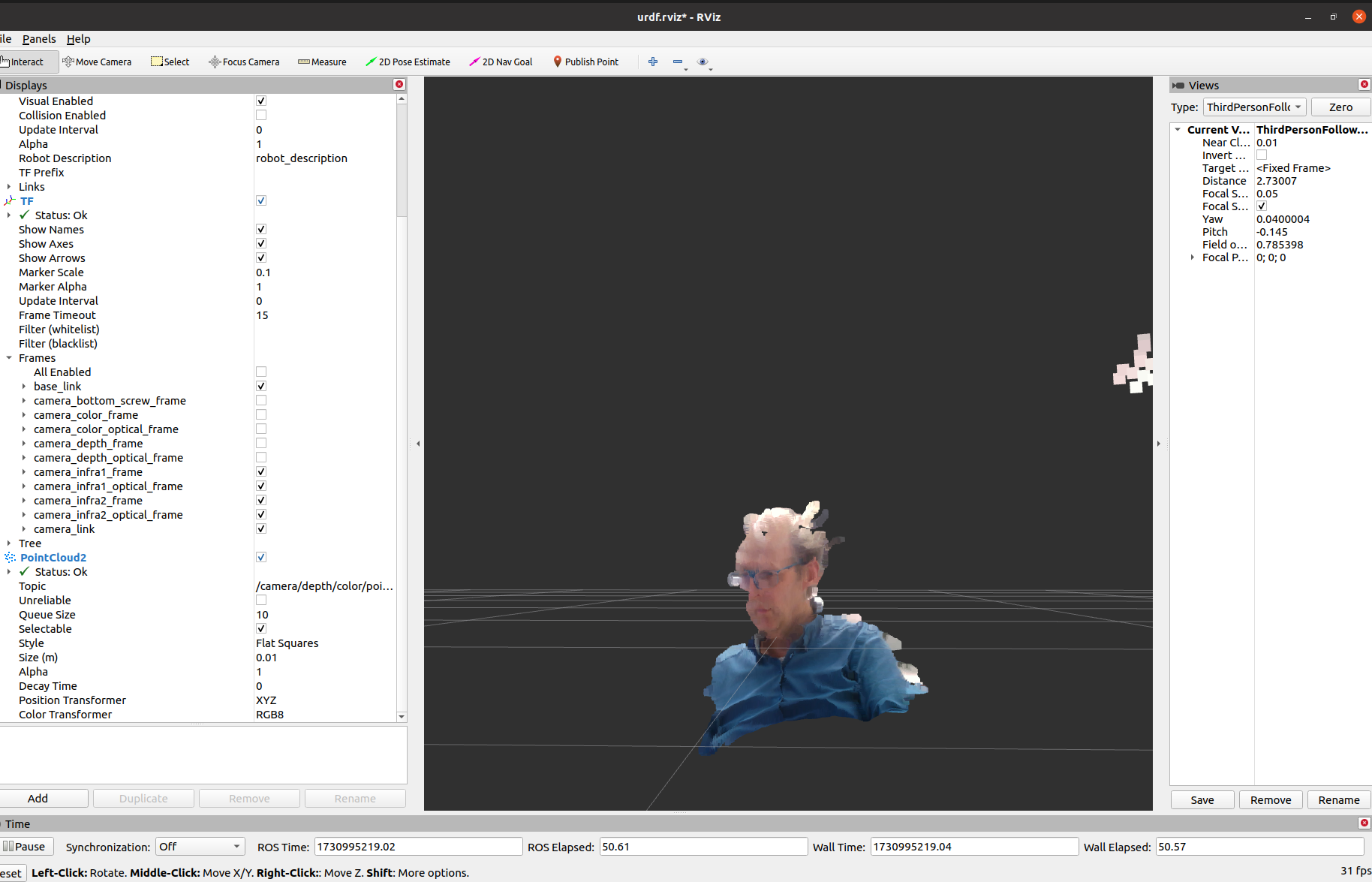

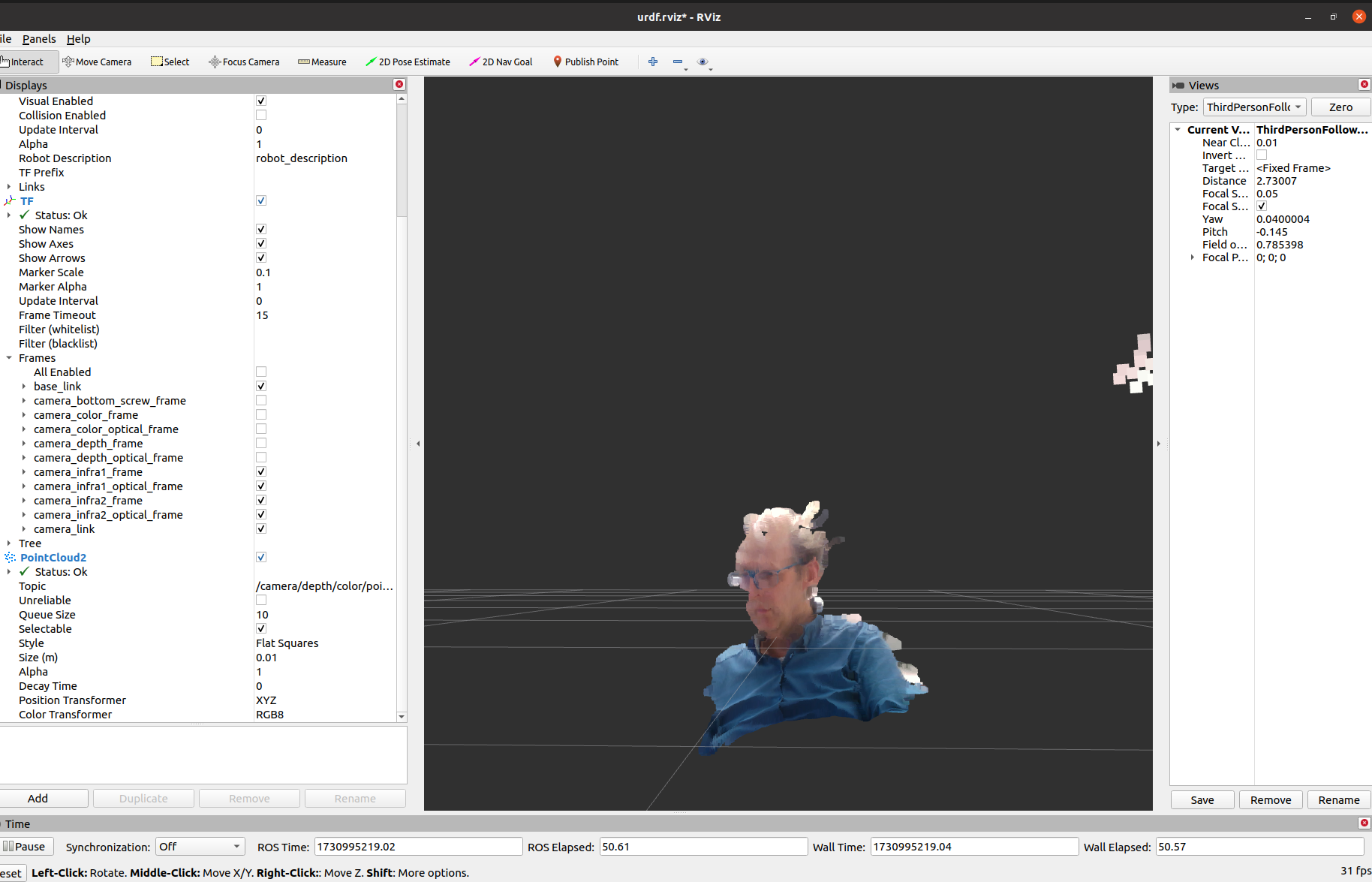

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.547] [StereoDepth(12)] [info] Baseline: 0.074863344

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.547] [StereoDepth(12)] [info] Fov: 96.69345

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.547] [StereoDepth(12)] [info] Focal: 284.64175

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.547] [StereoDepth(12)] [info] FixedNumerator: 21309.232

[component_container-1] [58927016838860C5] [127.0.0.1] [1733925188.547] [StereoDepth(12)] [debug] Using 0 camera model
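The StereoDepth values in the log above seem to be mutually consistent, which can be checked with a few lines of Python. This is only a sketch under two assumptions that the log does not state: that Fov is the horizontal field of view of the 640-pixel-wide image, and that FixedNumerator is the focal length (pixels) times the baseline (millimetres), so that depth in mm would be FixedNumerator / disparity. The function names are hypothetical.

```python
import math

# Values copied from the StereoDepth log lines above.
BASELINE_M = 0.074863344
FOV_DEG = 96.69345
FOCAL_PX = 284.64175
FIXED_NUMERATOR = 21309.232
IMAGE_WIDTH_PX = 640  # stereo_back.i_width in the log


def focal_from_fov(width_px, fov_deg):
    """Pinhole focal length in pixels, assuming fov_deg is the horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def depth_mm(disparity_px, focal_px=FOCAL_PX, baseline_m=BASELINE_M):
    """Depth in millimetres for a disparity in pixels (standard stereo relation)."""
    return focal_px * baseline_m * 1000.0 / disparity_px


if __name__ == "__main__":
    # Compare with the logged Focal and FixedNumerator values.
    print(focal_from_fov(IMAGE_WIDTH_PX, FOV_DEG), FOCAL_PX)
    print(FOCAL_PX * BASELINE_M * 1000.0, FIXED_NUMERATOR)
    print(depth_mm(10.0))
```

If the two assumptions hold, both comparisons should agree with the logged values to within rounding.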

- The file /underlay_ws/install/depthai_ros_driver/share/depthai_ros_driver/plugins.xml gives .

- In /underlay_ws/src/depthai-ros/depthai_descriptions/urdf/models the OAK-D.stl can be found, but no RAE.

- The file /underlay_ws/src/depthai-ros/depthai_ros_driver/config/calibration/left.yaml can be found, with a focal length of 800 pixels and a distortion of [0.1074, -0.2854, ...]. The right.yaml has a comparable focal length, but the distortion is much smaller: [0.027691, -0.084910]. The rgb.yaml has a focal length of 1007 and a distortion of [0.0802921, -0.244505, ...].

In include/depthai_ros_driver/pipeline/pipeline_generator.hpp the PipelineType::RAE is defined. So, the underlay_ws is aware of the RAE pipeline.

- The battery status is published by reading out /sys/class/power_supply/bq27441-0/capacity (100 at the moment)

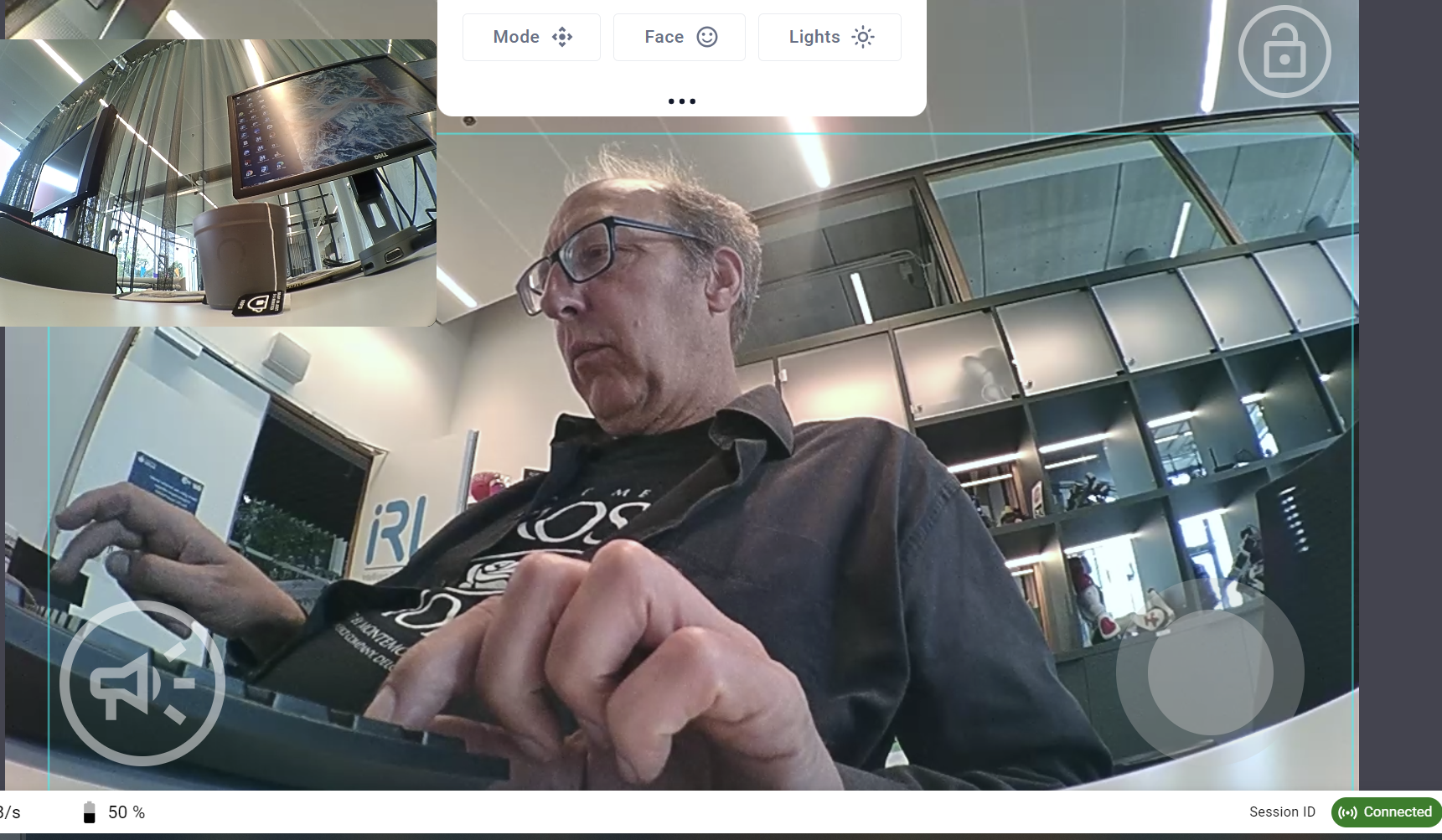

- Tried the combination ros2 launch rae_hw peripherals.launch.py with python3 rae-ros/rae_bringup/scripts/battery_status.py. That nicely gives a blue led, with 10 blue bars on the display.
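Reading the battery level as described above can be sketched in Python. The sysfs path is the one from the note; the mapping from percentage to display bars is a hypothetical one (one bar per started 10%), chosen only because 100 gave 10 bars. It is not taken from battery_status.py.

```python
from pathlib import Path

# Path taken from the note above; it only exists on the robot itself.
CAPACITY_FILE = Path("/sys/class/power_supply/bq27441-0/capacity")


def read_capacity(path=CAPACITY_FILE):
    """Return the battery capacity in percent as an integer."""
    return int(Path(path).read_text().strip())


def capacity_to_bars(capacity, n_bars=10):
    """Hypothetical mapping: one bar per started 10% (100 -> 10 bars)."""
    capacity = max(0, min(100, capacity))
    return (capacity * n_bars + 99) // 100


if __name__ == "__main__":
    if CAPACITY_FILE.exists():
        level = read_capacity()
        print(level, capacity_to_bars(level))
    else:
        print("capacity file not found (not running on the robot?)")
```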

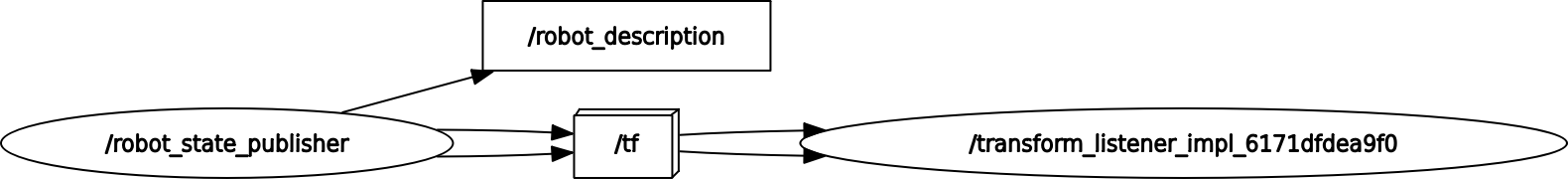

- The script robot.launch.py just loads two scripts. Looking into /ws/src/rae-ros/rae_hw/launch/control.launch.py: this loads /ws/src/rae-ros/rae_hw/config/controller.yaml and launches /ws/src/rae_description/launch/rsp.launch.py. The last one is just the robot_state_publisher.

- The controller.yaml defines the motion_model, with the pose and twist covariance.

- The rae_camera.launch.py just calls the depthai_ros_driver::Camera plugin, with the rae_camera/config/camera.yaml as parameter_file. Most important is the pipeline_type: rae set at the beginning of the file. The only extra thing is busybox devmem 0x20320180 32 0x00000000.
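The parameters that the Camera plugin ends up with are echoed in the DEBUG log as "Setting param <node>.<name> with value <value>" lines. A minimal sketch to collect them per node and to compare two nodes is given here; parse_params and diff_nodes are hypothetical helpers, not part of depthai_ros_driver.

```python
import re
from collections import defaultdict

# Matches the tail of lines such as:
# [component_container-1] [DEBUG] [...] [rae]: Setting param left_back.i_fps with value 30
PARAM_RE = re.compile(r"Setting param (\w+)\.(\w+) with value ?(.*)$")


def parse_params(lines):
    """Collect {node: {param: value}} from 'Setting param' log lines."""
    params = defaultdict(dict)
    for line in lines:
        match = PARAM_RE.search(line.rstrip("\n"))
        if match:
            node, name, value = match.groups()
            params[node][name] = value.strip()  # empty string if no value was logged
    return params


def diff_nodes(params, node_a, node_b):
    """Return the shared parameters whose values differ between two nodes."""
    shared = params[node_a].keys() & params[node_b].keys()
    return {key: (params[node_a][key], params[node_b][key])
            for key in sorted(shared)
            if params[node_a][key] != params[node_b][key]}


if __name__ == "__main__":
    sample = [
        "[component_container-1] [DEBUG] [1733925186.620071374] [rae]: Setting param left_back.i_publish_topic with value 1",
        "[component_container-1] [DEBUG] [1733925186.626779199] [rae]: Setting param left_back.i_fps with value 30",
        "[component_container-1] [DEBUG] [1733925186.718648317] [rae]: Setting param right_back.i_publish_topic with value 0",
        "[component_container-1] [DEBUG] [1733925186.726475155] [rae]: Setting param right_back.i_fps with value 30",
    ]
    print(diff_nodes(parse_params(sample), "left_back", "right_back"))
```

On the four sample lines (copied from the log above) the only difference between left_back and right_back is i_publish_topic.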

December 9, 2024

- Qi based his lecture on the course Embodied AI from Oregon State University (Fall 2019).

- Actually, it was mostly student presentations on selected research papers, with only three introductory lectures on Reinforcement Learning.

- Some of the literature mentioned by Qi:

December 6, 2024

- You can increase the RAE default resolution by changing the ROS camera config file on the robot.

- Even more advanced is VKF, which gives in Table 1 a list of alternatives (with magnetic disturbance rejection). Note that the MagneticField is one of the ROS messages published by the IMU.

- Another team used the FilterPy library as the basis for their code.

- Reading first and second chapter of ChangChang's PhD-thesis

- Looked for published papers on robotic courses:

December 5, 2024

- One of the teams used pupil-apriltags, which was inspired by the bindings provided by duckietown.

- They used OpenCV's KalmanFilter. A tutorial can be found here.
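The predict/update cycle behind such a filter can be illustrated with a scalar example. This is a minimal sketch in plain Python, not OpenCV's KalmanFilter API and not the code of the team; the random-walk state model and the noise variances q and r are assumptions for illustration.

```python
def kalman_1d(measurements, q=1e-3, r=0.1, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a random-walk state model.

    q: process noise variance, r: measurement noise variance (both assumed).
    Returns the list of filtered state estimates.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed constant, only the uncertainty grows.
        p = p + q
        # Update: blend prediction and measurement with the Kalman gain.
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


if __name__ == "__main__":
    noisy = [1.1, 0.9, 1.05, 0.95, 1.0, 1.02, 0.98]
    print(kalman_1d(noisy))
```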

December 4, 2024

- Following the instructions of the new technical report on ws9.

- ws9 has /usr/bin/gcc-11, from 2021.

- Changed the dependency libatlas-dev to libatlas-base-dev.

- Checked with ldd /snap/visualsfm-mardy/current/bin/VisualSFM, but all libraries that could not be found are there, only at /lib/x86_64-linux-gnu/. So maybe I should check if I can build VisualSFM from source again (at the end; first the method based on visualsfm-mardy).

- Building SiftGPU with g++-4.1 fails. With g++-11 it works.

- Not clear how I used CLAPACK-3.2.1. Used the default make.inc, but making the lapack archive in step 5 of the Install instructions fails on a missing ../INSTALL/slamch.o. Let's first try steps 2-4. Have the feeling that having just the code was previously enough. Skipped step 4 (testing). Still the same error. Did a make clean.

December 3, 2024

- To compile the tool CMVS, step 4 of VisualSFM, libgfortran.so.1 is needed.

- To get this library, we have to go back to 2008.

- Luckily, libgfortran1 can be found in hardy (8.04) LTS.

- The packages from hardy can still be found when /etc/apt/sources.list is augmented with:

deb [allow-insecure=yes] http://old-releases.ubuntu.com/ubuntu/ hardy main universe

- Next is sudo apt-get update.

- The library is based on gcc-4.1, so sudo apt-get install g++-4.1 followed by sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-4.1 8. Note that the last number is the priority, for the moment I set it equal to the year of the LTS. This can be undone with sudo update-alternatives --config gcc. Note that g++ is not configured with alternatives (still 9.4.0). The alternatives first have to be installed, before you can choose.

- Building Graclus gave no problems, although gcc-4 gave enough warnings (made for gcc 3.0.3)

- Building CMVS seems to be the hard part.

- Followed the instructions, but the build fails. Probably because it is using g++ instead of gcc, so should also configure g++ to use version 4.1

- Looked up the version year with /usr/bin/g++-9 --version. Configured g++ with these three setups:

sudo update-alternatives --install /usr/bin/g++ g++ /usr/bin/g++-4.1 8

sudo update-alternatives --install /usr/bin/g++ g++ /usr/bin/g++-5 15

sudo update-alternatives --install /usr/bin/g++ g++ /usr/bin/g++-9 19

- With this order g++-9 is the auto-option.
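Why g++-9 ends up as the auto-option follows from the priorities: in auto mode update-alternatives selects the alternative with the highest priority. A small sketch of that rule, with the three paths and priorities from above (auto_choice is a hypothetical helper, not a call into update-alternatives):

```python
# (path, priority) pairs as registered with update-alternatives --install above.
GXX_ALTERNATIVES = [
    ("/usr/bin/g++-4.1", 8),
    ("/usr/bin/g++-5", 15),
    ("/usr/bin/g++-9", 19),
]


def auto_choice(alternatives):
    """Return the path with the highest priority, as auto mode would select."""
    return max(alternatives, key=lambda item: item[1])[0]


if __name__ == "__main__":
    print(auto_choice(GXX_ALTERNATIVES))
```

Using the year of the release as priority therefore makes the newest compiler the default, while older ones stay selectable with --config.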

- With g++-4.1 compilation failed with -fopenmp, but after selecting g++-5 with sudo update-alternatives --config g++ the compilation was successful.

- The directory /snap/visualsfm-mardy/current/bin/ had already the three binaries, so copied cmvs, pmvs2 and genOption to ~/packages/vsfm/bin/

- Running cmvs from mardy now gives:

Reading bundle...21 cameras -- 2495 points in bundle file

***********

21 cameras -- 2495 points

Reading images: *********************

Set widths/heights...done 0 secs

done 0 secs

slimNeighborsSetLinks...done 0 secs

mergeSFM...***********resetPoints...done

Rep counts: 2495 -> 32 0 secs

setScoreThresholds...done 0 secs

sRemoveImages... ***********

Kept: 11 14 15 16 19 20

Removed: 0 1 2 3 4 5 6 7 8 9 10 12 13 17 18

sRemoveImages: 21 -> 6 0 secs

slimNeighborsSetLinks...done 0 secs

Cluster sizes:

6

Adding images:

0

Image nums: 21 -> 6 -> 6

Divide:

done 0 secs

5 images in vis on the average

- But then I get the error pmvs2: error while loading shared libraries: libgsl.so.19: cannot open shared object file.

- Inspecting ldd /snap/visualsfm-mardy/current/bin/pmvs2 confirms the missing library. Yet ~/src/cmvs/program/main/pmvs2 can load /lib/x86_64-linux-gnu/libgsl.so.23.
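The ldd check above can be automated when several binaries have to be inspected. A minimal sketch, assuming the usual ldd output format in which an unresolved library is listed as "name => not found"; missing_libraries and check_binary are hypothetical helper names.

```python
import subprocess


def missing_libraries(ldd_output):
    """Return the library names that ldd reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


def check_binary(path):
    """Run ldd on a binary and list its unresolved shared libraries."""
    result = subprocess.run(["ldd", path], capture_output=True, text=True, check=False)
    return missing_libraries(result.stdout)


if __name__ == "__main__":
    sample = (
        "\tlibgsl.so.19 => not found\n"
        "\tlibc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f0000000000)\n"
    )
    print(missing_libraries(sample))
```

On the robot or workstation, check_binary("/snap/visualsfm-mardy/current/bin/pmvs2") would run the same check on the real binary.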

- Also setup the gcc-alternatives with:

sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-4.1 8

sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-5 15

sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-7 17

sudo update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-9 19

- Also set g++ back to the default g++-9.

- Back to ~/packages/vsfm. Tried the Build VisualSFM step, but this fails on several libraries:

/usr/bin/ld: cannot find -lGL

/usr/bin/ld: build/GlobalUtil.o: in function `GlobalUtil::DevilLoadImage(BitmapRGBI*, char const*)':

GlobalUtil.cpp:(.text+0x26d): warning: Using 'dlopen' in statically linked applications requires at runtime the shared libraries from the glibc version used for linking

/usr/bin/ld: cannot find -lgtk-x11-2.0

/usr/bin/ld: cannot find -lgdk-x11-2.0

/usr/bin/ld: cannot find -lpangocairo-1.0

/usr/bin/ld: cannot find -latk-1.0

/usr/bin/ld: cannot find -lgdk_pixbuf-2.0

/usr/bin/ld: cannot find -lpangoft2-1.0

/usr/bin/ld: cannot find -lpango-1.0

- Instead did cp -p /snap/visualsfm-mardy/current/bin/VisualSFM ~/packages/vsfm/bin.

- Now CMVS starts working. After 4 images in vis on the average the terminal gives:

--------------------------------------------------

--- Summary of specified options ---

# of timages: 5 (enumeration)

# of oimages: 0 (enumeration)

level: 1 csize: 2

threshold: 0.7 wsize: 7

minImageNum: 3 CPU: 8

useVisData: 1 sequence: -1

--------------------------------------------------

Reading images: *****

11 Harris running ...15 Harris running ...16 20 19 Harris running ...Harris running ...Harris running ...179 harris done

DoG running...188 harris done

DoG running...177 harris done

DoG running...181 harris done

DoG running...180 harris done

DoG running...250 dog done

246 dog done

248 dog done

246 dog done

246 dog done

done

adding seeds

(4,58)(0,41)(3,59)(2,26)(1,52)done

---- Initial: 0 secs ----

Total pass fail0 fail1 refinepatch: 2735 329 2279 127 456

Total pass fail0 fail1 refinepatch: 100 12.0293 83.3272 4.64351 16.6728

Expanding patches...

---- EXPANSION: 1 secs ----

Total pass fail0 fail1 refinepatch: 10333 7739 2218 376 8115

Total pass fail0 fail1 refinepatch: 100 74.896 21.4652 3.63883 78.5348

FilterOutside

mainbody:

Gain (ave/var): 1.0235 0.368504

7975 -> 7923 (99.348%) 0 secs

Filter Exact: *****

7923 -> 6913 (87.2523%) 0 secs

FilterNeighbor: 6913 -> 5306 (76.7539%) 0 secs

FilterGroups: 20

5306 -> 4902 (92.386%) 0 secs

STATUS: 23 0 8546 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0

Expanding patches...

---- EXPANSION: 1 secs ----

Total pass fail0 fail1 refinepatch: 5612 2201 1910 1501 3702

Total pass fail0 fail1 refinepatch: 100 39.2195 34.0342 26.7463 65.9658

FilterOutside

mainbody:

Gain (ave/var): 1.23607 0.409579

7103 -> 7101 (99.9718%) 0 secs

Filter Exact: *****

7101 -> 6519 (91.804%) 0 secs

FilterNeighbor: 6519 -> 5958 (91.3944%) 0 secs

FilterGroups: 20

5958 -> 5719 (95.9886%) 0 secs

STATUS: 40 0 12228 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0

Expanding patches...

---- EXPANSION: 0 secs ----

Total pass fail0 fail1 refinepatch: 4440 1646 1708 1086 2732

Total pass fail0 fail1 refinepatch: 100 37.0721 38.4685 24.4595 61.5315

FilterOutside

mainbody:

Gain (ave/var): 1.42566 0.463243

7365 -> 7364 (99.9864%) 0 secs

Filter Exact: *****

7364 -> 6773 (91.9745%) 0 secs

FilterNeighbor: 6773 -> 6261 (92.4406%) 0 secs

FilterGroups: 20

6261 -> 6077 (97.0612%) 0 secs

STATUS: 52 0 14948 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0 0 0 0 0 0

0 0 0 0 0

---- Total: 0 secs ----
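The paired "Total pass fail0 fail1 refinepatch" lines in the output above appear to be counts followed by the same numbers as percentages of the total. A sketch to reproduce them; reading refinepatch as pass + fail1 is an assumption that happens to match the counts (329 + 127 = 456), not something documented here.

```python
def pmvs_percentages(total, passed, fail0, fail1):
    """Return (pass, fail0, fail1, refinepatch) as percentages of total.

    refinepatch is taken to be passed + fail1 (assumption, see lead-in).
    """
    refinepatch = passed + fail1
    return tuple(100.0 * n / total for n in (passed, fail0, fail1, refinepatch))


def retention(before, after):
    """Percentage of patches kept by a filter step such as '7975 -> 7923'."""
    return 100.0 * after / before


if __name__ == "__main__":
    # Counts from the '---- Initial ----' block above.
    print(pmvs_percentages(2735, 329, 2279, 127))
    # First FilterOutside step above.
    print(retention(7975, 7923))
```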

- The Log Window gives:

Running Yasutaka Furukawa's CMVS tool...

cmvs /home/arnoud/onderwijs/VAR/3rdAssignment/rosbag2_2024_11_27-13_04_36_Correct/first_20_images_sparse_matching.cvms.nvm.cmvs/00/02.nvm.cmvs/00/ 50 8

genOption /home/arnoud/onderwijs/VAR/3rdAssignment/rosbag2_2024_11_27-13_04_36_Correct/first_20_images_sparse_matching.cvms.nvm.cmvs/00/02.nvm.cmvs/00/ 1 2 0.700000 7 3 8

1 cluster is generated for pmvs reconstruction.

pmvs2 /home/arnoud/onderwijs/VAR/3rdAssignment/rosbag2_2024_11_27-13_04_36_Correct/first_20_images_sparse_matching.cvms.nvm.cmvs/00/02.nvm.cmvs/00/ option-0000

This may take a little more time, waiting...

4 seconds were used by PMVS

Loading option-0000.ply, 6077 vertices ...

Loading patches and estimating point sizes..

#############################

You can manually remove bad MVS points:

1. Switch to dense MVS points mode;

2. Hit F1 key to enter the selection mode;

3. Select points by dragging a rectangle;

4. Hit DELETE to delete the selected points.

#############################

Save to 02.nvm ... done

Save /home/arnoud/onderwijs/VAR/3rdAssignment/rosbag2_2024_11_27-13_04_36_Correct/first_20_images_sparse_matching.cvms.0.ply ...done

----------------------------------------------------------------

Run dense reconstruction, finished

Totally 4.000 seconds use

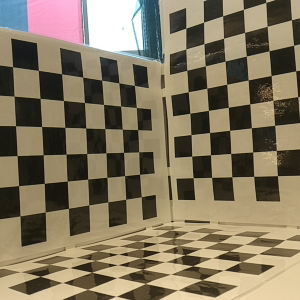

- That the matches fail is not strange, because the sequence starts in VisLab:

- Running the algorithm on frames 20-119 from mazeweek1.mp4. Should have done MatchSequentially, but brute force also seems to work. No segmentation fault yet. Matching 100 frames needed 164 seconds. Although finished, Dense Reconstruction seems not to start.

- Only did the Match, should have done the Sparse Reconstruction.

- Ran the Match sequence instead of n-to-n (goes faster, used step3).
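Why sequence matching goes faster than n-to-n can be seen from the number of image pairs. A sketch of the counting, where reading "step3" as a window of the three following frames is an assumption:

```python
def all_pairs(n_images):
    """Number of image pairs for n-to-n (brute-force) matching."""
    return n_images * (n_images - 1) // 2


def sequential_pairs(n_images, window):
    """Number of pairs when each image is matched only to its next `window` images."""
    return sum(max(0, n_images - offset) for offset in range(1, window + 1))


if __name__ == "__main__":
    n = 100
    print(all_pairs(n), sequential_pairs(n, 3))
```

For the 100 frames used here, brute force has to consider 4950 pairs, against 294 pairs for a window of three.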

- Sparse 3D reconstruction now fails on a missing libpba.so. That file is also not in /snap/visualsfm-mardy/current/lib/x86_64-linux-gnu/, so Bundle Adjustment should be built.

- Instead of pba_v1.0.4.zip, I downloaded pba_v1.0.5.zip from the project site.

- Ran make -f makefile_no_gpu, followed by cp bin/libpba_no_gpu.so ~/packages/vsfm/bin/libpba.so

- Ran the Sparse Reconstruction, although it gave:

#############################

Failed to find two images for initialization

Resuming SfM finished, 28 sec used

- Also the Dense Reconstruction was not able to improve the result.

December 2, 2024

- The combination of /snap/visualsfm-mardy/current/bin/VisualSFM and ~/packages/SiftGPU/bin/libsiftgpu.so starts nicely with reading in the images and calculating the SIFT features, but then again crashes after SIFT 0491 on GetPixelMatrix():

#0 in BasicImage::GetPixelMatrix(Points&) ()

#1 in BasicImage::ValidateFeatureData() ()

#2 in NViewMatcher::ComputeMissingFeature() ()

#3 in NViewMatcher::ComputeMissingMatch(char const*, int)

- Made a shorter version (20 files) of the jpgs. Now the Match finishes successfully. Next step (Sparse reconstruction) fails, but I can try to build that library:

ERROR: unable to Load libpba.so (Multicore Bundle

Adjustment). Make sure you have libpba.so in the VisualSFM

bin folder; Run to check its dependencies.

- Downloaded pba (v1.0.5).

- Compiled with make -f makefile_no_gpu, which gives several warnings (maybe should use -std=c++11), on -Wreorder, -Wsign-compare, -Wnarrowing, -Waggressive-loop-optimizations, -Warray-bounds, -Wmaybe-uninitialized.

- The result is bin/libpba_no_gpu.so, which I copied to ~/packages/SiftGPU/bin/libpba.so. That gives the possibility to start the Sparse Reconstruction step, although the first 20 images are not the best choice (movement seems to start only later):

#21/21: 3301 projs and 595 pts added.

PBA: 2500 3D pts, 21 cams and 19498 projs...

PBA: 2.497 -> 2.176 (22 LMs in 1.67sec)

#points w/ large errors: 3

PBA: 2497 3D pts, 21 cams and 19481 projs...

PBA: 2.171 -> 2.171 (13 LMs in 0.70sec)

Focal Length : [903.355]->[838.055]

Radial Distortion : [-0.771 -> -36]

END: No more images to add [0 projs]

#############################

Failed to find two images for initialization

Resuming SfM finished, 80 sec used

---------------------------------------------------

21 cams, 2502 pts (3+: 2048)

19481 projections (3+: 18583)

---------------------------------------------------

1 model(s) reconstructed from 21 images;

21 modeled; 0 reused; 0 EXIF;

0MB(0) used to store feature location.

---------------------------------------------------

########-------timing------#########

Structure-From-Motion finished, 80 sec used

79.7(79.3) seconds on Bundle Adjustment (+)

79.6(79.2) seconds on Bundle Adjustment (*)

#############################

----------------------------------------------------------------

Run full 3D reconstruction, finished

Totally 80.000 seconds used

- The CMVS button starts Yasutaka Furukawa's CMVS tool externally, with cmvs *.cvms, which gives:

ERROR: the above CMVS command failed!

- Time to build this CMVS tool. The tool actually has three dependencies: Bundler, PMVS2 and Graclus.

- Note that Bundler is not in the list of Scott Sawyer's build instructions.

- First check ldd pmvs2, two libraries are missing libjpeg.so.62 and libgfortran.so.1.

- Scott Sawyer gives some Hack PMVS-2 tips, because libjpeg.so.62 was already outdated for Ubuntu 12.04.

- This hack fails, because mylapack.o is not compiled with -fPIE.

- Without this hack, building lapack fails on ../base/numeric/mylapack.cc:6:10: fatal error: clapack/f2c.h: No such file or directory.

- f2c is a Fortran-to-C converter, which is still maintained (last update March 2024). I tried these Install instructions.

- Those no longer work, although the script shows nicely how to make and install from code.

- Made a directory ~/packages/pmvs-2/program/base/numeric/clapack.

- This solves the first compilation error, but next would be #include

- I actually already installed lapack-dev, which can be found at /usr/lib/x86_64-linux-gnu/lapack. Actually, I also have/home/arnoud/git/Pangolin/scripts/vcpkg/ports/lapack and /home/arnoud/git/lvsb/build/pvsb/superbuild/eigen/

- The Pangolin one is just a CMakeLists.txt with lapack as required package. The lsvb option is mainly Fortran code, with a few cpp-files.

- Should look at clapack.

- That works, pmvs2 is compiled. Only library missing is libgfortran.so.1

- There are several libgfortran-*-dev versions available, but the oldest seems to be libgfortran-4.7-dev.

- Looking if I can use this trick, but with trusty (14.04) instead of bionic (18.04). I saw in this post that libgfortran1 is based on gcc-4.1.

- With apt search I found sudo apt-get install g++-4.4 (trusty).

- Yet, no libgfortran options from trusty. Tried bionic, latest is libgfortran3. Tried precise (12.04 LTS) and lucid (10.04), but in neither archive can libgfortran1 be found.

- Luckily, libgfortran1 can be found in hardy (8.04).
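
- The ldd check above can be scripted. A minimal sketch, assuming the usual ldd output format in which an unresolved dependency is printed as `name => not found`; the function name and the sample text are made up for illustration (the sample is not the real output of ldd pmvs2):

```python
from typing import List


def missing_libraries(ldd_output: str) -> List[str]:
    """Return the library names that ldd reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        # An unresolved dependency is printed as '<name> => not found'.
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


# Hypothetical ldd output, shaped like the pmvs2 case in this entry.
sample = (
    "\tlinux-vdso.so.1 (0x00007ffd1a3c9000)\n"
    "\tlibjpeg.so.62 => not found\n"
    "\tlibgfortran.so.1 => not found\n"
    "\tlibc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f2b4c000000)\n"
)
print(missing_libraries(sample))
```

On a real binary the text would come from something like `subprocess.run(["ldd", "pmvs2"], capture_output=True, text=True).stdout`.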

-

- Shaodi highlighted CVPR 2024 Workshop on Autonomous Driving

November 28, 2024

- Let's see if I can make vsfm on Ubuntu 20.04.

- Skipped the old static libs in the makefile. After adding -llapack -ljpeg the tool is built.

- The GUI starts. Adding the images gives:

(VisualSFM:45660): GLib-GObject-WARNING **: 11:39:05.459: ../../../gobject/gsignal.c:2086: type 'GtkRecentManager' is already overridden for signal id '319'

Segmentation fault (core dumped)

- Used gdb backtrace to see that the program crashed in jpeg_CreateDecompress () (/lib/x86_64-linux-gnu/libjpeg.so.8), called by BitmapRGBI::OpenJPEG().

- When I try to do sudo apt-get install --dry-run libjpeg62-dev, 131 (ROS) packages will be removed.

- Tried to build vsfm on nb-ros (Ubuntu 18.04). Also linked to libjpeg.so, so also crashes on loading a jpg.

- Loading a pgm actually worked. So, look if I can modify the script to export pgms.

- pgm files are bitmaps that hold a gray image. That seems to work. The other option would be ppm. Made a list of pgms, which could be loaded (although there are more than 500, so the rest is skipped). No libsiftgpu.so, so no matches.
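
- Instead of losing everything after the first 500 entries, the list could be thinned out evenly before loading. A minimal sketch, assuming the limit of 500 observed above; the function name and the file names are hypothetical:

```python
from typing import List


def subsample(paths: List[str], limit: int = 500) -> List[str]:
    """Pick at most `limit` entries, evenly spread over the sorted list."""
    ordered = sorted(paths)
    if len(ordered) <= limit:
        return ordered
    step = len(ordered) / limit  # > 1, so the picked indices are distinct
    return [ordered[int(i * step)] for i in range(limit)]


# Hypothetical file names; 4459 is the image count of the maze rosbag.
frames = [f"frame_{i:04d}.pgm" for i in range(4459)]
selection = subsample(frames)
print(len(selection), selection[0], selection[-1])
```

This keeps the whole trajectory covered, at the price of a larger baseline between consecutive images.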

- Let's see if I can make siftgpu on ws9 (with Nvidia-card). Note the 2012 build instructions.

- The option siftgpu_enable_cuda is true per default. Switched it off.

- Missing library IL, so did sudo apt install libdevil-dev.

- Code still fails on SiftMatchCU::CheckCudaDevice(int)

- Compilation loads both ./include/GL/glut.h and /usr/include/GL/gl.h

- Anyway, that is for the viewer. The command make siftgpu only builds the libsiftgpu.so. When specified in LD_LIBRARY_PATH, the matches are used in the reconstruction. After SIFT 0249 the program crashes on BasicImage::GetPixelMatrix(), called from BasicImage::ValidateFeatureData().

- The tool is made from VisualSFM.a, so no option to inspect the code.

- Looked into position-independent executables. Adding -static to gcc removes the -fPIE warning, but now all dynamic libraries can no longer be found (including dynamic loading of libsiftgpu.so).

November 27, 2024

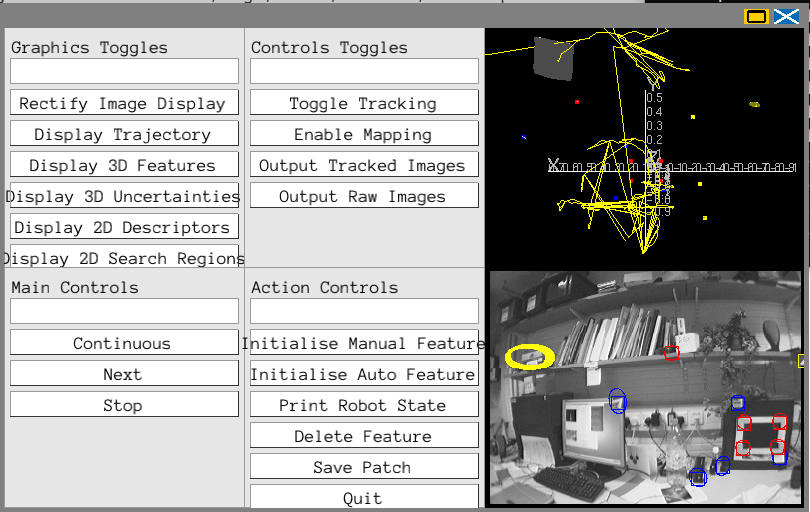

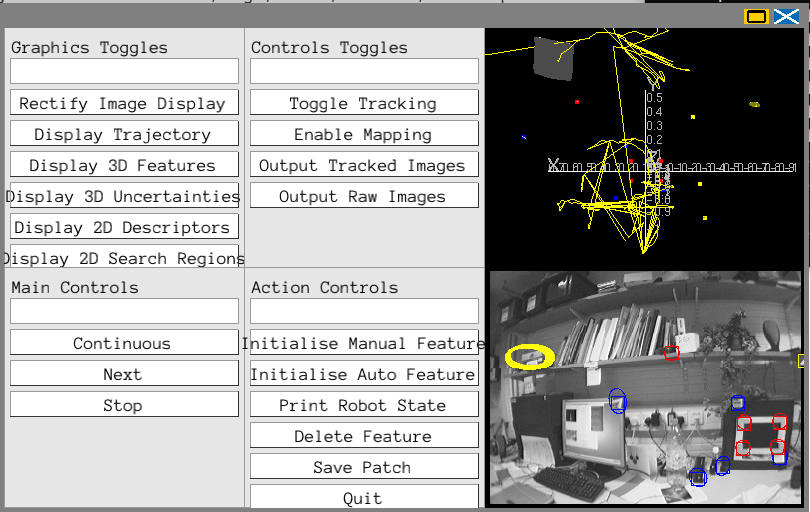

- The VisualSFM tool is based on a 3DV 2013 paper.

- The tool should be configurable with multiple algorithms. As indicated in documentation, you need SiftGPU for feature detection and matching, PBA for sparse reconstruction, PMVS/CMVS for dense reconstruction.

-

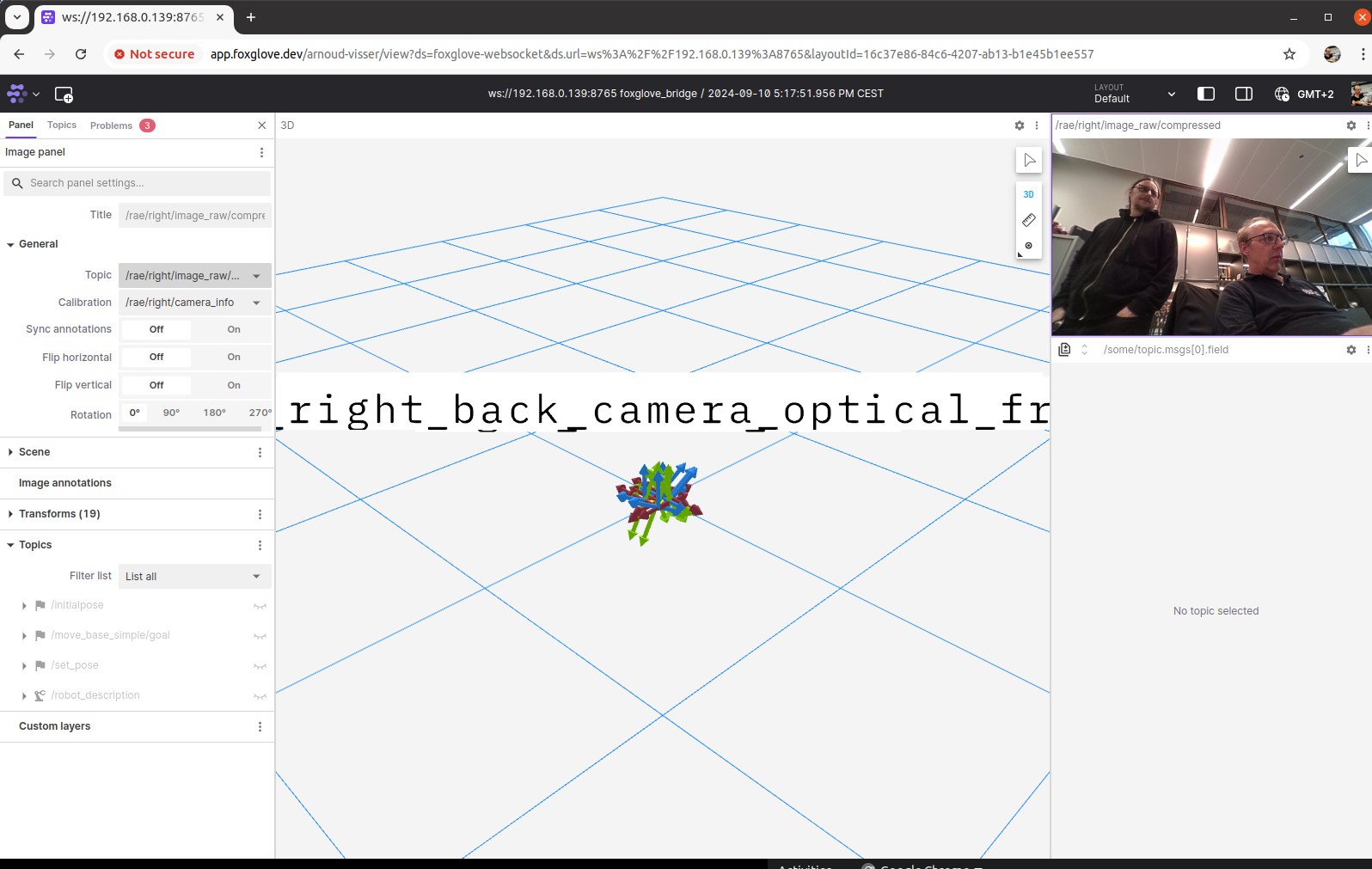

- Received the rosbag recorded in the maze.

- Following Recording and playing back data tutorial.

- Did ros2 bag info rosbag2_2024_11_27-13_04_36_Correct/rosbag2_2024_11_27-13_04_36_0.db3, and received:

Files: rosbag2_2024_11_27-13_04_36_Correct/rosbag2_2024_11_27-13_04_36_0.db3

Bag size: 508.8 MiB

Storage id: sqlite3

Duration: 248.446s

Start: Nov 27 2024 13:04:37.390 (1732709077.390)

End: Nov 27 2024 13:08:45.837 (1732709325.837)

Messages: 63709

Topic information: Topic: /diff_controller/odom | Type: nav_msgs/msg/Odometry | Count: 4477 | Serialization Format: cdr

Topic: /imu/data | Type: sensor_msgs/msg/Imu | Count: 13750 | Serialization Format: cdr

Topic: /odometry/filtered | Type: nav_msgs/msg/Odometry | Count: 5534 | Serialization Format: cdr

Topic: /rae/imu/data | Type: sensor_msgs/msg/Imu | Count: 13756 | Serialization Format: cdr

Topic: /rae/imu/mag | Type: sensor_msgs/msg/MagneticField | Count: 13755 | Serialization Format: cdr

Topic: /rae/right/camera_info | Type: sensor_msgs/msg/CameraInfo | Count: 4436 | Serialization Format: cdr

Topic: /rae/right/image_raw/compressed | Type: sensor_msgs/msg/CompressedImage | Count: 4459 | Serialization Format: cdr

Topic: /tf | Type: tf2_msgs/msg/TFMessage | Count: 3541 | Serialization Format: cdr

Topic: /tf_static | Type: tf2_msgs/msg/TFMessage | Count: 1 | Serialization Format: cdr
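
- A quick sanity check on the bag info above. This is only a sketch: the counts and the duration are copied from the output, the variable names are mine. It checks that the per-topic counts add up to the reported total and derives the average rate per topic:

```python
# Duration and message counts as reported by ros2 bag info above.
duration_s = 248.446
counts = {
    "/diff_controller/odom": 4477,
    "/imu/data": 13750,
    "/odometry/filtered": 5534,
    "/rae/imu/data": 13756,
    "/rae/imu/mag": 13755,
    "/rae/right/camera_info": 4436,
    "/rae/right/image_raw/compressed": 4459,
    "/tf": 3541,
    "/tf_static": 1,
}

total = sum(counts.values())
print("total messages:", total)

# Average publishing rate per topic over the whole recording.
rates_hz = {topic: n / duration_s for topic, n in counts.items()}
for topic, rate in rates_hz.items():
    print(f"{topic:35s} {rate:6.1f} Hz")
```

The camera comes out at roughly 18 Hz and both IMU topics at roughly 55 Hz.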

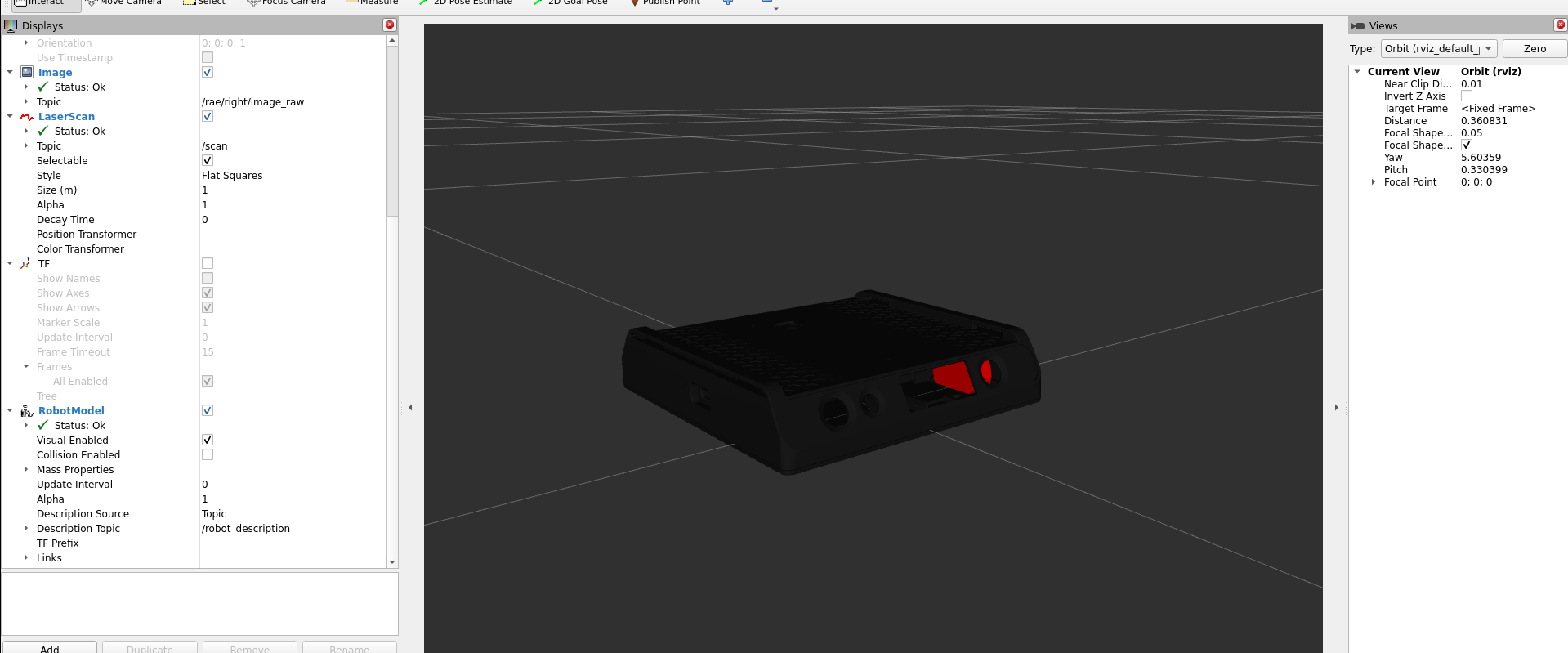

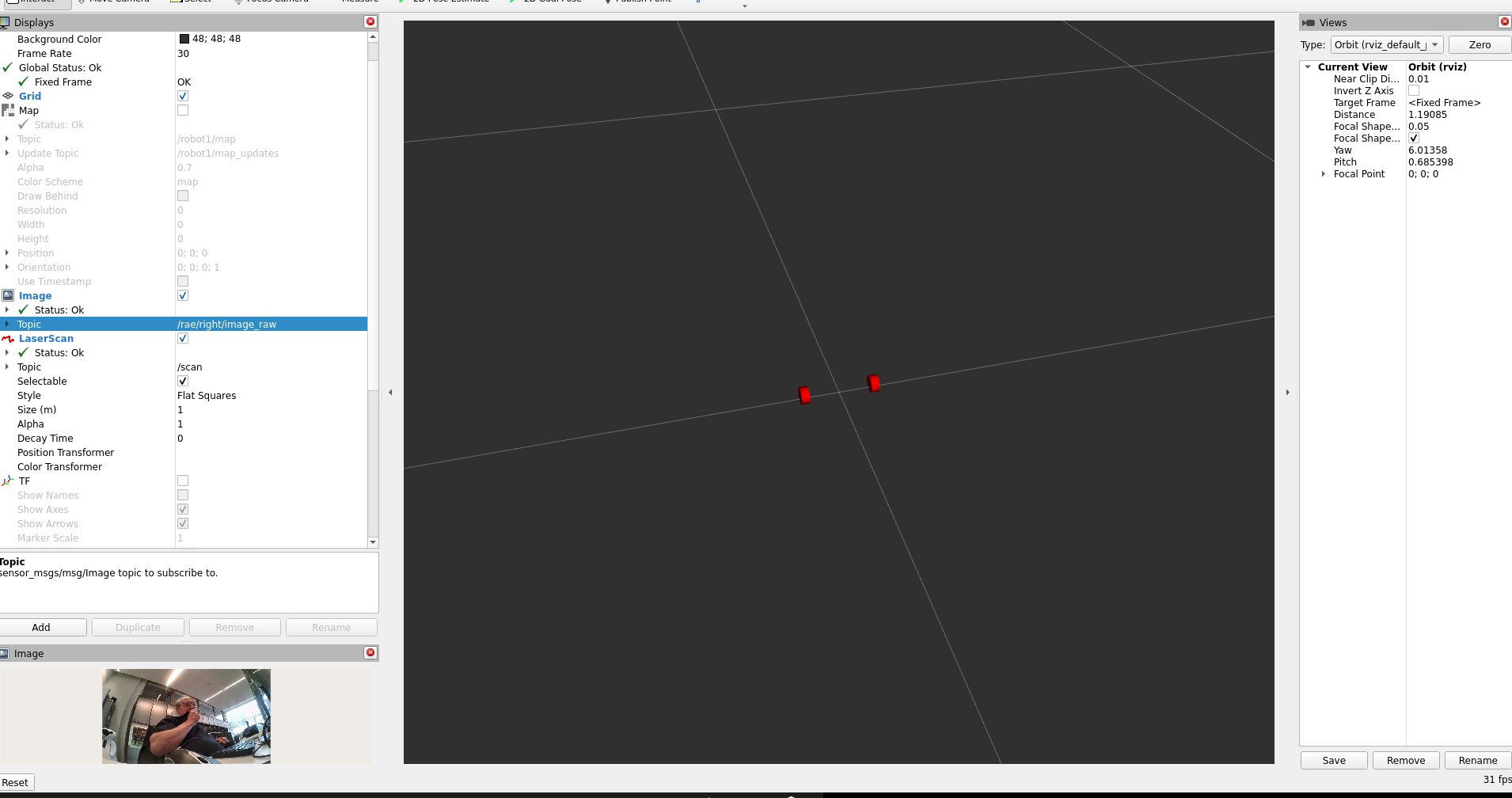

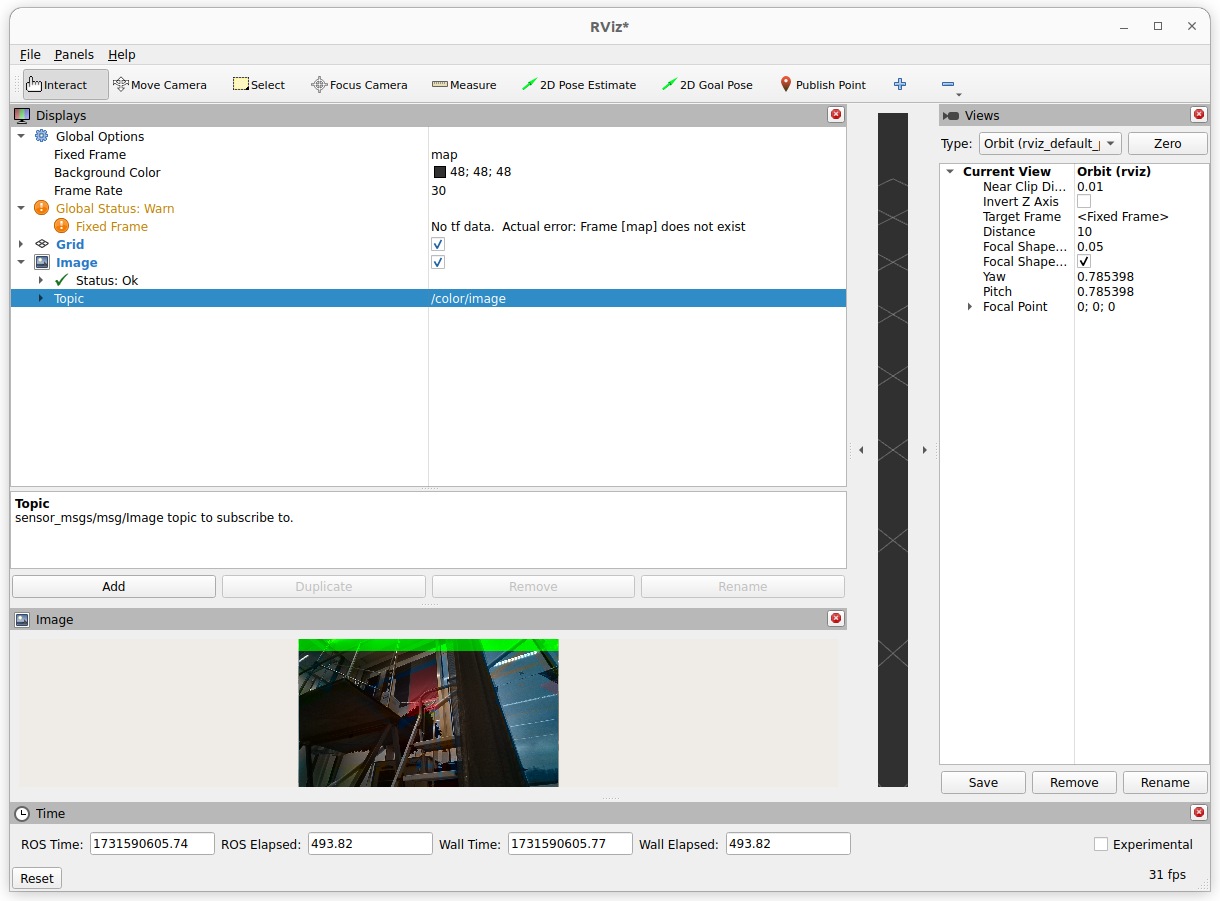

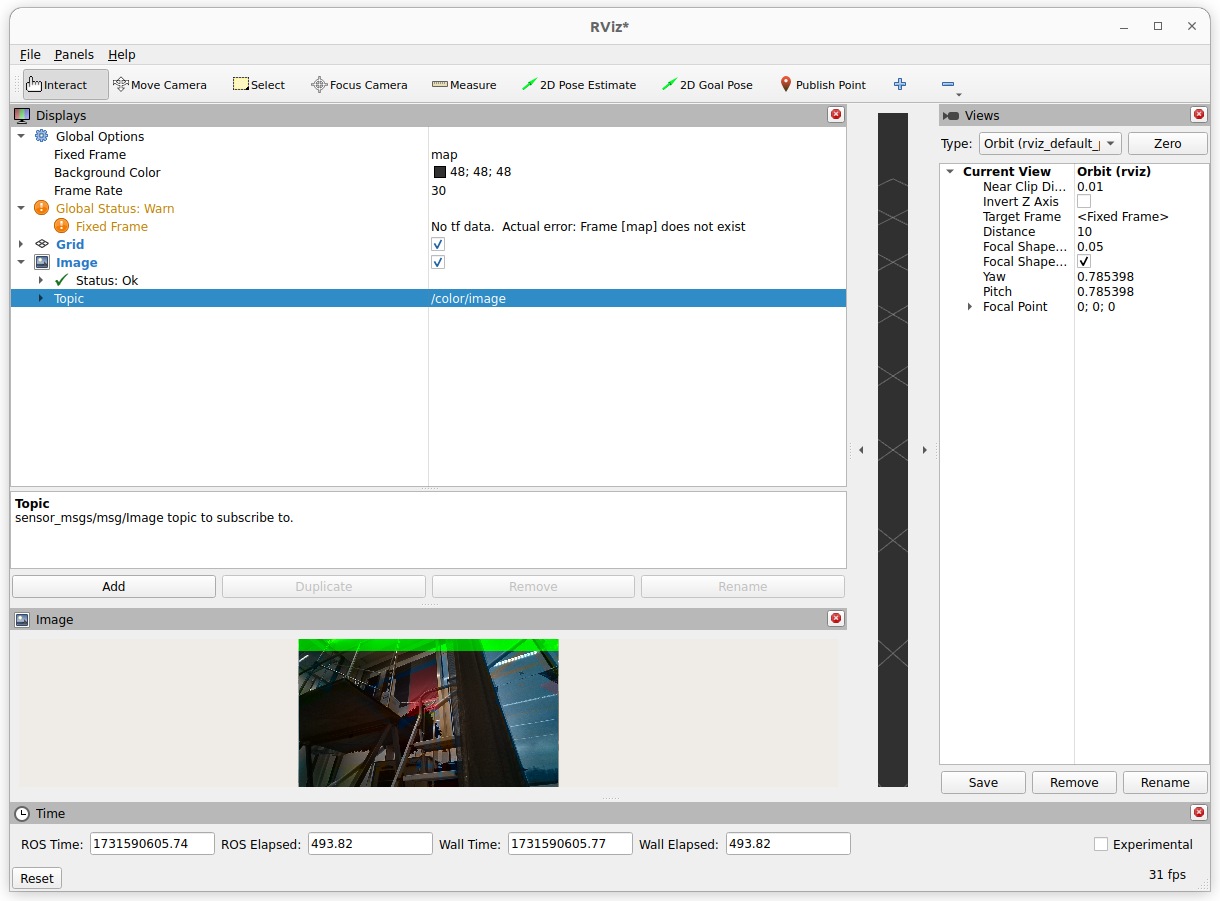

- Did ros2 bag play rosbag2_2024_11_27-13_04_36_Correct/rosbag2_2024_11_27-13_04_36_0.db3, but do not see any measurements in rviz2. Maybe I should set the domain (not specified in metadata.yaml).

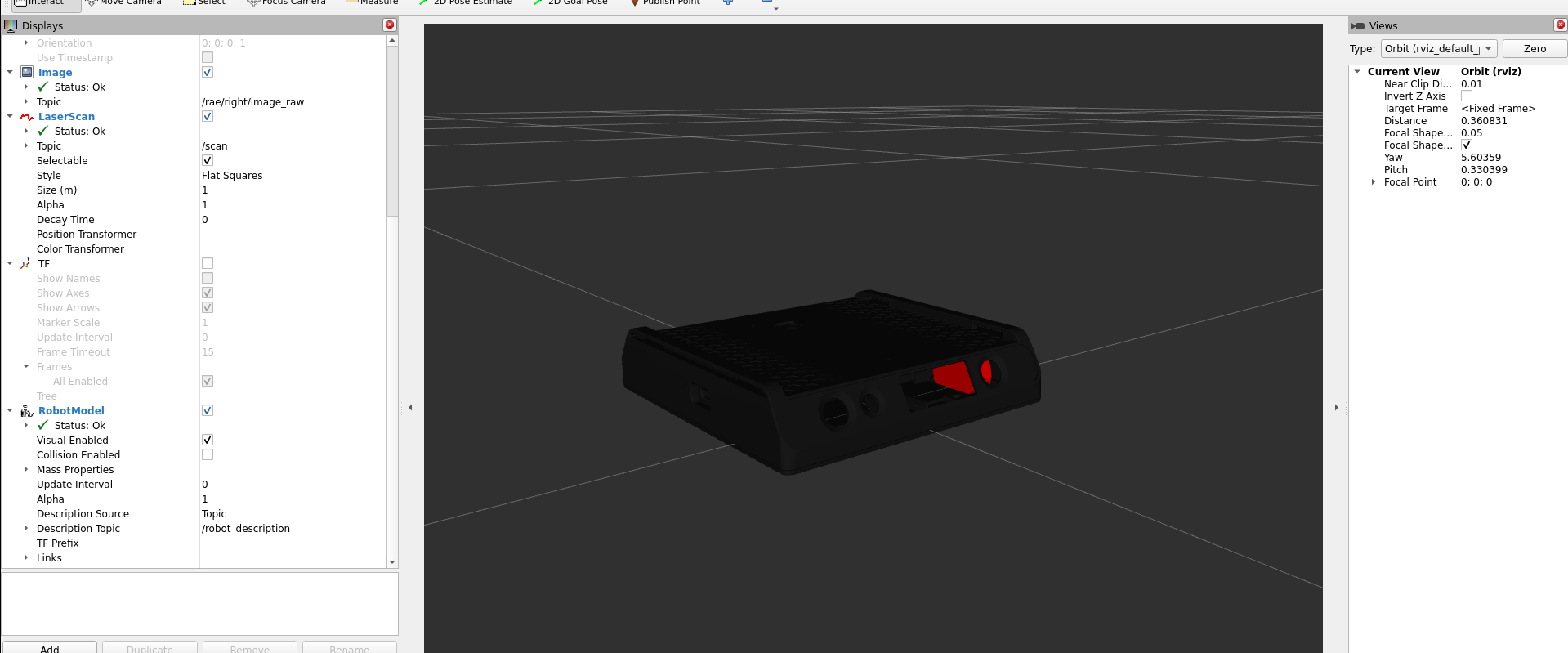

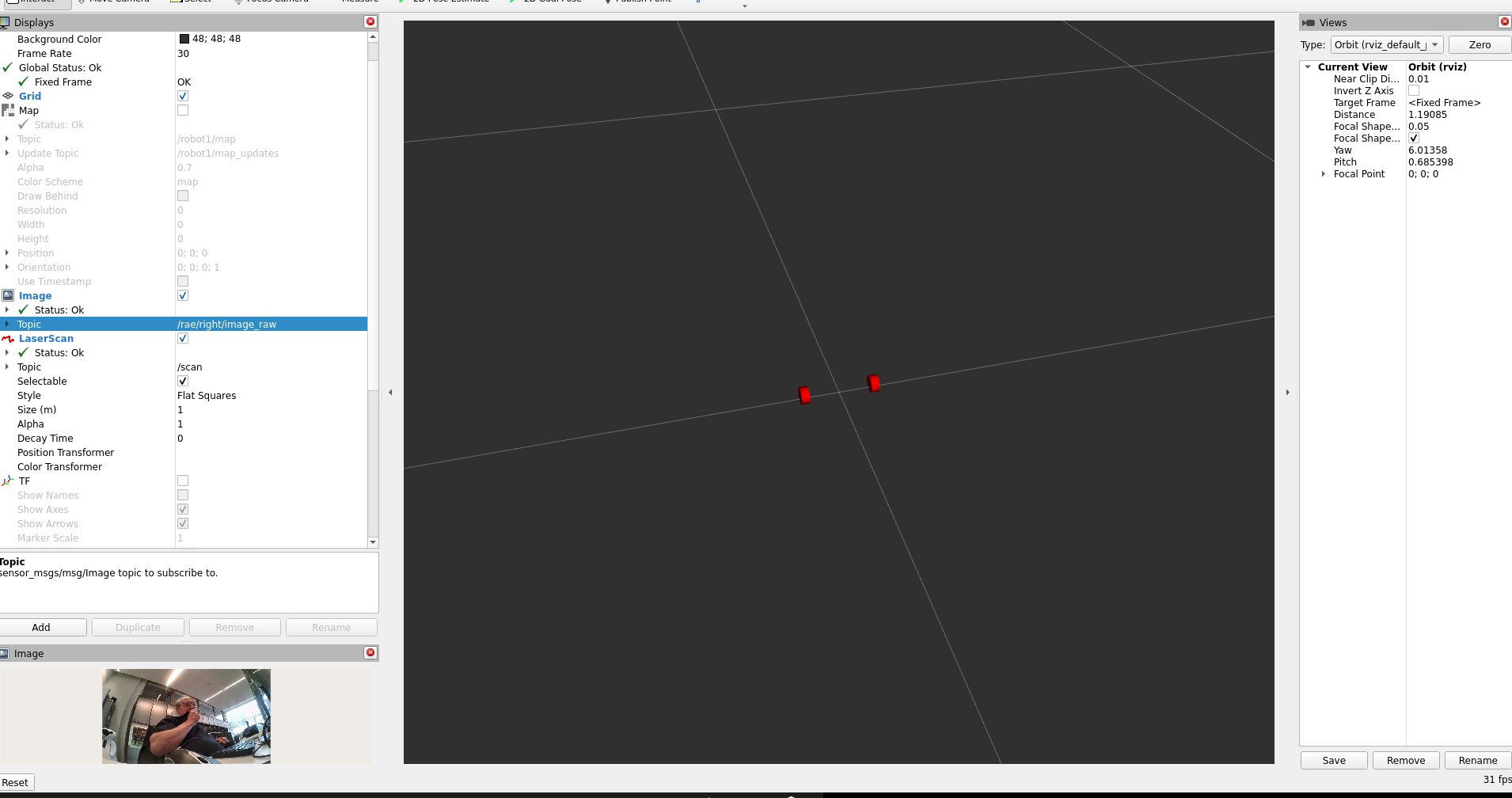

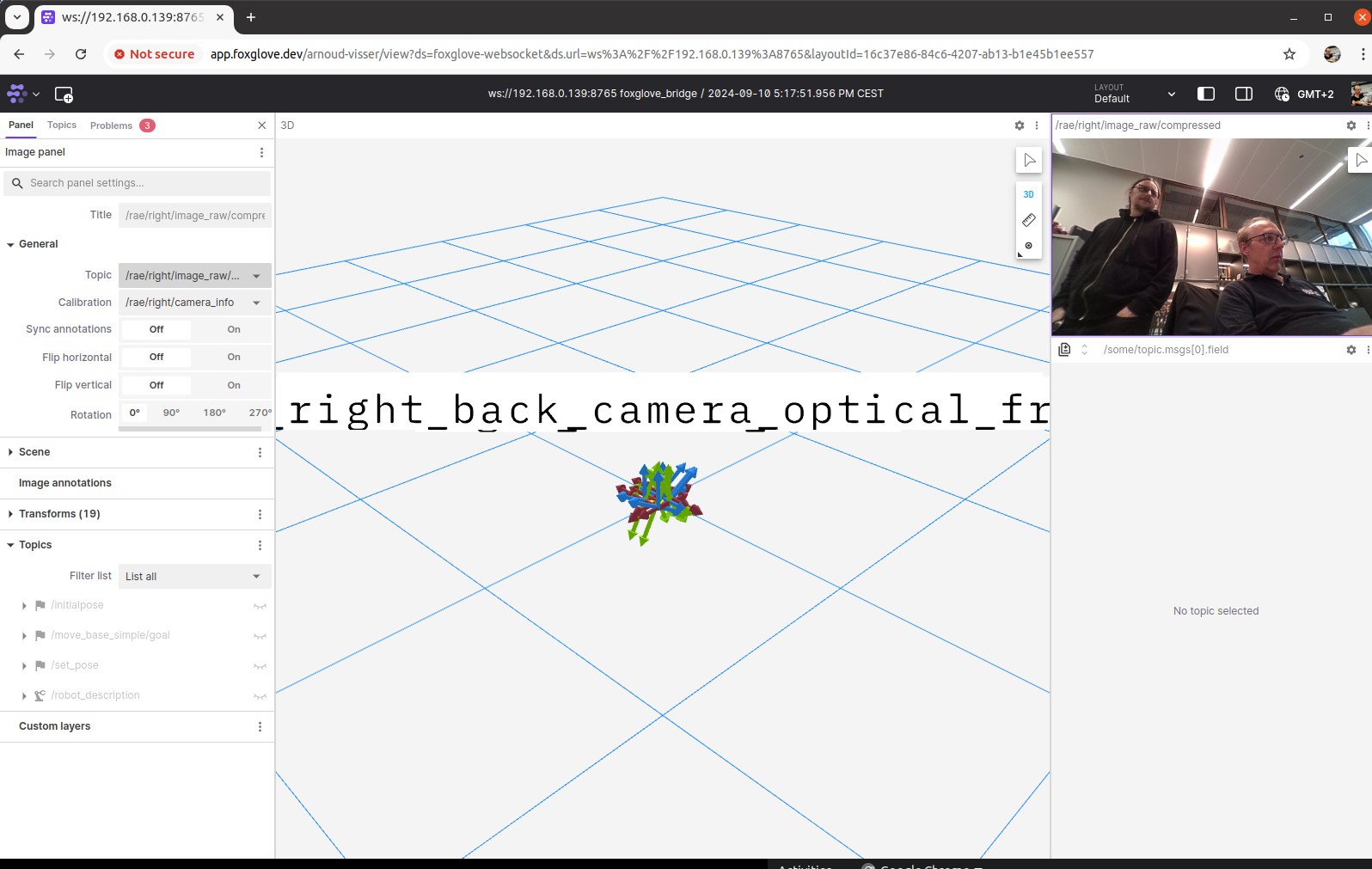

- Now I get the compressed images in rviz2 (subscribed before on the uncompressed raw images).

- Looking if this script works.

- This script works with the rosbags-image package. Adjusted export_jpg_images.py to work on the rae-topic.

- The Linux installation instructions of VisualSFM point to this Ubuntu 12.04 installation script, which lists 6 algorithms to be installed.

- Created in my Ubuntu 20.04 home ~/packages/vsfm.

- A plain make fails multiple times with: relocation R_X86_64_32 against `.rodata.str1.1' can not be used when making a PIE object; recompile with -fPIE.

- Tried sudo apt-get install libgtk2.0-dev libglew1.6-dev libglew1.6 libdevil-dev libboost-all-dev libatlas-cpp-0.6-dev libatlas-dev imagemagick libatlas3gf-base libcminpack-dev libgfortran3 libmetis-edf-dev libparmetis-dev freeglut3-dev libgsl0-dev, which fails on v1.6.

- Instead, did sudo apt-get install libgtk2.0-dev libglew-dev glew-utils libdevil-dev libboost-all-dev libatlas-cpp-0.6-dev libatlas-dev imagemagick libatlas-dev libcminpack-dev libgfortran3 libmetis-edf-dev libparmetis-dev freeglut3-dev libgsl-dev.

- Still the same error, the precompiled libraries seem to be outdated.

- Let's try to use snap (0.5.26-2 from Alberto Mardegan (mardy) installed).

- Running /snap/visualsfm-mardy/current/bin/VisualSFM works, although I get the warning Unable to write to log file /snap/visualsfm-mardy/3/bin/log/[24_11_27][15_10_14][95].log.

- The command /snap/visualsfm-mardy/current/bin/VisualSFM sfm ./images/ my_result.nvm nearly works (png instead of jpg images).

- With the script modified to jpg images the images are loaded, although VisualSFM gives the warning unable to load libsiftgpu.so. Result:

##########-------timing------#########

1 Feature Detection finished, 0 sec used

#############################

9939111 pairs to compute match

NOTE: using 3 matching workers

###########-------timing------#########

9939111 Image Match finished, 10 sec used

#############################

Save to /home/arnoud/onderwijs/VAR/3rdAssignment/rosbag2_2024_11_27-13_04_36_Correct/my_result.nvm ... done

0 pairs have two-view models

0 pairs have fundamental matrices

Save to /home/arnoud/onderwijs/VAR/3rdAssignment/rosbag2_2024_11_27-13_04_36_Correct/my_result.nvm ... done

----------------------------------------------------------------

VisualSFM 3D reconstruction, finished

Totally 12.000 seconds used
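
- The 9939111 pairs in this log are exactly all unordered pairs of the 4459 images, which matches the message count of /rae/right/image_raw/compressed in the bag. A small check (variable names are mine):

```python
# Number of compressed images in the rosbag (from ros2 bag info).
n_images = 4459

# Exhaustive matching compares every unordered pair once: n * (n - 1) / 2.
pairs = n_images * (n_images - 1) // 2
print(pairs)  # 9939111
```

So VisualSFM scheduled exhaustive matching. Presumably the pairs were finished in 10 seconds only because there were no SIFT features to match without libsiftgpu.so, which would be consistent with the 0 two-view models.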

- Tried to make SiftGPU, but that fails on src/SiftGPU/CuTexImage.cpp:35:10: fatal error: cuda_gl_interop.h: No such file or directory.

- Looked into /snap/visualsfm-mardy/3/lib/x86_64-linux-gnu/, but indeed no libsiftgpu.so

- Could be that it is part of another snap by Alberto Mardegan: snap install colmap-mardy.

November 26, 2024

- The boot-repair creates and displays a report when you decide not to send it to the forum.

- The diagnosis found nothing to repair.

- The HDD is not visible, neither in fdisk nor in gparted.

- Should look for a harddisk-repair disk from ct-magazine.

-

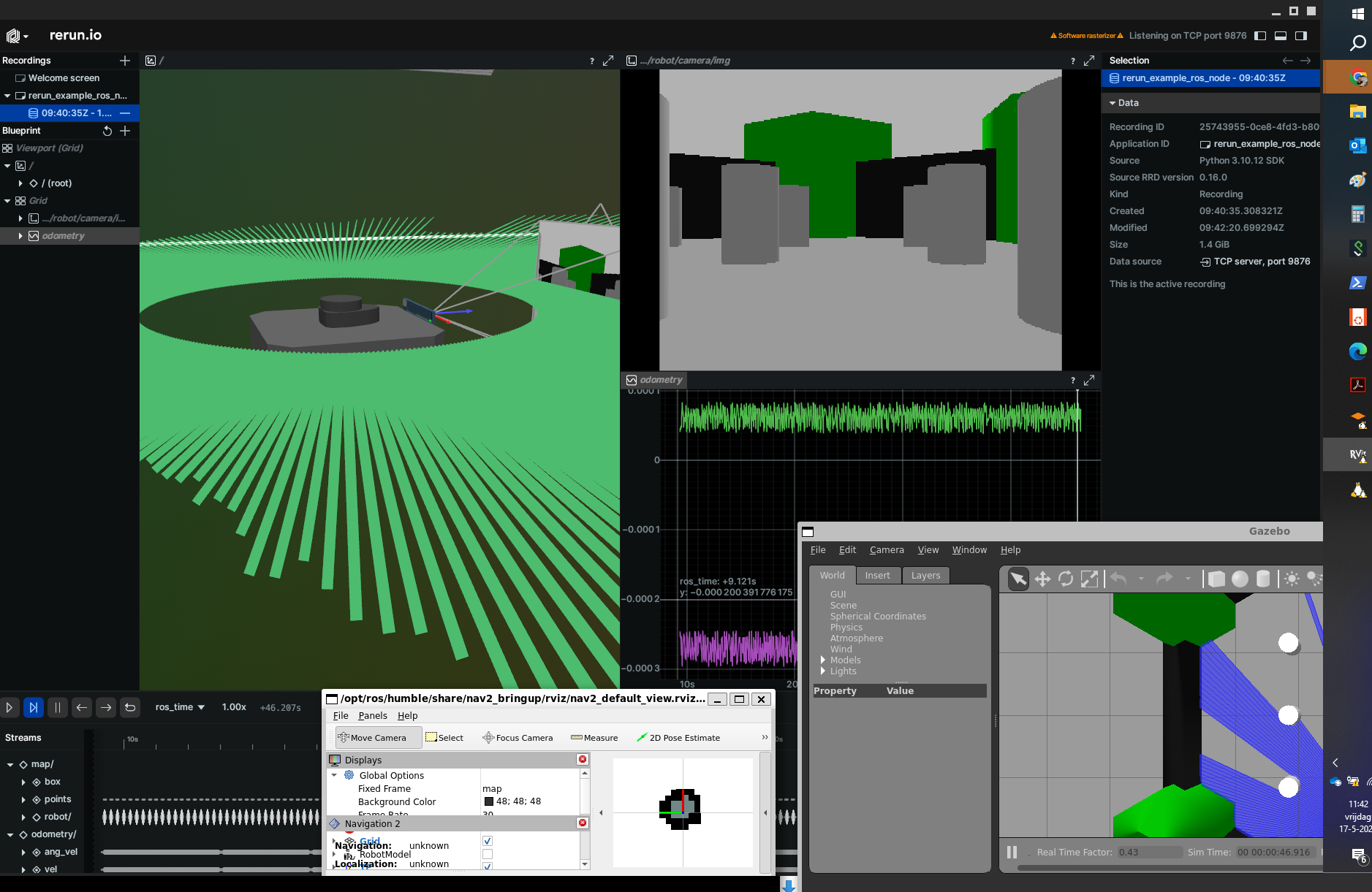

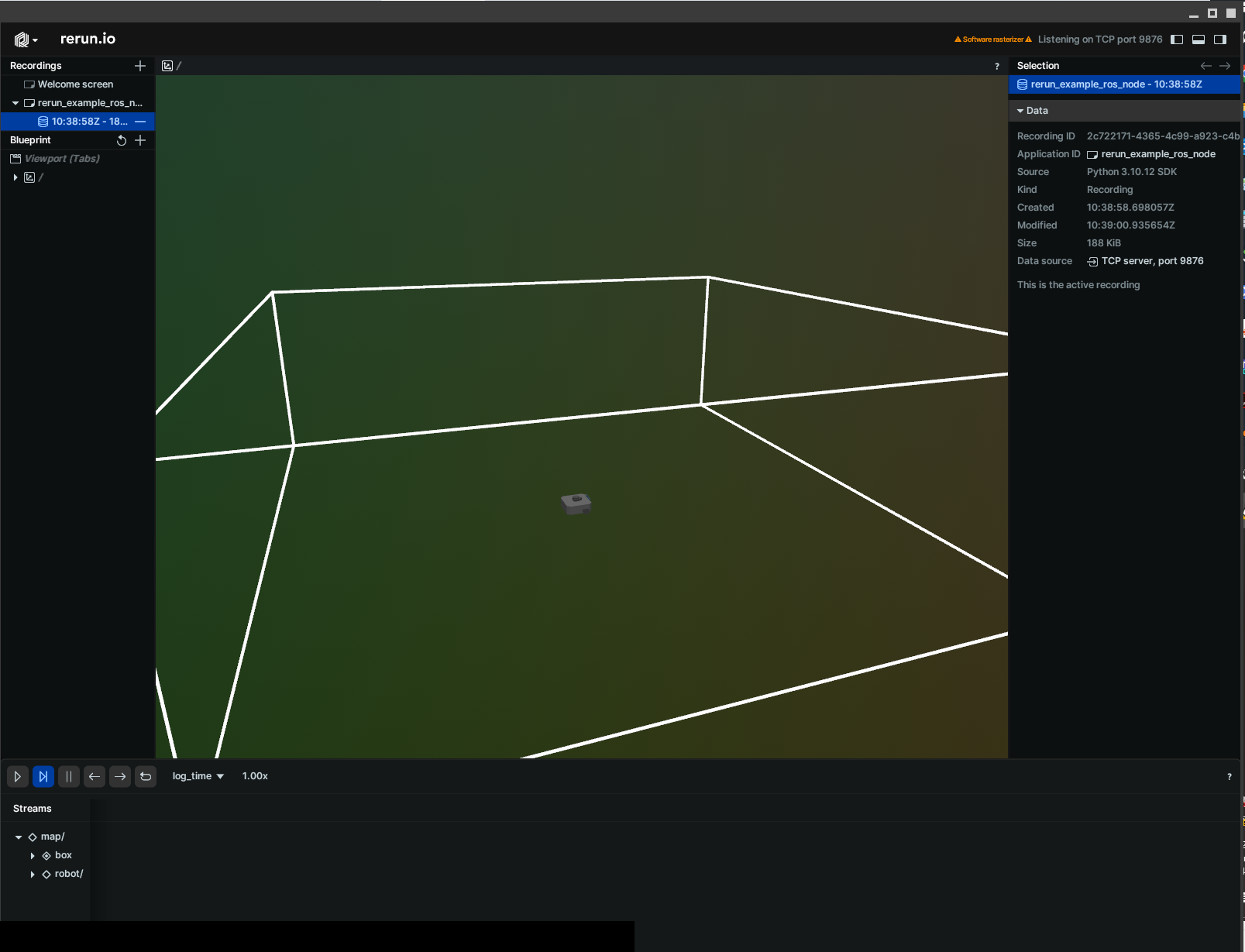

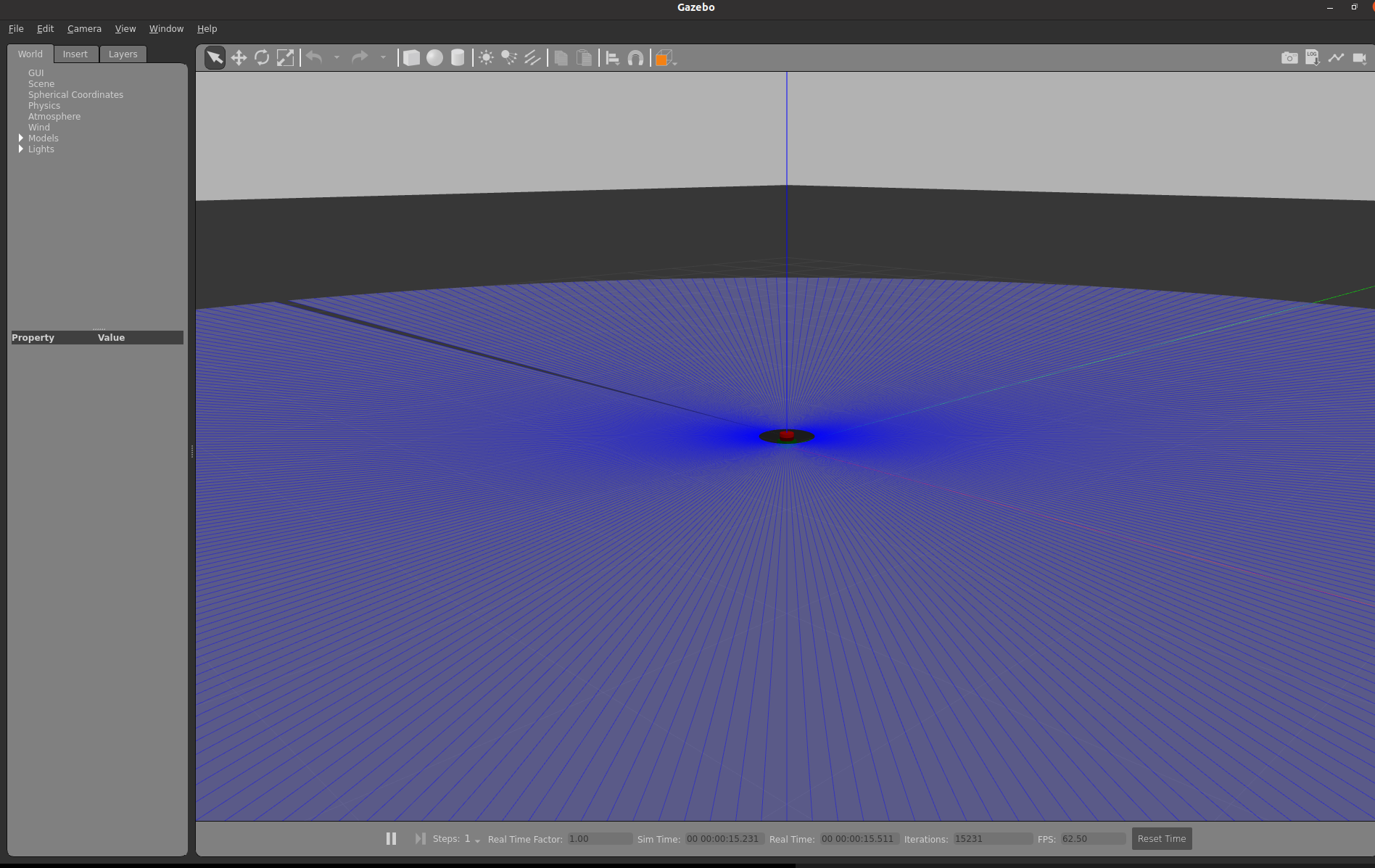

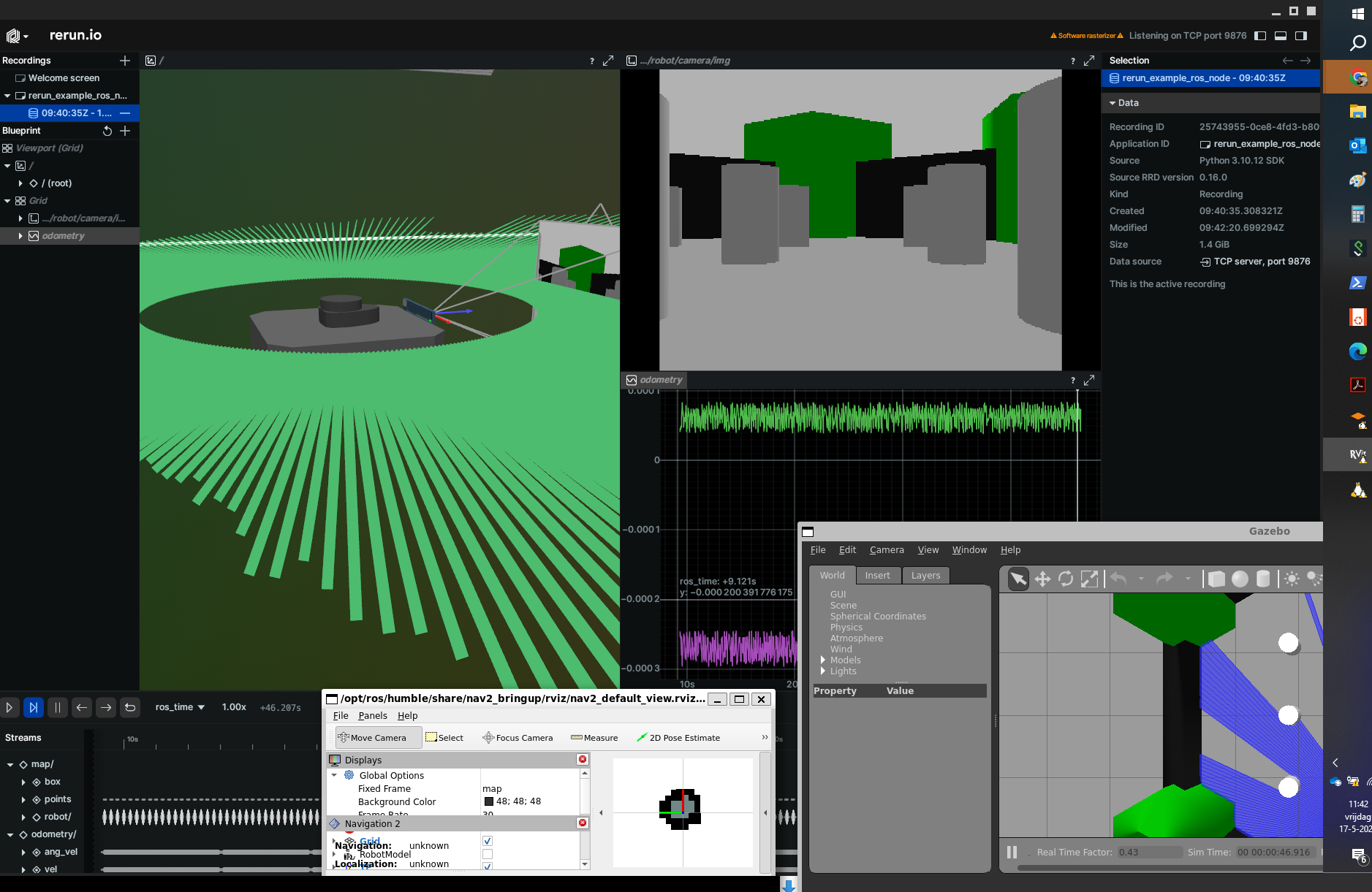

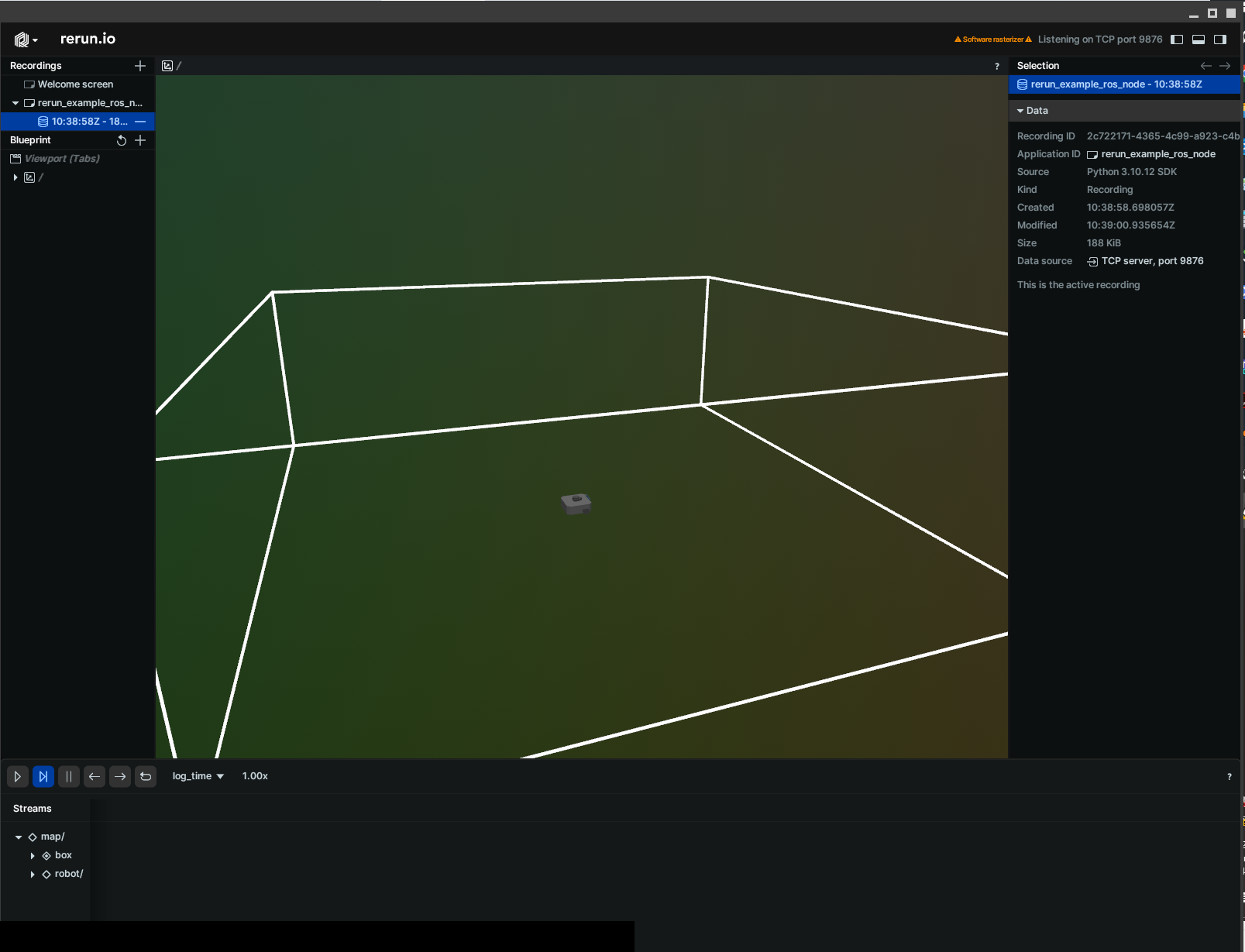

- Looking at the Navigating While Mapping tutorial.

- No background information in the tutorial, just hands-on.

- Reading SLAM Toolbox article (2020).

- The Nav2 layer is explained in Spatio-temporal voxel layer: A view on robot perception for the dynamic world (2020).

- The article indicated that Robot Pose EKF is no longer used, replaced by the Robot Localization package.

- As plugin, the article points to D* lite planner.

- The Navigation stack perception pipeline resides in the Costmap 2D package. It includes an obstacle layer and the Voxel Layer (VL). The VL can place information from 2D or 3D sensors in a dense 3D layer. It relies on a modified Bresenham's voxel clearing algorithm to get free space.

- Octomap, RTAB-Map and Cartographer can generate 3D-point clouds, but are not designed to be used for navigation or obstacle avoidance in real-time.

- The new Spatio-Temporal Voxel Layer (STVL) includes concepts like voxel decay and decay acceleration.

- The decay acceleration makes use of frustum models of the sensors. 3D laser scanners such as the VLP-16 use a slightly modified frustum model.

- The data structure used is OpenVDB from DreamWorks Animation. It resembles B+trees and octrees.

- Most robots nowadays use docker deployments.

- Could look for the ROSCon 2018 presentation. He mentions at 10m that publishing the voxel map is quite expensive.

- The code can be found at github. The branch is ros2, although the documentation still mentions ros-kinetic.

- Steve mentions in his video also nonpersistent_voxel_layer, which stores only the most recent measurement.
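
- To make the voxel decay idea concrete for myself, a toy sketch. This is not the STVL implementation (which is C++ on top of OpenVDB): it uses a plain dict, a single fixed decay time, and no frustum-based decay acceleration. All names are hypothetical.

```python
import math
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]


class DecayingVoxelGrid:
    """Sparse voxel grid in which every voxel expires after decay_time."""

    def __init__(self, resolution: float, decay_time: float) -> None:
        self.resolution = resolution
        self.decay_time = decay_time
        self.last_seen: Dict[Voxel, float] = {}  # voxel index -> last stamp

    def key(self, x: float, y: float, z: float) -> Voxel:
        """Map a metric point to its integer voxel index."""
        r = self.resolution
        return (math.floor(x / r), math.floor(y / r), math.floor(z / r))

    def mark(self, x: float, y: float, z: float, stamp: float) -> None:
        """Mark the voxel containing the point as occupied at time stamp."""
        self.last_seen[self.key(x, y, z)] = stamp

    def prune(self, now: float) -> int:
        """Remove voxels older than decay_time; return how many were removed."""
        expired = [v for v, t in self.last_seen.items()
                   if now - t > self.decay_time]
        for v in expired:
            del self.last_seen[v]
        return len(expired)

    def occupied(self) -> Set[Voxel]:
        return set(self.last_seen)


grid = DecayingVoxelGrid(resolution=0.05, decay_time=5.0)
grid.mark(1.0, 2.0, 0.3, stamp=0.0)   # old observation
grid.mark(1.5, 2.0, 0.3, stamp=4.0)   # recent observation
removed = grid.prune(now=6.0)         # only the old voxel has decayed
print(removed, len(grid.occupied()))
```

A nonpersistent layer would correspond to a decay time of zero: only the most recent measurement survives.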

-

- D* is covered in Peter Corke's chapter 5 (section 5.4.2).

- The resource section points to Python Robotics, which includes Dijkstra, A*, D* and Potential Field algorithms.

- Steve LaValle's software page contains RRT code.

- Siegwart covers A* and D* at page 385 (Chapter 6).

- Voxels are not in Siegwart's index.

- Peter Corke covers Voxels in 14.7, including code to fit planes.

- For viewing the Cloud Compare tool is mentioned (C++ based).

- It seems that Open3D has a PointCloud class wrapper.

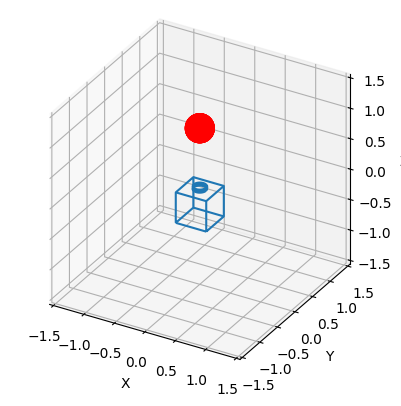

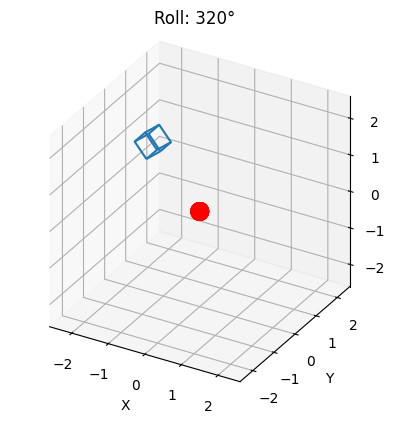

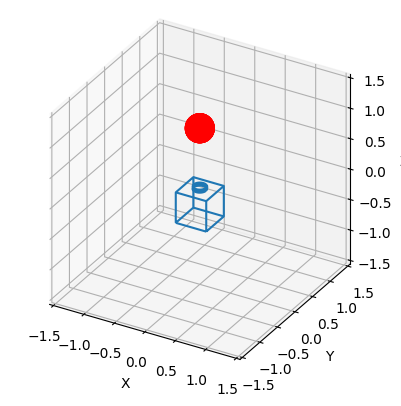

- Ran the Chapter 5 notebook.

November 25, 2024

- Looked into the code of EKF which can be found at ~/.local/lib/python3.8/site-packages/roboticstoolbox/mobile/EKF.py.

- This EKF function can be called with several arguments. With only robot as argument it works as dead reckoning; with robot, sensor & map as localisation; without map it does map creation, or even SLAM when V is given.

- V is the estimated odometry noise and P0 the initial covariance matrix.

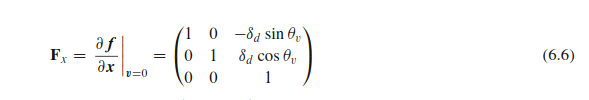

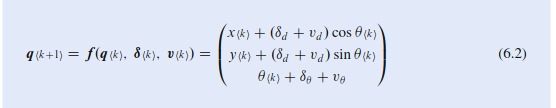

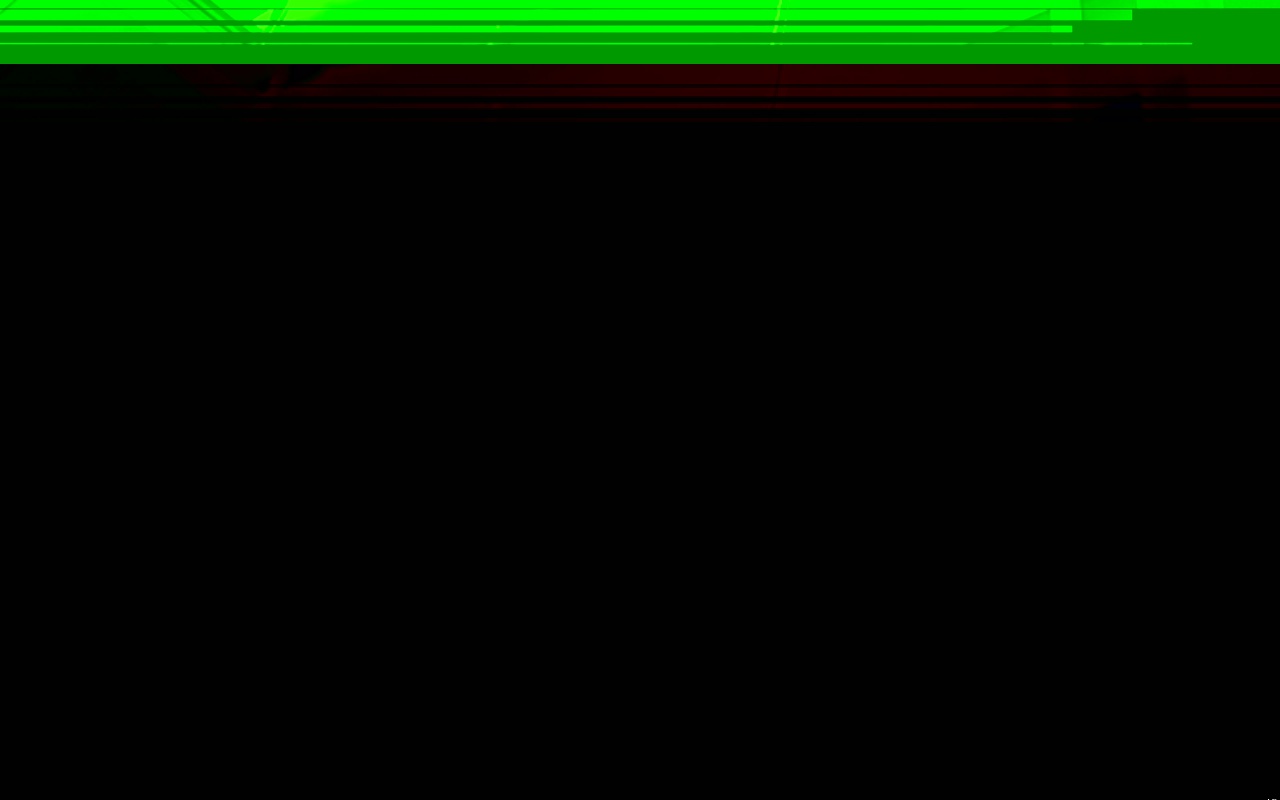

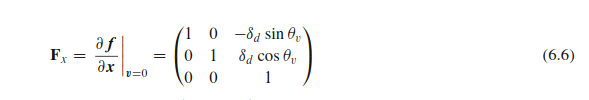

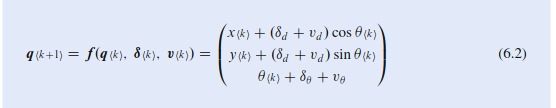

- Looked into the code of the robot, which can be found at ~/.local/lib/python3.8/site-packages/roboticstoolbox/mobile/Vehicle.py. Equations (6.6) and (6.7) indicate that theta_d is used, but that is the angle after the movement. In the calculation theta, the current angle, is actually used.

- Not sure if this is a bug or a feature. It seems to be a feature, because it is the derivative of equation 6.2. Yet, it is not clear which theta is used in equation 6.2. Book is wrong, comments are misleading, code is good.
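
- To convince myself, a stand-alone sketch. It assumes that equation 6.2 is the discrete odometry update x + d cos(theta), y + d sin(theta), theta + dtheta; it is not the toolbox code and the function names are mine. The Jacobians are taken at the current theta, before the movement:

```python
import math


def step(x, y, theta, d, dtheta):
    """Assumed form of eq. 6.2: move distance d along the current heading."""
    return (x + d * math.cos(theta), y + d * math.sin(theta), theta + dtheta)


def jacobian_state(theta, d):
    """Derivative of step() w.r.t. (x, y, theta), at the current theta."""
    return [[1.0, 0.0, -d * math.sin(theta)],
            [0.0, 1.0, d * math.cos(theta)],
            [0.0, 0.0, 1.0]]


def jacobian_odometry(theta):
    """Derivative of step() w.r.t. the odometry (d, dtheta)."""
    return [[math.cos(theta), 0.0],
            [math.sin(theta), 0.0],
            [0.0, 1.0]]


# Finite-difference check of the theta column of the state Jacobian.
theta, d, dtheta, eps = 0.7, 0.2, 0.1, 1e-6
plus = step(0.0, 0.0, theta + eps, d, dtheta)
minus = step(0.0, 0.0, theta - eps, d, dtheta)
numeric = [(p - m) / (2 * eps) for p, m in zip(plus, minus)]
analytic = [row[2] for row in jacobian_state(theta, d)]
print(numeric, analytic)
```

The numeric and analytic columns agree, so under this assumption using the current theta is indeed the derivative of the update.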

- Also looked into the notebook of Chapter 14. From Fig. 14.7 the point P and epipole are drawn, but not the epipolar line. That line is only drawn at the end of the next section (page 598).

-

- Should do a Boot-repair on Barend's old laptop.

November 22, 2024

- Shaodi mentioned SfM with 7-points this week, Davide works with the 8-point and 5-point algorithms.

- This paper makes a comparison between all three algorithms.

- With the 7-point algorithm, there can be either one or three real solutions.

- For more details on the 5-point algorithm, they point to Nister 2004.
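
- Why one or three: with 7 correspondences the fundamental matrix is only known up to a two-dimensional null space, F = alpha F1 + (1 - alpha) F2, and the constraint det(F) = 0 is a cubic in alpha. A real cubic has either one or three real roots, which the discriminant decides. A small sketch (the function name is mine, coefficients are assumed real with a != 0):

```python
def real_root_count(a, b, c, d):
    """Real roots (with multiplicity) of a*x^3 + b*x^2 + c*x + d, a != 0."""
    if a == 0:
        raise ValueError("not a cubic: leading coefficient is zero")
    # Discriminant of the cubic.
    disc = (18 * a * b * c * d - 4 * b ** 3 * d + b ** 2 * c ** 2
            - 4 * a * c ** 3 - 27 * a ** 2 * d ** 2)
    # disc < 0: one real root and a complex-conjugate pair.
    # disc >= 0: all three roots are real (repeated when disc == 0).
    return 1 if disc < 0 else 3


print(real_root_count(1, 0, -1, 0))  # x^3 - x = x (x - 1) (x + 1)
print(real_root_count(1, 0, 1, 0))   # x^3 + x = x (x^2 + 1)
```

Each real root gives one candidate F, which is why the 7-point algorithm has to test up to three hypotheses.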

-

- Joey pointed me to a discussion on long term support for RAE, which pointed to the discussion on RVC3 (with RVC4 on the horizon in Q4 2024). According to this discussion a RAE based on RVC4 is only expected in Q4 2025.

- This is a list of demos which could be run directly on the device.

- Could look at this Harris detector based on PyTorch.

-

- Downloaded the Chapter 6 notebook on the Ubuntu partition of nb-dual, in ~/onderwijs/VAR. The code from page 211 (6.1.1 Modelling the robot) works, including an animation that was not in the book.

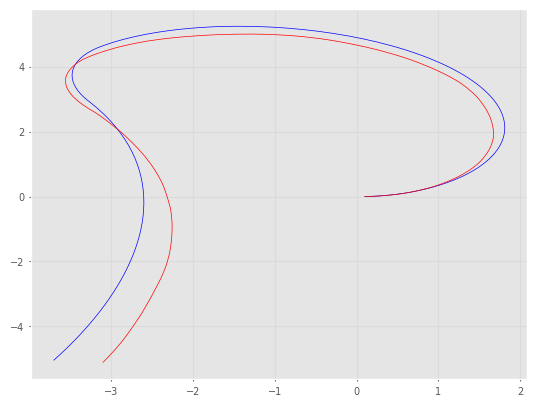

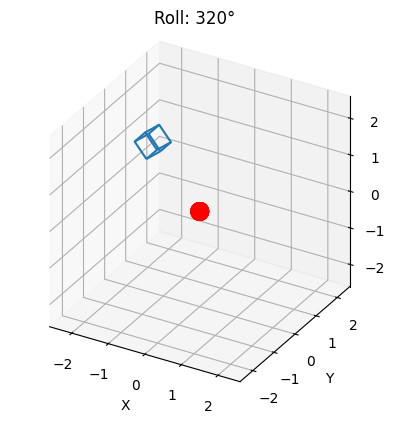

- The next section shows the EKF pose estimation, based on the motion updates (blue ground-truth, red ekf estimate):

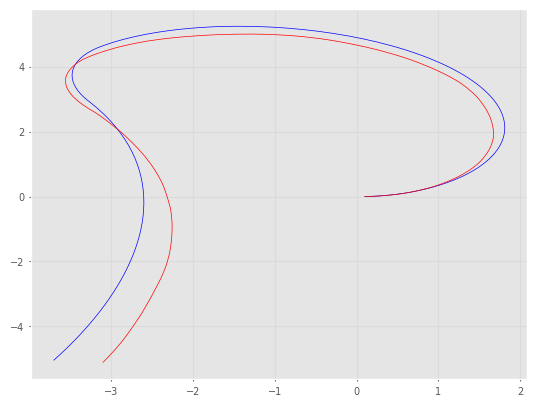

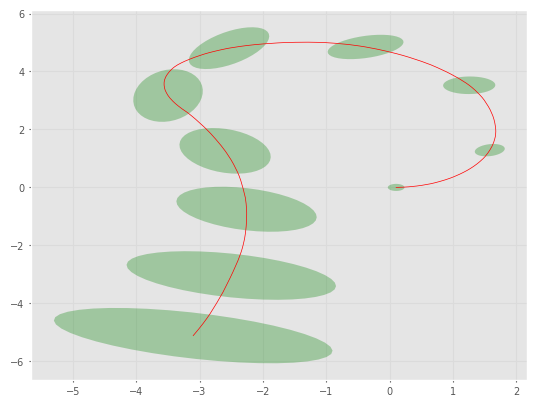

- There is also code to draw the confidence as ellipses, comparable with Fig. 6.5.
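
- How such an ellipse follows from the 2x2 position block of the covariance matrix, as a stand-alone sketch. This is not the toolbox's plotting code; the function name and the n_sigma parameter are mine, and the mapping from n_sigma to a confidence probability is left out:

```python
import math


def covariance_ellipse(pxx, pxy, pyy, n_sigma=1.0):
    """Semi-axes and orientation of the ellipse for [[pxx, pxy], [pxy, pyy]].

    Returns (major, minor, angle): the semi-axis lengths and the angle in
    radians of the major axis with respect to the x-axis.
    """
    mean = (pxx + pyy) / 2.0
    spread = math.hypot((pxx - pyy) / 2.0, pxy)
    lam_major = mean + spread              # largest eigenvalue
    lam_minor = max(mean - spread, 0.0)    # clamp tiny negative round-off
    angle = 0.5 * math.atan2(2.0 * pxy, pxx - pyy)
    return (n_sigma * math.sqrt(lam_major),
            n_sigma * math.sqrt(lam_minor),
            angle)


# Uncorrelated example: variance 4 in x and 1 in y.
major, minor, angle = covariance_ellipse(4.0, 0.0, 1.0)
print(major, minor, angle)  # 2.0 1.0 0.0
```

With equal variances and a positive correlation the major axis turns to 45 degrees, for instance for covariance_ellipse(2.0, 1.0, 2.0).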