Labbook Dutch Nao Team

Error Reports

- The head of Princess Beep Boop (Nao4) crashed twice when an ethernet cable was plugged in, but this problem disappeared after flashing. Seems OK 13 December 2016.

- Julia has a weak right shoulder (December 2016).

- Bleu's hips are squeaking. Seems OK September 2015.

- Bleu sound falls away. Loose connector? September 2015.

- Naos overheat faster when using their arms than when using their legs.

- Blue's skull is broken at the bottom: its head should not be removed any more.

- Blue has a connector at the front which is no longer glued in position. Couldn't find where the connector should go.

Started Labbook 2020.


December 23, 2019

- Looked at the Nao administration for a good robot to borrow for TechnoLab Leiden.

- Carlos (Nao5) performed well (so well that I removed the "Left Leg stuck" note from its head). Let it stand up and sit down, and ran the Thinking loops (both sitting and standing). No problems.

- DownyJr (Nao2) didn't power up its lower body.

- I tried the unmarked robot with the Nao3 and the Nao2 head; in both cases the robot shut down once booted. Maybe I should try again after giving it more time to charge.

June 20, 2019

- Downloading OpenNao OS VirtualBox image 2.1.2 from Aldebaran resources

- Still had a vm-2.1.0.19 image in my VirtualBox under Linux, so I cloned that image and tried to attach the downloaded image to the clone.

June 19, 2019

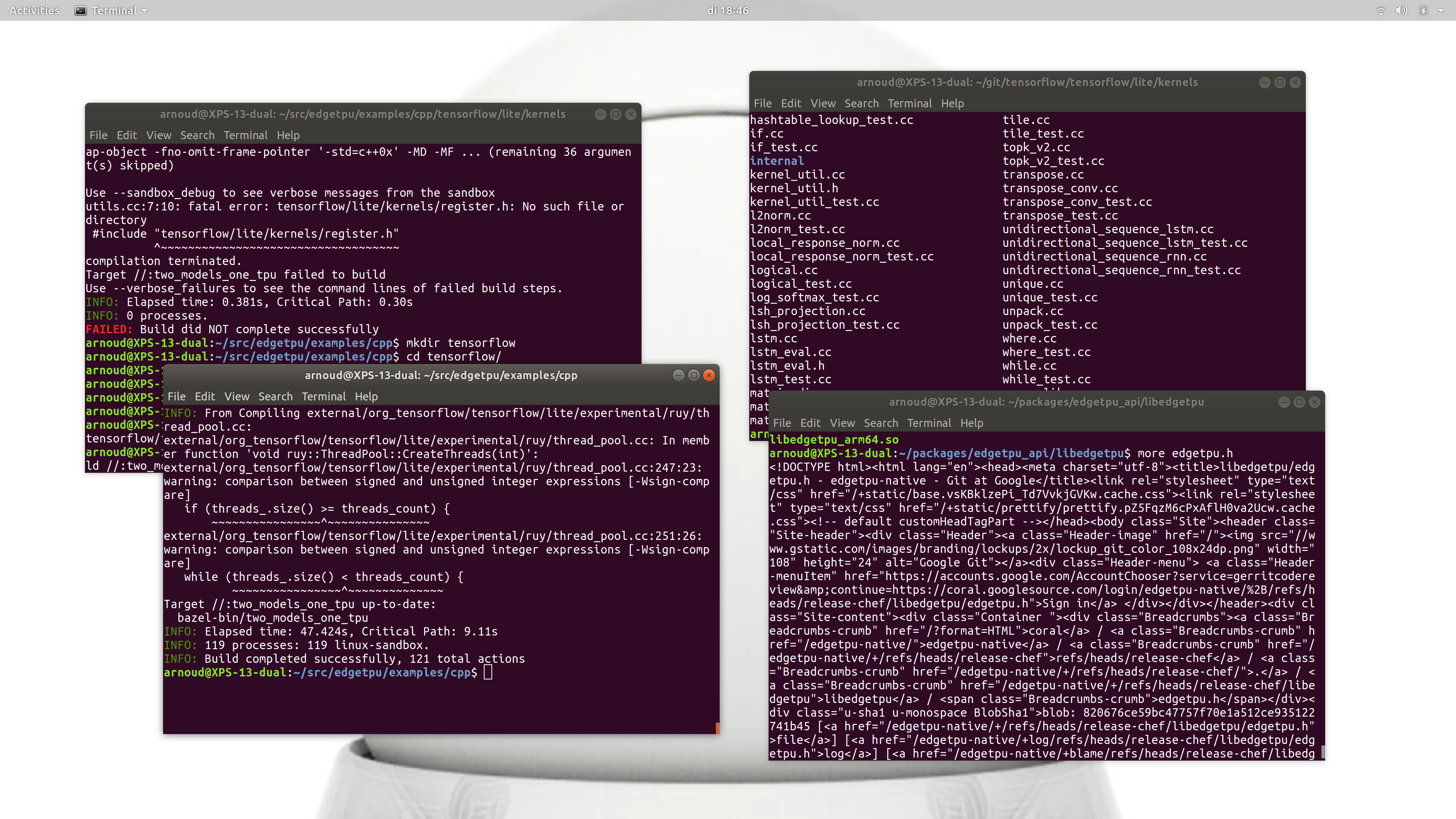

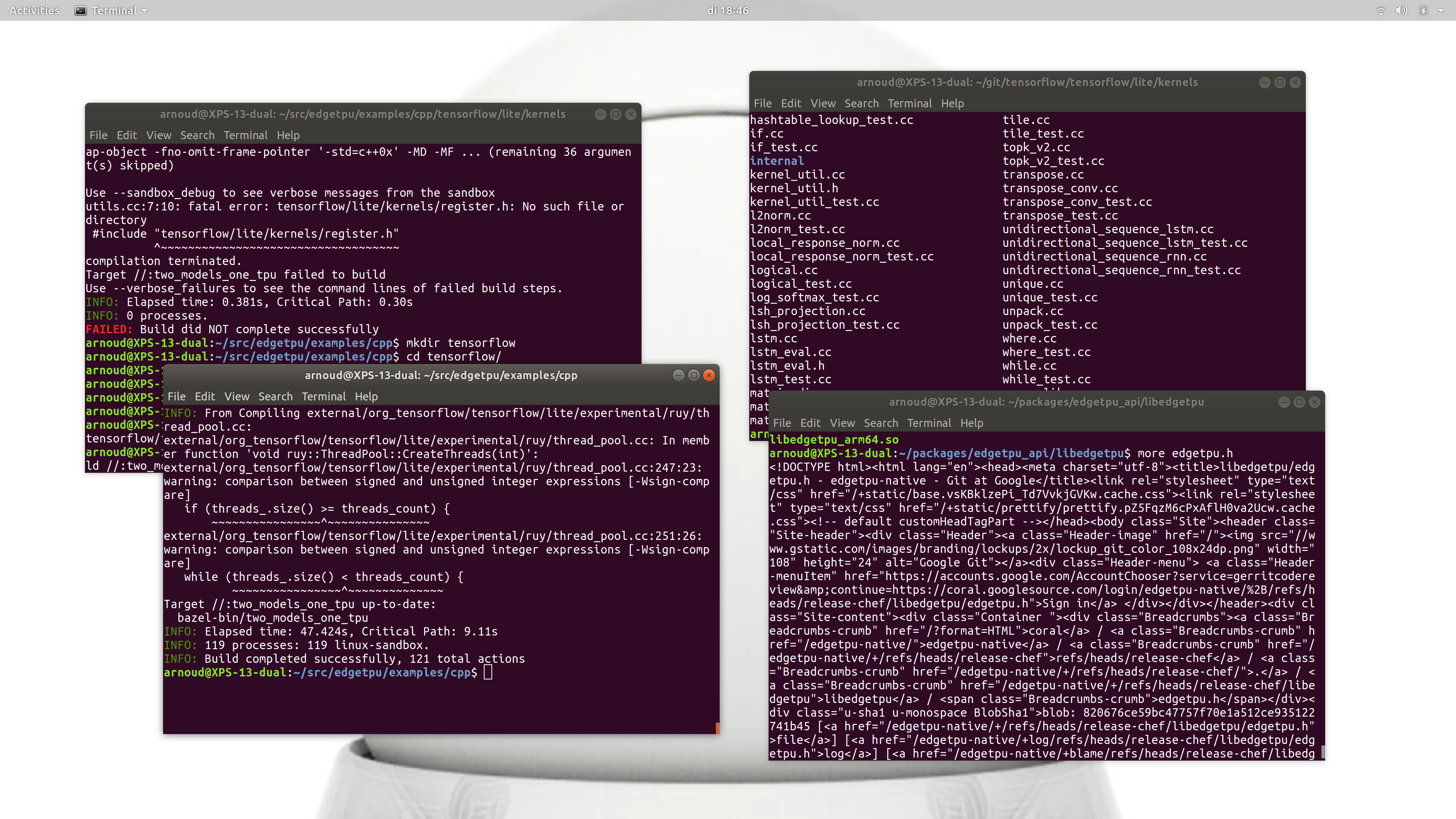

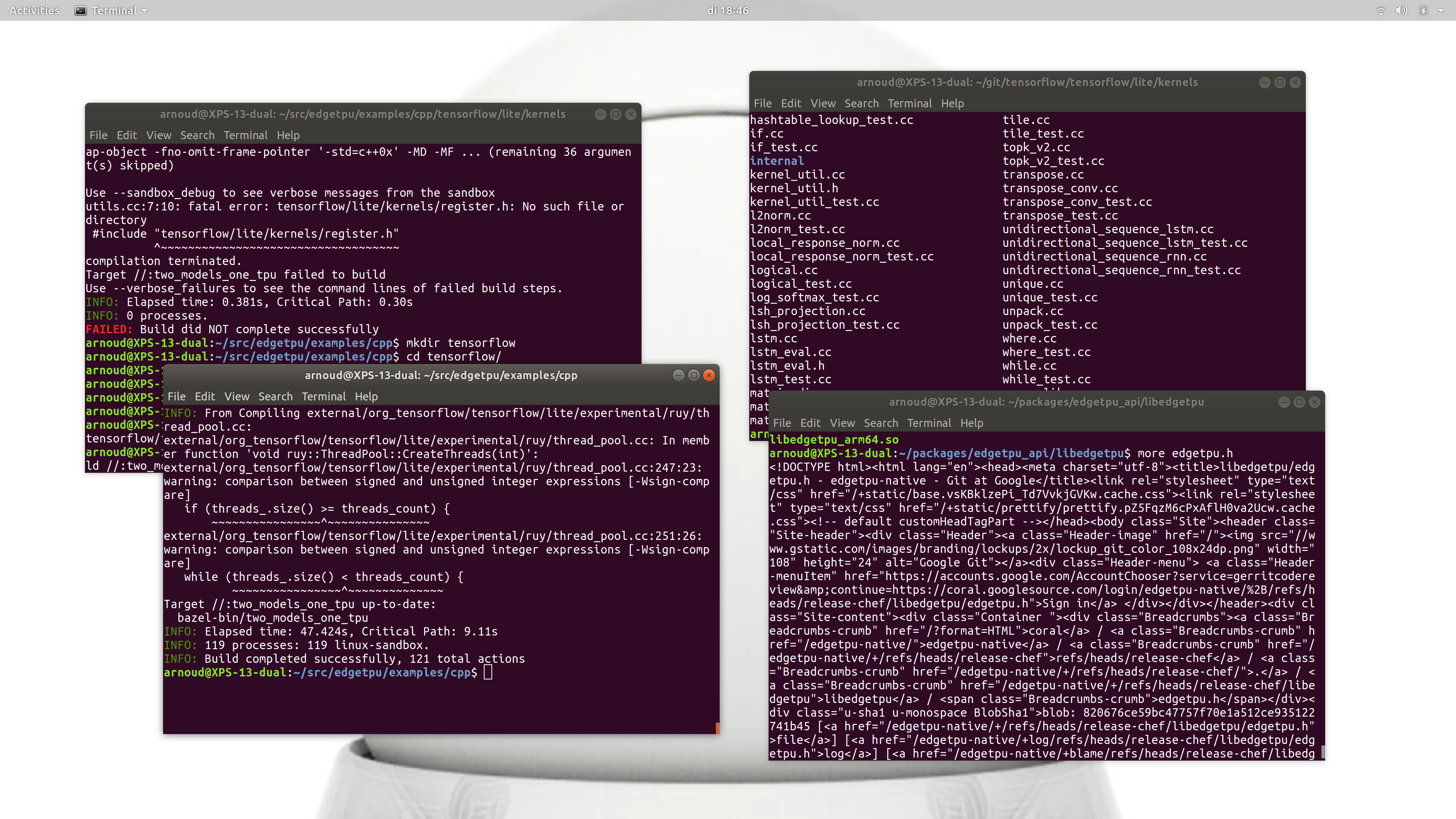

- Continue with running the edge TPU C++ example.

- Executing the command bazel-bin/two_models_one_tpu --help gave the output:

Running model: /tmp/edgetpu_cpp_example/inat_bird_edgetpu.tflite and model: /tmp/edgetpu_cpp_example/inat_plant_edgetpu.tflite for 2000 inferences

ERROR: Could not open '/tmp/edgetpu_cpp_example/inat_bird_edgetpu.tflite'.

Fail to build FlatBufferModel from file: /tmp/edgetpu_cpp_example/inat_bird_edgetpu.tflite

Aborted (core dumped)

- The usage is nicely documented in two_models_one_tpu.cc:

// Example to run two models alternatively using one Edge TPU.

// It depends only on tflite and edgetpu.h

//

// Example usage:

// 1. Create directory /tmp/edgetpu_cpp_example

// 2. wget -O /tmp/edgetpu_cpp_example/inat_bird_edgetpu.tflite \

// http://storage.googleapis.com/cloud-iot-edge-pretrained-models/canned_models/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite

// 3. wget -O /tmp/edgetpu_cpp_example/inat_plant_edgetpu.tflite \

// http://storage.googleapis.com/cloud-iot-edge-pretrained-models/canned_models/mobilenet_v2_1.0_224_inat_plant_quant_edgetpu.tflite

// 4. wget -O /tmp/edgetpu_cpp_example/bird.jpg \

// https://farm3.staticflickr.com/4003/4510152748_b43c1da3e6_o.jpg

// 5. wget -O /tmp/edgetpu_cpp_example/plant.jpg \

// https://c2.staticflickr.com/1/62/184682050_db90d84573_o.jpg

// 6. cd /tmp/edgetpu_cpp_example && mogrify -format bmp *.jpg

// 7. Build and run `two_models_one_tpu`

//

// To reduce variation between different runs, one can disable CPU scaling with

// sudo cpupower frequency-set --governor performance

- Now it runs:

Running model: /tmp/edgetpu_cpp_example/inat_bird_edgetpu.tflite and model: /tmp/edgetpu_cpp_example/inat_plant_edgetpu.tflite for 2000 inferences

INFO: Initialized TensorFlow Lite runtime.

W third_party/darwinn/driver/package_registry.cc:65] Minimum runtime version required by package (5) is lower than expected (10).

W third_party/darwinn/driver/package_registry.cc:65] Minimum runtime version required by package (5) is lower than expected (10).

[Bird image analysis] max value index: 753 value: 0.898438

[Plant image analysis] max value index: 1680 value: 0.972656

Using one Edge TPU, # inferences: 2000 costs: 19.9468 seconds.
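To put this timing in perspective, a small Python sketch (inference_stats is a hypothetical helper, not part of the edgetpu examples) that converts the reported total into a per-inference figure. It assumes the 2000 counts the inferences of both models together; since the example alternates between the bird and the plant model, the figure also includes any model-switching overhead.

```python
from typing import Tuple


def inference_stats(num_inferences: int, total_seconds: float) -> Tuple[float, float]:
    """Hypothetical helper: return (milliseconds per inference, inferences per second)."""
    if num_inferences <= 0 or total_seconds <= 0:
        raise ValueError("both arguments must be positive")
    ms_per_inference = 1000.0 * total_seconds / num_inferences
    inferences_per_second = num_inferences / total_seconds
    return ms_per_inference, inferences_per_second


if __name__ == "__main__":
    # Numbers taken from the two_models_one_tpu run above.
    ms, rate = inference_stats(2000, 19.9468)
    print(f"{ms:.2f} ms per inference, {rate:.1f} inferences per second")
```

With the numbers above this prints about 9.97 ms per inference, or roughly 100 inferences per second.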

- Without the TPU I get the following output:

Running model: /tmp/edgetpu_cpp_example/inat_bird_edgetpu.tflite and model: /tmp/edgetpu_cpp_example/inat_plant_edgetpu.tflite for 2000 inferences

INFO: Initialized TensorFlow Lite runtime.

ERROR: Failed to retrieve TPU context.

ERROR: Node number 0 (edgetpu-custom-op) failed to prepare.

- Could also build two_models_two_tpus_threaded, but running ./bazel-bin/two_models_two_tpus_threaded immediately gave:

Segmentation fault (core dumped)

- This is a known issue when you link against libc++ instead of libstdc++. In fact, the executable is linked against both libc++ and libstdc++.

- Tried to compile the small test.cc with clang, but the command clang -std=c++0x -stdlib=libc++ -ledgetpu -L/home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/git/tensorflow -lm -lc++abi -lrt -ludev -lgcc_s -lresolv -lpthread -lc test.cc fails with:

/usr/bin/ld: /lib/x86_64-linux-gnu/libedgetpu.so: undefined reference to symbol '_ZNSt3__18ios_base5clearEj'

/usr/lib/x86_64-linux-gnu//libc++.so.1: error adding symbols: DSO missing from command line

- Running g++ -std=c++0x test.cc -ledgetpu -L/home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/git/tensorflow -lm -lc++abi -lrt -ludev -lgcc_s -lresolv -lpthread -lc compiles, but the resulting a.out depends on both libc++ and libstdc++ and crashes.
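Whether an executable pulls in both C++ runtimes can be checked with ldd. A minimal Python sketch (cxx_runtimes and check_binary are hypothetical helpers) that scans ldd output for libstdc++ (GCC's runtime) and libc++ (LLVM's runtime); libc++abi is deliberately not counted, since it is only the ABI layer underneath libc++.

```python
import subprocess
from typing import Set


def cxx_runtimes(ldd_output: str) -> Set[str]:
    """Hypothetical helper: which C++ standard libraries appear in ldd output."""
    found: Set[str] = set()
    for line in ldd_output.splitlines():
        fields = line.split()
        if not fields:
            continue
        soname = fields[0]  # e.g. 'libstdc++.so.6' or 'libc++.so.1'
        if soname.startswith("libstdc++.so"):
            found.add("libstdc++")
        elif soname.startswith("libc++.so"):  # does not match 'libc++abi.so'
            found.add("libc++")
    return found


def check_binary(path: str) -> Set[str]:
    """Hypothetical helper: run ldd on `path` (Linux only) and report the C++ runtimes."""
    result = subprocess.run(["ldd", path], capture_output=True, text=True, check=True)
    return cxx_runtimes(result.stdout)
```

For example, check_binary("./a.out") on the g++ build above would be expected to report both names, while the clang++ build should report only libc++.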

- When I use clang++ -std=gnu++11 -stdlib=libc++ test.cc -ledgetpu -L/home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/git/tensorflow -lm -lc++abi -lrt -ludev -lgcc_s -lresolv -lpthread -lc it works (-stdlib is a clang++ option rather than a g++ one, and unlike plain clang, the clang++ driver also links libc++ itself):

0.11.1(@229432882 manually build)

- The executable made this way is not linked to libstdc++. If I extend the code to

#include <iostream>

#include "edgetpu.h"

int main(void) {

auto v = edgetpu::EdgeTpuManager::GetSingleton()->Version();

std::cout << v << std::endl;

const auto& available_tpus =

edgetpu::EdgeTpuManager::GetSingleton()->EnumerateEdgeTpu();

std::cout << "Number of Edge TPUs found: " << available_tpus.size() << std::endl;

if (available_tpus.size() < 2) {

std::cerr << "This example requires two Edge TPUs to run." << std::endl;

return 0;

}

}

I get:

Number of Edge TPUs found: 1

This example requires two Edge TPUs to run.

- Running g++ -nostdlib -lc++ doesn't solve this issue (many undefined references).

- Running clang++ -std=gnu++11 -stdlib=libc++ test.cc -ledgetpu -L/home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/packages/edgetpu_api/libedgetpu -I /home/arnoud/git/tensorflow also works.

- Tried to make a Makefile with those compiler commands, but two_models_one_tpu fails on missing libraries. One example is the third_party package flatbuffers, which I downloaded and compiled with bazel build //:flatbuffers and //:flatc_library, but this didn't help.

June 18, 2019

- bazel build //:two_models_one_tpu still fails on: The repository '@local_config_tensorrt' could not be resolved.

- This '@local_config_tensorrt' is referenced in the file tensorflow.bzl in ~/.cache/bazel. There is a directory compiler/tf2tensorrt/, but I don't see a .bzl configuration file there. There is also a directory contrib/tensorrt, whose BUILD file also tries to load @local_config_tensorrt.

- The @ indicates an external repository declared in a WORKSPACE file. The file tensorrt_configure.bzl can be found in ~/git/tensorflow/third_party/tensorrt/, but adding load("//org_tensorflow/third_party/tensorrt:tensorrt_configure.bzl","tensorrt_configure") to the WORKSPACE of the cpp-examples doesn't work (it crosses a package boundary).
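Bazel labels have the form @repository//package:target, and a label without @ refers to the main repository (the one containing the WORKSPACE). A small Python sketch (parse_label is a hypothetical helper that only handles absolute labels) makes the parts explicit. Applied to the load statement above, it shows that //org_tensorflow/third_party/tensorrt names a package org_tensorflow/third_party/tensorrt inside the main repository, not the external repository @org_tensorflow.

```python
from typing import NamedTuple


class Label(NamedTuple):
    repo: str     # '' means the main repository (the one with the WORKSPACE)
    package: str  # path after '//', '' for the root package
    target: str


def parse_label(label: str) -> Label:
    """Hypothetical helper: split an absolute label like '@repo//pkg/path:target'.

    Sketch only: relative labels (':target') are not handled.
    """
    repo, rest = "", label
    if rest.startswith("@"):
        repo, sep, tail = rest[1:].partition("//")
        if not sep:
            raise ValueError(f"no '//' in {label!r}")
        rest = "//" + tail
    if not rest.startswith("//"):
        raise ValueError(f"not an absolute label: {label!r}")
    package, sep, target = rest[2:].partition(":")
    if not sep:
        # '//a/b' is short for '//a/b:b'
        target = package.rsplit("/", 1)[-1]
    return Label(repo, package, target)


if __name__ == "__main__":
    for text in ("@local_config_tensorrt//:build_defs.bzl",
                 "@org_tensorflow//tensorflow/lite:framework",
                 "//org_tensorflow/third_party/tensorrt:tensorrt_configure.bzl"):
        print(text, "->", parse_label(text))
```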

- Solved this (temporarily) by adding the correct line load("//org_tensorflow/third_party/tensorrt:tensorrt_configure.bzl","tensorrt_configure") to the WORKSPACE. The first time I ran the build I got a request to load third_party/gpus:rocm_configure.bzl, but then the tensorrt error came back.

- Found a good WORKSPACE on GitHub. The trick was to load tensorflow/workspace.bzl.

- This partly works, although filegroup_external is not part of my version of closure_rules, so I should try the latest version.

- This fails on closure/private/defs.bzl: the FileType function is not available. Setting the flag --incompatible_disallow_filetype (help text: default: "false") makes no difference, which is logical, because this flag is already false by default.

- The problem is still the version of closure_rules. The WORKSPACE of ~/git/tensorflow loads another version (an archive from 2019-04-04 instead of the 0.7.0.tar.gz version). The branch tags show that v0.8.0 is also available.

- My build now fails on libedgetpu/BUILD.bazel (srcs attribute of the cc_library rule).

- Changed my libedgetpu.BUILD. The @libedgetpu//:lib dependency is now resolved with a cc_import that has libedgetpu_x86_64.so as its shared_library. This seems to work; the build only fails on protobuf's dependency on @bazel_skylib.

- Adding the skylib http_archive to the WORKSPACE solves this issue.

- The compilation could not find some of the lite/kernel includes, but that was because I had commented them out in the local BUILD.

- I had downloaded the HTML page of edgetpu.h instead of the raw file.

- Finally, a working build:

June 13, 2019

- Continue with building tensorflow lite. Changed PYTHONPATH from 2.7/lib-dynload to 3.

- When I do import tensorflow from the interpreter (python3), tensorflow is loaded without problems.

- Also installed the TensorFlow pip dependencies as suggested in build from source tutorial:

pip3 install -U --user keras_applications==1.0.6 --no-deps

pip3 install -U --user keras_preprocessing==1.0.5 --no-deps

Installing the first package's dependencies didn't work; installing them one by one did.
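To verify afterwards that the pinned versions were actually installed for python3, a short sketch using the standard-library importlib.metadata (Python 3.8 or later). check_pins is a hypothetical helper, and the package names are looked up as written in the pip commands above.

```python
from importlib import metadata  # standard library, Python 3.8+
from typing import Dict, Optional


def check_pins(pins: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Hypothetical helper: map each pinned package to its installed version, or None."""
    installed: Dict[str, Optional[str]] = {}
    for name in pins:
        try:
            installed[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed[name] = None
    return installed


if __name__ == "__main__":
    pins = {"keras_applications": "1.0.6", "keras_preprocessing": "1.0.5"}
    for name, have in check_pins(pins).items():
        status = "ok" if have == pins[name] else "MISMATCH"
        print(f"{name}: wanted {pins[name]}, installed {have} -> {status}")
```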

- The executable bazel can be found, although it is a script in my home directory that points to the real executable. Calling bazel help gave:

Starting local Bazel server and connecting to it...

[bazel release 0.25.2]

June 12, 2019

- Looked at CMakeCache.txt and CMakeOutput.log in modules/objdetect, but these only contain CFLAGS; I see no targets and cannot find any generated Makefiles. It seems that make has to be run from the top-level build directory.

- Checking objdetect in the OpenCV tutorial. The example code is there, but no build instructions on the github.

- Started with the tutorials at the beginning (section 1.1). Checked whether I also did sudo make install. The examples are now installed in /usr/local/share/opencv4/samples, the includes in /usr/local/include, the libraries in /usr/local/lib and the executables in /usr/local/bin (five files, including opencv_version).

- Created a CMakeLists.txt and created a DisplayImage executable (in ~/src/opencv/tutorials/cmake). Called it with ./DisplayImage /usr/local/share/opencv4/samples/data/lena.jpg.

- Note that on page 118 there is also a section on cross-compilation for ARM based Linux systems.

- Copied the CMakeLists.txt to opencv/tutorials/object_detection and made objectDetection. Called the executable with ~/src/opencv/tutorials/object_detection$ ./objectDetection --eyes_cascade=/usr/local/share/opencv4/haarcascades/haarcascade_eye_tree_eyeglasses.xml --face_cascade=/usr/local/share/opencv4/haarcascades/haarcascade_frontalface_default.xml, which used my webcam for face detection:

This program demonstrates using the cv::CascadeClassifier class to detect objects (Face + eyes) in a video stream.

You can use Haar or LBP features.

Usage: objectDetection [params]

--camera (value:0)

Camera device number.

--eyes_cascade (value:/usr/local/share/opencv4/haarcascades/haarcascade_eye_tree_eyeglasses.xml)

Path to eyes cascade.

--face_cascade (value:/usr/local/share/opencv4/haarcascades/haarcascade_frontalface_default.xml)

Path to face cascade.

-h, --help

[ INFO:0] global ~/git/opencv/modules/videoio/src/videoio_registry.cpp (187) VideoBackendRegistry VIDEOIO: Enabled backends(7, sorted by priority): FFMPEG(1000); GSTREAMER(990); INTEL_MFX(980); V4L2(970); CV_IMAGES(960); CV_MJPEG(950); FIREWIRE(940)

[ INFO:0] global ~/git/opencv/modules/videoio/src/backend_plugin.cpp (248) getPluginCandidates VideoIO pluigin (GSTREAMER): glob is 'libopencv_videoio_gstreamer*.so', 1 location(s)

[ INFO:0] global ~/git/opencv/modules/videoio/src/backend_plugin.cpp (256) getPluginCandidates - /usr/local/lib: 0

[ INFO:0] global ~/git/opencv/modules/videoio/src/backend_plugin.cpp (259) getPluginCandidates Found 0 plugin(s) for GSTREAMER

[ INFO:0] global ~/git/opencv/modules/core/src/ocl.cpp (888) haveOpenCL Initialize OpenCL runtime...

- I also have 7 executables in /usr/bin, installed as binaries on September 20, 2018. The two extra ones are precisely the two I need: opencv_traincascade and opencv_createsamples. The source code of those executables can be found in the apps directory (6 apps).

- The two apps that I need are not built, because in apps/CMakeLists.txt those two are commented out!

- Train cascade is hard to build (added an extra #include, but this version seems no longer compatible with the current OpenCV version: old_ml_precomp.hpp:327:1: error: ‘CvFileNode’ does not name a type; did you mean ‘CvDTreeNode’?). But createsamples is built and seems to work.

- Copied the ubbo_opencv-haar directory and ran the command

perl bin/createsamples.pl positives.txt negatives.txt samples 1500 \

"opencv_createsamples -bgcolor 0 -bgthresh 0 -maxxangle 1.1 \

-maxyangle 1.1 maxzangle 0.5 -maxidev 40 -w 80 -h 40"

This seems to work; I received the output:

opencv_createsamples -bgcolor 0 -bgthresh 0 -maxxangle 1.1 -maxyangle 1.1 maxzangle 0.5 -maxidev 40 -w 80 -h 40 -img ./positive_images/frame_108.jpg -bg tmp -vec samples/frame_108.jpg.vec -num 39

Info file name: (NULL)

Img file name: ./positive_images/frame_108.jpg

Vec file name: samples/frame_108.jpg.vec

BG file name: tmp

Num: 39

BG color: 0

BG threshold: 0

Invert: FALSE

Max intensity deviation: 40

Max x angle: 1.1

Max y angle: 1.1

Max z angle: 0.5

Show samples: FALSE

Width: 80

Height: 40

Max Scale: -1

RNG Seed: 12345

Create training samples from single image applying distortions...

Open background image: ./negative_images/frame_88.png

Done

As a result, a number of new files have been created in the samples directory (e.g. samples/frame_108.jpg.vec).
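createsamples.pl calls opencv_createsamples once per positive image, which is why the log shows -num 39 for frame_108.jpg instead of 1500. A Python sketch of one way to spread the total over the positives (floor plus remainder); this is an assumption about the script's logic, split_samples is a hypothetical helper, and the count of 38 positive images is hypothetical. Note also that maxzangle 0.5 in the command lacks its leading dash, so the reported Max z angle: 0.5 is probably just the tool's default.

```python
from typing import List


def split_samples(total: int, num_images: int) -> List[int]:
    """Hypothetical helper: spread `total` samples over `num_images` positives.

    The first `total % num_images` images get one extra sample.
    """
    if num_images <= 0:
        raise ValueError("need at least one positive image")
    base, extra = divmod(total, num_images)
    return [base + 1 if i < extra else base for i in range(num_images)]


if __name__ == "__main__":
    counts = split_samples(1500, 38)  # 38 positives is a hypothetical count
    print(counts[:3], "...", counts[-3:], "sum =", sum(counts))
```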

June 7, 2019

- Trying to help Jeroen with installing opencv from source. Gave him the tip.

- Running cmake directly from objdetect fails on missing "ocv_define_module".

- Running cmake from the git/opencv/build fails on missing "ocv_glob_modules". This can be solved by also cloning opencv_contrib with command git clone https://github.com/opencv/opencv_contrib.git followed by a git checkout 3.4.

- The command cmake -D CMAKE_BUILD_TYPE=RELEASE \

-D CMAKE_INSTALL_PREFIX=/usr/local \

-D INSTALL_C_EXAMPLES=ON \

-D INSTALL_PYTHON_EXAMPLES=ON \

-D WITH_TBB=ON \

-D WITH_V4L=ON \

-D WITH_QT=ON \

-D WITH_OPENGL=ON \

-D OPENCV_EXTRA_MODULES_PATH=../opencv_contrib/modules \

-D BUILD_EXAMPLES=ON ..

now works (although there are some warnings of packages that are not found).

- Compilation with make -j8 starts fine. After 13% I receive an error about legacy API declarations in imgcodecs_c.h. Did a fresh git pull, but this error remains.

- Edited opencv_contrib/modules/xobjdetect/src/precomp.hpp and replaced imgcodecs_c.h by legacy/constants_c.h. Now the compilation continues (at least beyond 25%).

- After 43% the same error in opencv_contrib/modules/reg/samples/map_test.cpp

- Also after 43%, a new error in opencv_contrib/modules/xfeatures2d/src/fast.cpp: a conversion from int to cv::FastFeatureDetector::DetectorType. Put an explicit cast (cv::FastFeatureDetector::DetectorType)type in the call to FAST() on line 462 of fast.cpp (DetectorType is an enum of three types, with integer values 0, 1 and 2). Compiling continues beyond 80%.

- The next error (at 80%) is that CV_NODE_SEQ is not declared in this scope. Solved this with a few extra lines in sparse_matching_gpc.cpp:

#ifndef CV_NODE_SEQ

#define CV_NODE_SEQ 5

#endif

#ifndef CV_NODE_FLOW

#define CV_NODE_FLOW 8

#endif
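The two constants come from OpenCV's legacy C persistence API, where the low three bits of a node's flags hold its type and CV_NODE_FLOW is a separate bit that requests flow (inline) style when writing YAML. A small Python sketch of how the values combine; CV_NODE_SEQ and CV_NODE_FLOW are copied from the defines above, CV_NODE_MAP = 6 and CV_NODE_TYPE_MASK = 7 from the same legacy header, and describe is a hypothetical helper.

```python
# Values from OpenCV's legacy C API (types_c.h).
CV_NODE_SEQ = 5
CV_NODE_MAP = 6
CV_NODE_TYPE_MASK = 7
CV_NODE_FLOW = 8


def describe(flags: int) -> str:
    """Hypothetical helper: decode the node type and the flow bit from `flags`."""
    kinds = {CV_NODE_SEQ: "seq", CV_NODE_MAP: "map"}
    kind = kinds.get(flags & CV_NODE_TYPE_MASK, "other")
    style = "flow" if flags & CV_NODE_FLOW else "block"
    return f"{kind} ({style} style)"


if __name__ == "__main__":
    print(describe(CV_NODE_SEQ + CV_NODE_FLOW))
    print(describe(CV_NODE_MAP))
```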

- In waldboost_detector.cpp the function imread is called with the constant CV_LOAD_IMAGE_GRAYSCALE, which can be corrected with the constant defined for OpenCV 3.1 and higher: cv::ImreadModes::IMREAD_GRAYSCALE. waldboost_detector.cpp also includes imgcodecs_c.h.

- The next error is an ambiguous call in modules/text/samples/webcam_demo.cpp and segmented_word_recognition.cpp. Adding an extra argument 0 to the call (the default component_level) solves this.

- The next errors are a number of ambiguous calls in optflow/tests. The calls can be disambiguated by adding cv::optflow:: before DISTOpticalFlow (in each of the files). The same prefix has to be added before some of the types used in the templates.

- The next error is located in the python_bindings generator. It expects cv::ximgproc::SLIC to be a type, while it is defined in ximgproc/slic.hpp as an enum. That seems to be a serious error; time to try something different.

- Saved all my modifications in /tmp/opencv_contrib and switched with git checkout master. Only the changes to webcam_demo.cpp and segmented_word_recognition.cpp were restored. The build now finishes without problems; build/bin/opencv_version gives 4.1.0-dev. I don't see createsamples, though.

May 29, 2019

- Tried bazel build //:two_models_one_tpu again, but the repository '@local_config_tensorrt' still could not be resolved.

- Removed all //tensorflow dependencies from the BUILD. Now I receive the error message:

ERROR: /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/libedgetpu/BUILD.bazel:3:12: in srcs attribute of cc_library rule @libedgetpu//:lib: source file '@libedgetpu//:BUILD.bazel' is misplaced here (expected .cc, .cpp, .cxx, .c++, .C, .c, .h, .hh, .hpp, .ipp, .hxx, .inc, .inl, .H, .S, .s, .asm, .a, .lib, .pic.a, .lo, .lo.lib, .pic.lo, .so, .dylib, .dll, .o, .obj or .pic.o)

.
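This error most likely comes from srcs = glob(["**"]) in libedgetpu.BUILD (see the May 21 entry): the glob also matches the BUILD.bazel file of the libedgetpu repository itself, and that file has none of the accepted extensions. A Python sketch of the check, with the extension list copied from the error message; misplaced_sources is a hypothetical helper and the file list in the example is illustrative.

```python
from typing import Iterable, List

# Extensions accepted in cc_library srcs, copied from the Bazel error message above.
ALLOWED = (
    ".cc", ".cpp", ".cxx", ".c++", ".C", ".c", ".h", ".hh", ".hpp", ".ipp",
    ".hxx", ".inc", ".inl", ".H", ".S", ".s", ".asm", ".a", ".lib", ".pic.a",
    ".lo", ".lo.lib", ".pic.lo", ".so", ".dylib", ".dll", ".o", ".obj", ".pic.o",
)


def misplaced_sources(files: Iterable[str]) -> List[str]:
    """Hypothetical helper: files that cc_library would reject in srcs."""
    return [name for name in files if not name.endswith(ALLOWED)]


if __name__ == "__main__":
    # Illustrative contents of the libedgetpu repository directory.
    print(misplaced_sources(["edgetpu.h", "libedgetpu_x86_64.so", "BUILD.bazel"]))
```

Restricting the glob to the files that are actually needed (the .so and edgetpu.h) avoids this; the June 18 entry eventually switched to a cc_import.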

- Added only the @org_tensorflow//tensorflow/lite:framework dependency back and got the tensorrt error back:

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: error loading package '@org_tensorflow//tensorflow/lite': in /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/org_tensorflow/tensorflow/tensorflow.bzl: Unable to find package for @local_config_tensorrt//:build_defs.bzl: The repository '@local_config_tensorrt' could not be resolved. and referenced by '//:two_models_one_tpu'

- Strangely enough, the pip_package that was built is not found when I do pip3 install tensorflow_gpu. Checked tensorflow/tools/tensorflow_builder and ran PYTHONPATH=~/git/tensorflow python3 config_detector.py. Got the error message:

raise ImportError("Could not import tensorflow. Do not import tensorflow "

ImportError: Could not import tensorflow. Do not import tensorflow from its source directory; change directory to outside the TensorFlow source tree, and relaunch your Python interpreter from there.

May 21, 2019

- Continue with building the edge TPU C++ example.

- Yesterday's build of tensorflow from scratch completed without errors. Building the example with the command bazel build //:two_models_one_tpu now gave other error messages. Strangely, the target '@libedgetpu//:header' failure was back, and tensorflow now complains about a missing '@local_config_rocm' instead of a missing '@local_config_cuda':

Starting local Bazel server and connecting to it...

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: no such target '@libedgetpu//:header': target 'header' not declared in package '' defined by /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/libedgetpu/BUILD.bazel and referenced by '//:two_models_one_tpu'

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: error loading package '@org_tensorflow//tensorflow/lite': in /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/org_tensorflow/tensorflow/tensorflow.bzl: Unable to find package for @local_config_rocm//rocm:build_defs.bzl: The repository '@local_config_rocm' could not be resolved. and referenced by '//:two_models_one_tpu'

ERROR: Analysis of target '//:two_models_one_tpu' failed; build aborted: error loading package '@org_tensorflow//tensorflow/lite': in /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/org_tensorflow/tensorflow/tensorflow.bzl: Unable to find package for @local_config_rocm//rocm:build_defs.bzl: The repository '@local_config_rocm' could not be resolved.

INFO: Elapsed time: 2.316s

INFO: 0 processes.

FAILED: Build did NOT complete successfully (10 packages loaded, 28 targets configured)

currently loading: @org_tensorflow//tensorflow/lite
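When a build fails on several missing repositories at once, it helps to list them. A Python sketch (unresolved_repositories is a hypothetical helper) that extracts every repository name from Bazel's "could not be resolved" messages, for example from the saved output of bazel build //:two_models_one_tpu 2>&1.

```python
import re
from typing import List

# Matches e.g. "The repository '@local_config_rocm' could not be resolved"
_UNRESOLVED = re.compile(r"The repository '@([\w.\-]+)' could not be resolved")


def unresolved_repositories(log: str) -> List[str]:
    """Hypothetical helper: each unresolved repository once, in order of first appearance."""
    seen: List[str] = []
    for name in _UNRESOLVED.findall(log):
        if name not in seen:
            seen.append(name)
    return seen
```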

- Didn't read this error message well, so I started to recompile tensorflow with cuda support (first configure, followed by bazel build --config=opt --config=cuda //tensorflow/tools/pip_package:build_pip_package).

- Added the following lines to libedgetpu.BUILD:

cc_library(

name = "header",

srcs = glob(["**"]),

hdrs = ["edgetpu.h"],

visibility = ["//visibility:public"],

)

- After more than 6 hours of compilation, tensorflow has finished building the pip_package. The additional lines in libedgetpu.BUILD seem to work, although two_models_one_tpu still fails on a new target: 'local_config_tensorrt'.

- Tried to configure tensorflow differently, but CUDA and ROCm are mutually exclusive. TensorRT is one of the options in configure, but not an option for bazel build --config=tensorrt. Starting to build again with TensorRT configured.

May 21, 2019

- Hugh Mee experimented with the edge TPU C++ example. She got quite far, but failed at the bazel build //two_models_one_tpu step.

- Started with Getting Started (again), but now downloaded v1.9.2 (April 2019). The installer complained that it could not use the pip cache in my home directory, so I added -H to sudo (as suggested).

- Copied /usr/local/lib/python3.6/dist-packages/edgetpu/demo/ into ~/src/edgetpu/demo/.

- Was able to classify my first bird: python3 classify_image.py --model models/mobilenet_v2_1.0_224_inat_bird_quant_edgetpu.tflite --label labels/inat_bird_labels.txt --image images/parrot.jpg :

---------------------------

Ara macao (Scarlet Macaw)

Score : 0.76171875

No indication of how much processing time is gained by using the TPU, but the response was quite fast.

- Continued with the example. The BUILD is a bazel file, so I first had to install bazel. Tried to build the target with bazel build //:two_models_one_tpu, which failed on the missing repository @libedgetpu:

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: no such package '@libedgetpu//': The repository '@libedgetpu' could not be resolved and referenced by '//:two_models_one_tpu'

ERROR: Analysis of target '//:two_models_one_tpu' failed; build aborted: no such package '@libedgetpu//': The repository '@libedgetpu' could not be resolved

INFO: Elapsed time: 0.711s

INFO: 0 processes.

FAILED: Build did NOT complete successfully (8 packages loaded, 28 targets configured)

- So, BUILD points to libedgetpu, which is installed (in ~/packages/edgetpu_api/), but this directory only contains the *.so files, not a WORKSPACE. So I should create a libedgetpu/WORKSPACE in edgetpu/examples/cpp, following the instructions for dependencies on non-Bazel projects.

- Reading the instructions again, that was incorrect: I should add the following lines to the empty WORKSPACE in edgetpu/examples/cpp:

new_local_repository(

name = "libedgetpu",

path = "/home/arnoud/packages/edgetpu_api/libedgetpu",

build_file = "libedgetpu.BUILD",

)

and made libedgetpu.BUILD with the following content:

cc_library(

name = "lib",

srcs = glob(["**"]),

visibility = ["//visibility:public"],

)

The result was the next error message (but tensorflow has bazel WORKSPACES):

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: no such package '@org_tensorflow//tensorflow/lite': The repository '@org_tensorflow' could not be resolved and referenced by '//:two_models_one_tpu'

ERROR: Analysis of target '//:two_models_one_tpu' failed; build aborted: no such package '@org_tensorflow//tensorflow/lite': The repository '@org_tensorflow' could not be resolved

INFO: Elapsed time: 0.127s

INFO: 0 processes.

FAILED: Build did NOT complete successfully (1 packages loaded, 1 target configured)

Fetching @libedgetpu; Restarting.

- Tensorflow has WORKSPACES, but ~/git/tensorflow/tensorflow/lite has BUILD and build_def.bzl.

- Added the following line to edgetpu/examples/cpp/WORKSPACE:

local_repository(

name = "org_tensorflow",

path = "/home/arnoud/git/tensorflow",

)

- Again one step further: I am now missing the target 'header' and the repository '@local_config_cuda':

bazel build //:two_models_one_tpu

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: no such target '@libedgetpu//:header': target 'header' not declared in package '' defined by /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/libedgetpu/BUILD.bazel and referenced by '//:two_models_one_tpu'

ERROR: /home/arnoud/src/edgetpu/examples/cpp/BUILD:14:1: error loading package '@org_tensorflow//tensorflow/lite': in /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/org_tensorflow/tensorflow/tensorflow.bzl: Unable to find package for @local_config_cuda//cuda:build_defs.bzl: The repository '@local_config_cuda' could not be resolved. and referenced by '//:two_models_one_tpu'

ERROR: Analysis of target '//:two_models_one_tpu' failed; build aborted: error loading package '@org_tensorflow//tensorflow/lite': in /home/arnoud/.cache/bazel/_bazel_arnoud/08061ec84e41b9414c12235536eacf30/external/org_tensorflow/tensorflow/tensorflow.bzl: Unable to find package for @local_config_cuda//cuda:build_defs.bzl: The repository '@local_config_cuda' could not be resolved.

INFO: Elapsed time: 0.135s

INFO: 0 processes.

FAILED: Build did NOT complete successfully (3 packages loaded, 1 target configured)

currently loading: @org_tensorflow//tensorflow/lite

- Downloading edgetpu.h into ~/packages/edgetpu_api/libedgetpu solved the first missing target.

- Checked tensorflow GPU support and installed the stable version with sudo -H pip3 install tensorflow-gpu. That didn't help.

- Tried to run ./configure in ~/git/tensorflow, but cuda-8.0 didn't contain the default [cuDNN 7] (version of cudnn.h).

- Installed CUDA with apt following the instructions of tensorflow GPU support. Only had to update the last command to libnvinfer-dev=5.1.5-cuda10.1. During the installation the old package nvidia-driver-410 was replaced by version 418 of the driver.

- Still the same error message ('@local_config_cuda' could not be resolved). Tried it both with a configure with cuda and without cuda (all other choices default). After configuring without cuda, I used the instructions from build from source and executed bazel build --config=opt //tensorflow/tools/pip_package:build_pip_package. Now bazel is going to fetch several rules (hopefully also local_config_cuda).

May 9, 2019

- Zora was complaining that the system clock of Mio was incorrect. Logged in at the command line and checked the date with date and hwclock --show. Both showed Sep 2009, so maybe the battery of the hardware clock in the head is dead.

- Followed the instructions at garron.me and set the time as root with ntpdate 129.6.15.28 (to get the time from an internet server), date and hwclock --set --date="2019-05-09 12:25".

April 30, 2019

- Continue with training cascade tutorial.

- Made a screenshot and tried opencv_visualisation --image=img/ArnoudVisser2019.jpg --model=/usr/local/share/opencv4/haarcascades/haarcascade_eye_tree_eyeglasses.xml and opencv_visualisation --image=img/ArnoudVisser2019.jpg --model=/usr/local/share/opencv4/lbpcascades/lbpcascade_frontalface_improved.xml. LBP is much faster, but in both cases I just saw Window scanning in the upper corner (not my screenshot in the background and not the full image scanned). Should I use png instead of jpg, and is a smaller image required?

- The image of Angelina Jolie had the same preprocessing as the cascade classifier files: a 24x24 pixel image, grayscale conversion and histogram equalisation. The cascade tutorial also uses jpg images, so the difference is only the preprocessing.

- In the objectDetection example, the preprocessing is done with:

cvtColor( frame, frame_gray, COLOR_BGR2GRAY );

equalizeHist( frame_gray, frame_gray );

- Made a small preProcessImage.cpp program to do the preprocessing. Now the visualisation works (although too fast for lbp).
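The two preprocessing steps are simple enough to sketch in pure Python on a flat list of pixels, which shows what preProcessImage.cpp has to do. The gray weights are the ones OpenCV documents for COLOR_BGR2GRAY (Y = 0.299 R + 0.587 G + 0.114 B), and the equalisation uses the usual cumulative-histogram mapping; OpenCV itself uses fixed-point arithmetic, so individual values may differ by one. The 24x24 resize is left out, and both function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def bgr_to_gray(pixels: Sequence[Tuple[int, int, int]]) -> List[int]:
    """Hypothetical helper: (B, G, R) pixels to 8-bit gray with the BGR2GRAY weights."""
    return [min(255, round(0.114 * b + 0.587 * g + 0.299 * r)) for b, g, r in pixels]


def equalize_hist(gray: Sequence[int]) -> List[int]:
    """Hypothetical helper: equalise 8-bit gray values via the cumulative histogram."""
    hist = [0] * 256
    for value in gray:
        hist[value] += 1
    total = len(gray)
    # The first occupied bin is mapped to 0.
    first = next((i for i, count in enumerate(hist) if count), None)
    if first is None or hist[first] == total:
        return list(gray)  # empty or constant image: nothing to stretch
    scale = 255.0 / (total - hist[first])
    lut = [0] * 256
    cumulative = 0
    for i in range(first + 1, 256):
        cumulative += hist[i]
        lut[i] = min(255, round(cumulative * scale))
    return [lut[value] for value in gray]


if __name__ == "__main__":
    gray = bgr_to_gray([(0, 0, 255), (255, 255, 255), (0, 0, 0), (0, 0, 0)])
    print(gray, equalize_hist(gray))
```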

April 29, 2019

- Building OpenCV from source, following instructions from Linux installation tutorial.

- The command /usr/bin/opencv_traincascade is now available.

- The cascade models are installed in /usr/local/share/opencv4/lbpcascades/.

- Ibrahim used in his thesis the command opencv_traincascade -data Classifer -vec samples.vec -bg negatives.txt -numStages 20 -minHitRate 0.999 -maxFalseAlarmRate 0.4 -featureType LBP -numPos 1000 -numNeg 2000 -w 15 -h 15 -precalcValBufSize 6000 -precalcIdxBufSize 6000.
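OpenCV's cascade-training documentation estimates the overall hit rate as min_hit_rate^numStages and the overall false-alarm rate as max_false_alarm_rate^numStages. A small Python sketch (overall_rates is a hypothetical helper) applying this estimate to Ibrahim's parameters:

```python
from typing import Tuple


def overall_rates(stages: int, min_hit_rate: float,
                  max_false_alarm_rate: float) -> Tuple[float, float]:
    """Hypothetical helper: per-stage rates raised to the number of stages."""
    return min_hit_rate ** stages, max_false_alarm_rate ** stages


if __name__ == "__main__":
    hit, false_alarm = overall_rates(20, 0.999, 0.4)
    print(f"overall hit rate ~ {hit:.4f}, overall false-alarm rate ~ {false_alarm:.1e}")
```

This prints roughly 0.9802 and 1.1e-08: about 2% of the true positives may be lost over 20 stages, while false alarms become very rare.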

- Continued with cascade classifier tutorial.

- In ~/git/opencv/samples/cpp/example_cmake is an example Makefile. Modifying this for objectDetection.cpp compiles fine, only a number of libraries are missing (webp, gdal, hdf5). Executing sudo apt-get install libhdf5-serial-dev solved the latter. The command sudo apt install gdal-bin python-gdal python3-gdal didn't install the gdal library. The command sudo apt-get install libgdal-dev seems to solve this, but now all vtk-libraries are missing. Simply removed those vtk-libraries from the Makefile, by manually executing pkg-config --libs --static opencv and removing all references to vtk-libraries.

- Tried to run the example, but no webcam was found. Checked the device in several ways (lsusb; ls -al /sys/class/video4linux; v4l2-ctl --list-devices; uvcdynctrl -l; cheese), and finally connected an external Logitech camera. Now I received the message that an unknown format was used. Checked the camera with v4l2-ctl -d /dev/video0 --list-formats and received:

ioctl: VIDIOC_ENUM_FMT

Index : 0

Type : Video Capture

Pixel Format: 'PWC2' (compressed)

Name : Raw Philips Webcam Type (New)

Index : 1

Type : Video Capture

Pixel Format: 'YU12'

Name : Planar YUV 4:2:0
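The Pixel Format entries are FourCC codes: four ASCII characters packed into a 32-bit integer with the first character in the lowest byte, as V4L2's v4l2_fourcc macro does. A Python sketch (fourcc and fourcc_to_str are hypothetical helpers) to convert between the two forms, for example when comparing against a numeric format value:

```python
def fourcc(code: str) -> int:
    """Hypothetical helper: pack four characters little-endian, like v4l2_fourcc()."""
    if len(code) != 4:
        raise ValueError("a FourCC has exactly four characters")
    a, b, c, d = (ord(ch) for ch in code)
    return a | (b << 8) | (c << 16) | (d << 24)


def fourcc_to_str(value: int) -> str:
    """Hypothetical helper: unpack a 32-bit FourCC back into its four characters."""
    return "".join(chr((value >> shift) & 0xFF) for shift in (0, 8, 16, 24))


if __name__ == "__main__":
    for code in ("PWC2", "YU12"):
        print(code, hex(fourcc(code)))
```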

- Tried the Intel RealSense 3D Camera R200, Creative Senz3D and Xtion ProLive, but none gave a /dev/video0.

- Tried Skype, which works (the camera becomes active). Installed v4l2ucp with sudo apt-get install v4l2ucp and looked at the Video4Linux configuration, but /dev/video0 could not be opened. Tried Cheese again, and now it works! My hypothesis is that the camera was in deep sleep, and that Skype was able to wake it up, so that Cheese and Video4Linux could also access the camera.

- Could also run the example ./objectDetection --camera=0 --eyes_cascade=/usr/local/share/opencv4/haarcascades/haarcascade_eye_tree_eyeglasses.xml --face_cascade=/usr/local/share/opencv4/haarcascades/haarcascade_frontalface_alt.xml. It could recognize my face when I removed my glasses.

- According to this post, I could set CV_CAP_PROP_STREAM to one of the 12 options (with cvSetCaptureProperty(CvCapture* capture, int property_id, double value)).


April 23, 2019

February 27, 2019

- Explored the K12 lessons of StemLab.

February 4, 2019

- Yannis Kalantidis is able to learn motion 10x more effectively than by calculating optical flow, by generating motion cues.

- Also interesting are his Double Attention Networks, which classify different attention areas with classes (e.g. robot, ball) and enhance the combinations to classify the activity.

January 30, 2019

- Currently the DNT uses a stiffness of 0.8 for all joints, but Sun demonstrated that walking with a stiffness of 0.1 in the ankle joint is also possible. It would be nice to see whether this can be reproduced.

- It would also be a nice graduation project to see whether Sun's work can be reproduced on a V6 robot.

- Should first try move_faster.py, which increases the step size from 4 cm to 6 cm (and measures the speed); this should correspond to an increase from 9 cm/s up to 14 cm/s.
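Assuming the step frequency stays constant (an assumption; whether move_faster.py keeps it constant is not stated), speed scales linearly with step size: 9 cm/s at 4 cm per step implies 2.25 steps per second, so 6 cm steps would give 13.5 cm/s, close to the expected 14 cm/s. A Python sketch of this arithmetic (walking_speed is a hypothetical helper):

```python
def walking_speed(step_cm: float, steps_per_second: float) -> float:
    """Hypothetical helper: forward speed in cm/s for a step length and step frequency."""
    return step_cm * steps_per_second


if __name__ == "__main__":
    # Step frequency implied by the current gait: 9 cm/s with 4 cm steps.
    frequency = 9.0 / 4.0  # 2.25 steps per second
    print(walking_speed(6.0, frequency))
```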

January 15, 2019

- Moving robocup.cfg worked, the file is only a tag, it had no content.

January 14, 2019

- Sam is walking fine; tried to remove the DNT code from Moos. Yet, the v6 robots do not have a directory /etc/naoqi, and on both robots autoload.ini is empty. Where is the DNT code activated? Answer: in the file robocup.config in the home directory. Giving that file another name (e.g. robocup.cfg.dnt) and rebooting should solve this issue.

- Julia (nao10) is constantly complaining that the motors in the right arm (which is also marked) are too hot. Connected Julia to the internet and switched the Fall reflex override off in the advanced webpage. Could switch the warning off with ALMotionProxy::setDiagnosisEffectEnabled(True), but Julia was already quiet after I switched the reflex override. Stand up from rest works fine; wipe forehead with the right arm also succeeds.

January 8, 2019

- Installed Choregraphe 2.1.4.13 from Aldebaran resources

- Should have downloaded the setup, because the zip doesn't install Bonjour.

- Downloaded Bonjour v2.0.2 from Apple

- Now Choregraphe sees walle.local, but I couldn't ping walle.local. Luckily I could use its IP address. Then I became superuser (su), did cd /etc/naoqi and replaced autoload.ini with cp autoload.ini.naoqi autoload.ini.

- After the reboot the front button works again, but strangely enough walle couldn't connect to the network (while the ethernet cable was blinking). Did a full shutdown and reboot. That helped.

- Started to test the instructions from the Linux prompt on my Ubuntu machine. Created main.py and modules/globals.py. Yet, import naoqi still fails, so I will first update the Linux instructions.

Previous Labbook