Wishlist

- Create more functional the police agent behavior.

- Better cooperation of two ambulance agents saving a single civilian.

- Distribute the fire agents over the available water hydrants.

Split the

original Labbook in a Labbook about the Virtual Robot competition and one

about the Agent Competition.

December 4, 2014

July 28, 2014

- Tested the Dell USB3-Ethernet adapter. With one router I only got a inet6 adress, but on the DNT-router I got a inet4 adress and could ping info.science.uva.nl.

July 15, 2014

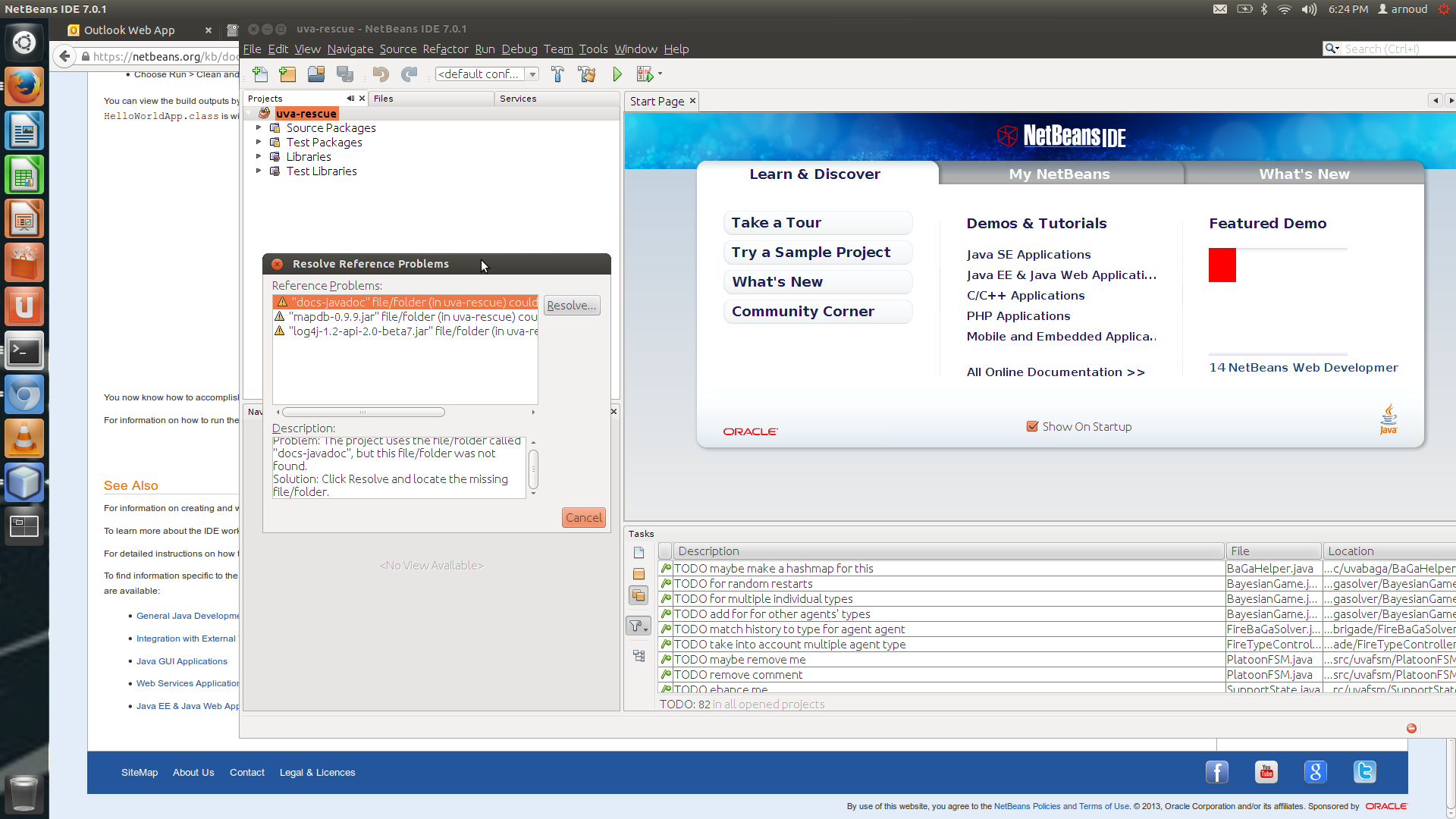

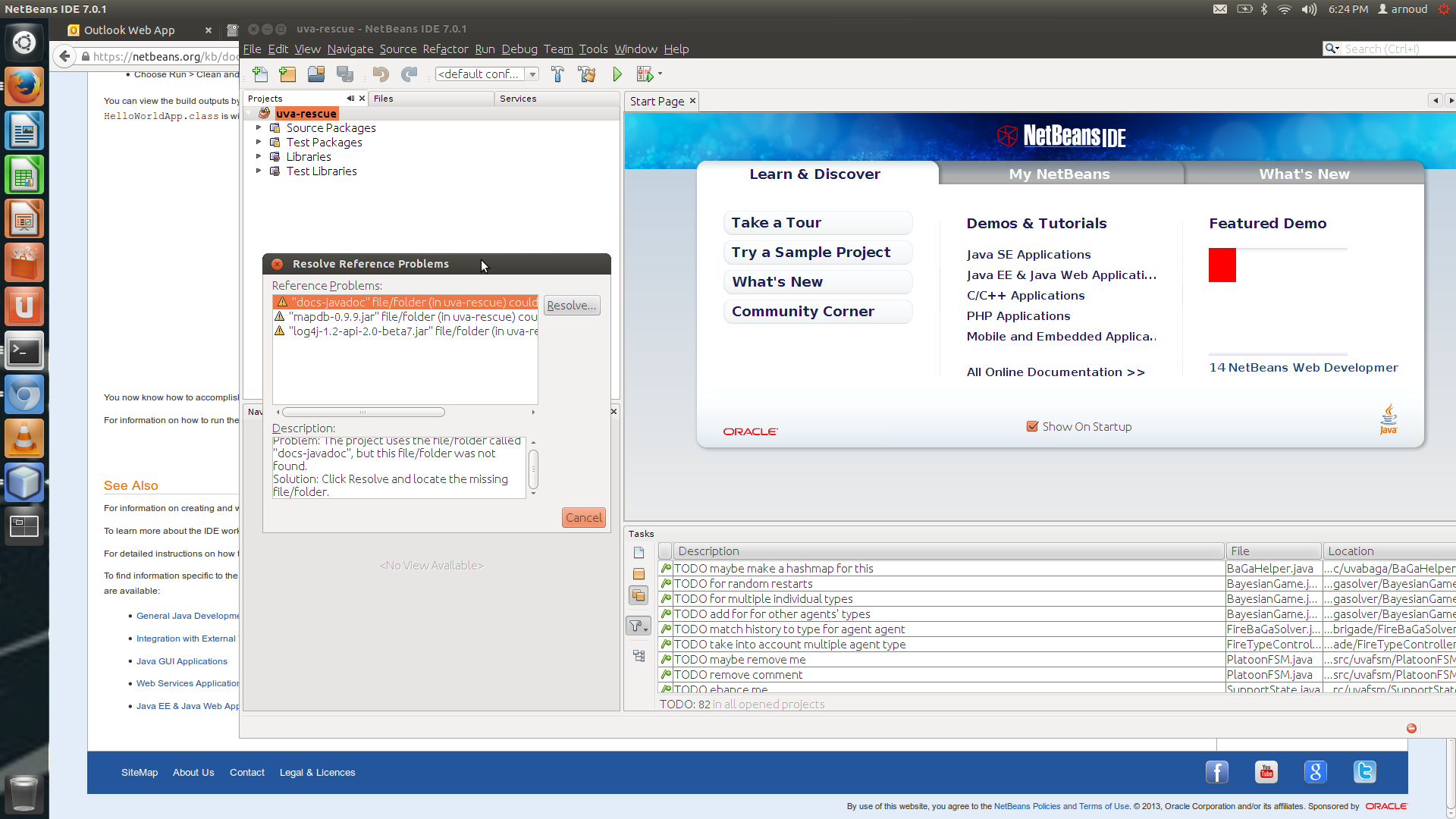

- Installed Netbeans on nb-ros. Loaded the IDE via Dash Home. Loaded the project by selecting the uva-rescue directory. Netbeans complained about three missing resources:

Missing are the docs-javadoc folder, the mapdb-0.9.9.jar and the log4j-1.2-api-2.0-beta7.jar.

July 2, 2014

- Followed the instructions at wepupd8.org and installed Oracle JDK (version 1.7.0_60). Now the build is succesful.

June 30, 2014

June 12, 2014

- Found the TDP from the Infrastructure winner of 2008: they proposed the introduction of emotions to the agents. That would have been a valuable addition when applied to the civilians, but they proposed it for the rescue agents.

- In 2010 Brave Circles proposed the flood simulator.

- Also found the TDP from the Infrastructure winner of 2011: they proposed an Online Rescue Simulation Launcher, which could be used to monitor the improvements of the teams during the year. Unfortunately, I never have seen this server online.

- Read the short-paper about the multi-agent challenge. The fire-fighting coordination problem is solved with distributed constraint optimisation problem, which seems to have no overlap with a MDP.

- Checked Jenning's article on MaxSum, but only found reference to complete algorithms, such as ADOPT [5], OptAPO [6], DPOP [7], NCBB [8] and AFB [9]; and approximate algorithms such as

the Distributed Stochastic Algorithm (DSA) [1], Maximum Gain Message (MGM) [10], and ALS_DisCOP [11] that do not.

- The Distributed Gibss article indicates that DUCT is a DCOP-algorithm applied on MDPs. Yet, I could find no reference to MDPs in this article.

- In this AAMAS2010 paper, DCOP is used as preprocessing step to help a greedy MDP solver to find higher-reward policies faster.

- Found a group in Southern California which is specialized in combining Dec-POMDPs and DCOPs.

- They called this combination Distributed Coordination of Exploration and Explotation (DCEE). DCEE agents do not know the reward function in advance (in contrast to DCOP agents). The paper ends with the following quote "Distributed POMDPs [2] are also not directly relevant: DECPOMDPs

plan over uncertainty in agent action outcomes, whereas

DCEE actions have known outcomes but uncertain rewards."

- The DCEE is more extended explained in the Advances in Complex Systems article (official copy), although the AAMAS paper is cited more often.

- Jennings is quite critical in his Bandits paper about DCOPs: "assumes perfect knowledge of he interactions between agents, thus limiting its practical applicability".

- To start working with the RMASBench the following guide is essential.

February 11, 2014

- As preparation of our Team Description Paper, we are reading some of last years TDPs.

- MRL's TDP is relevant their partitioning algorithm (based on K-means). Different algorithms are used to assign task to agents: first the Hungarian algorithm to assign agents to partitions, followed by negotation on tasks inside the partition. The authors trace the problem back to the Simplex algorithm, which is described in Russell&Norvig chapter 4 (with the recommendation to use instead interior-point methods). The Hungarian method is only mentioned in Russell&Norvig's chapter 15. Here the recommendation is to use a probabilistic approach: particle filters.

- SOS' TDP is less formal, but describe nicely the high level task of each agent-type. They use learning (with a neural net) to be able to predict priorities. An aspect which is clearly not covered yet by us is the searching behavior.

- GUC ArtSapience's TPD. GUC claims, as MRL, that an equal part of partitians and agent is optimal. No evidence for this claim. They claim that they have some preliminary results indicating that sharing world information next to task information improves the score. No learning in their approach (although they are happy about prioritizing tasks).

-

- Trying to get Mircea's code (rev 3) working. The repository contains a boot directory with several scripts, yet start.sh fails on missing maps, so it seems that I have to follow the install instructions from the agent competition. The log indicates that it expects for instant standard.jar in ../jars.

- Installed rng-tools. Downloaded rescue-2013.zip and performed ant, but compilation fails (my version of javac is 1.6.0_27, my version of ant 1.8.2). Error is located in:

/home/arnoud/svn/RescueAgents/rescue-2013/modules/misc/src/misc/MiscSimulator.java:68: illegal start of type

[javac] notExplosedGasStations=new HashSet<>();

- Repaired this bug, but the same empty constructor is used in TrafficSimulator. Should follow the instructions for Installing Oracle JDK.

Labbook 2013

Labbook 2012

Labbook 2011

Labbook 2010

Labbook 2009

Labbook 2008

Labbook 2007

Labbook 2006

Labbook 2005

Labbook 2004