Wishlist

- Connection to UsarCommander

- ROS nodes working for the platform, Kinect, laser scanner and INS.

Started

Labbook 2018.

December 11, 2017

October 17, 2017

August 21, 2017

August 17, 2017

- Connected to mbot08n. Here, the navigation machine is connected to the router. mbot08n has IP address 192.168.2.58, is configured with netmask 255.255.255.0, and uses 192.168.2.50 as gateway and 8.8.8.8 as DNS server. With the IP address of nb-ros manually set to 192.168.2.100, I could ping mbot08n. Yet, the machine shut down before I could try ssh.

- mbot08n initiates a shutdown because the electronics battery is too low. Running sudo shutdown -c cancelled it, but a new shutdown is scheduled regularly. The keyboard seems to have a non-US layout (the dash is in a different place). Added US International instead of the default Portuguese layout. Maybe I should install dconf-editor to handle the power shutdowns.

- dconf-editor is only useful for editing the events coupled to the (power) buttons. Installed lm-sensors and scanned for the available sensors, but running 'sensors' only gives the motherboard temperatures (no voltages). Luckily, the battery seems to be charging, because I got no further shutdown warnings after cancelling the first.

- Found mbot08r with the command fping -a -g 192.168.2.0/24: it has IP address 192.168.2.57, and I could access its web interface. mbot08r should have been assigned IP address 10.1.15.22 and mbot08n 10.1.15.23.

- mbot08n has the following commands in its .bashrc:

```bash
source /opt/ros/hydro/setup.bash
source /opt/monarch_msgs/setup.bash
source /opt/mbot_ros/setup.bash
source /home/monarch/sandbox/monarch/code/trunk/catkin_ws/devel/setup.bash
export ROS_PACKAGE_PATH=$ROS_PACKAGE_PATH:/home/monarch/sandbox/monarch/code/trunk/rosbuild_ws
export ROS_MASTER_URI=http://mbot08n:11311
export MBOT_NAME=mbot08
```

So roscd mbot_ros works.

- The next step in Chapter 23 of the integration manual is to run setup_and_launch_mbot.bash.

- Found the script in ~/sandbox/monarch/code/trunk/launch. Running it triggered a full build of the messages and all code.

- At the end most services seem to have started (although, for instance, the rfd node died), but the rostopic list is long (partly due to the connection to the other robots in /mbot08/sar). The scan topic exists; rostopic info /mbot08/scan gives:

```
Type: sensor_msgs/LaserScan

Publishers: None

Subscribers:
 * /mbot08/amcl (http://mbot08n:46333/)
 * /mbot08/webconsole (http://mbot08n:50449/)
 * /mbot08/navigation (http://mbot08n:57285/)
```

- There is neither imu_data nor odom among the topics!

- The web interface works, but I see no camera images. The only camera-related topics are:

```
/mbot08/behavior_patrollingapproachinteractcamera/cancel
/mbot08/behavior_patrollingapproachinteractcamera/feedback
/mbot08/behavior_patrollingapproachinteractcamera/goal
/mbot08/behavior_patrollingapproachinteractcamera/result
/mbot08/behavior_patrollingapproachinteractcamera/status
```

- Making the robot say something also doesn't work!

- Tried to visualize the laser scan, but adding /mbot08/scan to rviz didn't work.

- Tried to visualize it with rosrun rqt_plot rqt_plot, but only scalar fields such as range_max could be displayed.

- Saw with rosmsg show LaserScan that I needed /mbot08/scan/ranges.

- The only topic that displays anything is /rosout (with rostopic echo /rosout).

- The simple laser viewer no longer works, because it fails on import roslib; roslib.load_manifest('laser_view').
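The addressing noted on August 17 can be checked offline. A minimal sketch using Python's standard ipaddress module, assuming the values above (mbot08n at 192.168.2.58 with netmask 255.255.255.0, gateway 192.168.2.50, nb-ros at 192.168.2.100, mbot08r at 192.168.2.57): it shows that all these hosts share one /24 subnet, consistent with the ping working without extra routing, and lists the host addresses a ping sweep over 192.168.2.0/24 is meant to cover. The helper names are hypothetical.

```python
from __future__ import annotations

import ipaddress

# Addresses noted on August 17 (mbot08n is configured with this netmask).
NETMASK = "255.255.255.0"
HOSTS = {
    "mbot08n": "192.168.2.58",
    "mbot08r": "192.168.2.57",
    "nb-ros": "192.168.2.100",  # set manually on the laptop
    "gateway": "192.168.2.50",
}


def network_of(address: str, netmask: str = NETMASK) -> ipaddress.IPv4Network:
    """Network that an interface with this address and netmask belongs to."""
    # strict=False accepts a host address instead of the network address.
    return ipaddress.IPv4Network(f"{address}/{netmask}", strict=False)


def same_subnet(a: str, b: str, netmask: str = NETMASK) -> bool:
    """True if hosts a and b are on the same subnet (reachable without a router)."""
    return network_of(a, netmask) == network_of(b, netmask)


def sweep_targets(cidr: str) -> list[str]:
    """Usable host addresses in cidr (network and broadcast addresses excluded)."""
    return [str(ip) for ip in ipaddress.IPv4Network(cidr).hosts()]


if __name__ == "__main__":
    net = network_of(HOSTS["mbot08n"])
    print("mbot08n is on", net)
    for name, addr in HOSTS.items():
        print(f"{name:8s} {addr:15s} same subnet: {same_subnet(HOSTS['mbot08n'], addr)}")
    targets = sweep_targets(str(net))
    print(f"{len(targets)} host addresses, {targets[0]} .. {targets[-1]}")
```

The same check also shows that the planned 10.1.15.x addresses would not be on this subnet, so nb-ros would need a matching address once the robots are reconfigured.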
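Since rqt_plot only shows scalar fields and the old laser viewer no longer loads, the ranges array can be inspected by converting it to points, which is essentially what a simple laser viewer does. A minimal sketch without ROS, assuming the sensor_msgs/LaserScan conventions (beam i at angle angle_min + i * angle_increment, counter-clockwise from the sensor's x axis; readings outside [range_min, range_max] are invalid). The function name and the sample scan are hypothetical; in practice the values would come from a /mbot08/scan message once something publishes it.

```python
import math
from typing import List, Sequence, Tuple


def scan_to_points(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    range_min: float,
    range_max: float,
) -> List[Tuple[float, float]]:
    """Convert LaserScan-style ranges (polar, sensor frame) to (x, y) points.

    Readings that are NaN, infinite or outside [range_min, range_max]
    are skipped, as LaserScan consumers are expected to do.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue  # invalid reading: no return or out of sensor range
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Hypothetical 3-beam scan at -90, 0 and +90 degrees; the last beam has no return.
    pts = scan_to_points([1.0, 2.0, float("inf")], -math.pi / 2, math.pi / 2, 0.1, 5.0)
    for x, y in pts:
        print(f"x={x:.2f} y={y:.2f}")
```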

August 16, 2017

- Checked the Ethernet connections; both are connected to the router. Yet, the router doesn't hand out IPv4 addresses, only IPv6 addresses. It is not clear what the router's own IPv6 address is.

- Connected the router via its internet port to the TP-Link from DNT. Now mbot12h and nb-ros get an IPv4 address from the DNT router, but mbot12n is not in the client list. Without the DNT router, the network connection claims to be connected (but has no IPv4 or IPv6 address). ifconfig shows both eth0 and eth1, but only eth0 gets an IPv6 address.

- In /etc/hosts mbot12l is mentioned (192.168.2.37).

- Going to try the tricks from askubuntu.
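Whether the IPv6 addresses that ifconfig shows were handed out via the router or are merely self-assigned can be told from the address itself: IPv6 addresses in fe80::/10 (and IPv4 addresses in 169.254.0.0/16) are link-local and can be configured by the host without any DHCP server or router. A small sketch using Python's standard ipaddress module; the classify helper is hypothetical and the link-local sample addresses are made up.

```python
import ipaddress


def classify(address: str) -> str:
    """Label an address as printed by ifconfig, e.g. 'fe80::1%eth0' or '10.0.0.5'."""
    # Strip an optional zone index (%eth0) or prefix length (/64).
    bare = address.split("%")[0].split("/")[0]
    ip = ipaddress.ip_address(bare)
    family = f"IPv{ip.version}"
    if ip.is_loopback:
        return f"{family} loopback"
    if ip.is_link_local:
        # fe80::/10 or 169.254.0.0/16: self-assigned, not handed out by a router.
        return f"{family} link-local"
    if ip.is_private:
        return f"{family} private"
    return f"{family} global"


if __name__ == "__main__":
    # Two made-up link-local examples plus addresses that appear in these notes.
    for a in ["fe80::21e:67ff:fe0a:1b2c/64", "169.254.10.20", "192.168.2.37", "8.8.8.8"]:
        print(f"{a:28s} -> {classify(a)}")
```

An interface that only ever shows a link-local address has, in effect, not received an address from the network.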

August 14, 2017

- The password that Gwenn provided was correct; I can now log in as monarch on mbot12h. The password is also given in section 8.1.2 of Deliverable 2.2.1. A terminal can be opened from the keyboard with Ctrl+Alt+T (the USB hub was no longer working?!). The monarch environment starts with the screen in portrait mode. The .bashrc ends with sourcing /opt/ros/hydro/setup.bash, followed by the setup from monarch_svn. The svn also contains docs. The svn points to http://svnmanager.isr.ist.utl.pt/svn/monarch.

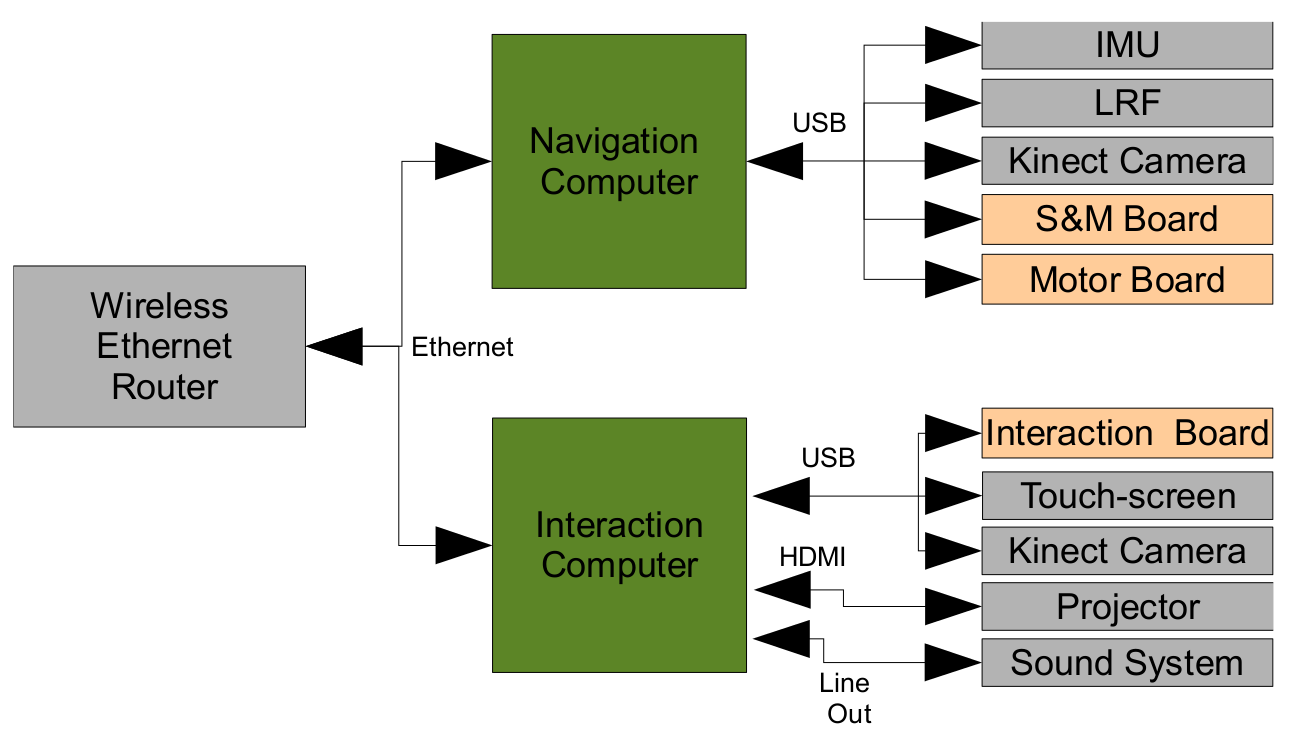

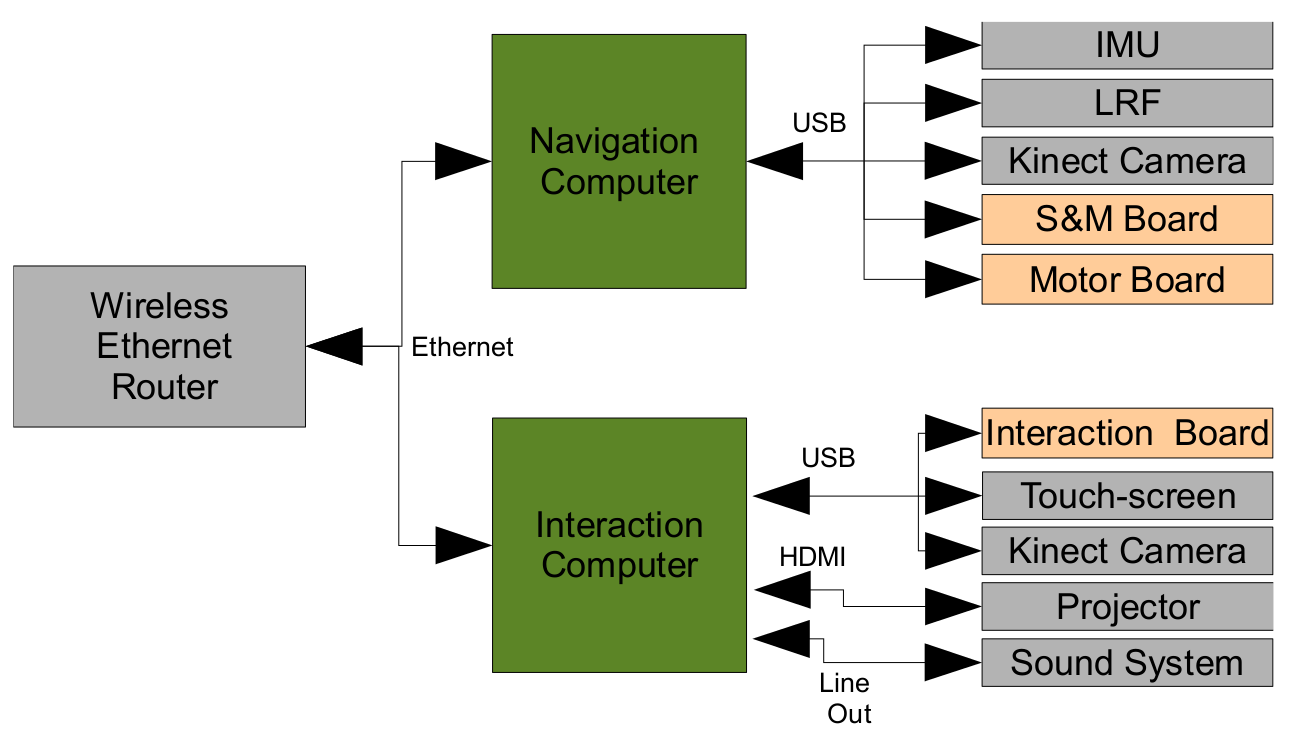

- Copied the documentation to a USB stick to inspect it on a larger screen (the hardware architecture is depicted in Fig. 6.1):

From Monarch Deliverable 2.2.1

- This deliverable also specifies which sensor should be connected to which USB port!

- Our mbot12h should get IP address 10.1.15.36 (and navigation 10.1.15.35, router 10.1.15.34), but the cable seems to be disconnected.

- The Monarch-specific messages are cmd_arms, cmd_leds, cmd_head and cmd_mouth. The base is steered by the regular cmd_vel.

- The instructions in section 18.1 have not been followed (no MBOT_NAME in the environment).

- Also, roscd mbot_ros gives "No such package".

- On the navigation PC the daemon mbotrosd should be running, which can be checked with sudo service mbotrosd status (section 8.1.3).

- Section 23.1 is called "Checking if system is operational". The first step is to launch setup_and_launch_mbot.bash and check for the topics /scan, /imu_data and /odom (on the navigation computer, I presume). This launch script can be found in monarch/code/trunk/launch.

- Other deliverables can be found at the Monarch project site.

- Our robot has neither a Star Gazer sensor nor an Ultra Wide Band location system tag.
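The check from section 23.1 (look for /scan, /imu_data and /odom) and the missing MBOT_NAME from section 18.1 can be scripted together. A minimal sketch, assuming topics are namespaced as /<MBOT_NAME>/<topic> (as /mbot08/scan is on mbot08n) and that the topic list is pasted from the output of rostopic list; the function names and sample input are hypothetical. A listed topic is not necessarily published: rostopic info can still report "Publishers: None".

```python
import os
from typing import Iterable, List, Mapping

# Topics section 23.1 says to look for after setup_and_launch_mbot.bash.
REQUIRED_TOPICS = ("scan", "imu_data", "odom")
# Variables the mbot .bashrc is expected to export.
REQUIRED_ENV = ("MBOT_NAME", "ROS_MASTER_URI")


def missing_env(env: Mapping[str, str]) -> List[str]:
    """Names of required environment variables that are unset or empty."""
    return [name for name in REQUIRED_ENV if not env.get(name)]


def missing_topics(topics: Iterable[str], robot: str) -> List[str]:
    """Required topics that are absent under the /<robot>/ namespace."""
    present = set(topics)
    wanted = [f"/{robot}/{t}" for t in REQUIRED_TOPICS]
    return [t for t in wanted if t not in present]


if __name__ == "__main__":
    problems = missing_env(os.environ)
    if problems:
        print("not set:", ", ".join(problems))
    # Hypothetical input: paste the output of `rostopic list` here.
    listed = "/mbot08/scan\n/rosout\n".splitlines()
    robot = os.environ.get("MBOT_NAME", "mbot08")
    print("missing topics:", missing_topics(listed, robot) or "none")
```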

August 8, 2017

- Tried to switch on the mbots. Disabled the mbot without arms. The white front plate could be removed after removing four screws at the side, four in the ground plate and three screws of the slid-detector at the front. Those screws sit in loose mounts, so I lost one inside the robot. With the front plate removed, I saw that the motherboard has a DVI connector.

- Connected the DVI connector to the navigation board of the mbot with arms, but nothing happened. Connected it to the HRI board of the mbot with arms and switched the charger on. Now the screen on its belly came to life (although with no signal). Connecting the loose DVI cable to the HRI board solved the issue. Got a GRUB boot menu and chose the first Ubuntu option.

- After a while I got a login screen for Ubuntu 12.04, with users gwenn, monarch and guest. Used a monitor as USB hub, connected a keyboard and mouse and was able to get a terminal (the side of the screen is a bit hidden behind the front plate).

- Wireless is switched on, but no networks are visible. This should be solvable with a wired connection to a network hub and/or a wireless USB adapter in my hub.

- First I need a password for monarch (or gwenn).

June 27, 2017

June 20, 2017

June 19, 2017

- Tirza uses a lot of the software from Stanford, but does not refer to the publications. Yet, the figures illustrating the dependencies in this paper would be very illustrative in the wordy section 3.2.2. Also, the introduction indicates why Stanford's shallow representation seems appropriate for this task (relations between content words are more important than the overall tree structure).

- Tirza's proposed system seems to concentrate on the dialog state, as indicated in Fig. 1 of the overview paper.

- According to the article, there are three families of algorithms to track the dialog state: hand-crafted rules, generative models, and discriminative models. Tirza, like most human-robot interaction researchers, is in the hand-crafted rule family. Yet, on page 7 Williams indicates that robots are out of scope of this review. Instead, he points to Bohus and Horvitz 2009 and Ma et al. 2012. The first is an avatar with a behavior and dialog model; the second is less relevant (requests distributed over a map).
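To make the hand-crafted rule family more concrete, a toy slot-based tracker: each turn, a (hypothetical) language-understanding step delivers (slot, value, confidence) hypotheses, and fixed rules decide what enters the dialog state. The rules, threshold and example slots are illustrative assumptions, not taken from Williams' review or from Tirza's system.

```python
from typing import Dict, List, Tuple

# One language-understanding hypothesis: (slot, value, confidence in [0, 1]).
SluHyp = Tuple[str, str, float]
State = Dict[str, Tuple[str, float]]


def update_state(state: State, hyps: List[SluHyp], threshold: float = 0.5) -> State:
    """Apply hand-crafted update rules (hypothetical) and return a new state.

    1. Ignore hypotheses with confidence below `threshold`.
    2. Store a confident hypothesis for a slot that is not yet filled.
    3. For a filled slot, keep the value with the higher confidence
       (ties go to the newer value).
    """
    new_state = dict(state)  # do not modify the previous turn's state
    for slot, value, conf in hyps:
        if conf < threshold:
            continue  # rule 1
        old = new_state.get(slot)
        if old is None or conf >= old[1]:
            new_state[slot] = (value, conf)  # rules 2 and 3
    return new_state


if __name__ == "__main__":
    state: State = {}
    turns = [
        [("object", "cup", 0.8), ("room", "kitchen", 0.3)],
        [("room", "kitchen", 0.7), ("object", "glass", 0.6)],
    ]
    for hyps in turns:
        state = update_state(state, hyps)
        print(state)
```

Generative and discriminative trackers replace these fixed rules with a probabilistic model whose parameters are set or learned from data.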

June 16, 2017

June 12, 2017

June 8, 2017

June 7, 2017

June 6, 2017

- Read Chapter 1 and Appendix D of Mavridis' thesis. Although a bit strangely organized and relying more on examples than on a general overview, the message comes across.

May 31, 2017

May 30, 2017

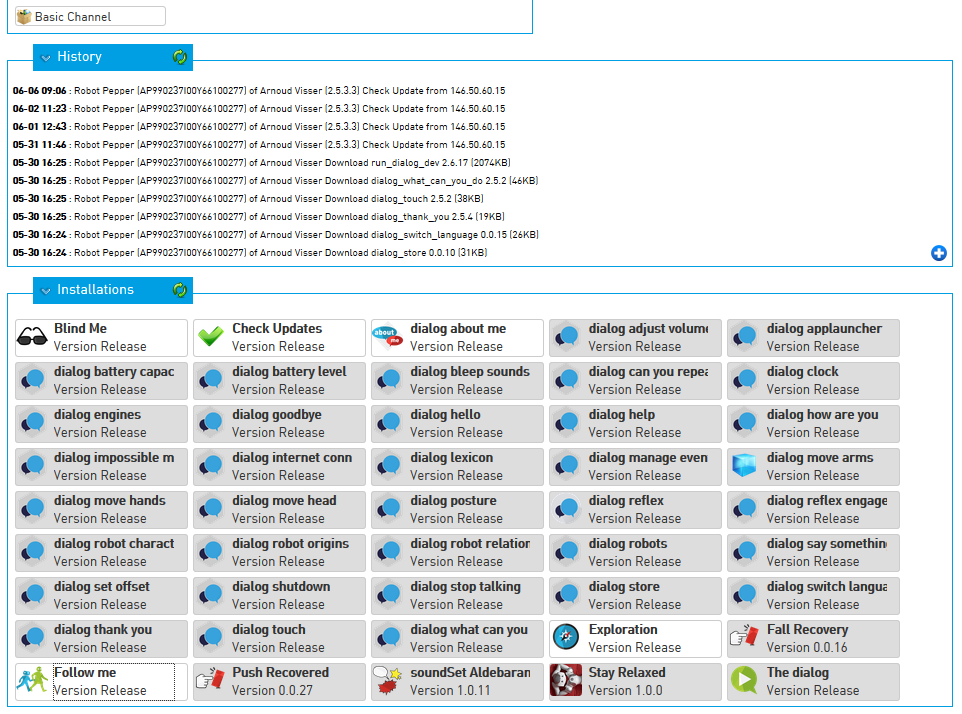

- Went to the resource page of the Aldebaran Community and downloaded NaoQi 2.5.5.5. Installed Choregraphe on my Windows laptop.

- I only saw NaoQi 2.1.2 as a VirtualBox image.

- Found the chest button of Pepper. Choregraphe doesn't see Pepper on the network, and Pepper doesn't say her IP. Checked the Pepper User Guide: pushing the chest button once should make Pepper say its IP. Yet, it seems that the sound is off, and giving the voice command "Speak louder" didn't help.

- Found how to access the LAN-port via the forum (use the metal key!).

- With the cable, I could connect to Pepper. Its hostname seems to be pepper.local. Pepper was also connected wirelessly, with IP 146.50.60.45.

- Went through the wizard; the app page said that 30 apps should be updated (9 OK). Performed the update. Now 41 apps are up to date.

- According to the web interface, the NaoQi version is 2.5.3.3 (instead of 2.5.5.5).

- Maybe a good idea to update, because 2.5 has navigation!

- Differences between 2.5.5 and 2.5.3 are minimal.

- Added a billing address, but the install button in the Aldebaran Store is still grey.

- Checked this post, because I couldn't find an overview of which 41 apps were installed.

- I could say "Hello world" from Choregraphe (and login via ssh).
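The "Hello world" from Choregraphe can also be sent from a script, which additionally allows raising the volume that seemed to be off. A sketch, assuming the NAOqi 2.5 Python SDK (the qi module, which targets Python 2.7, so the code avoids Python-3-only syntax) is installed and Pepper is reachable as pepper.local on the default NAOqi port 9559. ALTextToSpeech.say and ALAudioDevice.setOutputVolume are NAOqi services; the helper names and the volume of 70 are hypothetical and untested on this robot.

```python
from __future__ import print_function

import sys

PEPPER_HOST = "pepper.local"  # hostname found via the LAN cable
NAOQI_PORT = 9559  # default NAOqi port (assumed unchanged on this robot)


def naoqi_url(host, port=NAOQI_PORT):
    """URL in the form that qi.Session.connect accepts."""
    return "tcp://{}:{}".format(host, port)


def clamp_volume(volume):
    """ALAudioDevice output volume is a percentage, so keep it within 0..100."""
    return max(0, min(100, int(volume)))


def say(text, host=PEPPER_HOST, volume=70):
    """Raise the speaker volume, then let Pepper speak `text`."""
    import qi  # NAOqi Python SDK; imported here so the helpers above work without it

    session = qi.Session()
    session.connect(naoqi_url(host))
    session.service("ALAudioDevice").setOutputVolume(clamp_volume(volume))
    session.service("ALTextToSpeech").say(text)


if __name__ == "__main__":
    try:
        say("Hello world")
    except ImportError:
        print("qi module not found; install the NAOqi Python SDK first", file=sys.stderr)
    except RuntimeError as err:
        print("could not reach Pepper:", err, file=sys.stderr)
```

With the wireless address noted above, say("Hello world", host="146.50.60.45") should work without the cable, provided the laptop is on the same network.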

May 23, 2017

- Mavridis' overview paper lists 10 desiderata, but concentrates on the symbol grounding problem (the subject of his thesis). This is also the main question for Tirza, so it is a must-read for her.

- Tirza found a paper of CMU describing the integration of Pepper with ROS Indigo.

May 22, 2017

May 18, 2017

May 17, 2017

May 10, 2017

- Bas Terwijn now also maintains a labbook.

- Bas first tried Kinetic / Ubuntu 16.04, but went back (May 4) to Indigo / Ubuntu 14.04.

- Jonathan came on May 2 to the same conclusion.

- The Scrum-page of Jonathan shows less progress than the labbook.

- Also checked Tirza's proposal and labbook.

- Looked at the technology behind Hanson Robotics; they have several pieces of open AI software:

Note that GlueAI claims to be ROS compatible. Yet, the page is from 2014. Could find a reference to Glue.AI on ros.org.

May 9, 2017

- Yesterday I saw a link to multi-person skeleton detection with a single webcam: CMU OpenPose.

- Work is based on this paper.

May 1, 2017

Previous Labbooks