TCP Tests

Description

The goal of the TCP tests was to get an impression of the performance of the site-local loop Force10 - Cisco 6509 - AR5. As already mentioned in the "Performed Experiments" section, the effect of removing an intrinsic 8 Gbits/s bottleneck in the Cisco 6509 by an upgrade of this switch is investigated. In addition, the effects of some Web100 Linux kernel parameter settings and of the MTU size are shown.

As mentioned in the "Setup" section, the DAS-2 cluster nodes have been separated into two VLANs. TCP flows were generated with the Iperf V. 1.6.5 traffic generator between pairs of cluster nodes, with one node of each pair in each VLAN. Because the goal of these tests was to measure the performance of the route, each flow was generated between a single source and a single destination host. The TCP tests were executed as a function of the number of source - destination node pairs, the number of parallel flows per node pair (generated by Iperf using the pthread library) and the TCP window size. The window size was varied such that the sum of the TCP windows over the parallel flows of a node pair was kept constant, which makes it possible to compare the effect of the number of parallel flows per node pair. Because the round-trip time is small, the effect of varying the TCP window size can be expected to be limited.
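The test grid described above can be sketched in Python as follows. This is a minimal illustration and not the scripts that were actually used. The names `GridPoint` and `grid_points`, the rounding of the per-flow window down to whole bytes, and the server host name are all assumptions. The Iperf client options `-c` (server), `-P` (number of parallel client threads) and `-w` (TCP window size) are standard Iperf options.

```python
from dataclasses import dataclass
from typing import Iterator, List

# Summed TCP window per node pair (KBytes) and the range of parallel flows,
# taken from the text; everything else in this sketch is illustrative.
TOTAL_WINDOWS_KB = (32, 64, 128, 256)
PARALLEL_FLOWS = range(1, 11)  # 1..10 parallel flows per node pair


@dataclass(frozen=True)
class GridPoint:
    node_pairs: int
    parallel_flows: int
    total_window_kb: int

    @property
    def window_per_flow_bytes(self) -> int:
        # Keep the window summed over the parallel flows constant by giving
        # each flow an equal share (assumed: rounded down to whole bytes).
        return self.total_window_kb * 1024 // self.parallel_flows

    def iperf_client_args(self, server: str) -> List[str]:
        # One Iperf client per node pair: -P starts the parallel flows as
        # threads, -w sets the per-flow TCP window in bytes.
        return ["iperf", "-c", server,
                "-P", str(self.parallel_flows),
                "-w", str(self.window_per_flow_bytes)]


def grid_points(min_pairs: int, max_pairs: int) -> Iterator[GridPoint]:
    """Yield every combination of window sum, node pairs and parallel flows."""
    for total_kb in TOTAL_WINDOWS_KB:
        for pairs in range(min_pairs, max_pairs + 1):
            for flows in PARALLEL_FLOWS:
                yield GridPoint(pairs, flows, total_kb)


if __name__ == "__main__":
    # Before the 6509 upgrade: 1..11 node pairs ("node-b01" is a made-up host).
    points = list(grid_points(1, 11))
    print(len(points))  # 440
    print(" ".join(GridPoint(1, 3, 32).iperf_client_args("node-b01")))
    # iperf -c node-b01 -P 3 -w 10922
```

Because the summed window is fixed, the per-flow window shrinks as the number of parallel flows grows. Any throughput difference between grid points with the same summed window therefore reflects the number of flows rather than the total window.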

Results

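In the subsections below, TCP throughput results are presented for the situations described above, in the following order:

- Bottleneck 6509: 8 Gbits/s; No Web100 Buffer Resize; MTU: 1500 Bytes
- Bottleneck 6509: 8 Gbits/s; No Web100 Buffer Resize; MTU: 8176 Bytes
- Bottleneck 6509: 8 Gbits/s; Default Buffer Resize; MTU: 1500 Bytes
- Bottleneck 6509: 8 Gbits/s; Default Buffer Resize; MTU: 8192 Bytes
- No Bottleneck 6509; Default Buffer Resize; MTU: 8192 Bytes

To be able to register the traffic reliably in the SNMP counters of the network equipment, a TCP test using flows with a duration of 30 min. has also been executed:

- No Bottleneck 6509; Flows of 30 Min.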

Bottleneck 6509: 8 Gbits/s; No Web100 Buffer Resize; MTU: 1500 Bytes

In this subsection, results are presented of TCP tests that were executed while the Cisco 6509 was still constrained by an internal bottleneck of 8 Gbits/s. In these tests, the Web100 kernel parameters had been set such that the receive and send buffer sizes are no longer automatically adjusted to the congestion window (cwnd). This can be done by setting the /proc pseudo file system entries /proc/sys/net/ipv4/web100_sbufmode and /proc/sys/net/ipv4/web100_rbufmode to zero; their default value is one. Alternatively, the command

sysctl -w net.ipv4.web100_rbufmode=0 net.ipv4.web100_sbufmode=0

can be run.
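The /proc route can be sketched in Python as below. This is a minimal sketch, assuming a Web100-patched kernel that exposes exactly these two entries and a process running as root. The function name `set_web100_bufmode` and the `proc_ipv4` parameter are illustrative and not part of Web100; the parameter lets the function be tried against an ordinary directory. Writing 1 restores the default resizing behaviour.

```python
from pathlib import Path
from typing import Dict

# The two Web100 entries named in the text: 0 disables automatic send/receive
# buffer resizing, 1 (the default) enables it.
WEB100_BUFMODE_ENTRIES = ("web100_sbufmode", "web100_rbufmode")


def set_web100_bufmode(value: int,
                       proc_ipv4: Path = Path("/proc/sys/net/ipv4")) -> Dict[str, int]:
    """Write `value` to both Web100 buffer-mode entries and read it back."""
    if value not in (0, 1):
        raise ValueError("buffer mode must be 0 or 1")
    readback = {}
    for name in WEB100_BUFMODE_ENTRIES:
        entry = proc_ipv4 / name
        # On a kernel without the Web100 patch these entries do not exist;
        # fail loudly instead of silently creating a regular file.
        if not entry.exists():
            raise FileNotFoundError(f"{entry} not found (no Web100 kernel?)")
        entry.write_text(f"{value}\n")
        readback[name] = int(entry.read_text().strip())
    return readback
```

Usage, as root on a Web100 kernel: `set_web100_bufmode(0)` before the tests and `set_web100_bufmode(1)` afterwards.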

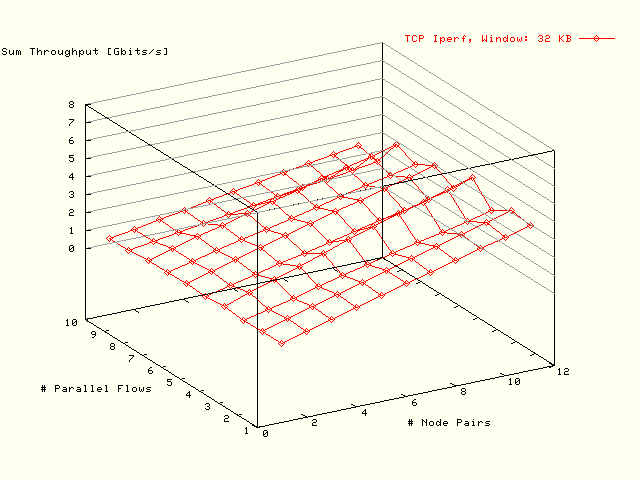

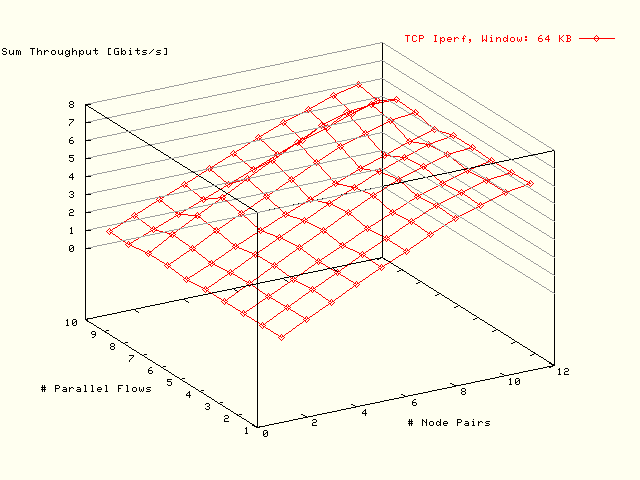

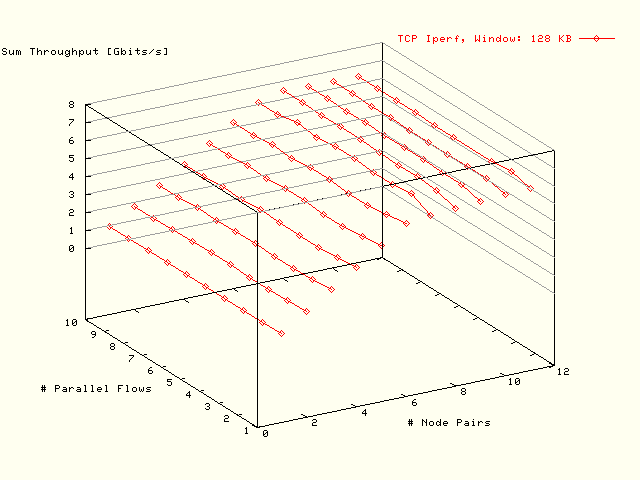

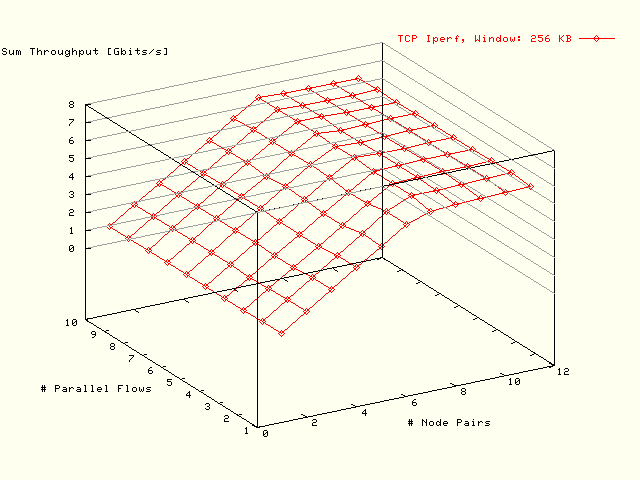

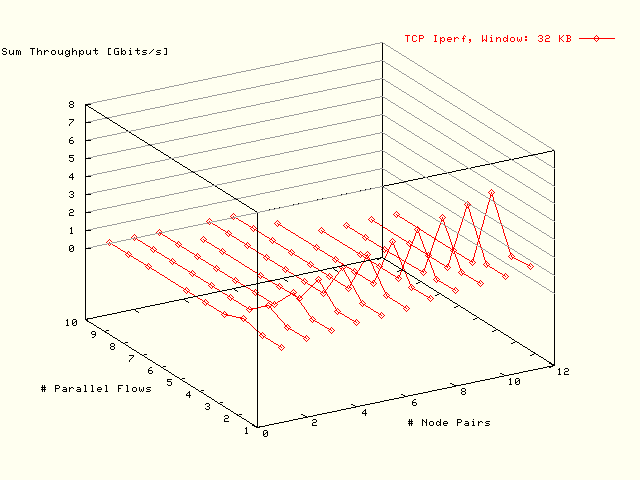

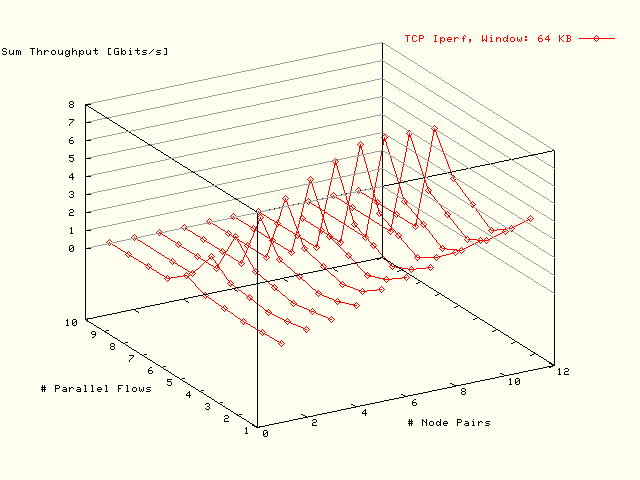

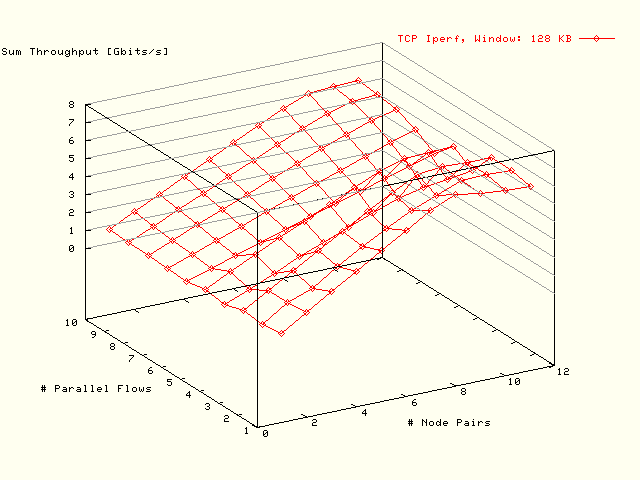

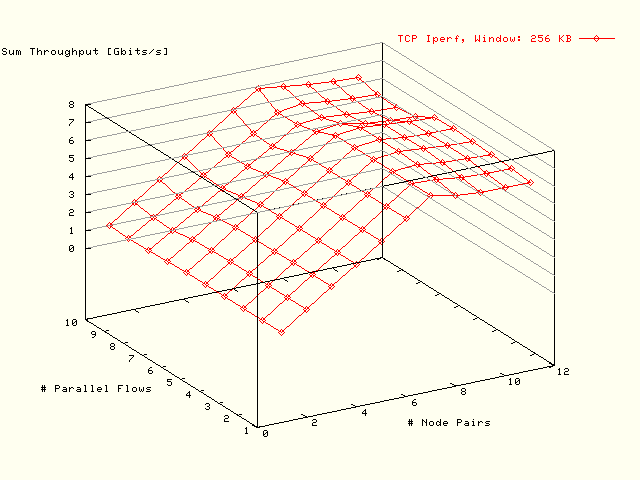

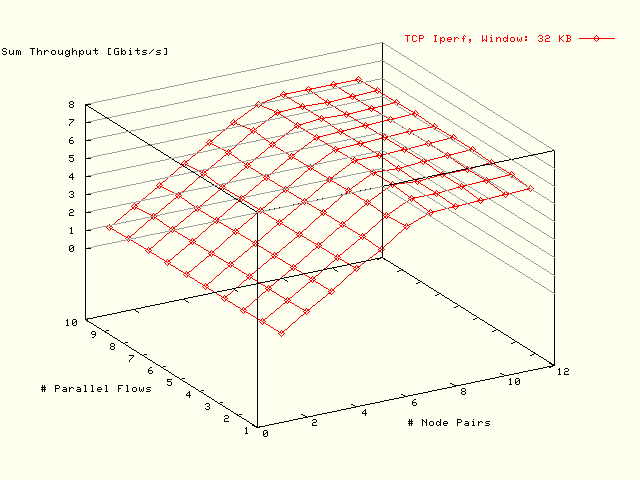

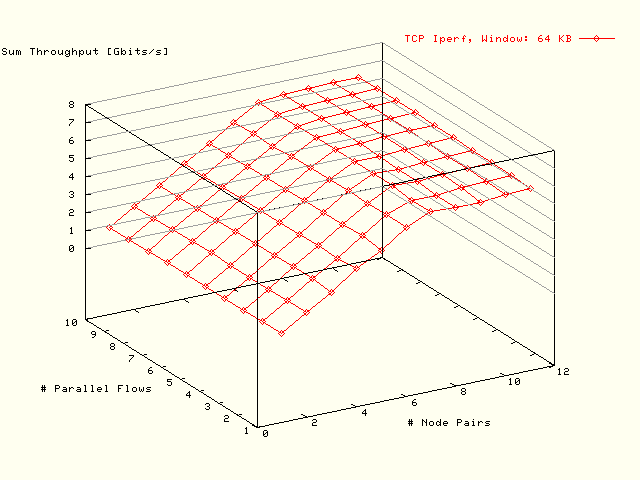

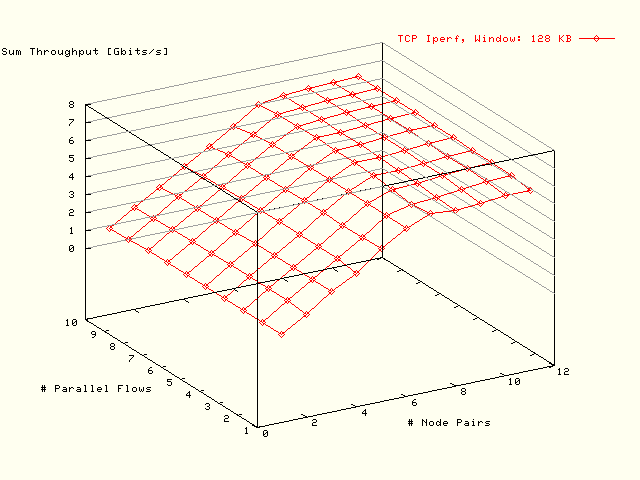

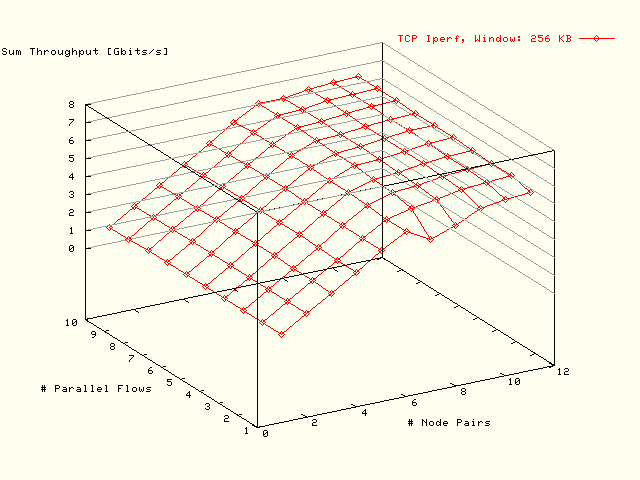

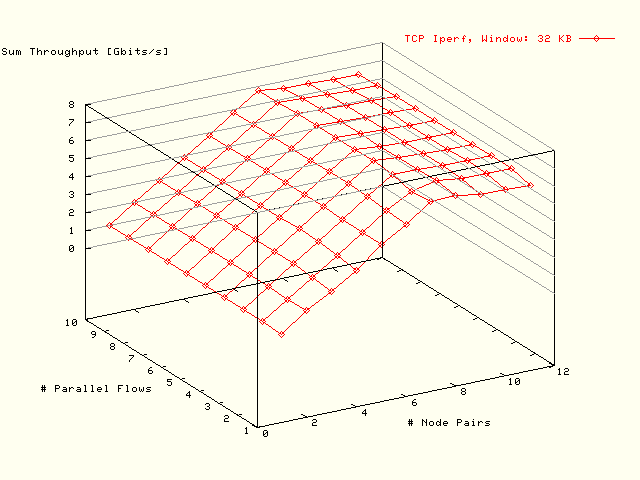

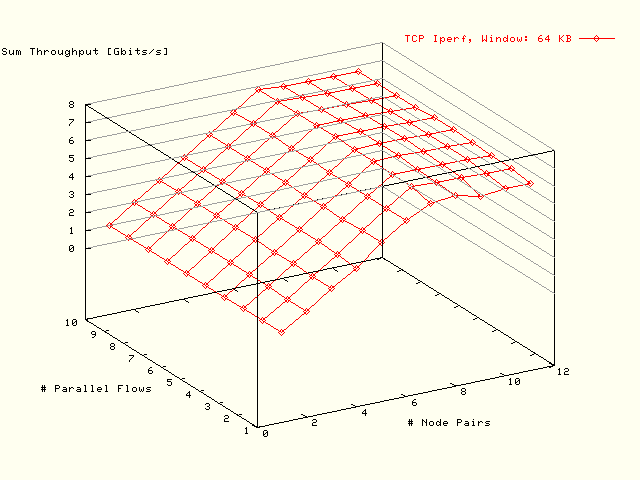

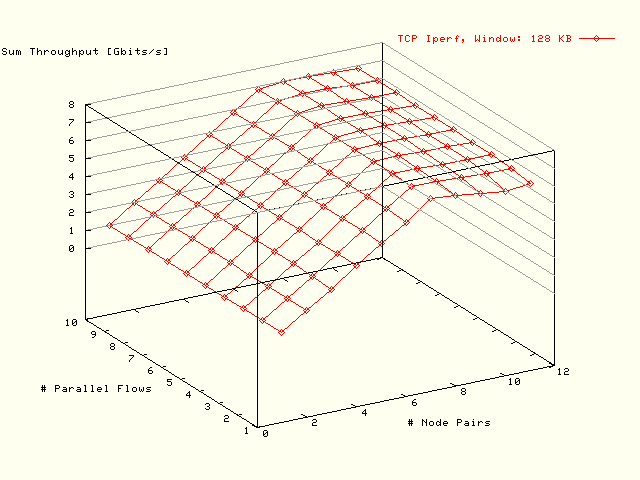

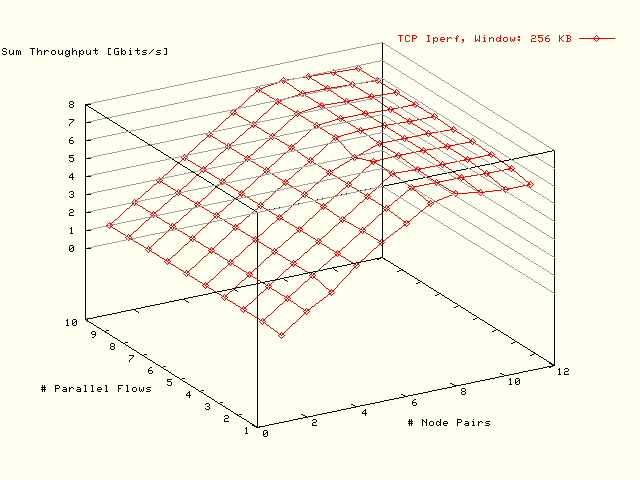

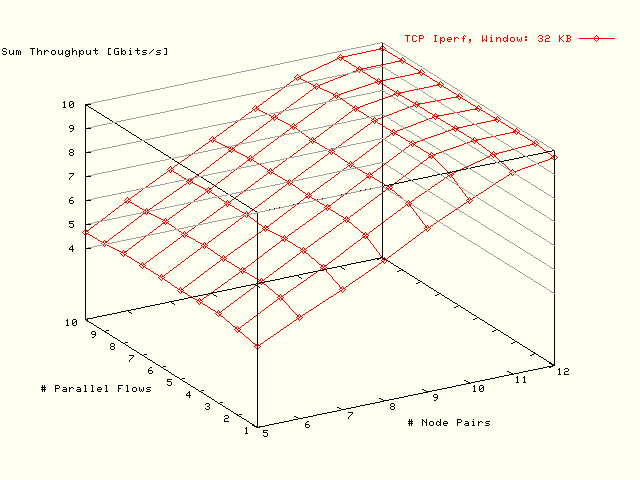

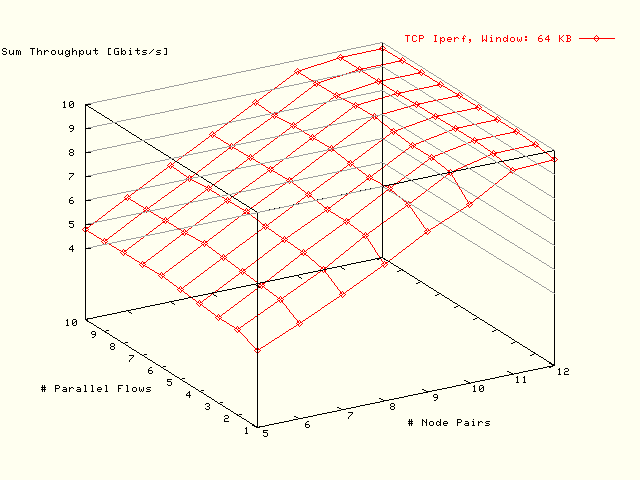

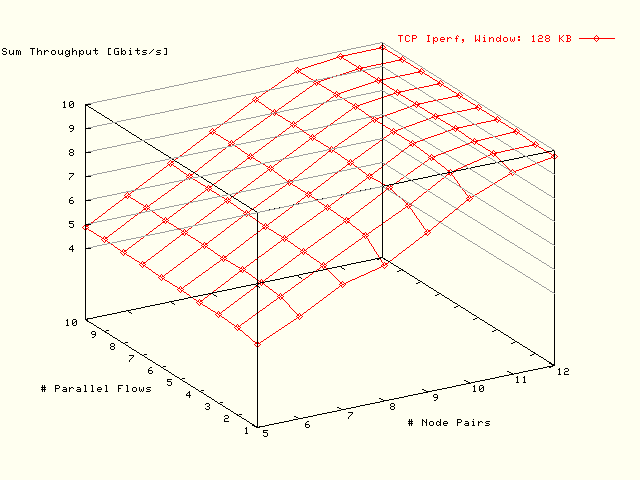

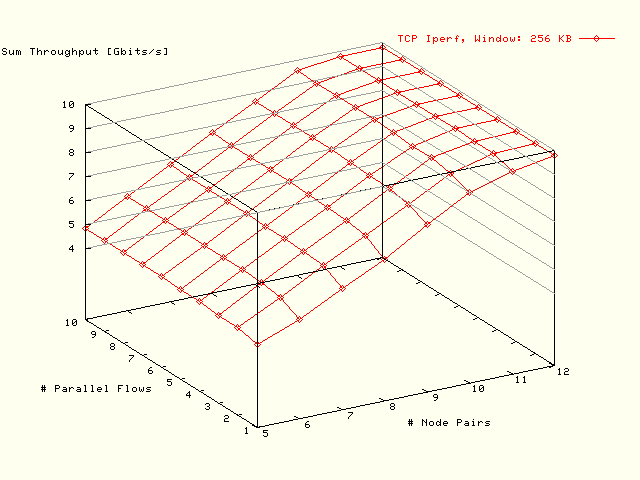

The TCP throughput tests were performed as described in the "Description" section above. They were executed with 1 to 11 node pairs (the maximum number available at that moment) and with 1 to 10 parallel flows per node pair. The MTU was 1500 Bytes. In the figures below, the TCP throughput values are plotted as a function of the number of node pairs and of the number of parallel flows per node pair, for window sizes of 32, 64, 128 and 256 KBytes.
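The figures plot the sum of the TCP throughput over all node pairs. The sketch below shows one way that sum could be obtained from the Iperf client report of each node pair. The report line format in the comments is an assumption about Iperf's end-of-test summary, and the sketch assumes no `-i` interval reports. Check the regular expression against real Iperf 1.6.5 output before relying on it.

```python
import re
from typing import Iterable

# Assumed shape of an Iperf client summary line (no -i interval reports):
#   "[  3]  0.0-10.0 sec  1.09 GBytes   939 Mbits/sec"
#   "[SUM]  0.0-10.0 sec  2.05 GBytes  1.76 Gbits/sec"   (with -P > 1)
REPORT_LINE = re.compile(
    r"^\[\s*(\w+)\]\s+[\d.]+\s*-\s*[\d.]+\s+sec\s+\S+\s+\S+\s+([\d.]+)\s+([KMG])bits/sec")
TO_MBITS = {"K": 1e-3, "M": 1.0, "G": 1e3}


def pair_throughput_mbps(report: str) -> float:
    """Throughput of one node pair: the [SUM] line if present, else the sum of the per-flow lines."""
    per_flow, summed = [], None
    for line in report.splitlines():
        match = REPORT_LINE.match(line.strip())
        if match is None:
            continue  # header, connection and other non-summary lines
        mbps = float(match.group(2)) * TO_MBITS[match.group(3)]
        if match.group(1) == "SUM":
            summed = mbps
        else:
            per_flow.append(mbps)
    return summed if summed is not None else sum(per_flow)


def total_throughput_mbps(reports: Iterable[str]) -> float:
    """Sum TCP throughput over all node pairs (one Iperf report per pair)."""
    return sum(pair_throughput_mbps(r) for r in reports)
```

The [SUM] line is preferred when present, so each parallel flow is not counted twice.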

| . |

|

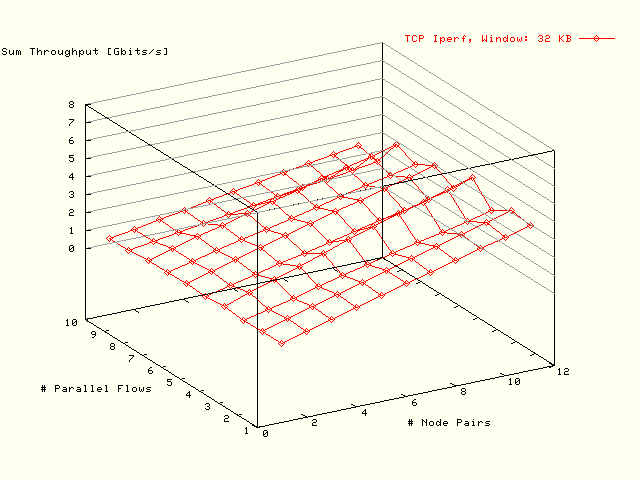

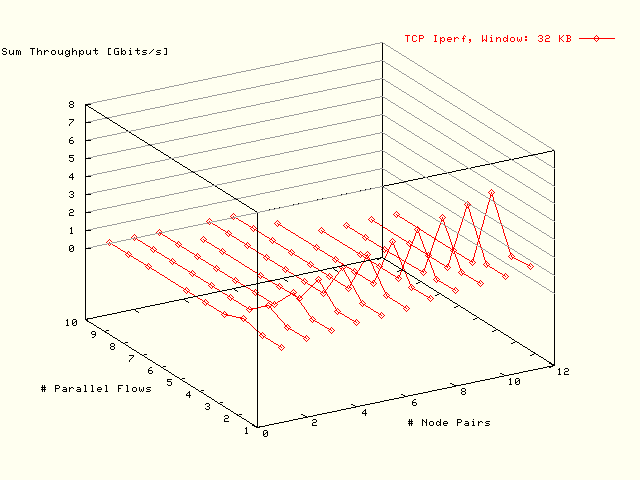

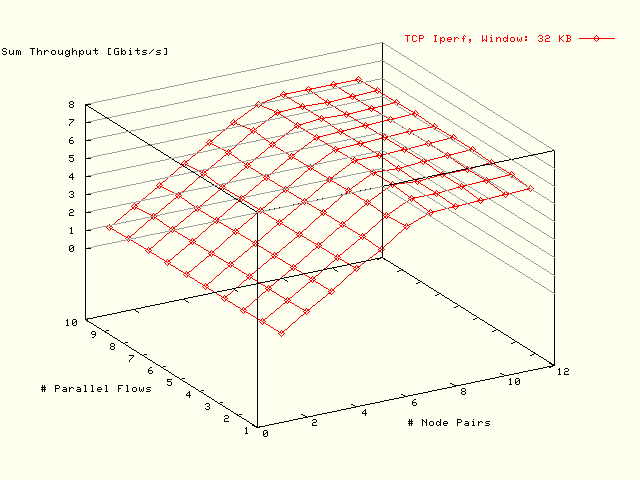

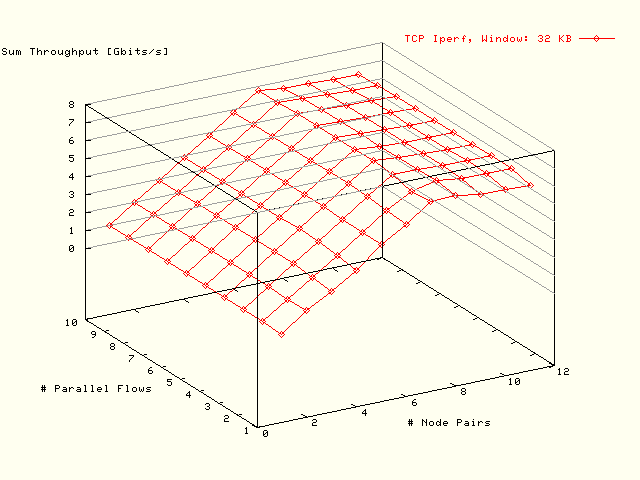

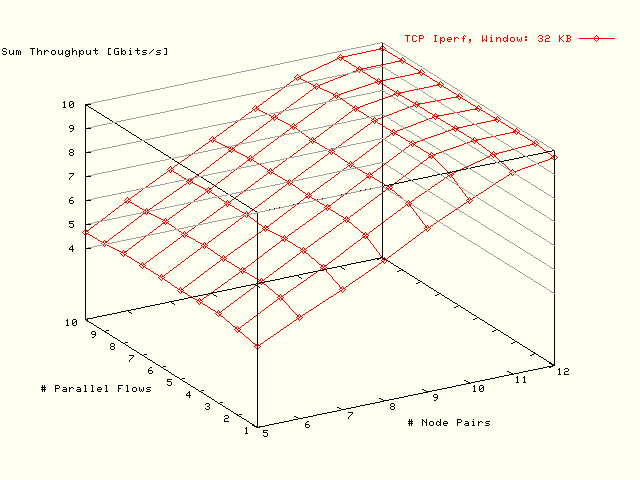

Sum TCP throughput values as a function of the

# node pairs and the # parallel flows per node pair. The sum of

the TCP window size, taken over the # parallel flows per node pair,

was 32 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at

the 6509 switch. The socket buffer resizing had been switched off in

the Web100 kernel. MTU:

1500 Bytes. |

| . |

|

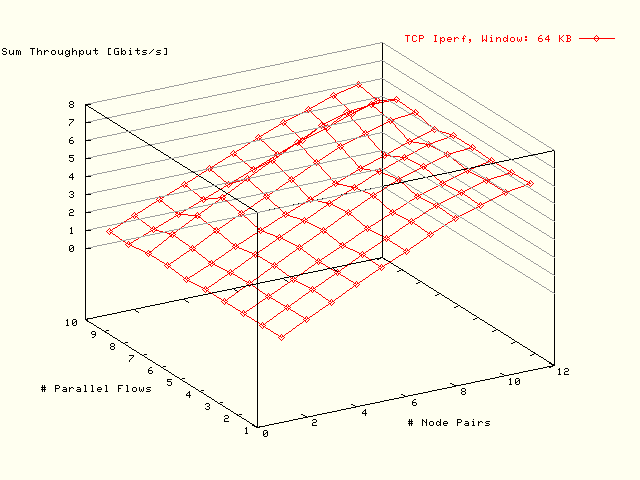

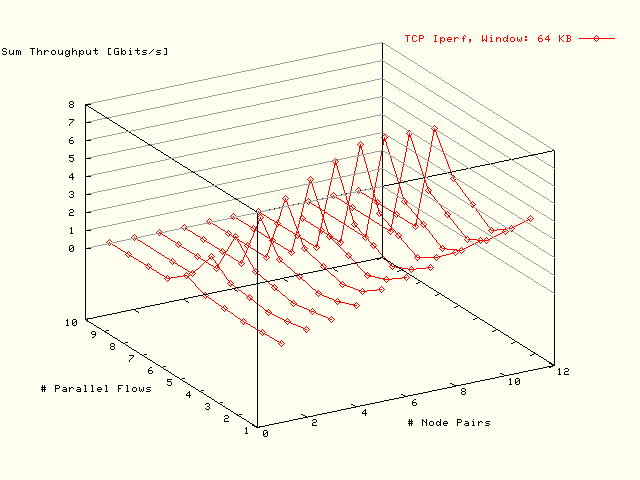

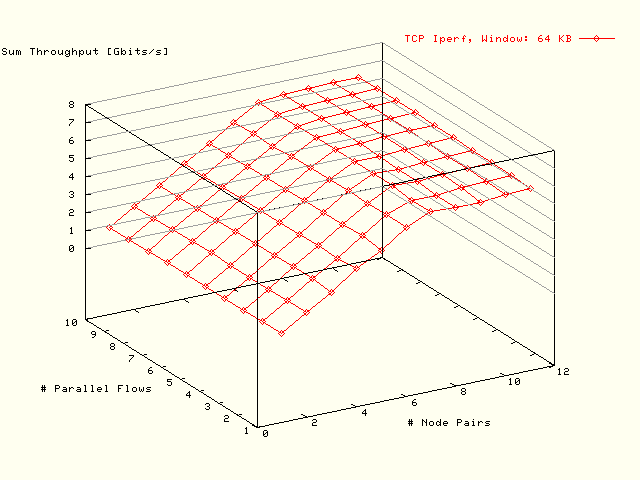

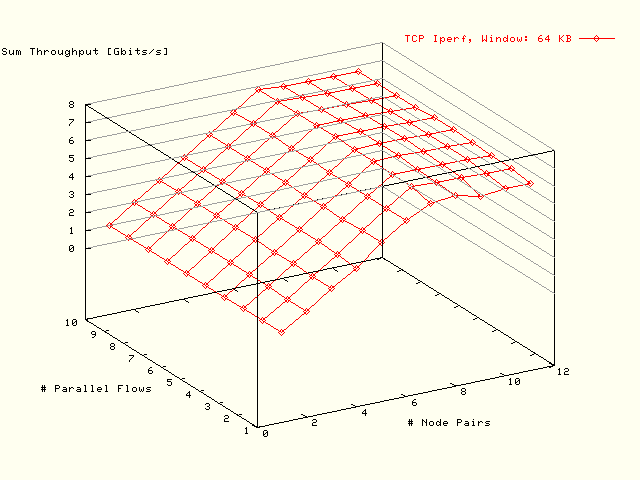

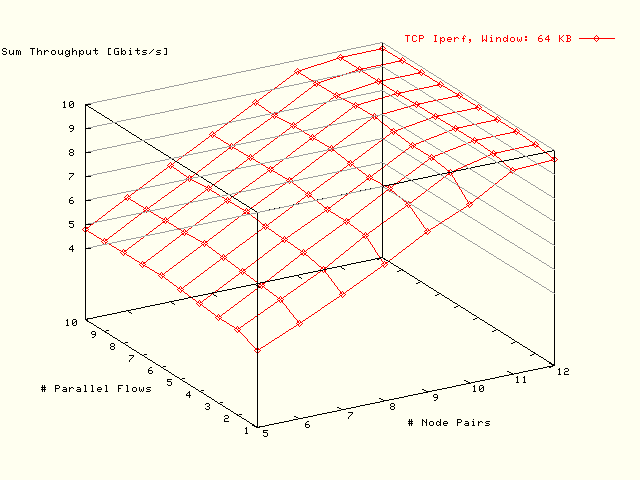

Sum TCP throughput values as a function of the

# node pairs and the # parallel flows per node pair. The sum of

the TCP window size, taken over the # parallel flows per node pair,

was 64 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at

the 6509 switch. The socket buffer resizing had been switched off in

the Web100 kernel. MTU:

1500 Bytes. |

| . |

|

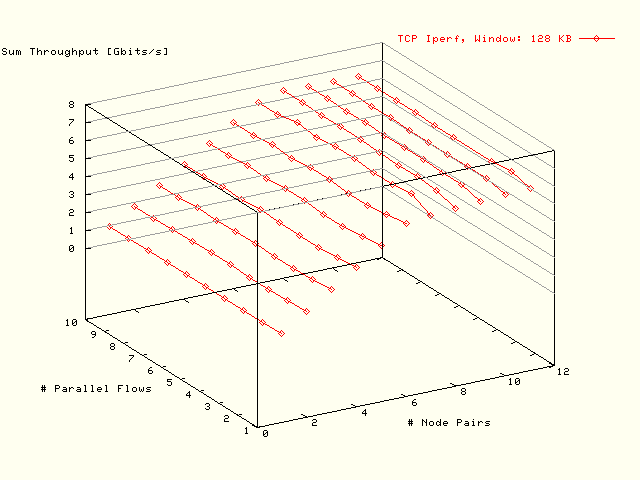

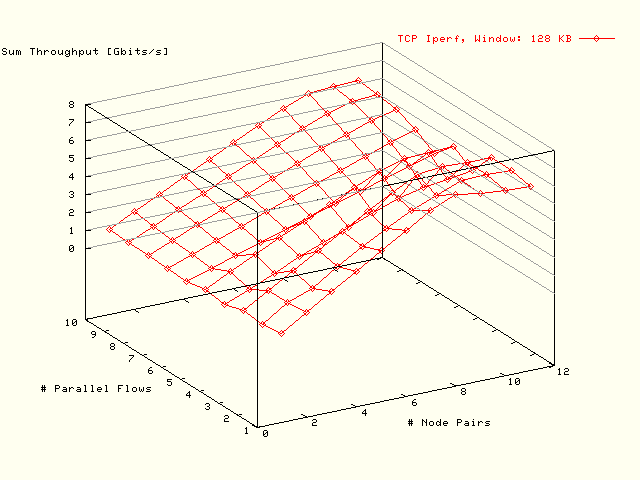

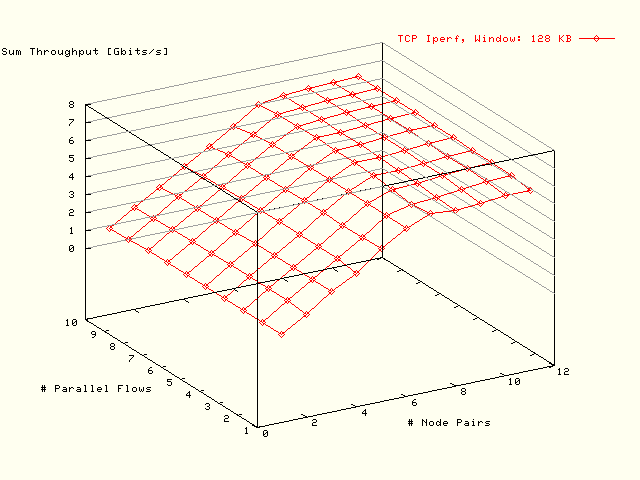

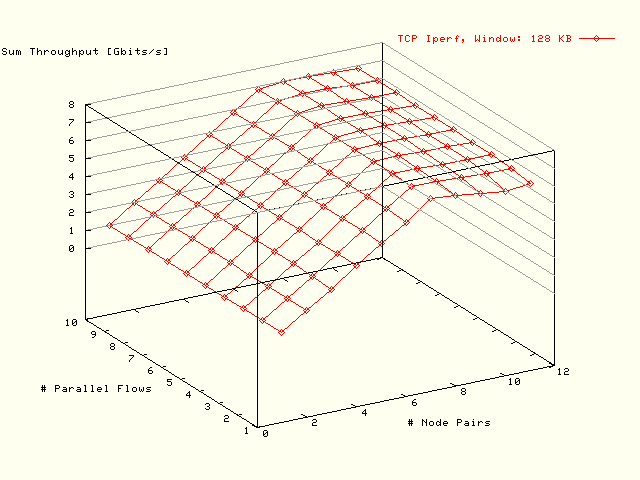

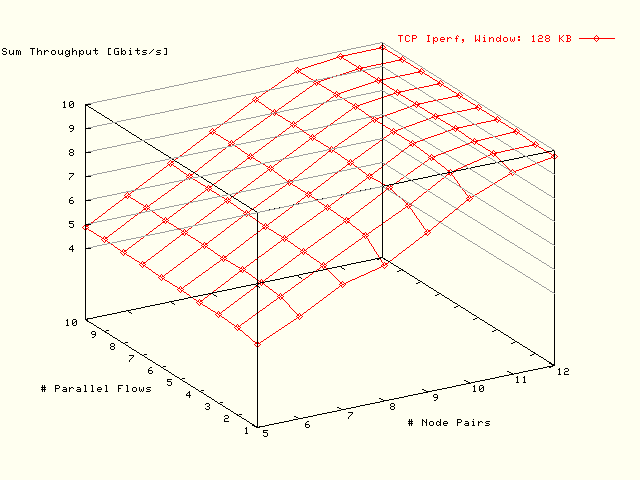

Sum TCP throughput values as a function of the

# node pairs and the # parallel flows per node pair. The sum of

the TCP window size, taken over the # parallel flows per node pair,

was 128 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at

the 6509 switch. The socket buffer resizing had been switched off in

the Web100 kernel. MTU:

1500 Bytes. |

| . |

|

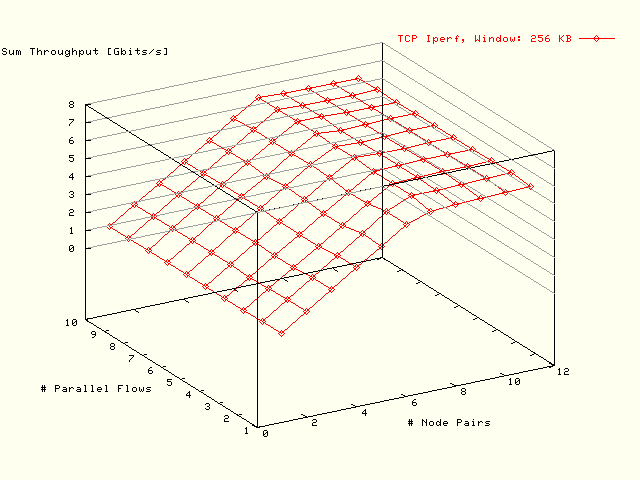

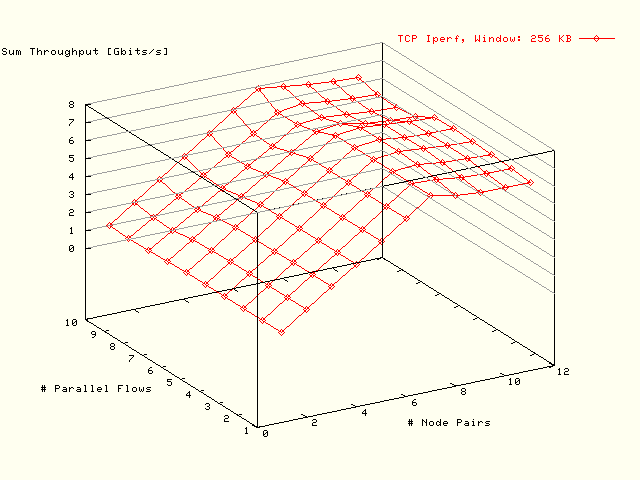

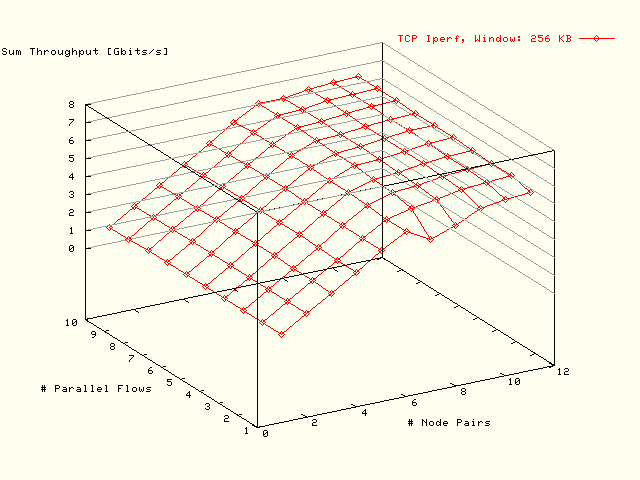

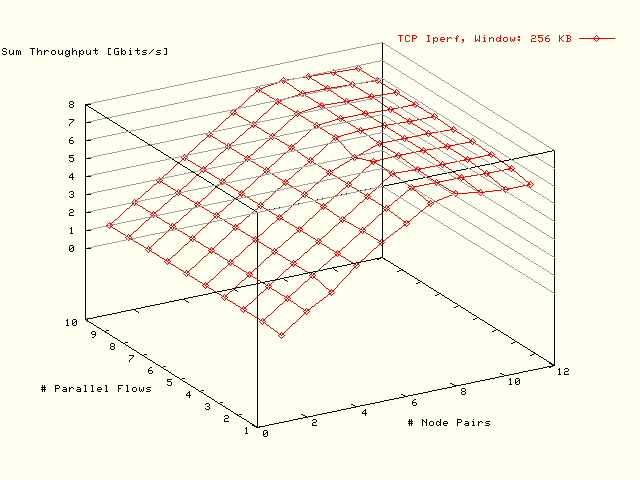

Sum TCP throughput values as a function of the

# node pairs and the # parallel flows per node pair. The sum of

the TCP window size, taken over the # parallel flows per node pair,

was 256 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at

the 6509 switch. The socket buffer resizing had been switched off in

the Web100 kernel. MTU:

1500 Bytes. |

A remarkable result of this test is the low bandwidth achieved with TCP windows ≤ 64 KBytes (see the figures for 32 and 64 KBytes above).
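A rough window-limited bound helps to put this in perspective. A TCP flow can have at most one window of unacknowledged data in flight per round trip. A node pair whose parallel flows share a summed window W therefore cannot exceed W/RTT, and N node pairs cannot exceed N·W/RTT. The round-trip time of the loop is not stated in this section, so the sketch below does not claim to explain the measured values. It only computes how small the RTT would have to be for a given summed window to fill the 8 Gbits/s bottleneck with 11 node pairs. The function names are illustrative.

```python
def window_limited_bps(window_bytes_per_pair: int, node_pairs: int, rtt_s: float) -> float:
    """Upper bound on aggregate TCP throughput when each node pair's summed window is fixed."""
    return node_pairs * window_bytes_per_pair * 8 / rtt_s


def max_rtt_s(window_bytes_per_pair: int, node_pairs: int, target_bps: float) -> float:
    """Largest RTT at which the summed windows can still sustain target_bps."""
    return node_pairs * window_bytes_per_pair * 8 / target_bps


if __name__ == "__main__":
    # 11 node pairs trying to fill the 8 Gbits/s bottleneck of the 6509.
    for window_kb in (32, 64, 128, 256):
        rtt = max_rtt_s(window_kb * 1024, 11, 8e9)
        print(f"{window_kb:>3} KBytes: RTT must be <= {rtt * 1e3:.3f} ms")
    # Expected:
    #  32 KBytes: RTT must be <= 0.360 ms
    #  64 KBytes: RTT must be <= 0.721 ms
    # 128 KBytes: RTT must be <= 1.442 ms
    # 256 KBytes: RTT must be <= 2.884 ms
```

With buffer resizing switched off, the socket buffer caps the window. The bound above then applies directly, and fewer node pairs tighten it proportionally.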

Bottleneck 6509: 8 Gbits/s; No Web100 Buffer Resize; MTU: 8176 Bytes

In the figures below, the results of tests equivalent to those in the previous subsection are presented, but here an MTU of 8176 Bytes has been used.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 32 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The socket buffer resizing had been switched off in the Web100 kernel. MTU: 8176 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 64 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The socket buffer resizing had been switched off in the Web100 kernel. MTU: 8176 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 128 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The socket buffer resizing had been switched off in the Web100 kernel. MTU: 8176 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 256 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The socket buffer resizing had been switched off in the Web100 kernel. MTU: 8176 Bytes.

From the figures above, it follows that there is no improvement compared with the experiments in the previous subsection, where an MTU of 1500 Bytes was used.

To check whether these low throughput values have been caused by the Web100 kernel parameter settings for the receive and send buffer sizes, the TCP tests are repeated in the next subsection with the default Linux kernel buffer resizing settings.

Bottleneck 6509: 8 Gbits/s; Default Buffer Resize; MTU: 1500 Bytes

In this subsection, results equivalent to those of the previous two subsections (without buffer resizing, with MTU values of 1500 and 8176 Bytes) are presented, but here the default Linux (and Web100) buffer resizing mechanisms are used with an MTU of 1500 Bytes. In the figures below, the TCP throughput values are plotted as a function of the number of node pairs and of the number of parallel flows per node pair, for window sizes of 32, 64, 128 and 256 KBytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 32 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 1500 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 64 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 1500 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 128 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 1500 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 256 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 1500 Bytes.

When these results are compared with those of the previous two subsections, where socket buffer resizing had been switched off for MTU values of 1500 and 8176 Bytes, it follows that switching off the buffer resizing turned out to be a disadvantage for these short round-trip times, although it might be beneficial for longer round-trip times. In the remainder of these tests, the default buffer resizing is used.

In the next subsection, the MTU is set to 8192 Bytes to check whether that leads to a higher throughput.

Bottleneck 6509: 8 Gbits/s; Default Buffer Resize; MTU: 8192 Bytes

In this subsection, results equivalent to those of the previous subsection are presented, but here with an MTU of 8192 Bytes. In the figures below, the TCP throughput values are plotted as a function of the number of node pairs and of the number of parallel flows per node pair, for window sizes of 32, 64, 128 and 256 KBytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 32 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 64 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 128 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 256 KBytes. There was an intrinsic bottleneck of 8 Gbits/s at the 6509 switch. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

When these results are compared with those of the previous subsection, where an MTU of 1500 Bytes was used, it follows that the larger MTU used here does indeed lead to a slight performance improvement.
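A back-of-the-envelope calculation illustrates why a larger MTU can help. Every Ethernet frame carries fixed overhead on the wire and costs the hosts per-packet processing. The sketch makes two assumptions. First, 38 bytes of Ethernet overhead per frame: preamble and start-of-frame delimiter 8, header 14, FCS 4, inter-frame gap 12, no VLAN tag. Second, 52 bytes of IPv4 and TCP headers: 20 + 20, plus the 12-byte timestamp option. It only counts header overhead and packet rate, not host CPU behaviour, so it is an illustration and not an explanation of the measured improvement.

```python
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, header, FCS, inter-frame gap (no VLAN tag)
TCP_IP_HEADERS = 20 + 20 + 12        # IPv4, TCP, TCP timestamp option (assumed enabled)


def payload_fraction(mtu: int) -> float:
    """Fraction of the line rate left for TCP payload with full-size frames."""
    return (mtu - TCP_IP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


def frames_per_second(mtu: int, line_rate_bps: float = 10e9) -> float:
    """Full-size frames per second needed to fill the line rate."""
    return line_rate_bps / ((mtu + ETHERNET_OVERHEAD) * 8)


if __name__ == "__main__":
    for mtu in (1500, 8176, 8192):
        print(f"MTU {mtu}: payload {payload_fraction(mtu):.1%}, "
              f"{frames_per_second(mtu):,.0f} frames/s at 10 Gbits/s")
    # MTU 1500: payload 94.1%, 812,744 frames/s at 10 Gbits/s
    # MTU 8176: payload 98.9%, 152,179 frames/s at 10 Gbits/s
    # MTU 8192: payload 98.9%, 151,883 frames/s at 10 Gbits/s
```

The wire efficiency gains only about five percentage points. The number of packets the hosts must handle to fill the link, however, drops by roughly a factor of five.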

No Bottleneck 6509; Default Buffer Resize; MTU: 8192 Bytes

In this subsection, the results are shown of TCP tests that were executed after the intrinsic bottleneck of the Cisco 6509 had been removed by an upgrade, so that from the network point of view there was no longer any limitation to achieving the provided bandwidth of 10 Gbits/s.

As in the previous subsections, the TCP tests were executed as a function of the number of source - destination node pairs, the number of parallel flows per node pair and the TCP window size, which was varied such that the sum of the TCP windows over the parallel flows per node pair was kept constant. In these tests, 5 to 12 node pairs were used (one extra node pair had become available compared with the earlier tests), with 1 to 10 parallel flows per node pair. The MTU was 8192 Bytes. In the figures below, the results are presented for window sizes of 32, 64, 128 and 256 KBytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 32 KBytes. The network equipment no longer contained any bottleneck relative to the provided bandwidth of 10 Gbits/s. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 64 KBytes. The network equipment no longer contained any bottleneck relative to the provided bandwidth of 10 Gbits/s. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

Sum TCP throughput values as a function of the number of node pairs and the number of parallel flows per node pair. The sum of the TCP window size, taken over the parallel flows per node pair, was 128 KBytes. The network equipment no longer contained any bottleneck relative to the provided bandwidth of 10 Gbits/s. The default socket buffer resizing mechanisms had been used. MTU: 8192 Bytes.

| . |

|

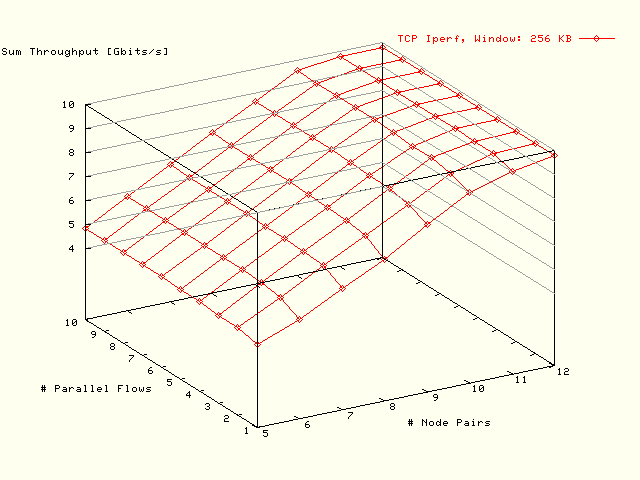

Sum TCP throughput values as a function of the

# node pairs and the # parallel flows per node pair. The sum of

the TCP window size, taken over the # parallel flows per node pair,

was 256 KBytes. There was no bottleneck in the network equipment

anymore in relation with the provided bandwidth of 10 Gbits/s. The

default socket buffer resizing mechanisms had been used. MTU:

8192 Bytes. |

From the figures above, it follows that the provided bandwidth could indeed be reached. That is also the case with 11 node pairs, the number of node pairs that were available before the upgrade of the 6509 switch. None of the network and host equipment seems to be a bottleneck in the topology used in these tests.

No Bottleneck 6509; Flows of 30 Min.

To be able to register the traffic reliably in the SNMP counters of the network equipment, a TCP test with flows lasting 30 min. has also been executed, after the upgrade of the 6509 switch. Twelve node pairs were used, with a single flow per node pair and a TCP window of 128 KBytes. The MTU was 8192 Bytes. The table below lists the bandwidth values of the individual flows and their sum.

| Flow [#] | Bandwidth [Mbits/s] | Flow [#] | Bandwidth [Mbits/s] |
|---|---|---|---|
| 1 | 789 | 7 | 846 |
| 2 | 713 | 8 | 802 |
| 3 | 841 | 9 | 843 |
| 4 | 856 | 10 | 851 |
| 5 | 843 | 11 | 825 |
| 6 | 765 | 12 | 758 |
| | | Sum | 9732 |

Bandwidth of the individual flows between 12 node pairs and their sum. The duration of the flows was 30 min. No bottlenecks were involved, so the provided bandwidth was 10 Gbits/s. MTU: 8192 Bytes.
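The sum in the table can be checked with a few lines of Python. The sketch also reports the mean, the slowest and fastest flow, and the sum as a fraction of the provided 10 Gbits/s. The values are copied from the table; the variable names are illustrative.

```python
# Per-flow bandwidth in Mbits/s from the table (flow number -> bandwidth).
FLOWS_MBPS = {1: 789, 2: 713, 3: 841, 4: 856, 5: 843, 6: 765,
              7: 846, 8: 802, 9: 843, 10: 851, 11: 825, 12: 758}
PROVIDED_MBPS = 10_000  # 10 Gbits/s

total_mbps = sum(FLOWS_MBPS.values())
mean_mbps = total_mbps / len(FLOWS_MBPS)
slowest = min(FLOWS_MBPS, key=FLOWS_MBPS.get)  # flow number with lowest bandwidth
fastest = max(FLOWS_MBPS, key=FLOWS_MBPS.get)  # flow number with highest bandwidth

print(f"sum {total_mbps} Mbits/s ({total_mbps / PROVIDED_MBPS:.1%} of 10 Gbits/s)")
print(f"mean {mean_mbps:.0f} Mbits/s, slowest flow {slowest} "
      f"({FLOWS_MBPS[slowest]}), fastest flow {fastest} ({FLOWS_MBPS[fastest]})")
# sum 9732 Mbits/s (97.3% of 10 Gbits/s)
# mean 811 Mbits/s, slowest flow 2 (713), fastest flow 4 (856)
```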

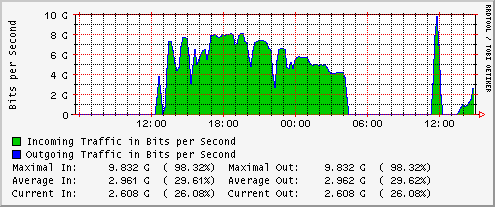

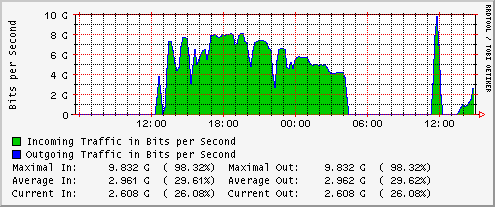

The corresponding SNMP registration from SURFnet, taken at the interface of the 6509 switch to which the Force10 switch was connected, is presented in the SNMP figure. Please note that the 30 min. TCP tests had been started at 11:28.

SNMP registration from SURFnet at the interface of the 6509 switch to which the Force10 switch was connected. It was taken during the 30 min. TCP tests whose results are listed in the table above. These tests had been started at 11:28.

The SNMP registration shows a maximum bandwidth of 9.8 Gbits/s, while the sum of the bandwidth values in the table is 9.7 Gbits/s (9732 Mbits/s). The two values are in good agreement.
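Traffic graphs like the SURFnet registration are usually derived from SNMP interface octet counters sampled at a fixed interval. The sketch below shows that calculation under two assumptions not stated in the text: 64-bit counters (`ifHCInOctets` / `ifHCOutOctets` from the IF-MIB) and a 300-second polling interval. It also shows why 64-bit counters matter at this speed: a 32-bit octet counter wraps in about 3.4 s at 10 Gbits/s. Each sample is an average over its polling interval, so a graph maximum and a 30-minute Iperf average need not match exactly.

```python
COUNTER64 = 2 ** 64  # modulus of an SNMP Counter64 such as ifHCInOctets


def rate_bps(prev_octets: int, curr_octets: int, interval_s: float,
             modulus: int = COUNTER64) -> float:
    """Average bit rate between two counter samples, tolerating one wrap."""
    delta = (curr_octets - prev_octets) % modulus
    return delta * 8 / interval_s


def wrap_time_s(counter_bits: int, rate: float) -> float:
    """Seconds until an octet counter of the given width wraps at `rate` bits/s."""
    return (2 ** counter_bits) * 8 / rate


if __name__ == "__main__":
    # Hypothetical samples 300 s apart: 367.5 GBytes transferred.
    print(rate_bps(1_000_000, 1_000_000 + 367_500_000_000, 300) / 1e9)  # 9.8
    print(f"{wrap_time_s(32, 10e9):.2f} s")  # 3.44 s
```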

Conclusions

From the TCP tests presented above, the following conclusions can be drawn:

- Switching off the default resizing of the send and receive buffer sizes in the Web100 kernel is not beneficial for small TCP window sizes (≤ 64 KBytes). In the remainder of the tests, the default resizing behaviour was used.
- In the situation with the 8 Gbits/s bottleneck of the 6509 switch, the maximum achieved throughput was 7 Gbits/s. This is not a bad result considering the mismatch in provided bandwidth between the Force10 switch and the 6509.
- After the upgrade of the 6509 switch, the provided bandwidth of 10 Gbits/s could be filled without problems, as follows both from the achieved throughput values and from the SNMP registrations. These TCP tests indicate that the connection between the Force10 and the 6509 does not seem to introduce problems.
- Using MTU sizes larger than the default 1500 Bytes is beneficial for throughput values approaching the provided bandwidth.