7.1. k-Means Clustering

k-Means clustering is a partional clustering approach in which each cluster is associated with a centroid \(\v\mu_j\) (center or prototype point). The number of clusters \(k\) is considered fixed and has to be chosen a priori. Each point \(\v x\ls i\) in the data set is then assigned to the cluster with the closest centroid.

Let \(c_i\) be the cluster assigned to point \(\v x\ls i\). Then the goal of k-means clustering is to minimize the following cost function:

Observe that the cluster labels \(c_i\) are discrete values. Gradient descent is therefore not a logical choice. The cluster centers \(\v\mu_j\) are continuous (\(n\) dimensional) variables. It can be shown that finding the optimal parameters \(c_i\) and \(\v\mu_j\) is an NP hard problem.

The k-means optimization problem is therefore done in a two step process. First we fix the cluster centers and find the \(c_i\) that minimize the cost. Evidently if we set:

the distance from \(\v x\ls i\) to the selected cluster center is minimal and doing that for all points \(\v x\ls i\) minimizes the cost.

Then given the classification we fix the \(c_i\) and calculate the optimal cluster centers \(\v\mu_j\). To do so we calculate the gradient:

Observe that this minimization can be solved analytically. We set the gradient (with respect to \(\v\mu_j\) equal to zero and solve for \(\v\mu_j\):

Note that the summation in the right hand side just calculates the number of points in cluster \(j\). Let’s call that number \(m_j\) then we arrive at:

So given a fixed set of clusters the optimal cluster center \(\v\mu_j\) is the mean of all points assigned to that cluster.

The k-means algorithm consists of selecting \(k\) cluster centers at random and then alternating these two optimization steps:

def kmeans_clustering(data, n_clusters=3, max_iterations=100):

"""k-means clustering"""

clusters = select_initial_clusters(data, n_clusters)

for i in range(max_iterations):

labels = assign_labels(data, clusters, n_clusters)

new_clusters = calculate_clusters(data, labels, n_clusters)

if test_if_done(data, labels, clusters, new_clusters, n_clusters):

break

clusters = new_clusters

print("iterations: ", i)

return (labels, clusters)

In an exercise you have to implement four functions:

select_initial_clusters(data, n_clusters)

this function should return a (n_clusters, n) shaped array of cluster

centers, each row a cluster center. A simple way to do that is to

randomly select n_clusters points from the data set.

assign_labels(data, clusters, n_clusters)

this function should return a (m,) shaped array of label values

(from 0 to n_clusters) indicating which cluster center is

closest. This function implements Eq.7.1.1.

calculate_clusters(data, labels, n_clusters)

this function should return a (n_clusters, n) shaped array of

cluster centers. This function implements Eq.7.1.2.

Finally you have to implement the function

test_if_done(data, labels, clusters, new_clusters, n_clusters)

As a default implementation you could just check whether the new clusters are close to the previous clusters.

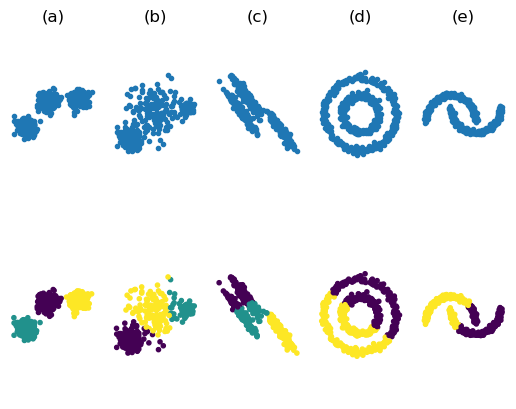

In Fig. 7.1.1 the 5 data sets from the introduction are shown as clustered by the k-means algorithm.

Fig. 7.1.1 k-Means Clustering. For the first three datasets \(k=3\) is selected and for the last two datasets we have set \(k=2\).

From the figure it is clear that k-means clustering only really works for the first data set. In (a) clusters of spherical shape of about the same size are shown. In (b) we do have spherical clusters but of varying sizes, k-means tend to assign points from the larger clusters to smaller clusters. In (c) where we see ellipsoid clusters, a situation that k-means cannot deal with. For the non ellipsoid clusters in (d) and (e) k-means clustering is totally insufficient.