Monday, June 26th, 2006

In the beginning...

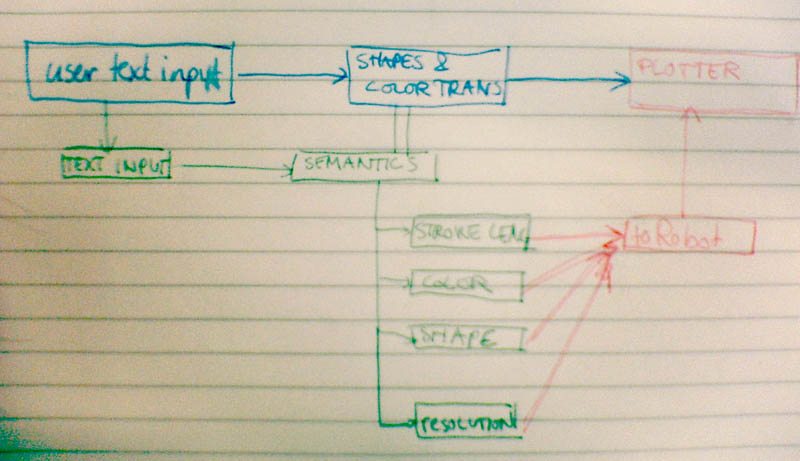

First of all, we needed to define our concept. We wanted the robot to do something communicative with its users, something beyond merely acting on clear-cut commands. Giving the robot some kind of personality would be too difficult for one week, but we were set on the robot drawing or painting something robot-oriented. We finally decided to have the robot respond to text-driven user input with a drawing. When a user 'talked' to the robot by typing into a console, the program would semantically interpret the text and turn it into something that visually corresponded to its tone.

To back up the choices we would make in shapes and colors, we decided to do some literature research into color psychology and into which colors are linked to which emotions. Most of the literature we dug through contained quite a few rather esoteric and arbitrary elements, but the sources seemed to agree on some basic points: yellow is happy, black is sad, and red is passionate. One of the papers we read was The color of emotion by M. Egan and R. D'Andrade (1974).
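To make the idea concrete, here is a minimal sketch of how typed text could be reduced to one of those three color associations. It is not the code we ended up with: the class name `ToneSketch`, the `PaintColor` enum, the keyword list, and the gray fallback are all assumptions made up for illustration. Only the yellow/black/red pairings come from the literature mentioned above.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical sketch: maps typed text to a paint color via a tiny keyword lexicon.
final class ToneSketch {

    // Illustrative palette; GRAY is an assumed fallback for text with no known tone.
    enum PaintColor { YELLOW, BLACK, RED, GRAY }

    // Assumed keyword lexicon (not taken from any paper): word -> emotion.
    private static final Map<String, String> KEYWORD_TO_EMOTION = new LinkedHashMap<>();
    // The three associations the literature seemed to agree on: emotion -> color.
    private static final Map<String, PaintColor> EMOTION_TO_COLOR = new LinkedHashMap<>();

    static {
        KEYWORD_TO_EMOTION.put("happy", "happy");
        KEYWORD_TO_EMOTION.put("glad", "happy");
        KEYWORD_TO_EMOTION.put("sad", "sad");
        KEYWORD_TO_EMOTION.put("lonely", "sad");
        KEYWORD_TO_EMOTION.put("love", "passionate");
        KEYWORD_TO_EMOTION.put("angry", "passionate");

        EMOTION_TO_COLOR.put("happy", PaintColor.YELLOW);
        EMOTION_TO_COLOR.put("sad", PaintColor.BLACK);
        EMOTION_TO_COLOR.put("passionate", PaintColor.RED);
    }

    // Returns the emotion whose keywords occur most often, or null if none match.
    // Ties go to the emotion that appeared first in the text.
    static String dominantEmotion(String text) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String word : text.toLowerCase(Locale.ROOT).split("[^a-z]+")) {
            String emotion = KEYWORD_TO_EMOTION.get(word);
            if (emotion != null) {
                counts.merge(emotion, 1, Integer::sum);
            }
        }
        String best = null;
        int bestCount = 0;
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            if (entry.getValue() > bestCount) {
                best = entry.getKey();
                bestCount = entry.getValue();
            }
        }
        return best;
    }

    // Picks the color for the dominant emotion, or GRAY when no keyword matched.
    static PaintColor colorFor(String text) {
        String emotion = dominantEmotion(text);
        return emotion == null ? PaintColor.GRAY : EMOTION_TO_COLOR.get(emotion);
    }

    public static void main(String[] args) {
        System.out.println(colorFor("I am so happy today")); // prints YELLOW
    }
}
```

Counting keywords is about the crudest form of 'semantic interpretation' imaginable, but it is enough to try out color choices before anything smarter is in place.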

After looking into the basis of what would become our semantic analysis, we were free to dig into the source code left to us by Bram and Folkert. Their Java skills were obviously much better than ours, and we were happy that they had done so much work for us to build on.

Their code consists of three parts. One is the GUI in which users can draw figures for the robot to draw. Another is the part that actually translates the images into robot moves. Between the GUI and the translator sits a class that keeps track of the canvas and of what happens on it.
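The layering described above can be pictured roughly as follows. This is a hedged sketch and not Bram and Folkert's actual code: the names `PipelineSketch`, `Stroke`, `CanvasRecorder` and `MoveTranslator`, as well as the `PEN_UP` / `PEN_DOWN` / `MOVE_TO` strings, are invented here and are not the UMI-RTX command set. The GUI is left out; anything that produces strokes can feed the recorder.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch of the three-part layering: front end -> recorder -> translator.
final class PipelineSketch {

    // A straight line on the canvas, in canvas coordinates.
    static final class Stroke {
        final int x1, y1, x2, y2;

        Stroke(int x1, int y1, int x2, int y2) {
            this.x1 = x1;
            this.y1 = y1;
            this.x2 = x2;
            this.y2 = y2;
        }
    }

    // Middle layer: keeps track of what has been drawn on the canvas.
    static final class CanvasRecorder {
        private final List<Stroke> strokes = new ArrayList<>();

        void record(Stroke stroke) {
            strokes.add(stroke);
        }

        List<Stroke> strokes() {
            return Collections.unmodifiableList(strokes);
        }
    }

    // Back end: turns recorded strokes into (made-up) pen moves.
    static final class MoveTranslator {
        List<String> translate(List<Stroke> strokes) {
            List<String> moves = new ArrayList<>();
            for (Stroke s : strokes) {
                moves.add("PEN_UP");                       // travel without drawing
                moves.add("MOVE_TO " + s.x1 + " " + s.y1);
                moves.add("PEN_DOWN");                     // draw the stroke itself
                moves.add("MOVE_TO " + s.x2 + " " + s.y2);
            }
            moves.add("PEN_UP");                           // always finish with the pen lifted
            return moves;
        }
    }

    public static void main(String[] args) {
        CanvasRecorder canvas = new CanvasRecorder();
        canvas.record(new Stroke(0, 0, 10, 0));
        for (String move : new MoveTranslator().translate(canvas.strokes())) {
            System.out.println(move);
        }
    }
}
```

Because the translator only ever sees what the recorder holds, a drawing produced by a program goes through exactly the same path as one drawn by hand in the GUI.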

The plan:

By four o'clock, we understood the larger part of the old code. We made a start on building a 'programming interface' for recording new text-to-visuals code. The old GUI turned out to be able to display our generated graphics, which made us less dependent on the UMI-RTX robot for testing. After some more coding after dinner, we called it a day, knowing that the concept could indeed be carried out within a reasonable time.