Wishlist

- Connection to UsarCommander

- ROS-nodes working for platform, arm, laser scanner and ins.

- Connection to playchess

December 22, 2022

- The LoCoBot is a low-cost mobile manipulator (pre-built: 4548 euro). Could be a possibility for the Alpha-Zeta project.

- It uses a RealSense D435 camera and an RPLIDAR A2M8 laser scanner.

November 30, 2022

- Found a Logitech QuickCam Orbit, which still works quite well. The transparent cover is gone. Looking for software to control the tilt.

- This Ask Ubuntu post suggests using uvcdynctrl.

- The Orbit is not visible in WSL.

- For Windows I installed the Logitech Webcam Software (LWS) v2.8.0, which should also work for Windows 10.

- During installation, the Orbit moves. Controls don't work yet, but the installation also asked for a reboot.

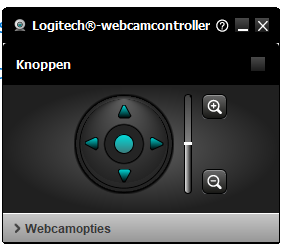

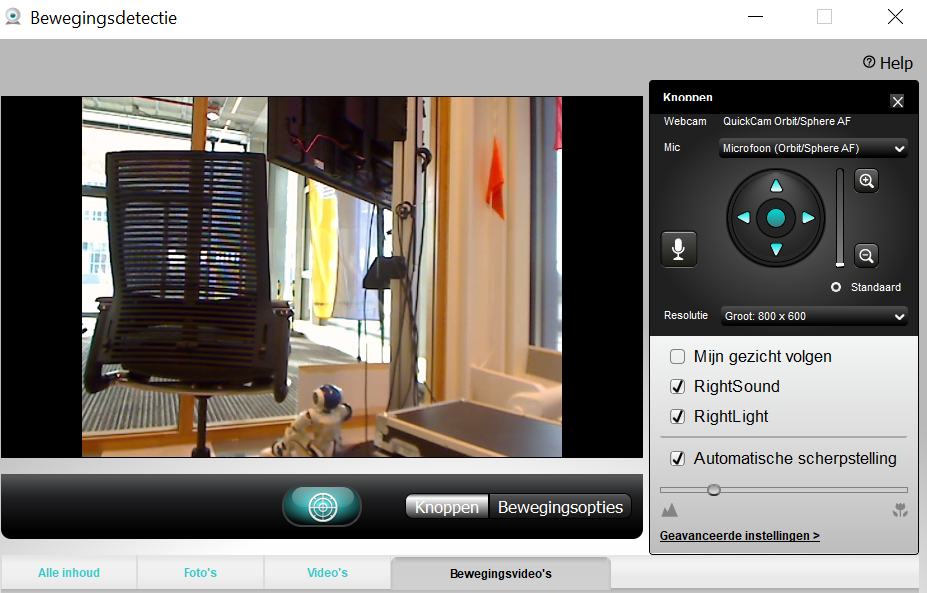

- This is the control of LWS:

- That works. The buttons are also embedded in motion detection software:

- Participated in a webinar on Isaac ROS DNN Inference, which was demonstrated with YOLOv5.

- The results were visualised with Foxglove.

- The ROS pipeline they showed on slide 12: image_raw sensor_msgs are converted to tensor_pub by an isaac_ros_dnn_encoder and sent to the YOLOv5 network; the tensor_sub output is converted back by a decoder and sent to rqt for visualisation.

November 8, 2022

- Should look at the github code of this Nvidia blog

- A model of the IRL would be nice. Not in Grabcad or 3dmdb yet. Could ask Joey to make such a model.

November 3, 2022

- This 'commercial' blog from Zivid has a nice overview of robotic piece picking challenges.

October 25, 2022

- Nvidia announced the Nova Orin as new reference platform for Q2 2023. Note that this robot includes two Jetson AGX Orin boards, so I expect that this will not be a cheap system.

October 3, 2022

- Teams can still register for Core OpenCV competition until October 31.

September 27, 2022

- Checked if the urg_lidar_serial.launch works with the /dev/ttyUSB0, now that I have the USB-serial cable with me.

- Received an initial invalid response, but after that both getID and urg_node work. At least it shows up in the rostopic list.

- Will try to reproduce the robostackenv on 18.04, to see if rviz runs without problems there.

- The command roslaunch urg_node urg_lidar.launch now also works when connected via the micro-USB and /dev/ttyACM0

- Installing RoboStack on the Ubuntu 18.04 machine nb-ros.

- First installed mambaforge, but conda activate robostackenv fails (also after restarting shell).

- A reboot solved this issue. Could continue with creating the robostackenv with mamba.

- Directly after creating the robostackenv I run rosrun rviz rviz, which gives the same error as this environment in Ubuntu 22.04:

/home/arnoud/mambaforge/envs/robostackenv/lib/rviz/rviz: symbol lookup error: /home/arnoud/mambaforge/envs/robostackenv/lib/rviz/../librviz.so: undefined symbol: _ZTIN4YAML13BadConversionE

- Looked at my nb-dual. The librviz.so has a different size. Copied the mamba version to tmp and replaced it with the librviz.so from nb-dual.

- Now rosrun rviz rviz fails on missing libboost_filesystem.so.1.71.0. Checking with ldd tmp/librviz.so | grep libboost showed that the mamba version used v1.74.0.

- Copied v1.71.0 to the mamba environment. Now libyaml-cpp.so.6 is needed (libyaml-cpp.so.7 is present in the mamba environment). Also copied so.6 to mamba. Now rosrun rviz rviz starts, but gives a core dump directly after Forcing OpenGl version 0.

- The segmentation fault is created in libboost_filesystem.so.1.71.0

- Moved the original librviz.so back to the mamba environment, and created sensible links to the libyaml-cpp.so versions. Still an undefined symbol, so left it in original state (pointing to libyaml-cpp.so.0.7.0)

- Submitted an issue on this subject.
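The library mismatches found with ldd in this entry (boost 1.74.0 in the mamba environment versus the 1.71.0 that the copied librviz.so expects) can be listed in one pass. A minimal sketch, assuming the usual `name => path (address)` layout of ldd output; the sample text, the `/opt/env` path and the helper names `parse_ldd` and `missing_libraries` are made up for illustration and are not taken from the actual machine:

```python
import re

# One ldd line looks like "libfoo.so.1 => /path/libfoo.so.1 (0x...)" or "libfoo.so.1 => not found".
LDD_LINE = re.compile(r"^\s*(?P<name>\S+)\s+=>\s+(?P<target>not found|\S+)")


def parse_ldd(output):
    """Map each library name in ldd output to its resolved path (None if not found)."""
    libs = {}
    for line in output.splitlines():
        match = LDD_LINE.match(line)
        if match:
            target = match.group("target")
            libs[match.group("name")] = None if target == "not found" else target
    return libs


def missing_libraries(output):
    """Return the names of the libraries that the loader could not resolve."""
    return [name for name, path in parse_ldd(output).items() if path is None]


# Hypothetical sample, only shaped like real ldd output.
SAMPLE = """\
	linux-vdso.so.1 (0x00007ffc0b5f2000)
	libboost_filesystem.so.1.71.0 => not found
	libyaml-cpp.so.0.7 => /opt/env/lib/libyaml-cpp.so.0.7 (0x00007f1a2c000000)
"""

if __name__ == "__main__":
    print(missing_libraries(SAMPLE))
```

On a real system the text would come from something like `subprocess.run(["ldd", path], capture_output=True, text=True).stdout`.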

September 25, 2022

- Continued with installation of Hokuyo URG04LX description on top of RoboStack

- All prerequisites were already installed, there was only no package available for ros-noetic-urg-node.

- Trying to install the urg_node from source.

- Note that there are several branches for the urg-node, but no ros-noetic branch. The default (and quite recent) branch is kinetic, so stayed in the main branch.

- Made a new ws in ~/mambaforge/catkin_ws. Did catkin init, followed by catkin build. It fails on missing the laser_proc package.

- Unfortunately, mamba install ros-noetic-laser-proc also fails, with: nothing provides requested ros-noetic-laser-proc

- Also cloned laser_proc. Also this package has no noetic-branch, the default one is melodic.

- This package builds; the urg_node build now stops on the missing package urg_c.

- This package has two branches (ros1 and ros2), with the ros1 master as default. With those three packages built from source I can continue with the installation of Hokuyo URG04LX description.

- Everything works more or less fine, only the simple command rosrun rviz rviz gives already the error:

~/mambaforge/envs/robostackenv/lib/rviz/../librviz.so: undefined symbol: _ZTIN4YAML13BadConversionE.

- This post suggests that this could be the wrong version of YAML. Installed yaml-cpp from source, which didn't help. Strangely enough, both ldd ../envs/robostackenv/lib/rviz/rviz | grep yaml and ldd ../envs/robostackenv/lib/librviz.so | grep yaml showed no yaml dependence.

- Another suggestion is that opencv is missing, although the RoboStack article claims that this environment is great for installing opencv. No clear guide how to install opencv in robostackenv, partly because it is already installed (import cv2 works).

- Looking into RoboStack's rviz.

- Building this package fails on missing opengl, so did mamba install mesa-libgl-devel-cos7-x86_64 mesa-dri-drivers-cos7-x86_64 libselinux-cos7-x86_64 libxdamage-cos7-x86_64 libxxf86vm-cos7-x86_64 libxext-cos7-x86_64 xorg-libxfixes as suggested in robostack installation guide.

- Yet, now the build fails on rviz/ogre_helpers/

- Looked into list of RoboStack packages. Enough rviz plugins, nothing about yaml or ogre.

- Looked with rostopic list to published topics, but roslaunch urg_node urg_lidar.launch dies with error_code=-5.

- Should try again, with serial to usb.
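The undefined symbol that rviz reports in this entry is a mangled C++ name. Below is a small sketch of how the simple nested-name case of the Itanium C++ ABI mangling can be decoded by hand. `demangle_nested` is a hypothetical helper that only handles the `_ZTI`, `_ZTS` and `_ZTV` prefixes followed by `N<length><name>...E`, which is enough for this symbol; a tool such as c++filt covers the general case.

```python
# Special-name prefixes of the Itanium C++ ABI that this sketch understands.
SPECIAL = {
    "_ZTI": "typeinfo for ",
    "_ZTS": "typeinfo name for ",
    "_ZTV": "vtable for ",
}


def demangle_nested(symbol):
    """Decode symbols of the form <prefix>N<len><name><len><name>...E."""
    prefix, rest = symbol[:4], symbol[4:]
    if prefix not in SPECIAL or not (rest.startswith("N") and rest.endswith("E")):
        raise ValueError(f"unsupported symbol: {symbol}")
    body = rest[1:-1]  # strip the N ... E wrapper
    parts = []
    i = 0
    while i < len(body):
        j = i
        while j < len(body) and body[j].isdigit():
            j += 1
        if j == i:
            raise ValueError(f"expected a length at position {i} in {body}")
        length = int(body[i:j])
        parts.append(body[j:j + length])
        i = j + length
    return SPECIAL[prefix] + "::".join(parts)


if __name__ == "__main__":
    print(demangle_nested("_ZTIN4YAML13BadConversionE"))
```

The symbol decodes to the type information of `YAML::BadConversion`, an exception class of yaml-cpp, which fits the suspicion that librviz.so was built against a different yaml-cpp than the one present in the environment.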

September 24, 2022

- Continued with installation of RoboStack

- For mamba activate robostackenv you need to run mamba init, so for the moment used the suggested conda activate robostackenv

- From the options, I installed:

- mamba install compilers cmake pkg-config make ninja

- mamba install catkin_tools

- mamba install rosdep

- The last one (rosdep) was not needed, all requested packages were already installed.

September 23, 2022

- Looking if I can have a ROS Noetic environment on my Ubuntu 22.04 machine with RoboStack

- Not needed, I still have a /opt/ros/noetic/ installation (from the time that this was a Ubuntu 20-21 machine?)

- ROS Noetic should be used with Ubuntu Focal Fossa (20.04).

- Will first try to install my ros hokuyo urg04lx description natively, I could repeat the RoboStack experiment with Ros Melodic for Ubuntu 18.04 (Bionic Beaver).

- Yet, simply installing with sudo apt install ros-noetic-ros-base fails, so ROS Noetic and Ubuntu 22.04 (Jammy) are not friends (ros-answers suggests that it is only possible when you build from source).

- So back to RoboStack

- First instruction already fails, because I am missing conda. The suggestion is to use mambaforge, which installs ~/mambaforge in my home.

- In ~/mambaforge/bin I had to do ./conda init, which modified my ~/.bashrc. Also did the suggested ./conda config --set auto_activate_base false.

- Now the first RoboStack instruction works: conda install mamba -c conda-forge.

- Time to go to RoboCafe, will continue the next steps later.

September 20, 2022

- Used the images from URG Network tutorial to show the usage of the USB and RS232 connectors.

- Checked out the new github into ~/catkin_ws/src and tried to do a catkin build, but the build of all 18 packages is taking quite long (something with numpy?!).

- The build of the package minkindr_conversions fails on eigen_conversions, so installed sudo apt install ros-melodic-eigen-conversions.

- With eigen-conversions still numpy-eigen fails, so eigen_checks et al are abandoned.

- numpy-eigen fails on CMakeFiles/numpy_eigen.dir/src/autogen_module/import_float.cpp.o: file not recognized.

- Moved numpy_eigen, minkindr and voxblox to the not_building directory (the numpy_eigen github-page mentioned ros-noetic).

- Now all packages are built, yet nothing is done for ros_hokuyo_urg04lx_description.

- Copied the package.xml and CMakeLists.txt from dreamvu_pal_camera.

- The package.xml has too many dependencies. Removed all, except the one on std_msgs.

- Added an install command to the CMakeLists.txt as suggested in this post.

- After source ~/catkin_ws/devel/setup.bash the sequence roscd ros_hokuyo_urg04lx_description and roslaunch ros_hokuyo_urg04lx_description urg-04lx.launch works. Only one warning:

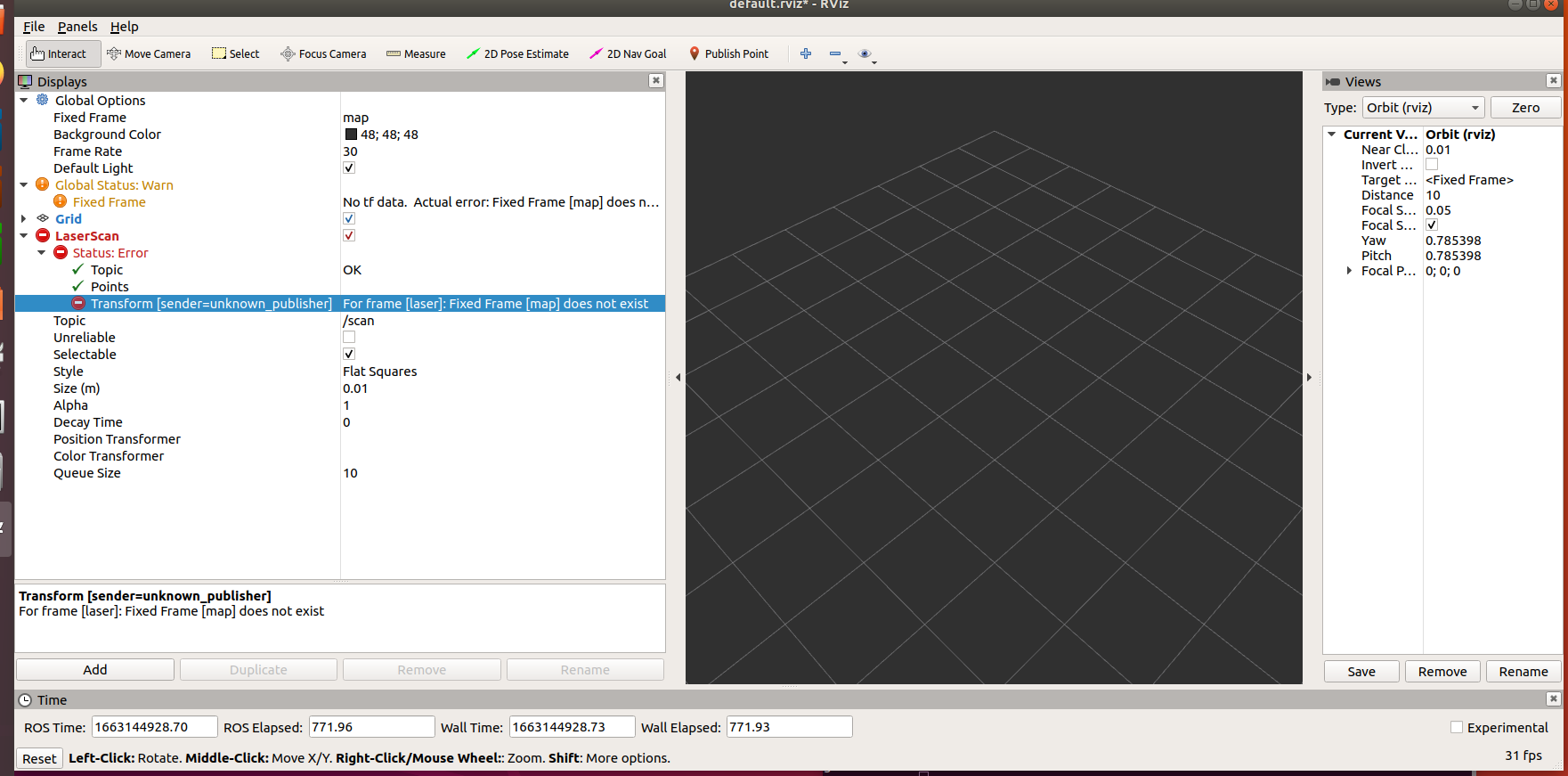

[ WARN] [1663674420.749324047]: The root link laser has an inertia specified in the URDF, but KDL does not support a root link with an inertia. As a workaround, you can add an extra dummy link to your URDF.

- When I start rosrun rviz rviz I get an error, but that is because by default the RobotModel is looking for robot_description. My package publishes /laser/robot_description. Still an error that there is no transform from [laser] to [map].

- Starting rosrun tf2_ros static_transform_publisher 0 0 0 0 0 0 map laser solves this.

- Moved to nb-ros and tried out if the URG04LX also works under ros-noetic.

- Checked with dmesg | tail, the serial converter is attached to /dev/ttyUSB0. Did sudo chmod o+rw /dev/ttyUSB0.

- The standard launch of urg_node tries to connect to /dev/ttyACM0, so committed urg_lidar_serial.launch.

- Could roslaunch ros_hokuyo_urg04lx_description/launch/urg_lidar_serial.launch (without even building the catkin package). Checked the output with rostopic list and rostopic echo /scan.

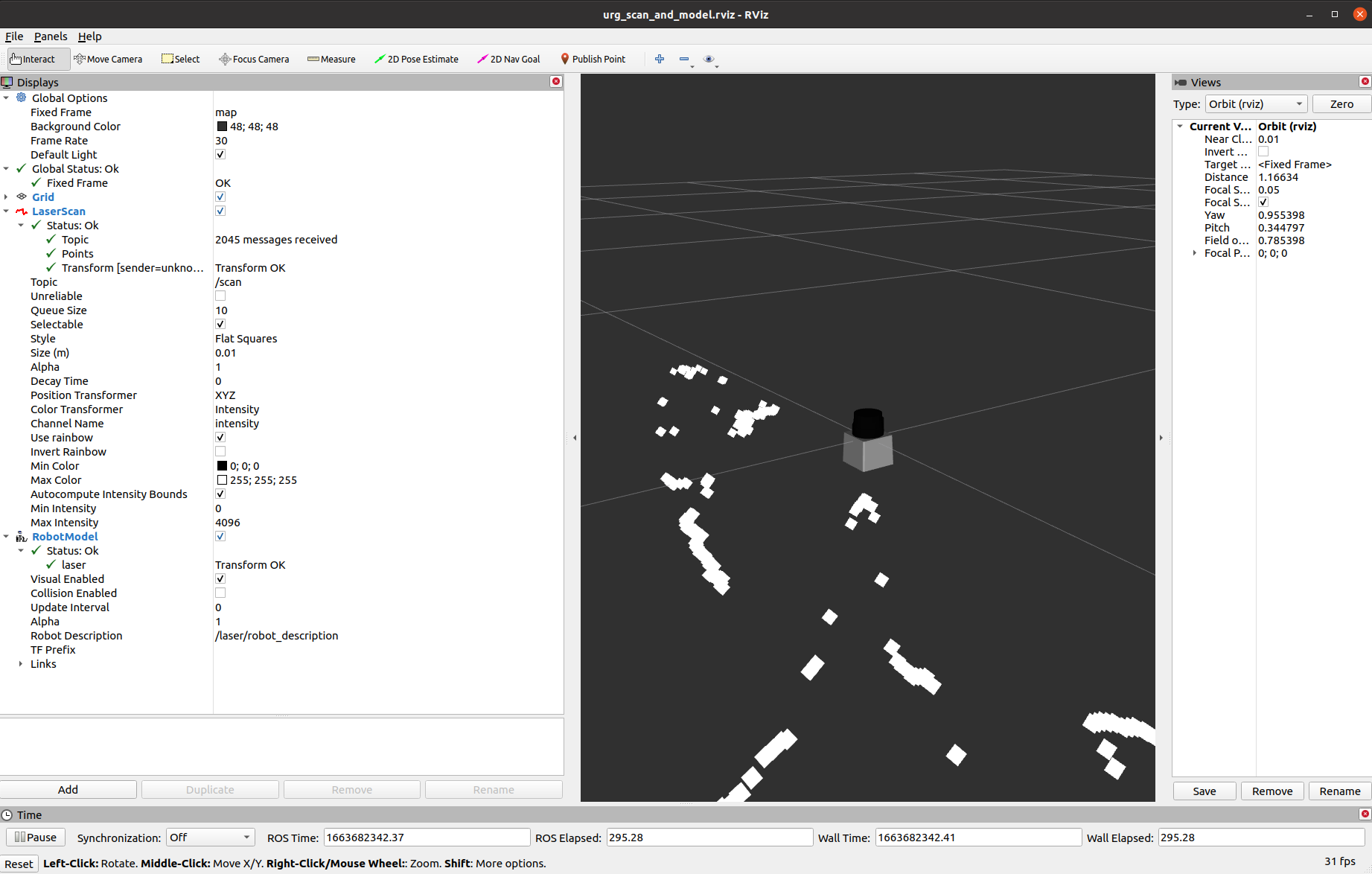

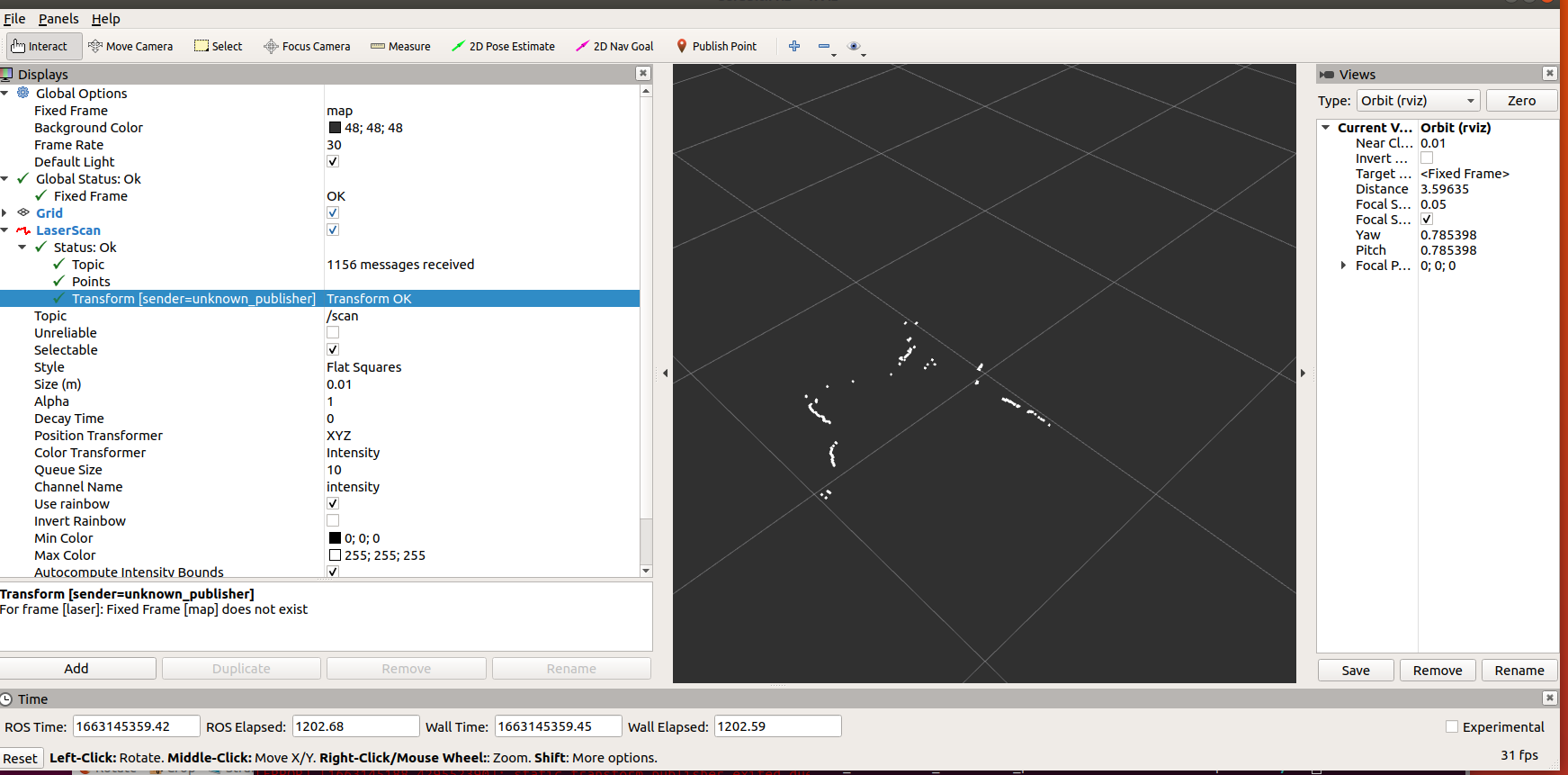

- Started rosrun rviz rviz and added in the Displays a LaserScan. Topic is directly good, but the transform of the map is missing.

- Starting rosrun tf2_ros static_transform_publisher 0 0 0 0 0 0 map laser solves this again.

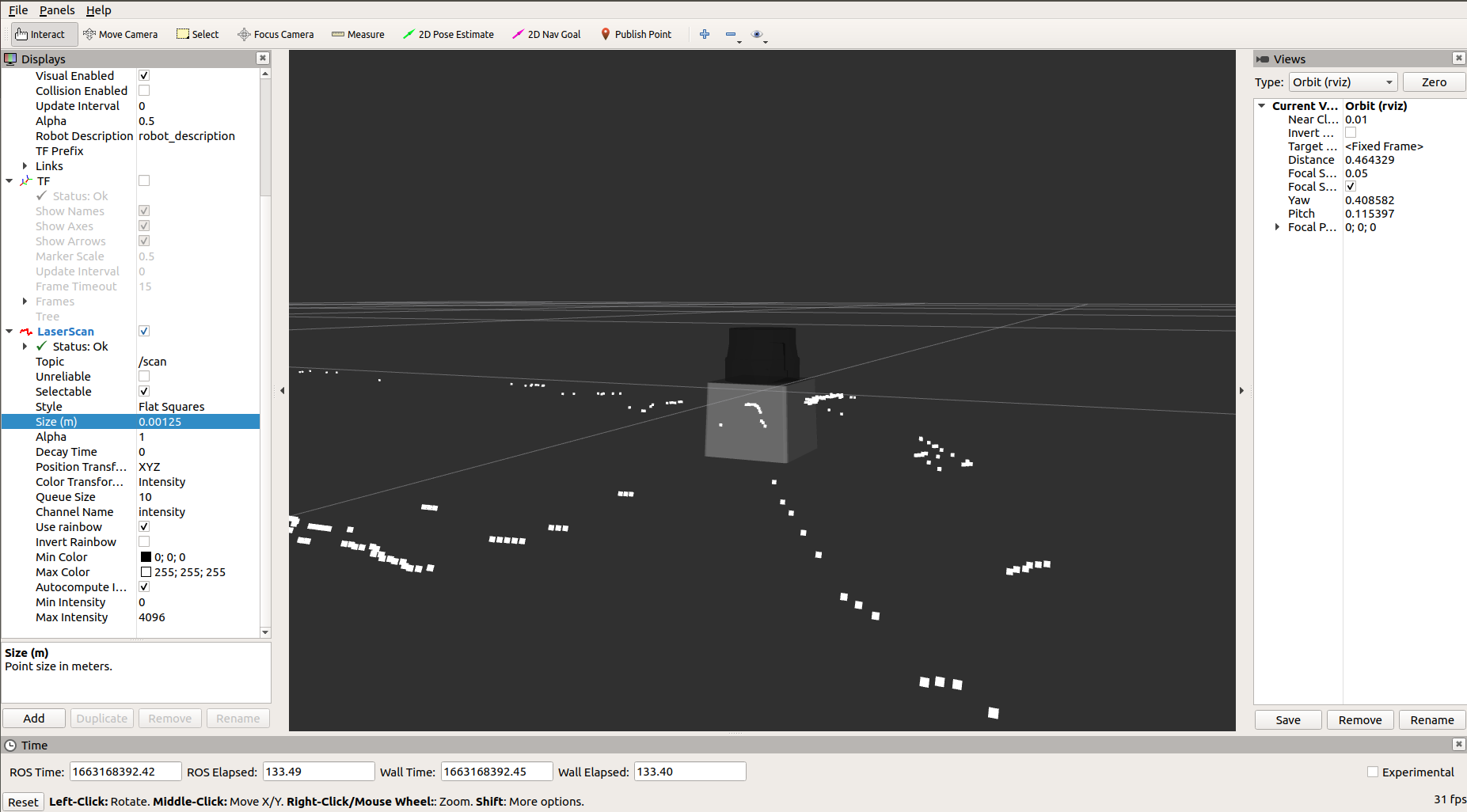

- Also roslaunch launch/urg-04lx.launch works without a catkin build. No mesh is displayed, which is not that strange because the urg-04lx.xacro loads it from package://urdf_tutorial/meshes/hokuyo.dae.

- Did a catkin_make in ~/catkin_ws. Had to move two vdb_mapping directories to ~/catkin_make/not_building. Now I could do roscd ros_hokuyo_urg04lx_description. Changed the xacro to load package://ros_hokuyo_urg04lx_description/meshes/hokuyo.dae, which gives in rviz the message Couldn't load mesh resource.

- That is logical, because rviz was started without source ~/catkin_ws/devel/setup.bash. With the catkin-development environment activated, the RobotModel is displayed in ros-noetic:
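The KDL warning earlier in this entry suggests adding an extra dummy link to the URDF. A minimal sketch of that workaround on a plain URDF string, using only the standard library. The sample URDF, the robot name `hokuyo`, the dummy link name `base_link` and the helper `add_dummy_root` are assumptions for illustration; the real description is a xacro file and may need the equivalent elements added by hand.

```python
import xml.etree.ElementTree as ET


def add_dummy_root(urdf_xml, root_link, dummy_link="base_link"):
    """Add an inertia-free dummy link and attach the old root link to it with a fixed joint."""
    robot = ET.fromstring(urdf_xml)
    # The dummy link carries no <inertial> element, so KDL accepts it as the tree root.
    ET.SubElement(robot, "link", {"name": dummy_link})
    joint = ET.SubElement(
        robot, "joint", {"name": f"{dummy_link}_to_{root_link}", "type": "fixed"}
    )
    ET.SubElement(joint, "parent", {"link": dummy_link})
    ET.SubElement(joint, "child", {"link": root_link})
    return ET.tostring(robot, encoding="unicode")


# Hypothetical minimal URDF with an inertia on the root link, as in the warning.
SAMPLE_URDF = """<robot name="hokuyo">
  <link name="laser">
    <inertial>
      <mass value="0.1"/>
      <inertia ixx="1e-4" ixy="0" ixz="0" iyy="1e-4" iyz="0" izz="1e-4"/>
    </inertial>
  </link>
</robot>"""

if __name__ == "__main__":
    print(add_dummy_root(SAMPLE_URDF, "laser"))
```

The fixed joint has no origin element, so the dummy link coincides with the laser frame and the geometry is unchanged.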


September 19, 2022

- Installing ros-noetic-urg-node on nb-dual.

- For ros2, I could find a ros-bridge example with a dummy robot and the urg-c ros-drivers development branch (three years old).

- Yet, installing with sudo apt install ros-foxy-urg-node seems to work, so I should test both ROS versions on nb-dual.

- Started to create a new github with the URG04LX description.

- Tested the instructions for the package list. It seems to be OK, although the github key itself fails: Failed to fetch https://cli.github.com/packages/dists/stable/InRelease

- With the updated keyring the package gh could be updated.

- Followed the instructions from cli github to solve this issue.

September 16, 2022

- This tutorial on the Franka Emika robot in IEEE RAM would have been perfect reading material for the first week of the ERF Hackathon.

- Have to bring the Mover5 back to BrainCreators, so looking at June 11, 2022 how to fold the robot up again.

September 14, 2022

- Testing if I can read out the scans from the URG04LX lidar.

- Found this tutorial, which is based on the old hokuyo-node.

- No device in /opt/ros/melodic/sensors was created, but I am also not sure if I rebooted, so the new 93-hokuyo-sensor.rules is maybe not active yet.

- Did a sudo chmod g+c /dev/ttyACM0, and rosrun urg_node getID /dev/ttyACM0 works.

- The node can be started with roslaunch urg_node urg_lidar.launch.

- The /scan and a number of other topics are published:

/laser_status

/scan

/urg_node/parameter_descriptions

/urg_node/parameter_updates

- Yet, rviz cannot display the scan because the transform of the map is missing:

- This can be solved by running a static transform with the command rosrun tf2_ros static_transform_publisher 0 0 0.0 0 0 0 map laser:

- Connected the Hokuyo URG04LX via the RS232 interface (don't forget to disconnect the USB, otherwise no data is sent via RS232). Used the pl2303 converter from the UMI-RTX to get the RS232 data to nb-ros.

- The command rosrun urg_node getID /dev/ttyUSB0 gives Device at /dev/ttyUSB0 has ID H0901625.

- Created an alternative launch file called urg_lidar_serial.launch. The start seems fine, but I receive no updates in rviz:

[ INFO] [1663148290.605047942]: Connected to serial device with ID: H0901625

[ INFO] [1663148291.501230189]: Starting calibration. This will take a few seconds.

[ WARN] [1663148291.501438209]: Time calibration is still experimental.

[ INFO] [1663148291.513547821]: waitForService: Service [/diagnostics_agg/add_diagnostics] has not been advertised, waiting...

[ INFO] [1663148299.555176156]: Calibration finished. Latency is: -0.0794.

[ INFO] [1663148299.766712662]: Streaming data.

- With rostopic echo /scan I saw that the topic was published.

- Restarting rviz only worked after I also restarted the transforms (not only map-laser, but also rosrun tf2_ros static_transform_publisher 0 0 0.1 0 0 0 map base_link).
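The six numbers passed to static_transform_publisher are x y z yaw pitch roll, followed by the parent and child frame. A small sketch of the homogeneous transform these arguments describe, assuming the common ROS fixed-axis convention (rotation = Rz(yaw) · Ry(pitch) · Rx(roll), angles in radians); the function name `static_transform` is made up for illustration:

```python
import math


def static_transform(x, y, z, yaw, pitch, roll):
    """Return the 4x4 homogeneous matrix for a translation plus yaw/pitch/roll rotation."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr, x],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr, y],
        [-sp, cp * sr, cp * cr, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


if __name__ == "__main__":
    # map -> laser: all zeros, so the laser frame coincides with the map frame.
    for row in static_transform(0, 0, 0, 0, 0, 0):
        print(row)
    # map -> base_link: the same orientation, lifted 0.1 m along z.
    for row in static_transform(0, 0, 0.1, 0, 0, 0):
        print(row)
```

With all six values at zero the matrix is the identity, which is why this transform only serves to tell rviz that the two frames exist and coincide.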

- This tutorial describes how to add a Hokuyo sensor-driver to a PR2 robot, but not the description.

- After a long search, I found this Pioneer P3DX-gazebo project, which has both a hokuyo mesh and xacro.

- Used the launch file, mesh and xacro to start only a robot-state-publisher node.

- First had to sudo apt install ros-melodic-robot-state-publisher, before I could test roslaunch ./launch/urg-04lx.launch.

- The node starts but crashes directly with No name given for the robot.. The robot_name is one of the parameters, but maybe it doesn't correspond with the xacro or mesh to be loaded.

- Installed sudo apt install ros-melodic-urdf-tutorial, because robot_state_publisher needs a urdf description.

- Looked at Starting from scratch.

- The first command works, although it also complains that state_publisher is deprecated and that I need to install sudo apt install ros-melodic-joint-state-publisher-gui. The urdf is minimal, but gives a name to the robot tag.

- With giving a name to the robot tag in urg-04lx.xacro file, I got a new error message: No link elements found in urdf file

- Seems that the xacro-macros are not read (interpreted as a static urdf). With a static link I get only a warning:

[ WARN] [1663166885.548564818]: The root link laser has an inertia specified in the URDF, but KDL does not support a root link with an inertia. As a workaround, you can add an extra dummy link to your URDF.

- The launch file is running with only a single node:

NODES

/laser/

robot_state_publisher (robot_state_publisher/robot_state_publisher)

- The display.launch from the tutorial launched three nodes:

NODES

/

joint_state_publisher (joint_state_publisher/joint_state_publisher)

robot_state_publisher (robot_state_publisher/state_publisher)

rviz (rviz/rviz)

- When I select robot_model in rviz, I get the one from 01-myfirst.urdf (which was still running in the background).

- Used the display.launch from the tutorial with the new xacro. Yet, the mesh is not loaded, although I gave the path and simplified the name:

Could not load resource [/home/arnoud/projects/hokuyo/meshes/hokuyo.dae]: Unable to open file "/home/arnoud/projects/hokuyo/meshes/hokuyo.dae".

- Copying that file to /opt/ros/melodic/share/urdf_tutorial/meshes and specifying it in the xacro as package://urdf_tutorial/meshes/hokuyo.dae works.

September 13, 2022

- Found a power cable for the Hokuyo URG04LX laser scanner.

- For more modern ROS versions (such as Melodic on nb-ros), the ROS hokuyo node is replaced by urg_node.

- Checked with dmesg | tail and the device seems to be initialized correctly:

[380073.118429] usb 3-1: new full-speed USB device number 6 using xhci_hcd

[380073.268982] usb 3-1: New USB device found, idVendor=15d1, idProduct=0000

[380073.268989] usb 3-1: New USB device strings: Mfr=1, Product=2, SerialNumber=0

[380073.268993] usb 3-1: Product: URG-Series USB Driver

[380073.268996] usb 3-1: Manufacturer: Hokuyo Data Flex for USB

[380073.302119] cdc_acm 3-1:1.0: ttyACM0: USB ACM device

[380073.302529] usbcore: registered new interface driver cdc_acm

[380073.302530] cdc_acm: USB Abstract Control Model driver for USB modems and ISDN adapters

- I also see the device on /dev/ttyACM0, which is rw for the group dialout (of which I am a member).

- Still, rosrun urg_node getID /dev/ttyACM0 gives:

getID failed: Could not open serial Hokuyo:

/dev/ttyACM0 @ 115200

could not open serial device.

- With sudo, rosrun is not known; maybe it is the wrong baud rate.

- Connected a UTM-30LX. dmesg | tail gives:

[380919.129576] usb 3-1: new full-speed USB device number 7 using xhci_hcd

[380919.279797] usb 3-1: New USB device found, idVendor=15d1, idProduct=0000

[380919.279804] usb 3-1: New USB device strings: Mfr=1, Product=2, SerialNumber=0

[380919.279808] usb 3-1: Product: URG-Series USB Driver

[380919.279811] usb 3-1: Manufacturer: Hokuyo Data Flex for USB

[380919.281113] cdc_acm 3-1:1.0: ttyACM0: USB ACM device

- Get the same error message for the UTM-30LX.

- I am not the only one with this problem, but I am part of the dialout group.

- Followed the brute force solution suggested here: sudo chmod o+rw /dev/ttyACM0. Now I get both for the UTM30LX Device at /dev/ttyACM0 has ID H1102815 and for the URG04LX Device at /dev/ttyACM0 has ID H0901625. Not clear yet how the urg-node publishes measurements.

- Created in /lib/udev/rules.d a script 93-hokuyo-sensor.rules with the suggestion from the PR2 robot to create a symbolic link /opt/ros/melodic/sensors/hokuyo_ID
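Before resorting to chmod o+rw, it can help to see which permissions the running process actually has on the device. A hedged diagnostic sketch using only the standard library; `describe_access` is a hypothetical helper. One possible explanation for the failure above, not verified here, is that a group membership only applies to sessions started after the user was added to the group, so the shell running rosrun may not have carried the dialout group yet.

```python
import grp
import os
import stat


def describe_access(path):
    """Summarise the permissions on `path` and what the current process may do with it."""
    st = os.stat(path)
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:  # gid without a name entry
        group = str(st.st_gid)
    # Groups of the running process, which can differ from /etc/group until re-login.
    process_gids = set(os.getgroups()) | {os.getegid()}
    return {
        "mode": stat.filemode(st.st_mode),
        "group": group,
        "process_in_group": st.st_gid in process_gids,
        "readable": os.access(path, os.R_OK),
        "writable": os.access(path, os.W_OK),
    }


if __name__ == "__main__":
    # /dev/ttyACM0 is the device from the notes; fall back to "/" so the sketch runs anywhere.
    device = "/dev/ttyACM0" if os.path.exists("/dev/ttyACM0") else "/"
    for key, value in describe_access(device).items():
        print(f"{key}: {value}")
```

If `process_in_group` is False while the user is listed in the group, logging out and in again (or starting a new login shell) would be the first thing to try.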

July 9, 2022

- The Lely challenge students spent 91h online, 11.3h per student, with the most dedicated one doubling that amount.

June 30, 2022

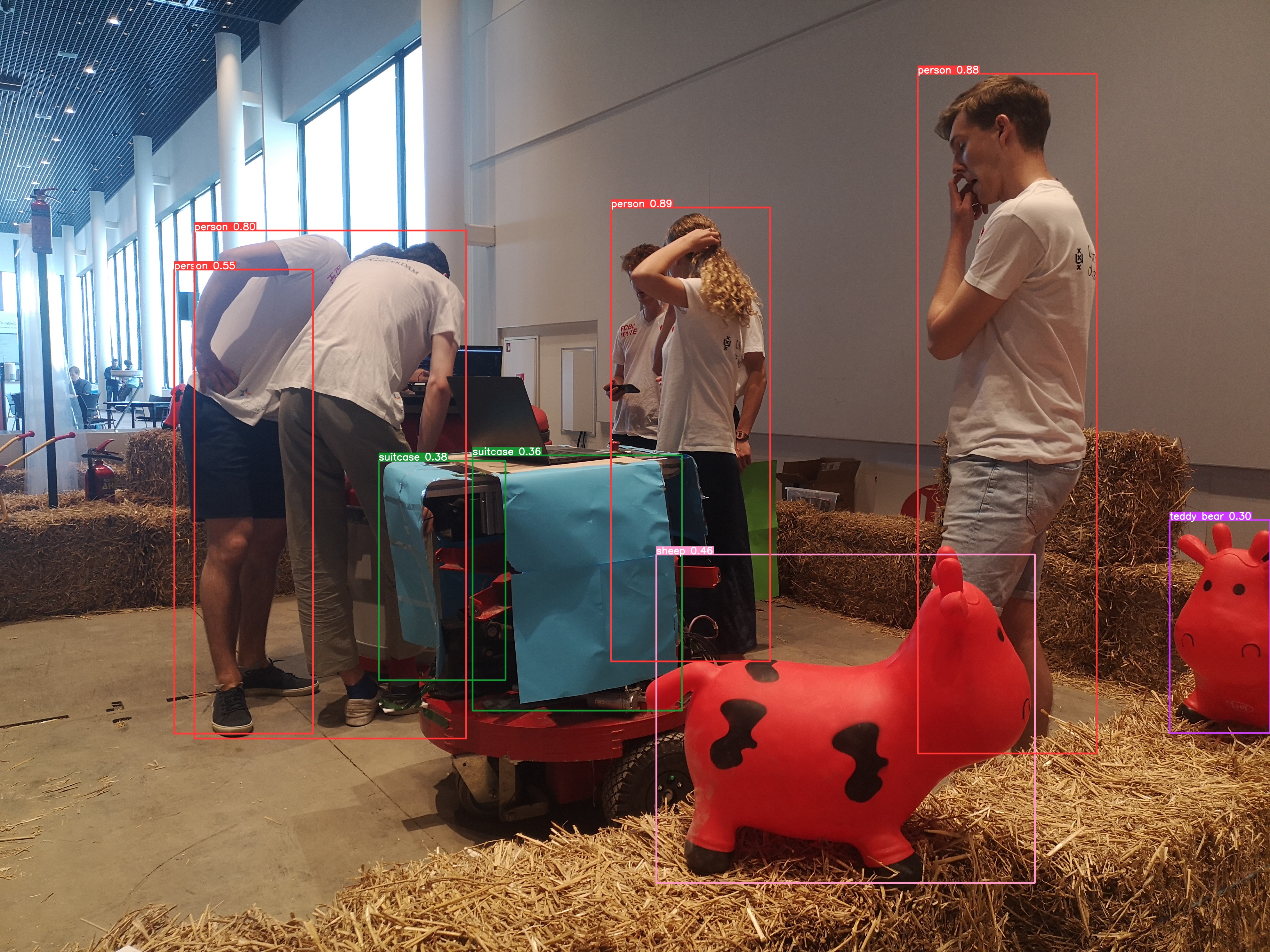

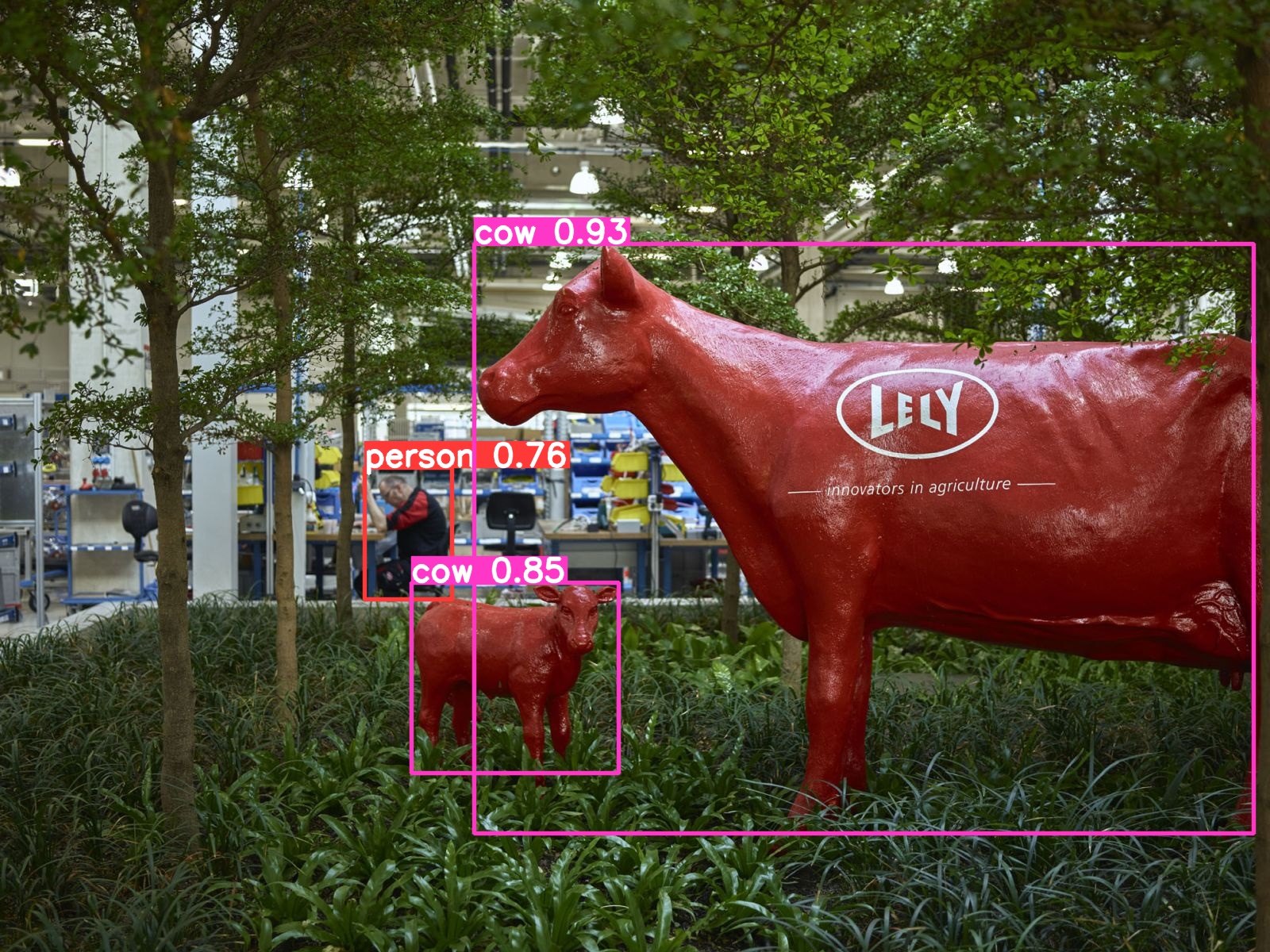

- Tried both yolov5 and yolov6 on a Lely challenge image. The students are well detected; the cows seem not realistic enough.

- With yolov6 the command python3.8 tools/infer.py --weights yolov6s.pt --source LelyBarn.jpg classified the cow as a firehose:

- With yolov5 the command python3.8 detect.py --weights=yolov5s6.pt --source data/images/LelyBarn.jpg at least recognizes the cows as sheep or teddy bears:

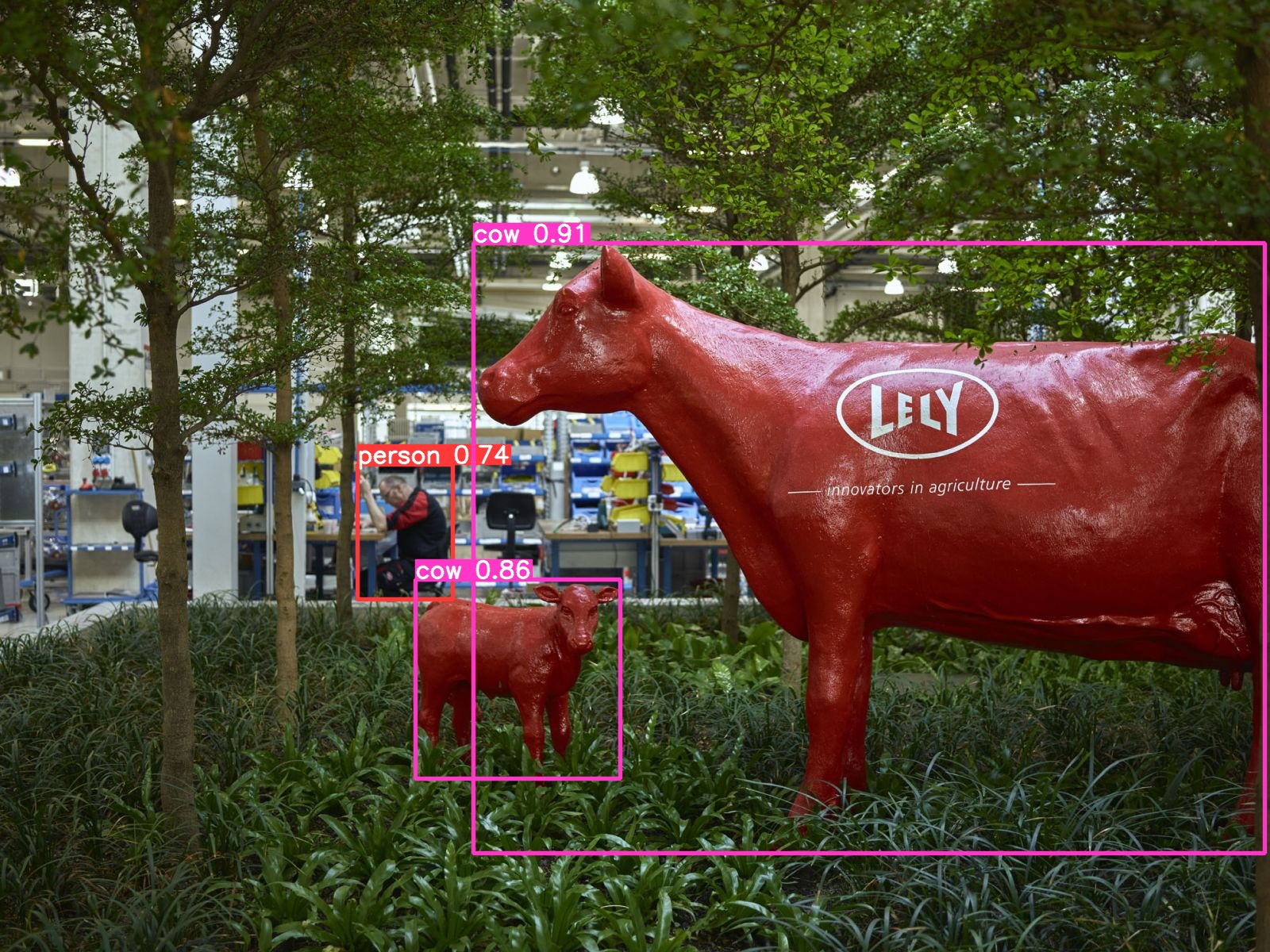

- So, tried it again with more realistic cows.

- Both yolo v5 and v6 recognized the red Lely cow with high probability:

- Both yolo v5 and v6 recognized most of the cows in a barn:

June 27, 2022

- I successfully created a bag file while running around the kobuki. Yet, rosbag play /tmp/mylaserdata.bag --topics /laser_topic /tf_topic gives No messages to play on specified topics.

- Strange, because it is the correct format according to rosbag commandline instructions.
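One thing worth checking is whether the names given to --topics match the topics that were actually recorded. A minimal sketch, assuming a sourced ROS 1 environment for the `rosbag` import; the helper names are made up, and the recorded topic names `/scan` and `/tf` in the usage comment are a guess based on the urg_node entries in this log, not something read from this bag:

```python
def unmatched_topics(requested, recorded):
    """Return the requested topic names that do not occur in the bag."""
    recorded_set = set(recorded)
    return [topic for topic in requested if topic not in recorded_set]


def recorded_topics(bag_path):
    """List the topic names stored in a ROS 1 bag file."""
    import rosbag  # imported lazily: only available in a sourced ROS 1 environment

    with rosbag.Bag(bag_path) as bag:
        return sorted(bag.get_type_and_topic_info().topics)


# Usage on the machine that holds the bag:
#   print(unmatched_topics(["/laser_topic", "/tf_topic"],
#                          recorded_topics("/tmp/mylaserdata.bag")))
# If the bag holds e.g. /scan and /tf (an assumption), both requested names come back
# as unmatched, and rosbag play has nothing to publish.
```

The command-line equivalent is rosbag info /tmp/mylaserdata.bag, which prints the recorded topics with their message counts.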

June 17, 2022

- Finished day 1 of the ROS Navigation course.

- Both the costmaps and maybe also the recovery_behaviors will be very important during the Barn challenge:

- Question is if the students will be able to get the Visual odometry working in such a short time, because the encoders will give wrong readings when there is food that has to be pushed on the floor.

June 14, 2022

- The Franka robot seems to have also an OpenGym simulation.

- Note that not only code is available, but also a pretrained model in the RL zoo.

- Note that the author mentions that it will not be trivial (nor impossible) to deploy this result to a real Franka Emika Panda robot. The suggested solution direction is via franka_ros.

- A ros compatible simulation can be found here.

- The simulation requires first the installation of libfranka, which is possible for several combinations of Ubuntu and ROS.

- Strangely enough, Version 4.0.4 is more recent (2022-03-21) than Version 4.1+ (2021-01-26)

- Made an account for Franka World. libfranka here is again a link to github (latest version 0.9.0). There are also links to both ROS MoveIt planning, ros2_control, and Matlab toolbox. Version 4.1 refers to the RIDE Command Line Interface, which is available on Franka World as app-development-0.4.1.zip. Documentation for ride-cli can be found here.

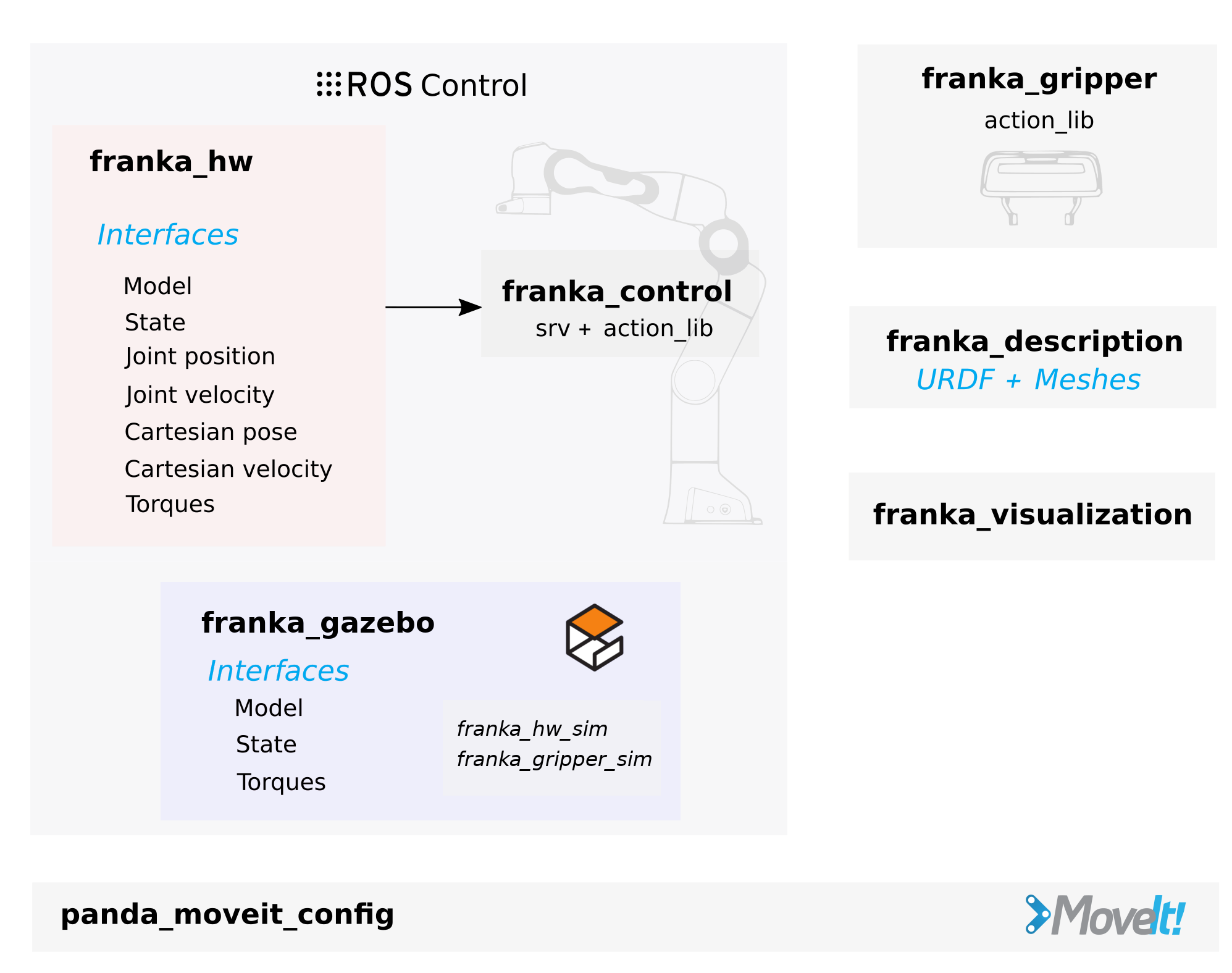

- Contrary to the install instructions, it seems possible to do sudo apt install ros-noetic-libfranka ros-noetic-franka-ros. The last one is a meta-package which installs several interesting packages, such as ros-noetic-franka-description, ros-noetic-franka-gazebo, ros-noetic-franka-visualization, as indicated in the documentation:

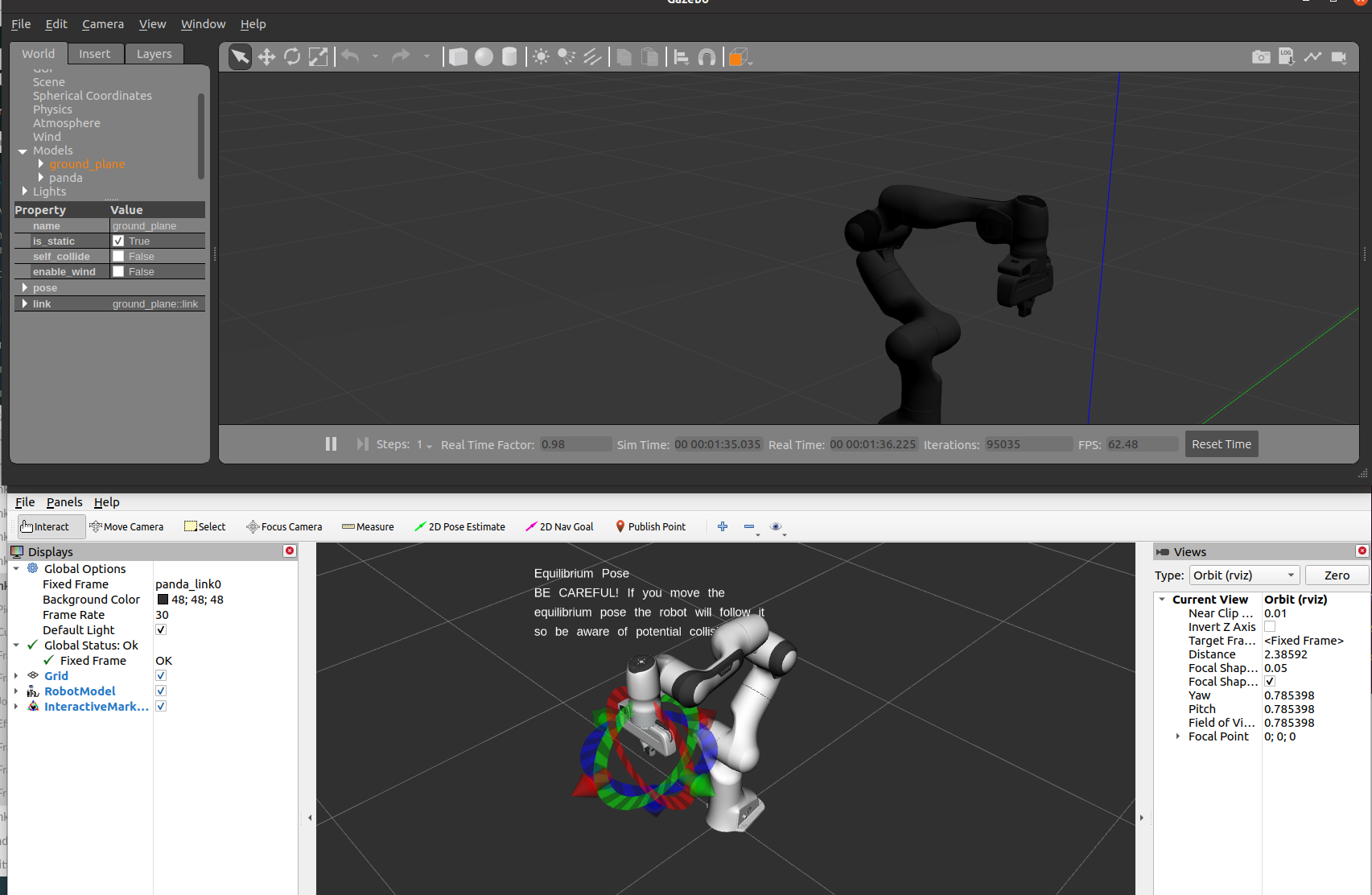

- Next I ran roslaunch franka_gazebo panda.launch x:=-0.5 world:=$(rospack find franka_gazebo)/world/stone.sdf controller:=cartesian_impedance_example_controller rviz:=true as suggested here, which gave:

June 11, 2022

- Tried on nb-dual to start exercise 5.2.1 from ros2_controllers, but also this spawn with ros2 launch spawn_robot spawn_rrbot.launch.py fails on:

~/galactic_ws/install/spawn_robot/lib/spawn_robot/spawn_entity_client.py

Gazebo '/spawn_entity' service not available, waiting ...

- On nb-dual I have as ROS1 version noetic. See if that also works with the CPR Mover package.

- Found M6 bolts for the Mover's stability cross, but those bolts do not fit through the Mover's base, which are M4 holes (see page 19 of the user guide).

- Strange, because the Mover is meant to be mounted on that robot stand.

- Found the User Guide

- Connected the Mover with the adapter of PEAK. The RS232 digital in/out is not used.

- With dmesg | tail the PEAK adapter is clearly recognized:

[ 5612.310613] usb 3-8.3: new full-speed USB device number 12 using xhci_hcd

[ 5612.412251] usb 3-8.3: New USB device found, idVendor=0c72, idProduct=000c, bcdDevice=54.ff

[ 5612.412263] usb 3-8.3: New USB device strings: Mfr=10, Product=4, SerialNumber=0

[ 5612.412267] usb 3-8.3: Product: PCAN-USB

[ 5612.412269] usb 3-8.3: Manufacturer: PEAK-System Technik GmbH

[ 5613.001796] CAN device driver interface

[ 5613.005881] peak_usb 3-8.3:1.0: PEAK-System PCAN-USB adapter hwrev 84 serial FFFFFFFF (1 channel)

[ 5613.006113] peak_usb 3-8.3:1.0 can0: attached to PCAN-USB channel 0 (device 255)

[ 5613.006197] usbcore: registered new interface driver peak_usb

- When I execute the suggested startup_can_interface.sh script I see with dmesg | tail:

[ 5613.005881] peak_usb 3-8.3:1.0: PEAK-System PCAN-USB adapter hwrev 84 serial FFFFFFFF (1 channel)

[ 5613.006113] peak_usb 3-8.3:1.0 can0: attached to PCAN-USB channel 0 (device 255)

[ 5613.006197] usbcore: registered new interface driver peak_usb

[ 6725.955341] can: unknown parameter 'dev' ignored

[ 6725.955424] can: controller area network core

[ 6725.955673] NET: Registered protocol family 29

[ 6725.988543] peak_usb 3-8.3:1.0 can0: setting BTR0=0x00 BTR1=0x1c

[ 6726.013482] IPv6: ADDRCONF(NETDEV_CHANGE): can0: link becomes ready
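The `BTR0=0x00 BTR1=0x1c` values in the kernel log above encode the CAN bit timing. A worked sketch of how they translate into a bitrate, under the assumption that the PCAN-USB follows the SJA1000 register layout with a 16 MHz oscillator (not confirmed in these notes); the function name `sja1000_bitrate` is made up for illustration:

```python
def sja1000_bitrate(btr0, btr1, f_osc_hz=16_000_000):
    """Compute the CAN bitrate from SJA1000-style bus timing registers.

    Assumed layout: BTR0 = SJW (bits 7-6) | BRP (bits 5-0),
                    BTR1 = SAM (bit 7) | TSEG2 (bits 6-4) | TSEG1 (bits 3-0).
    """
    brp = (btr0 & 0x3F) + 1           # baud rate prescaler
    tseg1 = (btr1 & 0x0F) + 1         # time quanta before the sample point
    tseg2 = ((btr1 >> 4) & 0x07) + 1  # time quanta after the sample point
    quanta_per_bit = 1 + tseg1 + tseg2  # plus one quantum for the sync segment
    # One time quantum lasts 2 * BRP oscillator periods.
    return f_osc_hz / (2 * brp * quanta_per_bit)


if __name__ == "__main__":
    # BRP = 1, TSEG1 = 13, TSEG2 = 2: 16 quanta of 125 ns each per bit.
    print(sja1000_bitrate(0x00, 0x1C))
```

Under these assumptions the registers correspond to 500 kbit/s, which can be compared with the bitrate set in startup_can_interface.sh.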

- The ROS package is the only Linux software provided.

- A MoveIt configuration is available on request.

- Followed the instructions, but catkin_make didn't start any build.

- Removed the build directory. catkin_make travels through 5 packages, but fails. Looking into CMakeError.log I see the error:

/usr/bin/ld: cannot find -lpthreads

- Moved the cv_camera package out of ~/catkin_ws/src, and now the cpr_robot package is built. To activate this package you should first source devel/setup.bash.

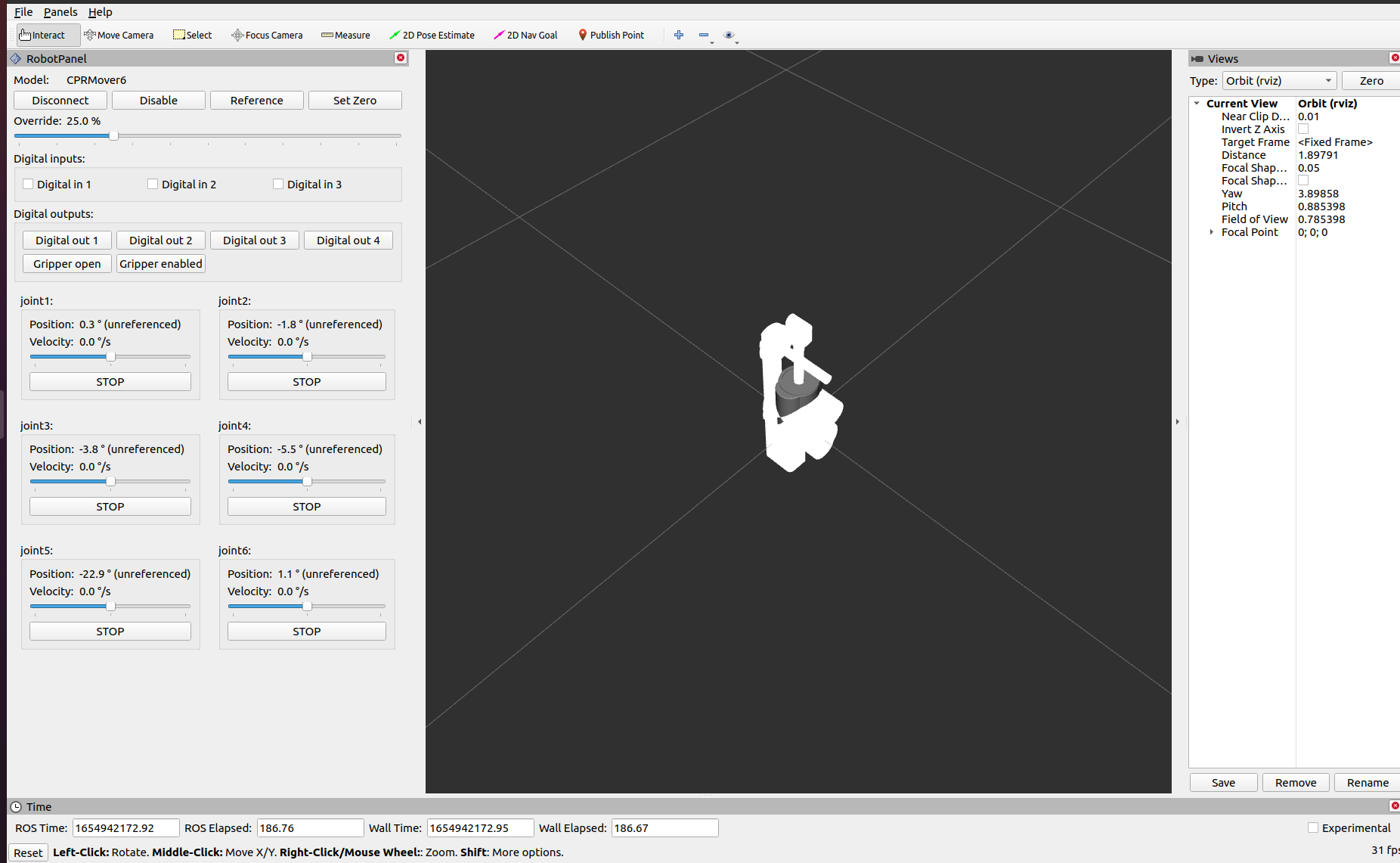

- Was able to start roslaunch cpr_robot CPRMover6.launch. Connected and enabled the robot. Note that the joints are controlled by speed, not position, so you should stop them in time.

- Restarted the robot. This gives:

[ 8015.119003] peak_usb 3-8.3:1.0 can0: bus-off

- Restarting with source ~/bin/startup_can_interface.sh gave:

RTNETLINK answers: Device or resource busy

- Reconnecting usb and sourcing the script gave again:

[ 8489.501184] IPv6: ADDRCONF(NETDEV_CHANGE): can0: link becomes ready

- With the robot stand the Mover robot moves much more stably (although some strange clicks still occurred). RViz has control buttons for 6 joints. I could control joints A1-A3, but nothing happened for joints A4-A6 (neither positive nor negative).

- The gripper was closing when I pushed the 'Gripper Enabled' button, also when I switched the 'Gripper Open' button:

June 8, 2022

- Continued with Unit5 of the ros2_control framework course at ConstructSim.

- The control of the arm works fine, but when I try to control the small differential-drive robot I get the error: Couldn't contact service /controller_manager/list_controllers. Could also find no documentation on how to restart this service.

- Found this documentation, but ros2 run controller_manager spawner list_controllers also gave Controller manager not available.

- Moved on with Unit6, which uses a Solo robot. This is part of the Open Robot Actuator Hardware initiative.

- The Solo8 can be built with 3D-printed elements; the Solo12 can also be bought off the shelf.

- Note that "the full 8-DOF quadruped was built for approximately 4000 € of material cost", according to the original paper.

- Launching the solo failed, although I got the message [minimal client]: Successfully spawned entity robot. The environment was restarted after inactivity, but still ros2 control list_controllers gave no contact message. Should try to do it natively (note that this is ROS2 Galactic, not ROS2 Foxy) by git clone https://bitbucket.org/theconstructcore/ros2_control_course.git on nb-dual.

- The ROS2 distribution is in between Foxy and Humble, and the most recent one for Ubuntu 20.04.

- Still, the code from the repository builds fine after a source /opt/ros/foxy/setup.bash.

- Yet, spawning the robot indicates that it is Waiting for service /spawn_entity

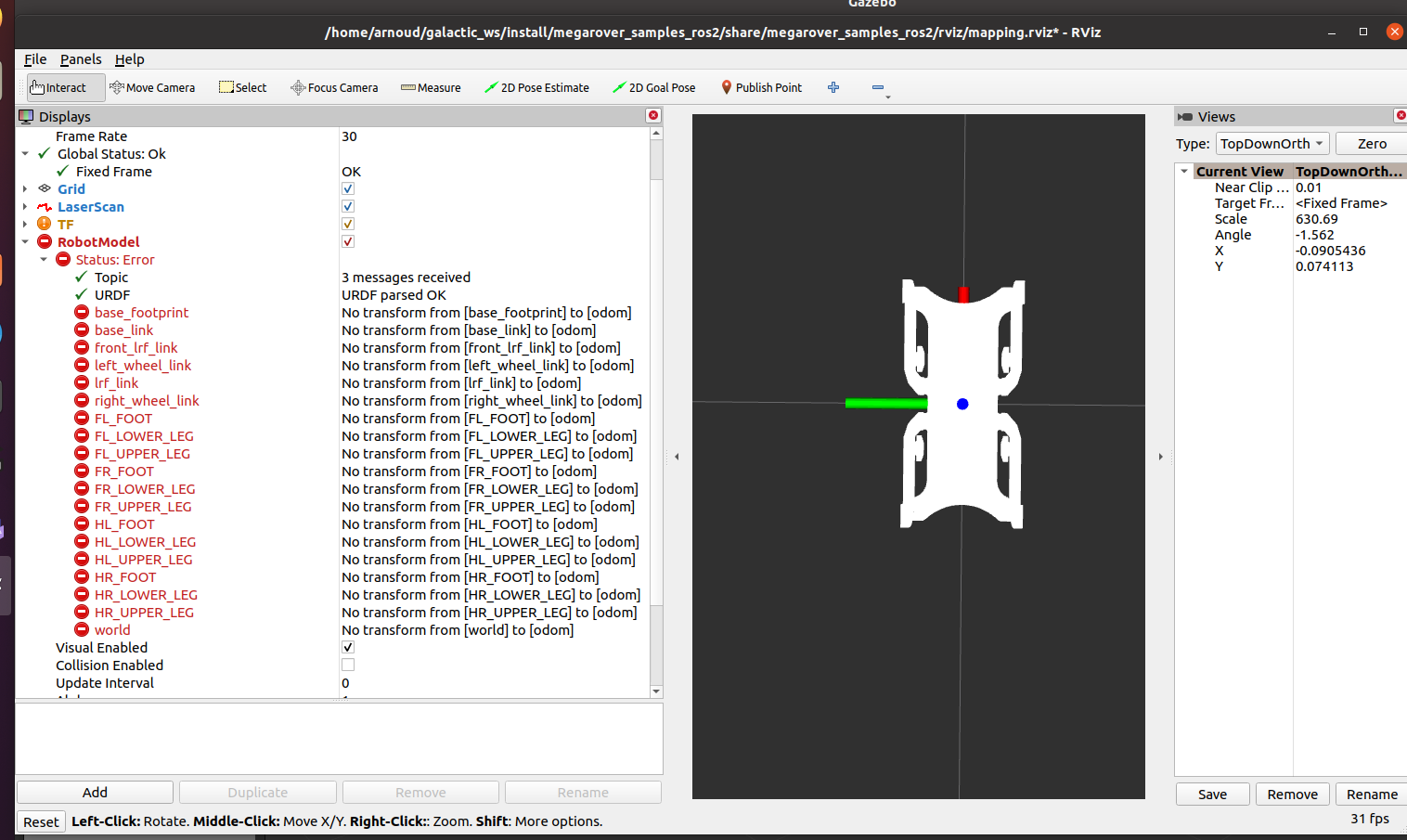

- Trying if I could spawn this gazebo sample. This example waits on a robot_description to be published. Running ros2 launch megarover_samples_ros2 robot_state_publisher.launch.py doesn't help, because some transforms are missing (while the mapping node is active):

June 7, 2022

- Waiting on the possibility to distribute the ConstructSim accounts, so explored some of the free courses.

- Started with the introduction of ros2_control framework at ConstructSim App.

- The 2nd part contains code published on bitbucket.

- Finished the Unit 2 of ros2_control.

- Finished the Unit 3 of ros2_control.

- Unit 4 is dedicated to a specific brand of motors: the Dynamixel SDK

May 30, 2022

- Still no further details on the ERF hackathon.

- Last year there was Enrich 2021, which is an ER hackathon, although focused on a rescue scenario.

- The Commonplace Mover5 is controlled by their CPRog software.

- There is also a ROS driver, nominally for ROS Jade, although the latest github release is tested on Ubuntu 18.04 and ROS Melodic.

March 24, 2022

- ROS has a new open source platform: Open Robotics Middleware Framework.

- Would be an interesting task for an intern to make the Locus robots RMF aware, for instance by interfacing them with the Free Fleet management system.