On an old laptop, I rediscovered my little paper “Rule learning in recurrent networks”, which I wrote in 1999 for my “Connectionism” course at Utrecht University.

I trained a simple recurrent network (SRN) on the context-free language AⁿBⁿ, with 2<n<14, and checked what solutions it learned. Results might have seemed o.k. at first glance, but I quickly realized that average next-symbol prediction accuracy is a terrible performance metric here, which made me skeptical for many years of connectionist papers that reported only this metric. My SRN had really only learned to predict its input (say A when you receive A), but that is correct some 85% of the time.
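To see why copying the input scores so well, here is a rough back-of-the-envelope sketch. It is not the evaluation from the 1999 paper: the function name `copy_baseline_accuracy` is made up for illustration, and it assumes that every length n from 3 to 13 occurs equally often and that the symbol after the last B (an end marker or the next string's first A) counts as a miss.

```python
def copy_baseline_accuracy(n_min=3, n_max=13):
    """Next-symbol accuracy of a 'predictor' that just repeats its input.

    Assumptions (not from the original paper): one string per length n,
    and the successor of the final B is an end marker '#', so it is a miss.
    """
    correct = 0
    total = 0
    for n in range(n_min, n_max + 1):
        string = "A" * n + "B" * n
        for i, symbol in enumerate(string):
            # The true next symbol, or '#' at the end of the string.
            next_symbol = string[i + 1] if i + 1 < len(string) else "#"
            # The copy baseline predicts that the next symbol equals the current one.
            correct += int(symbol == next_symbol)
            total += 1
    return correct / total


print(copy_baseline_accuracy())
```

Under these assumptions the baseline is wrong only twice per string (at the A-to-B switch and at the end), which works out to 0.875, in the same ballpark as the figure above.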

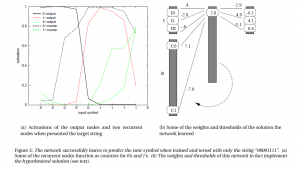

In a simple experiment where I trained a network on a single string, A⁴B⁴, I learned that implementing a counter is quite simple in SRNs. That convinced me that a real solution should be in the SRN’s hypothesis space, which has made me quite skeptical for many years (and still today) about claims that neural networks are fundamentally unable to learn symbolic structure. Rodriguez (2001, Neural Computation) later published a paper that showed this quite convincingly.
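To give a feel for how little machinery a counter needs, here is a minimal hand-wired sketch. It is not the solution my trained network found, nor the one Rodriguez analysed: it assumes a single recurrent unit with a linear activation (SRNs normally use sigmoids), and the weights and the name `run_counter` are made up for illustration.

```python
def run_counter(string):
    """One recurrent unit acting as a counter: h_t = w_rec * h_{t-1} + w_in[x_t].

    All weights are set by hand (hypothetical values), and the activation
    is linear, which is a simplification of a real SRN.
    """
    w_in = {"A": 1.0, "B": -1.0}  # input weights: A counts up, B counts down
    w_rec = 1.0                   # recurrent weight: carry the count forward
    h = 0.0                       # hidden state before any input
    trace = []
    for symbol in string:
        h = w_rec * h + w_in[symbol]
        trace.append(h)
    return trace


print(run_counter("AAAABBBB"))
# → [1.0, 2.0, 3.0, 4.0, 3.0, 2.0, 1.0, 0.0]
```

The hidden state returns to zero exactly when the last B arrives, which is the information a network needs to predict the end of the string rather than just echo its input.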

Nice (but slightly scary) to see how much I agree with 24-year-old me…