1.4. Image Interpolation

Given the samples \(F\) of an image \(f\) the task of interpolation is to calculate a value for \(f(x,y)\) even for those \((x,y)\) that are not sample points.

In most cases it is not realistic to ask for the value \(f(x, y)\): a unique value only exists in case the sampling proces is without loss of information. For frequency-band-limited images indeed such a unique interpolated function exists, because ideal bandlimited images (functions) can be sampled without loss and thus can be reconstructed without error. 1

In these lecture notes we start from a rather pragmatic point of view. We assume that the digital images we start with are oversampled i.e. the samples are close enough to eachother to faithfully represent the image \(f\). Surely we have to take care not to undersample images in our computational analysis without presmoothing as we would be computationally introducing sampling artefacts then.

In this section we start with interpolation for 1D functions, that is we start with interpolation of sampled univariate functions. We will discuss only a few techniques that are commonly used. Then we show how to generalize these 1D interpolation methods to 2D (and nD if needed).

1.4.1. Interpolating 1D functions

Consider the samples \(F(k)\) of a function \(f(x)\). We assume that the sampling interval \(\Delta x\) is 1, i.e. \(F(k)=f(k)\). The goal of interpolation is to find an estimate of \(f(x)\) for arbitrary \(x\) given only the sampled (discrete) function \(F\).

1.4.1.1. Nearest neighbor interpolation

Nearest neighbor interpolation is the simplest interpolation method. Given the samples \(F(k)\) the value of the interpolated function \(\hat f\) at coordinate \(x\) is given as:

i.e. we simply select the sample point that is nearest to \(x\). Here \(\lfloor x \rfloor\) is the floor function, i.e. the largest integer values that is less then or equal to \(x\).

This leads to a staircase type of interpolated function. The function \(\hat f\) is indeed defined on the entire real axis but it is neither continuous (as it contains jumps) nor differentiable.

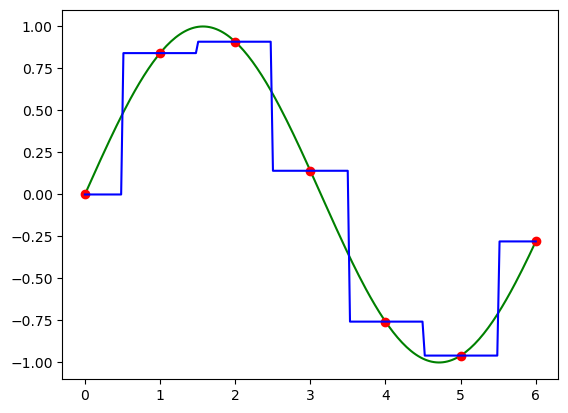

As an example we look at the function \(f(x)=sin(x)\) and its nearest neighbor interpolated version.

Show code for figure

1import numpy as np

2import matplotlib.pyplot as plt

3from scipy.interpolate import interp1d

4

5x = np.linspace(0, 6, 200); # fine sampling to represent continuous function

6xs = np.array([0, 1, 2, 3, 4, 5, 6]); # the sample points

7f = np.sin(x); F = np.sin(xs); # the 'continuous' function and its sampled version

8ifunc = interp1d(xs, F, kind='nearest'); hatf_nn = ifunc(x);

9plt.clf(); plt.plot(x, f, 'g-', xs, F, 'ro', x, hatf_nn, 'b-');

10plt.savefig('source/images/nninterp.png')

Fig. 1.10 Nearest Neighbor Interpolation. In green the function \(f\) defined on a continuous domain \(\setR\) (actually we did sample this function too… but we did so with a very small sample width and thus for our eye the plot looks like a continuous function), in red the sampled function and in blue the interpolated function.

1.4.1.2. Linear Interpolation

Linear interpolation is the scientific equivalent of what you have already learned in kindergarten: connect the dots. Between to adjacent sample points \(k\) and \(k+1\) we assume the function is a linear function and thus in this interval \([k,k+1]\) we have:

Show code for figure

1ifunc = interp1d(xs, F, kind='linear'); hatf_lin = ifunc(x);

2plt.clf(); plt.plot(x, f, 'g-', xs, F, 'ro', x, hatf_lin, 'b-');

3plt.savefig('source/images/lininterp.png')

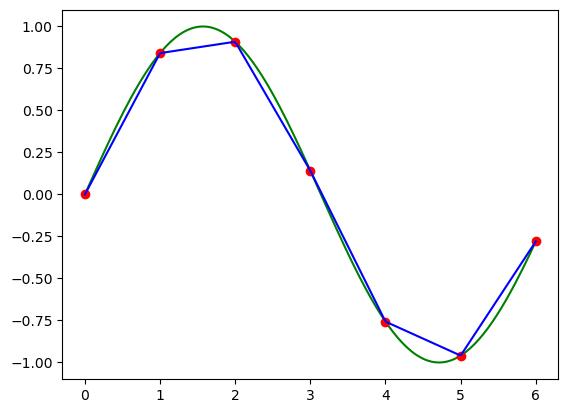

Fig. 1.11 Linear Interpolation.

The linearly interpolated function is continuous but it is not everywhere differentiable (in de sample points it is not differentiable). Furthermore for the interpolated function the second and higher order derivatives are equal to zero (except for the sample points where these are not defined).

Although a simple interpolation method, linear interpolation is very often used in practice. It is simple to implement and very fast.

1.4.1.3. Cubic Interpolation

In nearest neighbor interpolation only one sample is used (the nearest) to set the interpolated value. In linear interpolation we look at the 2 closest sample points (one on the left and one on the right). For cubic interpolation we look at two pixels on the left and two on the right.

To interpolate the function value \(f(x)\) for \(x\) in between \(x=k\) and \(x=k+1\) we fit a cubic polynomial

to the 4 sample points \(k-1, k, k+1\) and \(k+2\). For the 4 points we have:

There are four equations that are linear in the unknowns \(a\), \(b\), \(c\) and \(d\), we thus can use linear algebra methods to find these unknowns. Solving these equations for \(a,b,c\) and \(d\) we get:

Show code for figure

1ifunc = interp1d(xs, F, kind='cubic')

2hatf_cubic = ifunc(x);

3plt.clf()

4plt.plot(x, f, 'g-', xs, F, 'ro', x, hatf_cubic, 'b-')

5plt.savefig('source/images/cubicinterp.png')

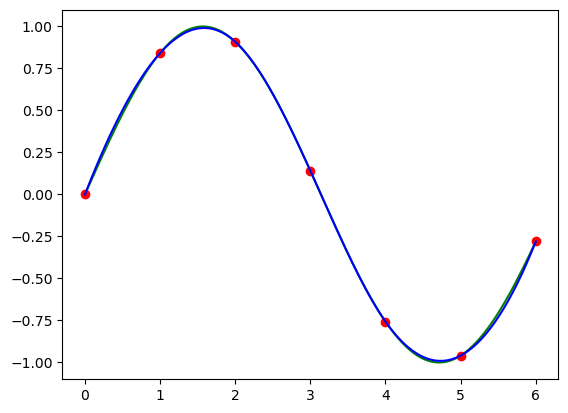

Fig. 1.12 Cubic Interpolation.

Note that we need a different cubic polynomial for every interval \(x\in[k, k+1]\). Note that the polynomial is fitted to points \((k-1, f(k-1)), (k, f(k)), (k+1,f(k+1)), (k+2, f(k+2))\) but the resulting polynomial is only used for point with \(x\in[k, k+1]\).

1.4.1.4. Even Better Interpolation

There are even better interpolation methods to be used. A straightforward generalization would be to consider even more samples to estimate the function value of \(f\). Taking \(n\) samples to the left of \(x\) and \(n\) samples to the right we need a polynomial of order \(2n-1\). A big disadvantage of higher order polynomials is overfitting of the original function: higher order polnomials tend to fluctuate wildly inbetween the sample points.

Show code for figure

1plt.clf()

2xs = np.arange(-7, 8)

3F = 1.0 * (np.abs(xs) < 3)

4plt.plot(xs, F, 'o');

5

6x = np.linspace(-7, 7, 1000)

7ifunc = interp1d(xs, F, kind='cubic'); hat_f = ifunc(x)

8plt.plot(x, hat_f);

9plt.savefig('source/images/interp_block.png')

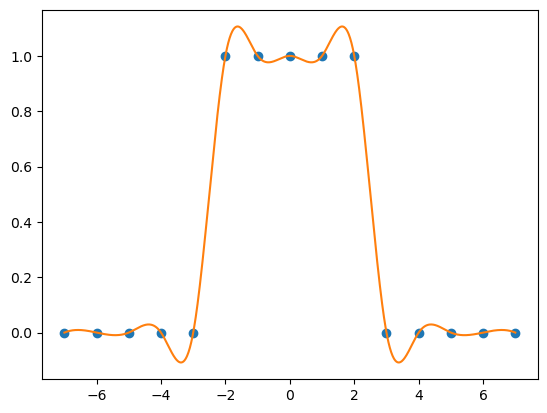

Fig. 1.13 Interpolating a sampled block function.

Another disadvantage of the interpolation schemes discussed so far is that the piecewise interpolated function \(\hat f\) shows discontinuities in the derivatives at the sample point positions. This disadvantage can be tackled with spline interpolation.

1.4.2. Interpolating 2D functions

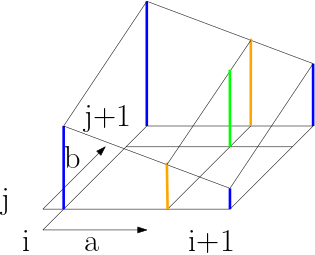

Given an interpolation method for 1D (scalar) functions it is always possible to generalize the method to work with 2D functions (and even higher dimensional ones). As an example consider the linear interpolation. To estimate a value \(f(x,y)\) with \(i\leq x\leq i+1\) and \(j\leq y\leq j+1\) we first interpolate in the ‘\(x\)-direction’ to find values \(f(x,j)\) and \(f(x,j+1)\) and then interpolate in the ‘\(y\)-direction’ to find \(f(x,y)\).

Fig. 1.14 Bilinear Interpolation. The blue lines denote the function values in the 4 sample points closest to the point \((x, y) = (i+a, j+b)\). The orange lines denote the values in \((i+a,j)\) and \((i+a,j+1)\) calculated with linear interpolation. The final value is denoted in orange and is the interpolation of the two ‘orange’ values.

Assume that \(x=i+a\) and \(y=j+b\) (with \(a\) and \(b\) in the interval \([0,1]\)) then:

When generalizing cubic interpolation to two dimensions we need 16 samples in the image (a \(4\times4\) subgrid).

For 2D functions we can also use nearest neighbor, cubic and spline interpolation.

Footnotes

- 1

We need Fourier analysis to really understand this. Fourier showed that every function (image) can be written as a sum of weighted sine and cosine functions of all frequencies. A bandlimited function is a function for which we do not need sine and cosine functions with a frequency higher than the band limition frequency. The Shannon/Nyquist sampling theorem relates the band limitation frequency with the sample frequency that causes no loss in information (i.e. the original analog signal can then be reconstructed from its samples.)